Understanding the kernel in Semantic Kernel

source link: https://learn.microsoft.com/en-us/semantic-kernel/agents/kernel/?tabs=Csharp

- 12/14/2023

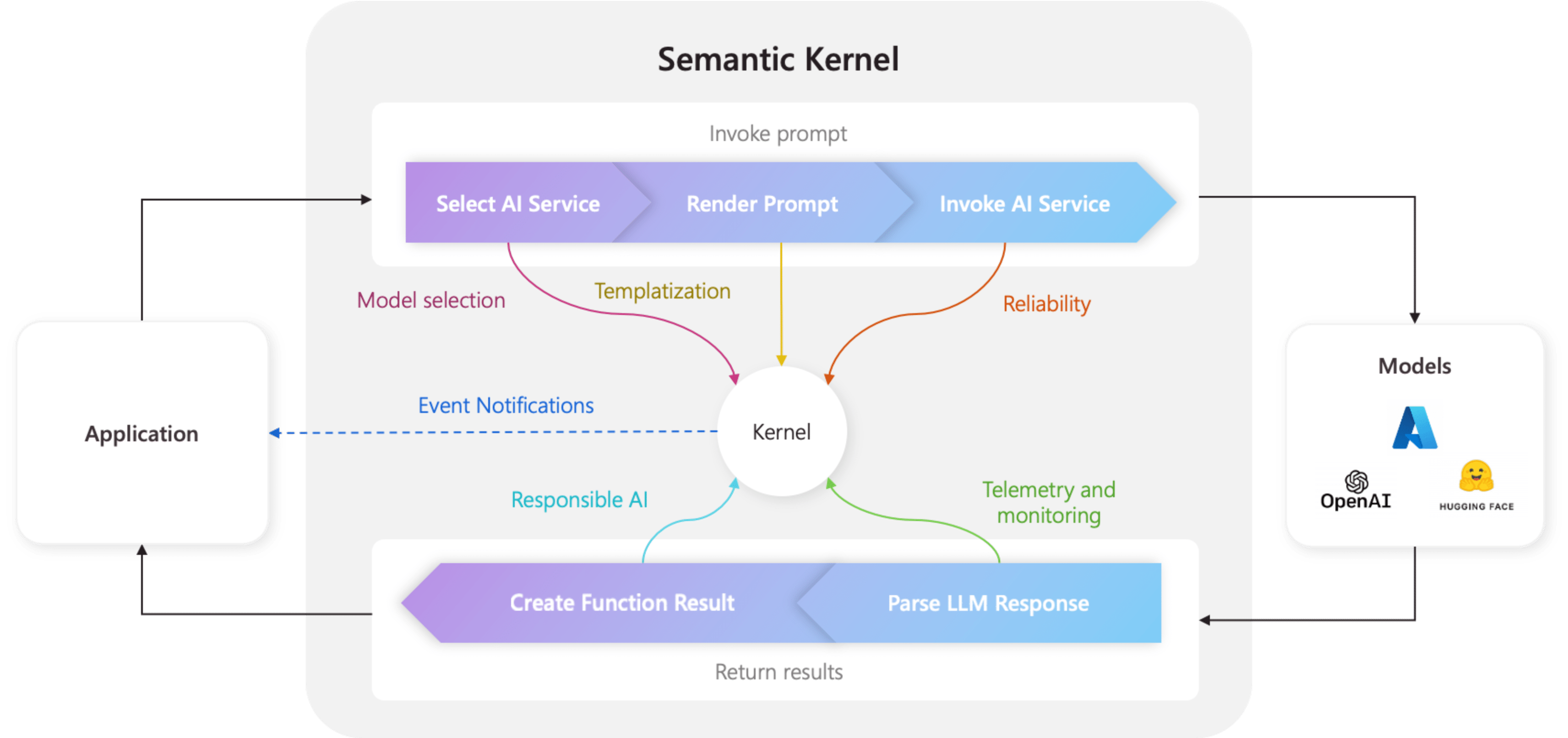

Similar to an operating system, the kernel is responsible for managing the resources needed to run "code" in an AI application. This includes the AI models, services, and plugins that native code and AI services need in order to run together.

If you want to see the code demonstrated in this article in a complete solution, check out the samples in the public documentation repository.

The kernel is at the center of everything

Because the kernel has all of the services and plugins necessary to run both native code and AI services, it is used by nearly every component within the Semantic Kernel SDK. This means that if you run any prompt or code in Semantic Kernel, it will always go through a kernel.

This is extremely powerful, because it means you as a developer have a single place where you can configure, and most importantly monitor, your AI application. Take, for example, what happens when you invoke a prompt from the kernel. The kernel will:

- Select the best AI service to run the prompt.

- Build the prompt using the provided prompt template.

- Send the prompt to the AI service.

- Receive and parse the response.

- Return the response to your application.

Throughout this entire process, you can create events and middleware that are triggered at each of these steps. This means you can perform actions such as logging, providing status updates to users, and, most importantly, applying responsible AI practices, all from a single place.
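The steps above can be illustrated with a toy model. The following is a minimal sketch, not Semantic Kernel's implementation: `ToyKernel`, `ToyAiService`, and the two events are hypothetical names invented for this example, and the `{{$name}}` placeholder handling only loosely imitates prompt template variables. It shows how routing every prompt through one object gives you a single place to attach hooks.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for an AI service: takes a prompt, returns raw text.
public delegate string ToyAiService(string prompt);

public sealed class ToyKernel
{
    private readonly Dictionary<string, ToyAiService> _services = new();

    // Illustrative hooks; a real application could log or filter content here.
    public event Action<string>? PromptRendered;
    public event Action<string>? ResponseReceived;

    public void AddService(string id, ToyAiService service) => _services[id] = service;

    public string InvokePrompt(
        string template,
        IDictionary<string, string> arguments,
        string serviceId)
    {
        // 1. Select the AI service that will run the prompt.
        if (!_services.TryGetValue(serviceId, out var service))
            throw new InvalidOperationException($"No service registered with id '{serviceId}'.");

        // 2. Build the prompt by substituting {{$name}} placeholders.
        var prompt = template;
        foreach (var pair in arguments)
            prompt = prompt.Replace("{{$" + pair.Key + "}}", pair.Value);
        PromptRendered?.Invoke(prompt);

        // 3. Send the prompt, then 4. receive and tidy up the response.
        var response = service(prompt).Trim();
        ResponseReceived?.Invoke(response);

        // 5. Return the response to the caller.
        return response;
    }
}

public static class ToyKernelDemo
{
    public static (string Result, List<string> Log) Run()
    {
        var kernel = new ToyKernel();
        var log = new List<string>();

        // A fake "model" that echoes the prompt with stray whitespace.
        kernel.AddService("echo", prompt => "  ECHO: " + prompt + "  ");
        kernel.PromptRendered += p => log.Add("rendered: " + p);
        kernel.ResponseReceived += r => log.Add("received: " + r);

        var result = kernel.InvokePrompt(
            "Tell me about {{$topic}}",
            new Dictionary<string, string> { ["topic"] = "kernels" },
            "echo");
        return (result, log);
    }
}
```

Calling `ToyKernelDemo.Run()` returns the trimmed echo response together with a two-entry log, one entry per hook. Because both hooks hang off the kernel object, the calling code never has to know which service handled the prompt.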

Building a kernel

Before building a kernel, you should first understand the two types of components that exist within a kernel: services and plugins. Services consist of both AI services and other services that are necessary to run your application (e.g., logging and telemetry). Plugins, meanwhile, are any code you want AI to call or leverage within a prompt.

In the following examples, you can see how to add a logger, chat completion service, and plugin to the kernel.

With C#, Semantic Kernel natively supports dependency injection. This means you can add a kernel to your application's dependency injection container and use any of your application's services within the kernel by adding them as a service to the kernel.
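As a rough illustration of the dependency injection point, the sketch below registers a kernel in an ordinary `ServiceCollection` and then reads an application service back through the kernel. It assumes the `AddKernel` extension method and the `Kernel.Services` property from the Microsoft.SemanticKernel package; `IGreeter` and `Greeter` are hypothetical application types invented for this example.

```csharp
using System;
using Microsoft.Extensions.DependencyInjection;
using Microsoft.SemanticKernel;

// The application's own container, with one ordinary application service.
var services = new ServiceCollection();
services.AddSingleton<IGreeter, Greeter>();

// Assumption: AddKernel registers Kernel in the container so that it
// can be resolved (and injected) like any other service.
services.AddKernel();

using var provider = services.BuildServiceProvider();
var kernel = provider.GetRequiredService<Kernel>();

// The kernel is backed by the same service provider, so application
// services are reachable through it.
var greeting = kernel.Services.GetRequiredService<IGreeter>().Greet("kernel");
Console.WriteLine(greeting);

// Hypothetical application service used only for this illustration.
public interface IGreeter
{
    string Greet(string name);
}

public sealed class Greeter : IGreeter
{
    public string Greet(string name) => $"Hello, {name}!";
}
```

The examples that follow use `Kernel.CreateBuilder()` instead, which creates a standalone service collection for the kernel rather than reusing the host application's container.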

Import the necessary packages:

```csharp
using Microsoft.Extensions.DependencyInjection;
using Microsoft.Extensions.Logging;
using Microsoft.SemanticKernel;
using Plugins;
```

If you are using Azure OpenAI, you can use the AddAzureOpenAIChatCompletion method.

```csharp
var builder = Kernel.CreateBuilder();

// Route kernel log output to the debug console at the most verbose level.
builder.Services.AddLogging(c => c.AddDebug().SetMinimumLevel(LogLevel.Trace));

builder.Services.AddAzureOpenAIChatCompletion(
    AzureOpenAIDeploymentName,   // The name of your deployment (e.g., "gpt-35-turbo")
    AzureOpenAIEndpoint,         // The endpoint of your Azure OpenAI service
    AzureOpenAIApiKey,           // The API key of your Azure OpenAI service
    modelId: AzureOpenAIModelId  // The model ID of your Azure OpenAI service
);

// Register a native-code plugin and a directory of prompt-based functions.
builder.Plugins.AddFromType<TimePlugin>();
builder.Plugins.AddFromPromptDirectory("./plugins/WriterPlugin");

var kernel = builder.Build();
```

If you are using OpenAI, you can use the AddOpenAIChatCompletion method.

```csharp
var builder = Kernel.CreateBuilder();

// Route kernel log output to the debug console at the most verbose level.
builder.Services.AddLogging(c => c.AddDebug().SetMinimumLevel(LogLevel.Trace));

builder.Services.AddOpenAIChatCompletion(
    OpenAIModelId,  // The model ID of your OpenAI service
    OpenAIApiKey,   // The API key of your OpenAI service
    OpenAIOrgId     // The organization ID of your OpenAI service
);

// Register a native-code plugin and a directory of prompt-based functions.
builder.Plugins.AddFromType<TimePlugin>();
builder.Plugins.AddFromPromptDirectory("./plugins/WriterPlugin");

var kernel = builder.Build();
```

Invoking plugins from the kernel

Semantic Kernel makes it easy to run prompts alongside native code because they are both expressed as KernelFunctions. This means you can invoke them in exactly the same way.

To run KernelFunctions, Semantic Kernel provides the InvokeAsync method. Pass in the function you want to run along with its arguments, and the kernel will handle the rest.

Run the GetCurrentUtcTime function from TimePlugin:

```csharp
var currentTime = await kernel.InvokeAsync("TimePlugin", "GetCurrentUtcTime");
Console.WriteLine(currentTime);
```
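The `TimePlugin` class itself is not shown in this article. The sketch below is an assumed shape for it, not the article's actual code: a plain class whose method is exposed to the kernel with the `[KernelFunction]` attribute. The `TimePluginDemo` helper is hypothetical. Because the function is native code, this example needs no AI service or API key.

```csharp
using System;
using System.ComponentModel;
using System.Threading.Tasks;
using Microsoft.SemanticKernel;

// Assumed shape of TimePlugin; the real class in the samples may differ.
public class TimePlugin
{
    [KernelFunction, Description("Returns the current time in UTC.")]
    public string GetCurrentUtcTime() => DateTime.UtcNow.ToString("R");
}

// Hypothetical helper that builds a kernel and invokes the function by name.
public static class TimePluginDemo
{
    public static async Task<string> RunAsync()
    {
        var builder = Kernel.CreateBuilder();

        // With no explicit name, the plugin is registered under its type name.
        builder.Plugins.AddFromType<TimePlugin>();
        var kernel = builder.Build();

        // Look up the function by plugin name and function name, then run it.
        FunctionResult result = await kernel.InvokeAsync("TimePlugin", "GetCurrentUtcTime");
        return result.GetValue<string>() ?? string.Empty;
    }
}
```

`InvokeAsync` returns a `FunctionResult` rather than a bare string. Printing it shows the text value, and `GetValue<string>()` extracts that value when you need it typed.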

Run the ShortPoem function from WriterPlugin while using the current time as an argument:

```csharp
var poemResult = await kernel.InvokeAsync("WriterPlugin", "ShortPoem", new() {
    { "input", currentTime }
});
Console.WriteLine(poemResult);
```

This should return a response similar to the following (except specific to your current time):

```
There once was a sun in the sky
That shone so bright, it caught the eye
But on December tenth
It decided to vent
And took a break, said "Bye bye!"
```

Going further with the kernel

For more details on how to configure and leverage the kernel, refer to the following articles:

| Article | Description |
|---|---|
| Adding AI services | Learn how to add additional AI services from OpenAI, Azure OpenAI, Hugging Face, and more to the kernel. |
| Adding telemetry and logs | Gain visibility into what Semantic Kernel is doing by adding telemetry to the kernel. |

Next steps

Once you're done configuring the kernel, you can learn how to create prompts to run AI services from the kernel.
