OpenHarmony 3.2 Beta Audio——音频渲染

source link: https://ost.51cto.com/posts/21647

Go to the source link to view the article. You can view the picture content, updated content and better typesetting reading experience. If the link is broken, please click the button below to view the snapshot at that time.

OpenHarmony 3.2 Beta Audio——音频渲染 原创 精华

作者:巴延兴

Audio是多媒体子系统中的一个重要模块,其涉及的内容比较多,有音频的渲染、音频的采集、音频的策略管理等。本文主要针对音频渲染功能进行详细地分析,并通过源码中提供的例子,对音频渲染进行流程的梳理。

foundation/multimedia/audio_framework

audio_framework

├── frameworks

│ ├── js #js 接口

│ │ └── napi

│ │ └── audio_renderer #audio_renderer NAPI接口

│ │ ├── include

│ │ │ ├── audio_renderer_callback_napi.h

│ │ │ ├── renderer_data_request_callback_napi.h

│ │ │ ├── renderer_period_position_callback_napi.h

│ │ │ └── renderer_position_callback_napi.h

│ │ └── src

│ │ ├── audio_renderer_callback_napi.cpp

│ │ ├── audio_renderer_napi.cpp

│ │ ├── renderer_data_request_callback_napi.cpp

│ │ ├── renderer_period_position_callback_napi.cpp

│ │ └── renderer_position_callback_napi.cpp

│ └── native #native 接口

│ └── audiorenderer

│ ├── BUILD.gn

│ ├── include

│ │ ├── audio_renderer_private.h

│ │ └── audio_renderer_proxy_obj.h

│ ├── src

│ │ ├── audio_renderer.cpp

│ │ └── audio_renderer_proxy_obj.cpp

│ └── test

│ └── example

│ └── audio_renderer_test.cpp

├── interfaces

│ ├── inner_api #native实现的接口

│ │ └── native

│ │ └── audiorenderer #audio渲染本地实现的接口定义

│ │ └── include

│ │ └── audio_renderer.h

│ └── kits #js调用的接口

│ └── js

│ └── audio_renderer #audio渲染NAPI接口的定义

│ └── include

│ └── audio_renderer_napi.h

└── services #服务端

└── audio_service

├── BUILD.gn

├── client #IPC调用中的proxy端

│ ├── include

│ │ ├── audio_manager_proxy.h

│ │ ├── audio_service_client.h

│ └── src

│ ├── audio_manager_proxy.cpp

│ ├── audio_service_client.cpp

└── server #IPC调用中的server端

├── include

│ └── audio_server.h

└── src

├── audio_manager_stub.cpp

└── audio_server.cpp

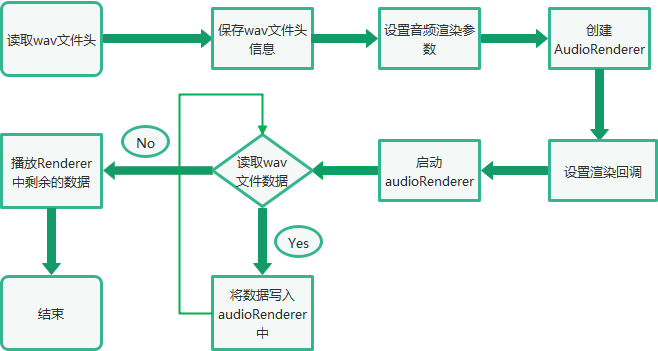

三、音频渲染总体流程

四、Native接口使用

在OpenAtom OpenHarmony(以下简称“OpenHarmony”)系统中,音频模块提供了功能测试代码,本文选取了其中的音频渲染例子作为切入点来进行介绍,例子采用的是对wav格式的音频文件进行渲染。wav格式的音频文件是wav头文件和音频的原始数据,不需要进行数据解码,所以音频渲染直接对原始数据进行操作,文件路径为:foundation/multimedia/audio_framework/frameworks/native/audiorenderer/test/example/audio_renderer_test.cpp

bool TestPlayback(int argc, char *argv[]) const

{

FILE* wavFile = fopen(path, "rb");

//读取wav文件头信息

size_t bytesRead = fread(&wavHeader, 1, headerSize, wavFile);

//设置AudioRenderer参数

AudioRendererOptions rendererOptions = {};

rendererOptions.streamInfo.encoding = AudioEncodingType::ENCODING_PCM;

rendererOptions.streamInfo.samplingRate = static_cast<AudioSamplingRate>(wavHeader.SamplesPerSec);

rendererOptions.streamInfo.format = GetSampleFormat(wavHeader.bitsPerSample);

rendererOptions.streamInfo.channels = static_cast<AudioChannel>(wavHeader.NumOfChan);

rendererOptions.rendererInfo.contentType = contentType;

rendererOptions.rendererInfo.streamUsage = streamUsage;

rendererOptions.rendererInfo.rendererFlags = 0;

//创建AudioRender实例

unique_ptr<AudioRenderer> audioRenderer = AudioRenderer::Create(rendererOptions);

shared_ptr<AudioRendererCallback> cb1 = make_shared<AudioRendererCallbackTestImpl>();

//设置音频渲染回调

ret = audioRenderer->SetRendererCallback(cb1);

//InitRender方法主要调用了audioRenderer实例的Start方法,启动音频渲染

if (!InitRender(audioRenderer)) {

AUDIO_ERR_LOG("AudioRendererTest: Init render failed");

fclose(wavFile);

return false;

}

//StartRender方法主要是读取wavFile文件的数据,然后通过调用audioRenderer实例的Write方法进行播放

if (!StartRender(audioRenderer, wavFile)) {

AUDIO_ERR_LOG("AudioRendererTest: Start render failed");

fclose(wavFile);

return false;

}

//停止渲染

if (!audioRenderer->Stop()) {

AUDIO_ERR_LOG("AudioRendererTest: Stop failed");

}

//释放渲染

if (!audioRenderer->Release()) {

AUDIO_ERR_LOG("AudioRendererTest: Release failed");

}

//关闭wavFile

fclose(wavFile);

return true;

}

首先读取wav文件,通过读取到wav文件的头信息对AudioRendererOptions相关的参数进行设置,包括编码格式、采样率、采样格式、通道数等。根据AudioRendererOptions设置的参数来创建AudioRenderer实例(实际上是AudioRendererPrivate),后续的音频渲染主要是通过AudioRenderer实例进行。创建完成后,调用AudioRenderer的Start方法,启动音频渲染。启动后,通过AudioRenderer实例的Write方法,将数据写入,音频数据会被播放。

五、调用流程

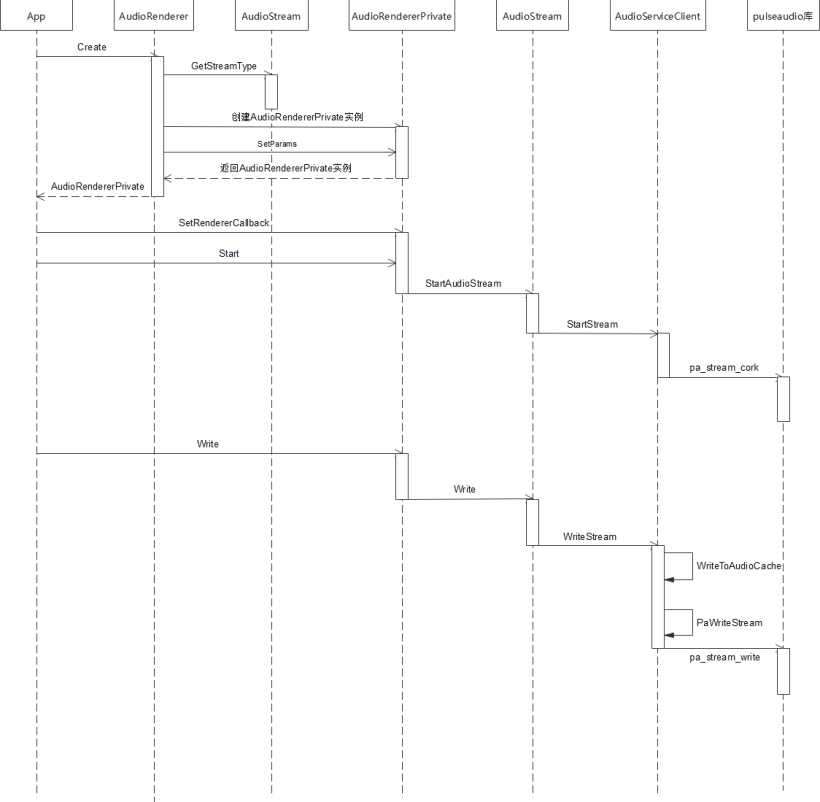

1. 创建AudioRenderer

std::unique_ptr<AudioRenderer> AudioRenderer::Create(const std::string cachePath,

const AudioRendererOptions &rendererOptions, const AppInfo &appInfo)

{

ContentType contentType = rendererOptions.rendererInfo.contentType;

StreamUsage streamUsage = rendererOptions.rendererInfo.streamUsage;

AudioStreamType audioStreamType = AudioStream::GetStreamType(contentType, streamUsage);

auto audioRenderer = std::make_unique<AudioRendererPrivate>(audioStreamType, appInfo);

if (!cachePath.empty()) {

AUDIO_DEBUG_LOG("Set application cache path");

audioRenderer->SetApplicationCachePath(cachePath);

}

audioRenderer->rendererInfo_.contentType = contentType;

audioRenderer->rendererInfo_.streamUsage = streamUsage;

audioRenderer->rendererInfo_.rendererFlags = rendererOptions.rendererInfo.rendererFlags;

AudioRendererParams params;

params.sampleFormat = rendererOptions.streamInfo.format;

params.sampleRate = rendererOptions.streamInfo.samplingRate;

params.channelCount = rendererOptions.streamInfo.channels;

params.encodingType = rendererOptions.streamInfo.encoding;

if (audioRenderer->SetParams(params) != SUCCESS) {

AUDIO_ERR_LOG("SetParams failed in renderer");

audioRenderer = nullptr;

return nullptr;

}

return audioRenderer;

}

首先通过AudioStream的GetStreamType方法获取音频流的类型,根据音频流类型创建AudioRendererPrivate对象,AudioRendererPrivate是AudioRenderer的子类。紧接着对audioRenderer进行参数设置,其中包括采样格式、采样率、通道数、编码格式。设置完成后返回创建的AudioRendererPrivate实例。

2. 设置回调

int32_t AudioRendererPrivate::SetRendererCallback(const std::shared_ptr<AudioRendererCallback> &callback)

{

RendererState state = GetStatus();

if (state == RENDERER_NEW || state == RENDERER_RELEASED) {

return ERR_ILLEGAL_STATE;

}

if (callback == nullptr) {

return ERR_INVALID_PARAM;

}

// Save reference for interrupt callback

if (audioInterruptCallback_ == nullptr) {

return ERROR;

}

std::shared_ptr<AudioInterruptCallbackImpl> cbInterrupt =

std::static_pointer_cast<AudioInterruptCallbackImpl>(audioInterruptCallback_);

cbInterrupt->SaveCallback(callback);

// Save and Set reference for stream callback. Order is important here.

if (audioStreamCallback_ == nullptr) {

audioStreamCallback_ = std::make_shared<AudioStreamCallbackRenderer>();

if (audioStreamCallback_ == nullptr) {

return ERROR;

}

}

std::shared_ptr<AudioStreamCallbackRenderer> cbStream =

std::static_pointer_cast<AudioStreamCallbackRenderer>(audioStreamCallback_);

cbStream->SaveCallback(callback);

(void)audioStream_->SetStreamCallback(audioStreamCallback_);

return SUCCESS;

}

参数传入的回调主要涉及到两个方面:一方面是AudioInterruptCallbackImpl中设置了我们传入的渲染回调,另一方面是AudioStreamCallbackRenderer中也设置了渲染回调。

3. 启动渲染

bool AudioRendererPrivate::Start(StateChangeCmdType cmdType) const

{

AUDIO_INFO_LOG("AudioRenderer::Start");

RendererState state = GetStatus();

AudioInterrupt audioInterrupt;

switch (mode_) {

case InterruptMode::SHARE_MODE:

audioInterrupt = sharedInterrupt_;

break;

case InterruptMode::INDEPENDENT_MODE:

audioInterrupt = audioInterrupt_;

break;

default:

break;

}

AUDIO_INFO_LOG("AudioRenderer::Start::interruptMode: %{public}d, streamType: %{public}d, sessionID: %{public}d",

mode_, audioInterrupt.streamType, audioInterrupt.sessionID);

if (audioInterrupt.streamType == STREAM_DEFAULT || audioInterrupt.sessionID == INVALID_SESSION_ID) {

return false;

}

int32_t ret = AudioPolicyManager::GetInstance().ActivateAudioInterrupt(audioInterrupt);

if (ret != 0) {

AUDIO_ERR_LOG("AudioRendererPrivate::ActivateAudioInterrupt Failed");

return false;

}

return audioStream_->StartAudioStream(cmdType);

}

AudioPolicyManager::GetInstance().ActivateAudioInterrupt这个操作主要是根据AudioInterrupt来进行音频中断的激活,这里涉及了音频策略相关的内容,后续会专门出关于音频策略的文章进行分析。这个方法的核心是通过调用AudioStream的StartAudioStream方法来启动音频流。

bool AudioStream::StartAudioStream(StateChangeCmdType cmdType)

{

int32_t ret = StartStream(cmdType);

resetTime_ = true;

int32_t retCode = clock_gettime(CLOCK_MONOTONIC, &baseTimestamp_);

if (renderMode_ == RENDER_MODE_CALLBACK) {

isReadyToWrite_ = true;

writeThread_ = std::make_unique<std::thread>(&AudioStream::WriteCbTheadLoop, this);

} else if (captureMode_ == CAPTURE_MODE_CALLBACK) {

isReadyToRead_ = true;

readThread_ = std::make_unique<std::thread>(&AudioStream::ReadCbThreadLoop, this);

}

isFirstRead_ = true;

isFirstWrite_ = true;

state_ = RUNNING;

AUDIO_INFO_LOG("StartAudioStream SUCCESS");

if (audioStreamTracker_) {

AUDIO_DEBUG_LOG("AudioStream:Calling Update tracker for Running");

audioStreamTracker_->UpdateTracker(sessionId_, state_, rendererInfo_, capturerInfo_);

}

return true;

}

AudioStream的StartAudioStream主要的工作是调用StartStream方法,StartStream方法是AudioServiceClient类中的方法。AudioServiceClient类是AudioStream的父类。接下来看一下AudioServiceClient的StartStream方法。

int32_t AudioServiceClient::StartStream(StateChangeCmdType cmdType)

{

int error;

lock_guard<mutex> lockdata(dataMutex);

pa_operation *operation = nullptr;

pa_threaded_mainloop_lock(mainLoop);

pa_stream_state_t state = pa_stream_get_state(paStream);

streamCmdStatus = 0;

stateChangeCmdType_ = cmdType;

operation = pa_stream_cork(paStream, 0, PAStreamStartSuccessCb, (void *)this);

while (pa_operation_get_state(operation) == PA_OPERATION_RUNNING) {

pa_threaded_mainloop_wait(mainLoop);

}

pa_operation_unref(operation);

pa_threaded_mainloop_unlock(mainLoop);

if (!streamCmdStatus) {

AUDIO_ERR_LOG("Stream Start Failed");

ResetPAAudioClient();

return AUDIO_CLIENT_START_STREAM_ERR;

} else {

AUDIO_INFO_LOG("Stream Started Successfully");

return AUDIO_CLIENT_SUCCESS;

}

}

StartStream方法中主要是调用了pulseaudio库的pa_stream_cork方法进行流启动,后续就调用到了pulseaudio库中了。pulseaudio库我们暂且不分析。

4. 写入数据

int32_t AudioRendererPrivate::Write(uint8_t *buffer, size_t bufferSize)

{

return audioStream_->Write(buffer, bufferSize);

}

通过调用AudioStream的Write方式实现功能,接下来看一下AudioStream的Write方法。

size_t AudioStream::Write(uint8_t *buffer, size_t buffer_size)

{

int32_t writeError;

StreamBuffer stream;

stream.buffer = buffer;

stream.bufferLen = buffer_size;

isWriteInProgress_ = true;

if (isFirstWrite_) {

if (RenderPrebuf(stream.bufferLen)) {

return ERR_WRITE_FAILED;

}

isFirstWrite_ = false;

}

size_t bytesWritten = WriteStream(stream, writeError);

isWriteInProgress_ = false;

if (writeError != 0) {

AUDIO_ERR_LOG("WriteStream fail,writeError:%{public}d", writeError);

return ERR_WRITE_FAILED;

}

return bytesWritten;

}

Write方法中分成两个阶段,首次写数据,先调用RenderPrebuf方法,将preBuf_的数据写入后再调用WriteStream进行音频数据的写入。

size_t AudioServiceClient::WriteStream(const StreamBuffer &stream, int32_t &pError)

{

size_t cachedLen = WriteToAudioCache(stream);

if (!acache.isFull) {

pError = error;

return cachedLen;

}

pa_threaded_mainloop_lock(mainLoop);

const uint8_t *buffer = acache.buffer.get();

size_t length = acache.totalCacheSize;

error = PaWriteStream(buffer, length);

acache.readIndex += acache.totalCacheSize;

acache.isFull = false;

if (!error && (length >= 0) && !acache.isFull) {

uint8_t *cacheBuffer = acache.buffer.get();

uint32_t offset = acache.readIndex;

uint32_t size = (acache.writeIndex - acache.readIndex);

if (size > 0) {

if (memcpy_s(cacheBuffer, acache.totalCacheSize, cacheBuffer + offset, size)) {

AUDIO_ERR_LOG("Update cache failed");

pa_threaded_mainloop_unlock(mainLoop);

pError = AUDIO_CLIENT_WRITE_STREAM_ERR;

return cachedLen;

}

AUDIO_INFO_LOG("rearranging the audio cache");

}

acache.readIndex = 0;

acache.writeIndex = 0;

if (cachedLen < stream.bufferLen) {

StreamBuffer str;

str.buffer = stream.buffer + cachedLen;

str.bufferLen = stream.bufferLen - cachedLen;

AUDIO_DEBUG_LOG("writing pending data to audio cache: %{public}d", str.bufferLen);

cachedLen += WriteToAudioCache(str);

}

}

pa_threaded_mainloop_unlock(mainLoop);

pError = error;

return cachedLen;

}

WriteStream方法不是直接调用pulseaudio库的写入方法,而是通过WriteToAudioCache方法将数据写入缓存中,如果缓存没有写满则直接返回,不会进入下面的流程,只有当缓存写满后,才会调用下面的PaWriteStream方法。该方法涉及对pulseaudio库写入操作的调用,所以缓存的目的是避免对pulseaudio库频繁地做IO操作,提高了效率。

本文主要对OpenHarmony 3.2 Beta多媒体子系统的音频渲染模块进行介绍,首先梳理了Audio Render的整体流程,然后对几个核心的方法进行代码的分析。整体的流程主要通过pulseaudio库启动流,然后通过pulseaudio库的pa_stream_write方法进行数据的写入,最后播放出音频数据。

音频渲染主要分为以下几个层次:

(1)AudioRenderer的创建,实际创建的是它的子类AudioRendererPrivate实例。

(2)通过AudioRendererPrivate设置渲染的回调。

(3)启动渲染,这一部分代码最终会调用到pulseaudio库中,相当于启动了pulseaudio的流。

(4)通过pulseaudio库的pa_stream_write方法将数据写入设备,进行播放。

更多原创内容请关注:深开鸿技术团队

入门到精通、技巧到案例,系统化分享OpenHarmony开发技术,欢迎投稿和订阅,让我们一起携手前行共建生态。

Recommend

About Joyk

Aggregate valuable and interesting links.

Joyk means Joy of geeK