Cluster API Provider for VMware Cloud Director - VMware Cloud Provider Blog

source link: https://blogs.vmware.com/cloudprovider/2022/03/cluster-api-provider-for-vmware-cloud-director-guide.html


Cluster API Provider for VMware Cloud Director

VMware Cloud Director with Container Service Extension provides Kubernetes as a Service to Cloud Providers for Tanzu Kubernetes Clusters with Multi-tenancy at the core. This blog post provides a technical overview of Cluster API for VMware Cloud Director and how to get started with Cluster API.

Cluster API for VMware Cloud Director (CAPVCD) provides the following capabilities:

- Cluster API – A standardized approach to managing multiple Kubernetes clusters from a Kubernetes cluster: create, scale, and manage Kubernetes clusters with a declarative API.

- Multi-Tenant – With Cluster API Provider for VMware Cloud Director, customers can self-manage their management clusters and workload clusters, providing complete isolation.

- Multiple Control Plane based Management Cluster – Customers can provide their own management cluster with multiple control planes, which gives the management cluster high availability. The control planes of Tanzu clusters use NSX-T Advanced Load Balancer.

This blog post describes basic concepts of Cluster API, how providers can offer CAPVCD to their customers, and how customers can self-manage Kubernetes Clusters.

Cluster API for VMware Cloud Director is an additional package to be installed on a Tanzu Kubernetes Cluster. It requires the following components in VMware Cloud Director and the underlying infrastructure. Figure 1 shows the high-level infrastructure, which includes Container Service Extension, the SDDC stack, NSX-T Unified Appliance, and NSX-T Advanced Load Balancer. Customers can use an existing CSE-based Tanzu Kubernetes Cluster to introduce Cluster API in their organizations.

Figure 1 Building components of Kubernetes as a Service for VMware Cloud Director

Cluster API Concepts

Figure 2 Cluster API Concepts at a high level for VMware Cloud Director.

Bootstrap Cluster

This cluster initiates the creation of a management cluster in a customer organization. Optionally, the bootstrap cluster also manages the lifecycle of the management cluster. Customers can use their CSE-created Tanzu Kubernetes Cluster as a bootstrap cluster. Alternatively, customers can use Kind as a bootstrap cluster.

Management Cluster

This cluster is created by each customer organization to manage the workload clusters. The management cluster is usually created with multiple control plane VMs, using Cluster API. From that point on, the management cluster handles the lifecycle of all workload clusters in the customer organization. This TKG cluster has TKG packages and Cluster API packages, as described in a later section.

Workload Cluster

Customers can create workload clusters within their organization using management clusters. A workload cluster can have one or multiple control planes to achieve high availability.

For Providers: Offer Cluster API to customers

The provider admin needs to complete the following steps so that Cluster API operates successfully on the customer side:

- Preserve ExtraConfig Elements during OVA Import and Export (Follow this KB article to enable this right)

- Create a new RDE as follows:

POST: https://<VMware Cloud Director’s URL>/cloudapi/1.0.0/entityTypes

- Publish Rights bundles to Customer Organization

Provider admin can add the above-listed rights to the ‘Default Rights Bundle’ or create a new rights bundle and publish it to each customer organization at the time of onboarding. Figure 3 showcases all necessary rights of customer ACME to enable CAPVCD. Please refer to the documentation for the complete rights bundle for Tanzu Capabilities. After this step is complete, the customer can self-provision bootstrap/Management/Workload Clusters described in the next section.

Figure 3 CAPVCD rights bundles at a glance
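The RDE creation call listed in the provider steps can be sketched in Python as follows. This is only an illustration of how the POST request to `/cloudapi/1.0.0/entityTypes` might be assembled. The function name, the `Accept` API version, the bearer-token authentication, and the placeholder payload are all assumptions rather than details from this post, and the real entity type definition must come from the CAPVCD documentation.

```python
import json
import urllib.request


def build_entity_type_request(vcd_url, token, entity_type, api_version="36.0"):
    """Build (but do not send) the POST request that registers an RDE type.

    vcd_url      base URL of VMware Cloud Director
    token        provider admin access token (assumed bearer authentication)
    entity_type  dict holding the entity type definition (not given in this post)
    api_version  assumed API version; adjust to the target VCD release
    """
    url = vcd_url.rstrip("/") + "/cloudapi/1.0.0/entityTypes"
    headers = {
        "Accept": f"application/json;version={api_version}",
        "Content-Type": "application/json",
        "Authorization": f"Bearer {token}",
    }
    body = json.dumps(entity_type).encode("utf-8")
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


if __name__ == "__main__":
    # Hypothetical placeholder payload: replace with the real RDE definition.
    placeholder = {"name": "placeholder-entity-type"}
    req = build_entity_type_request("https://vcd.example.com", "TOKEN", placeholder)
    print(req.get_method(), req.full_url)
```

Sending the request would be a matter of passing the returned object to `urllib.request.urlopen`. The sketch stops short of that so it can be inspected without a live VMware Cloud Director instance.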

For Customers: Self manage TKG clusters with Cluster API

Once the Tanzu Kubernetes Cluster is available as a bootstrap cluster, the customer admin can follow these steps.

Install clusterctl, kubectl, kind, and docker on the local machine
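Before continuing, it can help to confirm that the four tools are reachable from the shell. The following is a minimal sketch, not part of the original procedure, and the helper name `missing_tools` is made up for illustration.

```python
import shutil

# Tools named in this step of the guide.
REQUIRED_TOOLS = ["clusterctl", "kubectl", "kind", "docker"]


def missing_tools(tools=REQUIRED_TOOLS):
    """Return the entries of `tools` that are not found on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


if __name__ == "__main__":
    missing = missing_tools()
    if missing:
        print("Not found on PATH:", ", ".join(missing))
    else:
        print("All required tools are available.")
```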

Convert Tanzu Kubernetes Cluster to Bootstrap Cluster

It is assumed that the customer admin has downloaded the kubeconfig of the Tanzu Kubernetes Cluster from the VCD tenant portal. This blog post explains how to prepare the Tanzu Kubernetes Cluster on VMware Cloud Director. For this section, we will refer to the Tanzu Kubernetes Cluster as the bootstrap cluster.

Initialize Cluster API in the bootstrap cluster

Make changes to the following files based on Infrastructure

Provision Management Cluster

To create a management cluster, the user can continue to work with the bootstrap cluster. The following code section describes the steps to create the management cluster. Cluster API uses declarative YAML to create clusters. The mgmt-capi.yaml file stores the definitions of the various components of the management cluster. Once the management cluster is up, developers or other users with rights to create a TKG cluster can create a workload cluster with the management cluster’s kubeconfig.

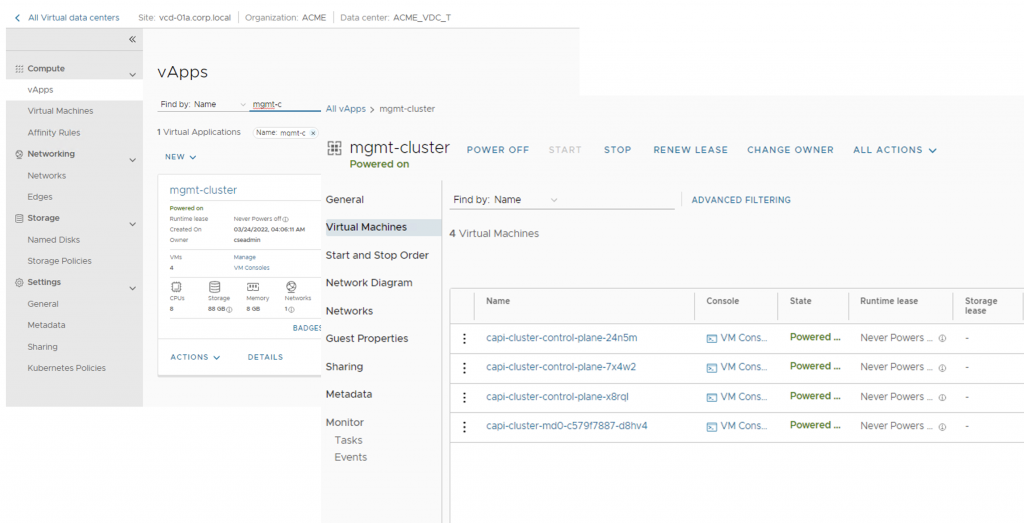

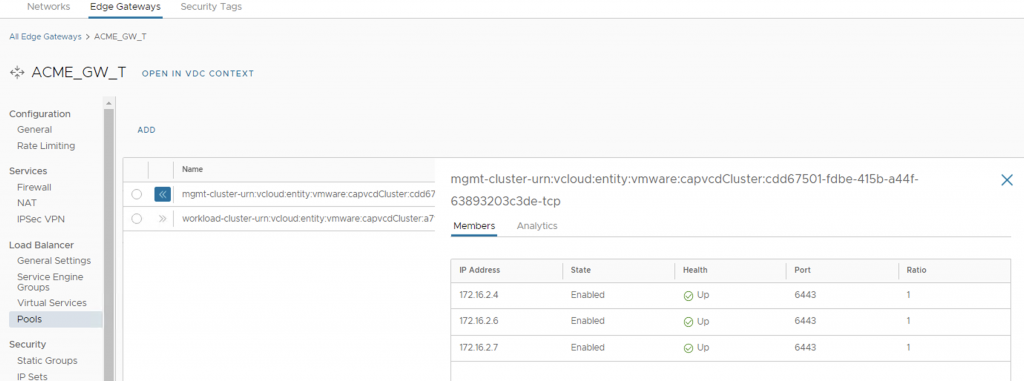

This YAML file creates a Tanzu Kubernetes cluster named mgmt-cluster with TKG version 1.21.2 (ubuntu-2004-kube-v1.21.2+vmware.1-tkg.2-14542111852555356776), 3 control plane VMs (kind: KubeadmControlPlane, replicas: 3), and 1 worker VM (kind: MachineDeployment, replicas: 1). Change the spec to match the desired definition of the management cluster. Once the cluster is up and running, install Cluster API on this ‘mgmt-cluster’ to make it the management cluster. The following section shows the steps to convert this new mgmt-cluster into a management cluster and then create a workload cluster. Figures 4, 6, and 7 show the created mgmt-cluster, its NAT rules, and its Load Balancer Pool (backed by NSX-T Advanced LB) on VMware Cloud Director.

Figure 4 Created Management Cluster on VMware Cloud Director tenant portal
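The manifest itself survives here only as images, so the sketch below reproduces just the two replica settings described above, written as Python dictionaries. All other fields (apiVersion, metadata, templates, and so on) are deliberately left out, and the helper names are illustrative rather than taken from the original file.

```python
import json


def replica_fragments(control_plane_replicas=3, worker_replicas=1):
    """Return the replica-bearing fragments of a cluster manifest.

    Only `kind` and `spec.replicas` are shown; a real manifest needs
    many more fields that this post does not reproduce.
    """
    return [
        {"kind": "KubeadmControlPlane", "spec": {"replicas": control_plane_replicas}},
        {"kind": "MachineDeployment", "spec": {"replicas": worker_replicas}},
    ]


def summarize(docs):
    """Map each object's kind to its replica count."""
    return {doc["kind"]: doc["spec"]["replicas"] for doc in docs}


if __name__ == "__main__":
    print(json.dumps(summarize(replica_fragments()), indent=2))
```

The defaults mirror the mgmt-cluster example: three control plane VMs and one worker VM.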

Provision workload Cluster

Once the management cluster is ready, use it as follows to create and manage new workload clusters with multiple control planes.

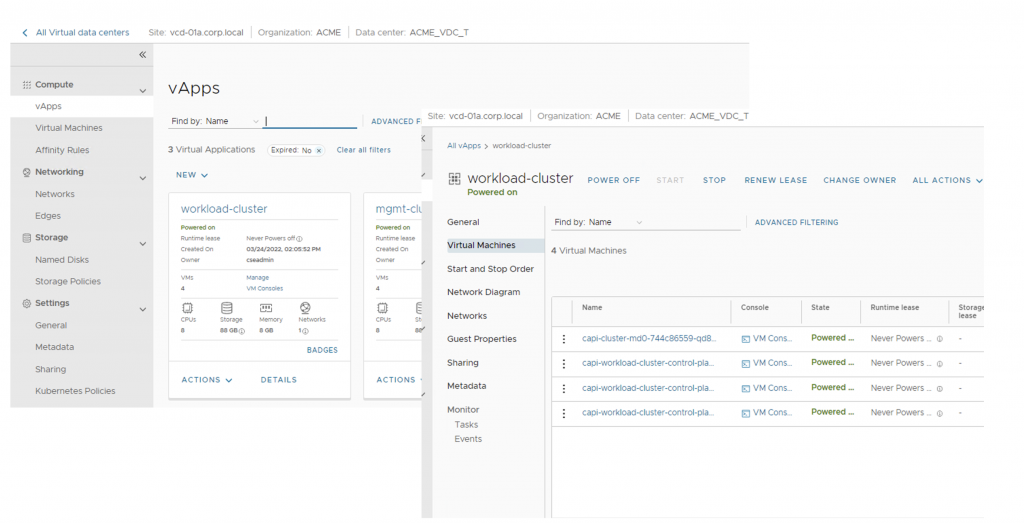
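As a rough sketch of the idea, and not the author's exact commands, a workload cluster manifest is applied against the management cluster by pointing kubectl at the management cluster's kubeconfig. The helper name and both file names below are assumptions chosen for illustration.

```python
import shlex


def kubectl_apply_command(kubeconfig, manifest):
    """Build the kubectl argv that applies `manifest` using `kubeconfig`."""
    return ["kubectl", "--kubeconfig", kubeconfig, "apply", "-f", manifest]


if __name__ == "__main__":
    # Hypothetical file names: the management cluster's kubeconfig and
    # the workload cluster definition.
    cmd = kubectl_apply_command("mgmt-cluster.kubeconfig", "capi_workload.yaml")
    print(shlex.join(cmd))
    # To run it for real: subprocess.run(cmd, check=True)
```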

The following screenshots show the vApp created for the workload cluster, along with its NAT rules and load balancer pool (backed by NSX-T Advanced Load Balancer).

Figure 5 Created workload Cluster on VMware Cloud Director tenant portal

Figure 6 Virtual Services and Pools created for each cluster’s control plane

Figure 7: NAT Rules on Edge Gateway for Workload and Management Cluster

Lifecycle management of the Management cluster

Customers can scale management clusters by changing the YAML definition (in this example, capi_mgmt.yaml and capi_workload.yaml) to the desired state. These commands can be run from the bootstrap cluster, which is the parent of the management cluster.

To summarize, this blog post gave a high-level overview of Cluster API on VMware Cloud Director, covering what providers need to set up, how customers are onboarded, and how management and workload clusters are launched and managed in the customer organization.
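Scaling declaratively amounts to changing a replica count in the definition and re-applying it. The sketch below shows that edit on manifest objects already loaded as Python dictionaries. The function name and the sample data are illustrative, and loading or writing the YAML file is left out.

```python
import copy


def scale_replicas(docs, kind, replicas):
    """Return a copy of `docs` with spec.replicas set for every object of `kind`."""
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    updated = copy.deepcopy(docs)  # leave the caller's objects untouched
    for doc in updated:
        if doc.get("kind") == kind:
            doc.setdefault("spec", {})["replicas"] = replicas
    return updated


if __name__ == "__main__":
    # Sample objects mirroring the management cluster described earlier.
    manifest = [
        {"kind": "KubeadmControlPlane", "spec": {"replicas": 3}},
        {"kind": "MachineDeployment", "spec": {"replicas": 1}},
    ]
    scaled = scale_replicas(manifest, "MachineDeployment", 3)
    print(scaled)
```

After the edited definition is written back and applied again, the Cluster API controllers reconcile the cluster toward the new replica count.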


Sachi Bhatt

Sachi is a Technical Product Manager at VMware in Cloud Services Business Unit.
