OpenAI’s policies hinder reproducible research on language models

source link: https://aisnakeoil.substack.com/p/openais-policies-hinder-reproducible



LLMs have become privately controlled research infrastructure

Researchers rely on ML models created by companies to conduct research. One such model, OpenAI's Codex, has been used in about a hundred academic papers. Codex, like other OpenAI models, is not open source, so users depend on OpenAI for access to the model.

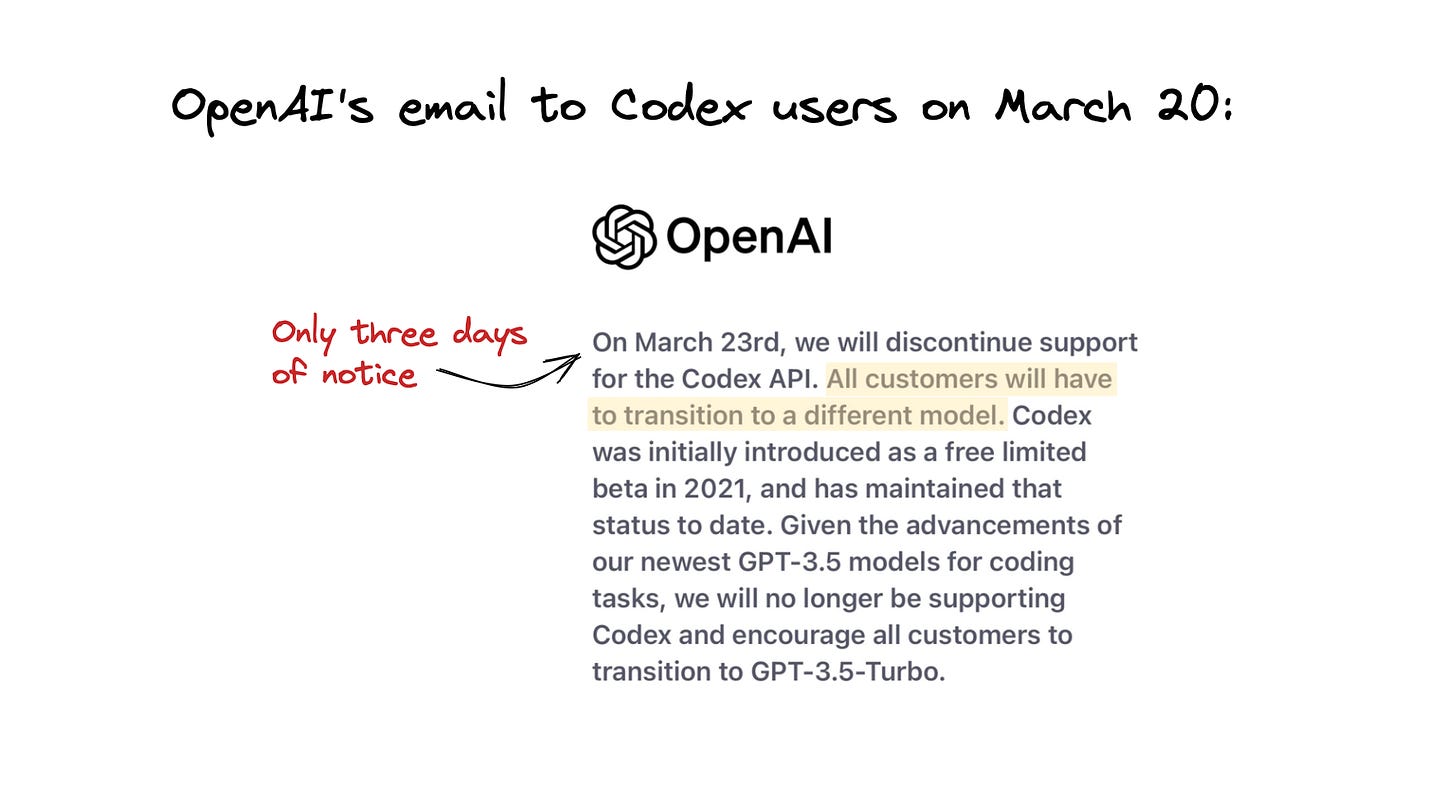

On Monday, OpenAI announced that it would discontinue support for Codex by Thursday. Around a hundred academic papers, and likely more, would no longer be reproducible: independent researchers would be unable to assess their validity or build on their results. Developers building applications on OpenAI's models would also have no way to ensure that their applications keep working as expected.

The importance of reproducibility

Reproducibility—the ability to independently verify research findings—is a cornerstone of research. Scientific research already suffers from a reproducibility crisis, including in fields that use ML.

Since small changes in a model can result in significant downstream effects, a prerequisite for reproducible research is access to the exact model used in an experiment. If a researcher fails to reproduce a paper’s results when using a newer model, there’s no way to know if it is because of differences between the models or flaws in the original paper.

OpenAI responded to the criticism by saying it would give researchers access to Codex. But the application process is opaque: researchers must fill out a form, and the company decides who gets approved. It is not clear who counts as a researcher, how long applicants will have to wait, or how many people will be approved. Most importantly, Codex is available through the researcher program only “for a limited period of time” (exactly how long is unknown).

OpenAI regularly updates its newer models, such as GPT-3.5 and GPT-4, so using those models is inherently a barrier to reproducibility. The company does offer snapshots of specific versions so that the models keep behaving the same way in downstream applications. But OpenAI maintains these snapshots for only three months. That means the prospects for reproducible research using the newer models are also dim to nonexistent.

Researchers aren't the only ones affected. Developers who build applications on OpenAI's models are also left out: they cannot be sure how those models will behave in the future once current versions are deprecated. OpenAI says developers should switch to the newer GPT-3.5 model, but this model performs worse than Codex in some settings.

LLMs are research infrastructure

Concerns about OpenAI's model deprecations are amplified because LLMs are becoming key pieces of infrastructure. Researchers and developers rely on LLMs as a foundation layer, which is then fine-tuned for specific applications or used to answer research questions. OpenAI isn't maintaining this infrastructure responsibly: it does not keep versioned models available long enough for work built on them to be reproduced.

Researchers had less than a week to switch to another model before OpenAI deprecated Codex. OpenAI asked them to move to GPT-3.5 models. But these models are not equivalent to Codex, so researchers' earlier work becomes irreproducible. The company's hasty deprecation also falls short of standard practice for deprecating software: companies usually give months or even years of advance notice before discontinuing a product.

You’re reading AI Snake Oil, a blog about our upcoming book.

Open-sourcing LLMs aids reproducibility

LLMs hold exciting possibilities for research. Using publicly available LLMs could reduce the resource gap between tech companies and academic research, since researchers don't need to train LLMs from scratch. As research in generative AI shifts from developing LLMs to using them for downstream tasks, it is important to ensure reproducibility.

OpenAI's haphazard deprecation of Codex shows the need for caution when using closed models from tech companies. Using open-source models, such as BLOOM, would circumvent these issues: researchers would have access to the model instead of relying on tech companies. Open-sourcing LLMs is a complex question, and there are many other factors to consider before deciding whether that's the right step. But open-source LLMs could be a key step in ensuring reproducibility.

To estimate how many papers use Codex, we searched arXiv CS papers written after August 2021 with the term "Codex" in their abstracts. This returned 96 results. This is likely an undercount: it excludes papers submitted to venues other than arXiv and papers still being drafted.
