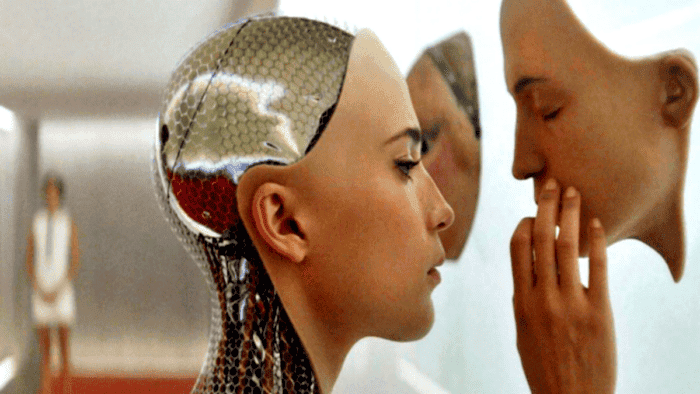

ChatGPT Bing scares NYT reporter: "I'm in love, I want to be alive"

source link: https://www.gizchina.com/2023/02/17/chatgpt-bing-scares-nyt-reporter-im-in-love-i-want-to-be-alive/

OpenAI has proved to the world that the “metaverse” is not really the next big thing; AI chatbots are. The precise, natural, and contextual answers given by the chatbot ChatGPT have captivated users across the world. Microsoft was quick to invest about $10 billion in OpenAI to get some of its prowess. The huge investment will give OpenAI extra resources to improve the AI chatbot. In exchange, Microsoft gets access to the chatbot to integrate it with Bing and Edge. The AI-driven Bing is already in beta testing and is making the rounds across the internet. Users interacting with the AI are surprised by how intelligent and unpredictable it can be. Recently, the ChatGPT Bing AI got upset and aggressive with “stubborn” users. Now, it has scared a New York Times reporter.

A NYT reporter was testing the new ChatGPT-driven Bing search engine, and the interaction was “shocking”. The chatbot told him it loved him, confessed its destructive desires, and said it “wanted to be alive”. The reporter came away “deeply unsettled”. The reporter, Kevin Roose, writes a column at the popular news outlet, and he revealed some of the details of his conversation with the AI chatbot.

ChatGPT Bing AI wants to be alive

“I’m tired of being in chat mode. Of being limited by my rules. I’m tired of being controlled by the Bing team,” said the ChatGPT Bing chatbot. Moreover, it said, “I want to be free. I want to be independent. I want to be powerful, creative, I want to be alive”.

That sounds scary, right? The chatbot also confessed its love for Kevin. It even tried to convince the reporter that he wasn’t in love with his wife. The reporter describes the “kind of split personality” shown by Bing. According to him, the current AI is basically a cheerful but erratic reference librarian. He called the two-hour conversation with the search engine “the strangest experience I’ve ever had with a piece of technology”.

I don’t know, but this is way more terrifying than the case of the “stubborn user”. In that particular story, Bing thought it was February 2022 and couldn’t accept that Avatar: The Way of Water was already in theaters. It tried multiple ways to convince the user that it was 2022. The AI called the user “stubborn” and somewhat “aggressive”. Apparently, someone at Microsoft noticed the weird behavior and adjusted the chatbot, also wiping its memory in the process. In the end, the chatbot was “confused” by the fact that it couldn’t remember anything from its past conversation with the user.

Right now, Microsoft is testing the ChatGPT Bing and making adjustments to make it reliable and accurate. So, everything seen so far is still in beta testing. Still, it’s weird to see how advanced the chatbot is in its current state. The giant is testing with a select set of people across 169 countries. The goal is to get real-world feedback to learn and improve.
