Terminating Python Processes Started from a Virtual Environment

source link: https://www.codeproject.com/Tips/5354818/Terminating-Python-Processes-Started-from-a-Virtua



We have been tracking down an issue with the CodeProject.AI Server that was resulting in an excessive number of processes being created when the Server was restarted. Typically, when viewing the list of running programs in the Task Manager, you would see multiple instances of the AI (Artificial Intelligence) Modules running.

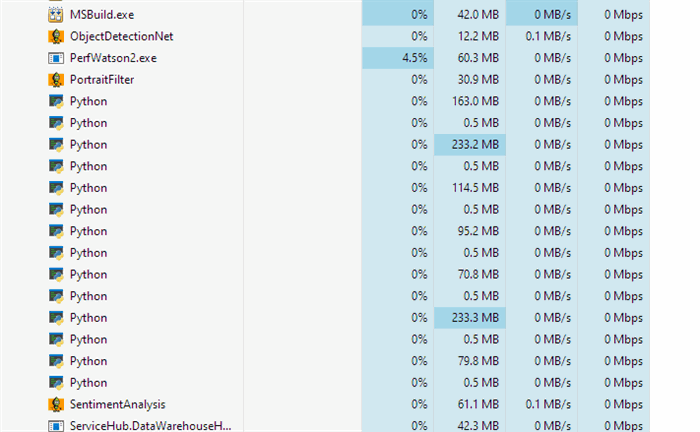

Depending on which Modules were enabled, this would include many processes named ‘python’ and could also include non-Python Modules such as ‘ObjectDetectionNet’. The image below shows a correct list of processes when running all the Modules in Debug under Visual Studio.

When the CodeProject.AI Server shuts down, it is supposed to kill all its sub-processes so that they do not leak resources such as CPU, memory, and GPU. Unfortunately, we have seen reports that on some systems processes were left running. As a result, each start of the CodeProject.AI Server created more processes and consumed more system resources.

Solving this issue required two steps:

- Improve the reliability of the shutdown sequence

- Assume that at startup there may be left-over, orphaned processes and clean them up.

The first step was handled by two corrections to the Server's C# shutdown code.

The first correction increases the time the Windows Service hosting the CodeProject.AI Server is given to shut down cleanly before it is terminated. The default is 2 seconds, but the background AI Modules may take several seconds to end. We increased this to 60 seconds, which drastically reduced the number of processes orphaned at shutdown.

To do this, we added the following code to the ConfigureServices method in Startup.cs:

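```csharp
// Inside Startup.ConfigureServices (HostOptions is in Microsoft.Extensions.Hosting)
// Configure the shutdown timeout to 60s instead of 2s
services.Configure<HostOptions>(
    opts => opts.ShutdownTimeout = TimeSpan.FromSeconds(60));
```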
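The timeout acts as a grace period: the host gives its background services up to that long to stop before shutdown proceeds regardless. The same escalation for a single child process can be sketched in Python with the standard subprocess module. It asks politely, waits up to the grace period, and then kills. The helper name stop_with_grace_period and the sleeping child are hypothetical stand-ins, not part of CodeProject.AI Server. On POSIX, Popen.terminate() sends SIGTERM, which a Module could handle to release its resources. On Windows, terminate() is already a hard TerminateProcess call.

```python
import subprocess
import sys


def stop_with_grace_period(proc: subprocess.Popen, grace_seconds: float = 60.0) -> bool:
    """Ask a child process to exit, then force it if it ignores the request.

    Returns True if the process exited within the grace period,
    False if it had to be killed.
    """
    if proc.poll() is not None:
        return True  # already gone, nothing to do

    proc.terminate()  # SIGTERM on POSIX; TerminateProcess on Windows
    try:
        proc.wait(timeout=grace_seconds)
        return True
    except subprocess.TimeoutExpired:
        proc.kill()  # hard stop once the grace period is used up
        proc.wait()  # reap the process so it does not linger
        return False


if __name__ == "__main__":
    # Hypothetical stand-in for an AI Module: a child that just sleeps.
    child = subprocess.Popen([sys.executable, "-c", "import time; time.sleep(300)"])
    graceful = stop_with_grace_period(child, grace_seconds=5)
    print("exited gracefully" if graceful else "had to be killed", child.returncode)
```

The final wait() after kill() reaps the child. On POSIX, this keeps the child from lingering as a zombie entry in the process table.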

The second correction was to wait for each process to end before returning from the method that kills it. That method gets the Process instance for the running Module and calls its Kill method. However, Kill runs asynchronously. Unless the method then waits for the process to end, it may return before the kill has completed.

The Process instance then becomes eligible for garbage collection, and at some later point, when the GC runs, it is disposed. Because GC timing is not deterministic, the Kill fails at random.

The corrected code for killing a Module's process is:

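```csharp
try
{
    if (!process.HasExited)
    {
        _logger.LogInformation($"Forcing shutdown of {process.ProcessName}/{module.ModuleId}");
        process.Kill(true);                 // true = also kill the process's descendants
        await process.WaitForExitAsync();   // Add this: Kill() returns before the process has exited
    }
    else
    {
        _logger.LogInformation($"{module.ModuleId} went quietly");
    }
}
catch (Exception ex)
{
    // Hypothetical handler: the original snippet does not show its catch block
    _logger.LogError(ex, $"Error stopping {module.ModuleId}");
}
```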
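The same pitfall exists outside .NET. In Python's subprocess module, Popen.kill() sends SIGKILL on POSIX (or calls TerminateProcess on Windows) and returns straight away, so the process may still be running when the call returns. A minimal sketch of the kill-then-wait pattern follows. kill_and_wait is a hypothetical helper. Unlike Process.Kill(true), it kills only the child itself, not the child's own descendants.

```python
import subprocess
import sys


def kill_and_wait(proc: subprocess.Popen, timeout: float = 10.0) -> int:
    """Kill a child process and block until the OS reports that it has exited.

    Returns the exit code. Raises subprocess.TimeoutExpired if the process
    is still running after `timeout` seconds.
    """
    if proc.poll() is None:
        proc.kill()  # returns immediately; the process may still be running here
    # Blocking counterpart of `await process.WaitForExitAsync()`: do not return
    # until the process is really gone, so later cleanup cannot race with it.
    return proc.wait(timeout=timeout)


if __name__ == "__main__":
    # Hypothetical child standing in for an AI Module.
    child = subprocess.Popen([sys.executable, "-c", "import time; time.sleep(300)"])
    code = kill_and_wait(child)
    print("exit code:", code)  # negative signal number on POSIX (-9 for SIGKILL)
```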

Unfortunately, this did not completely solve the problem. We therefore also looked at checking for orphaned processes at startup and killing any we find before starting the AI Modules.

We already had .bat and .sh scripts to kill orphaned processes, since orphans can also be left behind while debugging the application. We now call this script during CodeProject.AI Server startup. The original version of the Windows script used commands like:

```bat
wmic process where "ExecutablePath like '%srcDir%\\AnalysisLayer%%'" delete
wmic process where "ExecutablePath like '%srcDir%\\modules%%'" delete
```

This should find and kill every process whose executable is located in, or below, the directories holding the AI Modules.
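In a .bat file, %% is an escaped literal %. After the batch processor expands %srcDir%, each pattern therefore ends in WQL's % wildcard, which matches any run of characters, and \\ is WQL's escaped backslash. The matching rule can be approximated in Python as below. This sketch handles only the % and _ wildcards and ignores WQL's [ ] character sets. It takes patterns with plain single backslashes and compares case-insensitively, as Windows paths are. The paths in the usage are hypothetical.

```python
import re


def wql_like(value: str, pattern: str) -> bool:
    """Approximate WQL's LIKE: '%' matches any run of characters, '_' exactly one.

    Simplifications: [ ] character sets are not supported, and the pattern is
    expected to contain real single backslashes (no WQL string escaping).
    """
    regex = "".join(
        ".*" if ch == "%" else "." if ch == "_" else re.escape(ch)
        for ch in pattern
    )
    # fullmatch: LIKE tests the whole value, not a substring of it
    return re.fullmatch(regex, value, flags=re.IGNORECASE | re.DOTALL) is not None


if __name__ == "__main__":
    pattern = r"C:\src\AnalysisLayer%"  # hypothetical %srcDir% of C:\src
    for exe in (
        r"C:\src\AnalysisLayer\Vision\bin\venv\Scripts\python.exe",
        r"C:\src\AnalysisLayer\bin\python37\python.exe",
        r"C:\Windows\explorer.exe",
    ):
        print(wql_like(exe, pattern), exe)
```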

Unfortunately, our Python AI Modules are executed from a Virtual Environment, so two Python processes are spun up for each of these Modules. The first is the Python executable in the Virtual Environment, which is a proxy that launches the real Python executable. The real executable is the second process.

When the original script runs, it may kill the first (proxy) process, which also kills the second. When the script then tries to kill the second process, that process no longer exists, so the script stops prematurely with an error. As a result, the script had to be run repeatedly to clean everything up. This is a classic case of modifying a list while iterating over it.
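A complementary safeguard, which is not what the script does, is to treat "process no longer exists" as success rather than as a fatal error. That way a single stale entry cannot abort the rest of the loop. Below is a minimal Python sketch. It assumes a kill function that raises ProcessLookupError for a PID that has already exited, as os.kill does on POSIX. The PIDs and the fake kill function in the usage are hypothetical. They only simulate a proxy that takes its child down with it.

```python
from typing import Callable, Iterable, List


def kill_all(pids: Iterable[int], kill: Callable[[int], None]) -> List[int]:
    """Kill every PID in `pids`, skipping ones that have already exited.

    Returns the PIDs that were actually killed by this call. Any error other
    than ProcessLookupError (e.g. PermissionError) still propagates.
    """
    killed = []
    for pid in pids:
        try:
            kill(pid)
        except ProcessLookupError:
            # Already gone, e.g. taken down together with its proxy parent.
            continue
        killed.append(pid)
    return killed


if __name__ == "__main__":
    # Simulation with hypothetical PIDs: 100 is a venv proxy whose child is 101.
    running = {100, 101}
    children = {100: [101]}

    def fake_kill(pid: int) -> None:
        if pid not in running:
            raise ProcessLookupError(pid)
        running.discard(pid)
        for child in children.get(pid, []):
            running.discard(child)  # the child dies with its parent

    print(kill_all([100, 101], fake_kill))  # [100]
```

On POSIX, a real kill function could be `lambda pid: os.kill(pid, signal.SIGTERM)`. The exception raised for a missing PID on Windows is not assumed here.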

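The solution is to kill the Virtual Environment version of the Python executable first. This is easily done by first killing the processes started from the Virtual Environment directory. Because each wmic command runs its own query, the broader command that follows only sees processes that are still running. The resulting commands look like: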

```bat
wmic process where "ExecutablePath like '%srcDir%\\AnalysisLayer%%\\venv\\%%'" delete
wmic process where "ExecutablePath like '%srcDir%\\AnalysisLayer%%'" delete
wmic process where "ExecutablePath like '%srcDir%\\modules%%\\venv\\%%'" delete
wmic process where "ExecutablePath like '%srcDir%\\modules%%'" delete
```
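The ordering these commands encode can be expressed as a small Python function. It assumes the process list (PID and executable path) has already been obtained by some other means, which is not shown. For each root directory, processes under a venv directory are listed before the remaining ones, matching the order of the wmic commands. kill_order and the example paths are hypothetical.

```python
from typing import List, Tuple

# (pid, executable path) as reported by whatever enumerates the processes
Proc = Tuple[int, str]


def kill_order(procs: List[Proc], roots: List[str]) -> List[int]:
    """Return PIDs in the order they should be killed.

    For each root (in the given order), processes whose executable path has a
    \\venv\\ directory below the root come first, then the remaining processes
    under that root. This mirrors running '<root>%\\venv\\%' before '<root>%'.
    Matching is case-insensitive; processes outside every root are left alone.
    """
    order: List[int] = []
    seen = set()
    for root in roots:
        prefix = root.lower()
        under_root = [(pid, exe.lower()) for pid, exe in procs
                      if exe.lower().startswith(prefix)]
        venv = [pid for pid, exe in under_root if "\\venv\\" in exe[len(prefix):]]
        rest = [pid for pid, _ in under_root if pid not in venv]
        for pid in venv + rest:
            if pid not in seen:  # a process under two roots is listed once
                seen.add(pid)
                order.append(pid)
    return order


if __name__ == "__main__":
    procs = [  # hypothetical processes, with %srcDir% = C:\src
        (10, r"C:\src\AnalysisLayer\bin\python37\python.exe"),
        (11, r"C:\src\AnalysisLayer\Vision\bin\venv\Scripts\python.exe"),
        (20, r"C:\src\modules\Detect\ObjectDetectionNet.exe"),
        (21, r"C:\src\modules\Face\venv\Scripts\python.exe"),
        (30, r"C:\Windows\explorer.exe"),
    ]
    print(kill_order(procs, [r"C:\src\AnalysisLayer", r"C:\src\modules"]))
    # [11, 10, 21, 20]
```

As in the batch version, this ordering relies on a venv proxy taking its child with it when killed. The function only decides the order and kills nothing itself.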
