Experience the Future of Communication With GPT-3: Consume GPT-3 API Through Mul...

source link: https://dzone.com/articles/experience-the-future-of-communication-with-chatgp


ChatGPT

ChatGPT is a chatbot fine-tuned from a model in OpenAI's GPT-3.5 series, which builds on the GPT-3 (Generative Pre-trained Transformer 3) language model, and it generates human-like responses to user input. It is designed to carry on conversations with users in a natural, conversational manner and can be used for purposes such as customer service, online support, and virtual assistance.

OpenAI API

OpenAI offers Application Programming Interfaces (APIs) that allow developers to access the company's AI models and use them in their own projects. These APIs provide a range of capabilities, including natural language processing (such as text completion and embeddings) and image generation.

These APIs are accessed via HTTP requests, so they can be called from any language or platform that can send HTTP requests, such as Python, Java, or a MuleSoft (Mule) application. OpenAI also provides official client libraries for popular languages, such as Python and Node.js, to make it easy for developers to get started. Most interestingly, you can fine-tune some of the models on your own dataset so that they work better in a specific domain. Fine-tuning can make a model more accurate for your use case and improve its results.
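To make the fine-tuning idea concrete, here is a minimal sketch that serializes training examples as JSON Lines with `prompt` and `completion` fields, the format OpenAI's GPT-3 fine-tuning accepted at the time. The example questions, the `\n\n###\n\n` prompt separator, and the `to_jsonl` helper are illustrative assumptions, not part of the original article.

```python
import json
from typing import Iterable, Mapping


def to_jsonl(records: Iterable[Mapping[str, str]]) -> str:
    """Serialize prompt/completion pairs as JSON Lines (one JSON object per line)."""
    lines = []
    for record in records:
        # Each training example is expected to carry exactly these two fields.
        if set(record) != {"prompt", "completion"}:
            raise ValueError(f"unexpected keys: {sorted(record)}")
        # json.dumps escapes embedded newlines, so each record stays on one line.
        lines.append(json.dumps(record))
    return "\n".join(lines) + "\n"


# Hypothetical domain-specific examples for a MuleSoft help bot.
examples = [
    {"prompt": "What does the HTTP Listener do in a Mule flow?\n\n###\n\n",
     "completion": " It exposes an HTTP endpoint that triggers the flow.\n"},
    {"prompt": "Which language does the Transform Message component use?\n\n###\n\n",
     "completion": " DataWeave.\n"},
]

print(to_jsonl(examples), end="")
```

The resulting text would typically be saved to a `.jsonl` file and uploaded as the training file for a fine-tuning job.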

MuleSoft

MuleSoft is a company that provides integration software for connecting applications, data, and devices. Its products include a platform for building APIs and integrations, as well as tools for managing and deploying them. MuleSoft's products are designed to help organizations connect their systems and data so that they can share information and automate business processes more easily.

Steps To Call the GPT-3 Model API From MuleSoft

- Account: Create an OpenAI account using your email address, or continue with a Google or Microsoft account:

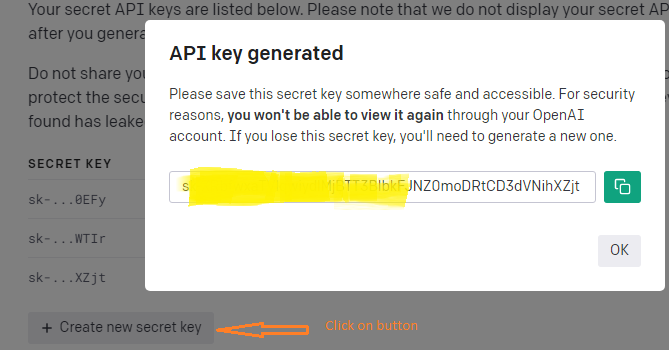

- Authentication: The OpenAI API uses API keys for authentication. Open the API Keys page of your account and generate a new secret key.

Do not share your API key with others or expose it in the browser or other client-side code. To protect the security of your account, OpenAI may also automatically rotate any API key that it finds has leaked publicly. (Source: OpenAI documentation)
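One way to follow this advice in code is to read the key from an environment variable instead of hardcoding it. The sketch below builds the same two headers the Mule flow in this article sends; the variable name `OPENAI_API_KEY` matches the one OpenAI's own libraries read, while the `openai_headers` helper is a hypothetical name used for illustration.

```python
import os


def openai_headers(env_var: str = "OPENAI_API_KEY") -> dict:
    """Build request headers, reading the secret key from the environment."""
    api_key = os.environ.get(env_var, "").strip()
    if not api_key:
        # Fail loudly rather than sending an unauthenticated request.
        raise RuntimeError(f"Set the {env_var} environment variable to your OpenAI API key")
    return {
        "Authorization": f"Bearer {api_key}",  # bearer-token authentication
        "Content-Type": "application/json",
    }
```

Keeping the key out of source files means it never ends up in version control or in a client-side bundle.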

The OpenAI API is powered by a family of models with different capabilities and price points.

- GPT-3 is offered as four models that trade capability against speed and cost:

| Model | Description | Max request (tokens) | Training data (up to) |
|---|---|---|---|
| text-davinci-003 | Most capable GPT-3 model. Can perform any task the other models can, often with higher quality, longer output, and better instruction-following. | 4000 | Jun 2021 |
| text-curie-001 | Very capable, but faster and lower cost than Davinci. | 2048 | Jun 2019 |
| text-babbage-001 | Very fast and lower cost; performs straightforward tasks. | 2048 | Jul 2019 |
| text-ada-001 | Performs simple tasks. | 2048 | Aug 2019 |
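The max request size is a budget shared by the prompt and the completion: the prompt's token count plus `max_tokens` must not exceed it. The sketch below encodes the limits from the table and checks a request against them. It assumes the prompt's token count is already known (counting tokens needs a tokenizer, which is out of scope here), and the helper names are hypothetical.

```python
# Max request sizes (prompt + completion tokens) as listed in the table above.
MAX_REQUEST_TOKENS = {
    "text-davinci-003": 4000,
    "text-curie-001": 2048,
    "text-babbage-001": 2048,
    "text-ada-001": 2048,
}


def max_completion_tokens(model: str, prompt_tokens: int) -> int:
    """Largest max_tokens value that still fits alongside the prompt."""
    if model not in MAX_REQUEST_TOKENS:
        raise ValueError(f"unknown model: {model}")
    return max(MAX_REQUEST_TOKENS[model] - prompt_tokens, 0)


def fits(model: str, prompt_tokens: int, max_tokens: int) -> bool:
    """True if prompt tokens plus max_tokens stay within the model's limit."""
    return max_tokens <= max_completion_tokens(model, prompt_tokens)
```

For example, a 12-token prompt to `text-davinci-003` leaves room for up to 3988 completion tokens, while a 2000-token prompt to `text-ada-001` leaves only 48.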

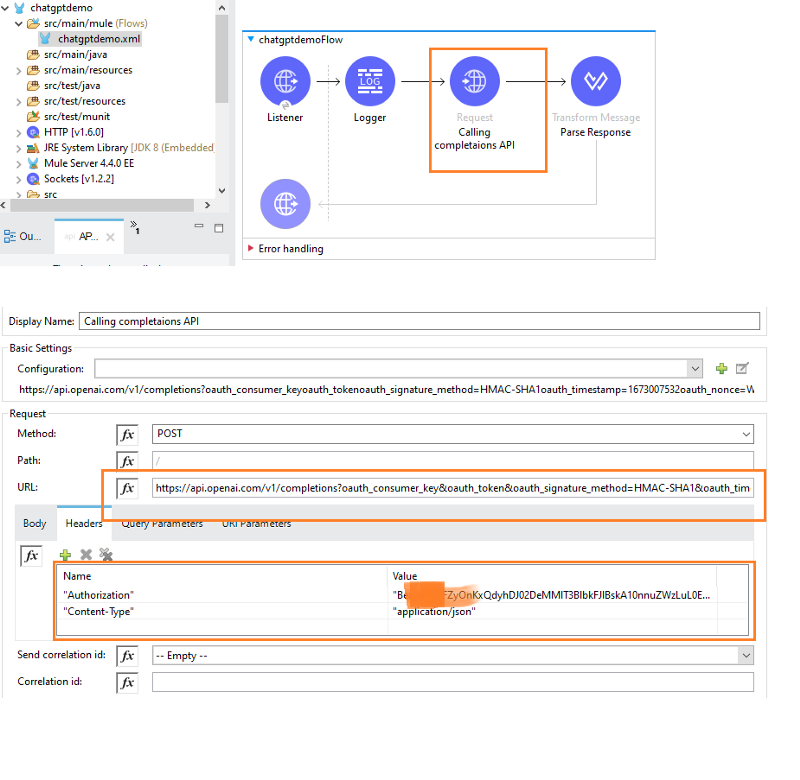

- Code snippet to call the GPT-3 model (OpenAI API) from a Mule application:

<?xml version="1.0" encoding="UTF-8"?>

<mule xmlns:ee="http://www.mulesoft.org/schema/mule/ee/core" xmlns:http="http://www.mulesoft.org/schema/mule/http"

xmlns="http://www.mulesoft.org/schema/mule/core"

xmlns:doc="http://www.mulesoft.org/schema/mule/documentation" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://www.mulesoft.org/schema/mule/core http://www.mulesoft.org/schema/mule/core/current/mule.xsd

http://www.mulesoft.org/schema/mule/http http://www.mulesoft.org/schema/mule/http/current/mule-http.xsd

http://www.mulesoft.org/schema/mule/ee/core http://www.mulesoft.org/schema/mule/ee/core/current/mule-ee.xsd">

<http:listener-config name="HTTP_Listener_config" doc:name="HTTP Listener config" doc:id="e5a1354b-1cf2-4963-a89f-36d035c95045" >

<http:listener-connection host="0.0.0.0" port="8091" />

</http:listener-config>

<flow name="chatgptdemoFlow" doc:id="b5747310-6c6d-4e1a-8bab-6fdfb1d6db3d" >

<http:listener doc:name="Listener" doc:id="1819cccd-1751-4e9e-8e71-92a7c187ad8c" config-ref="HTTP_Listener_config" path="completions"/>

<logger level="INFO" doc:name="Logger" doc:id="3049e8f0-bbbb-484f-bf19-ab4eb4d83cba" message="Calling completaion API of openAI"/>

<http:request method="POST" doc:name="Calling completaions API" doc:id="390d1af1-de73-4640-b92c-4eaed6ff70d4" url="https://api.openai.com/v1/completions?oauth_consumer_key&oauth_token&oauth_signature_method=HMAC-SHA1&oauth_timestamp=1673007532&oauth_nonce=WKkU9q&oauth_version=1.0&oauth_signature=RXuuOb4jqCef9sRbTmhSfRwXg4I=">

<http:headers ><![CDATA[#[output application/java

---

{

"Authorization" : "Bearer sk-***",

"Content-Type" : "application/json"

}]]]></http:headers>

</http:request>

<ee:transform doc:name="Parse Response" doc:id="061cb180-48c9-428e-87aa-f4f55a39a6f2" >

<ee:message >

<ee:set-payload ><![CDATA[%dw 2.0

import * from dw::core::Arrays

output application/json

---

(((payload.choices[0].text splitBy ".") partition ((item) -> item startsWith "\n" ))[1] ) map "$$":$]]></ee:set-payload>

</ee:message>

</ee:transform>

</flow>

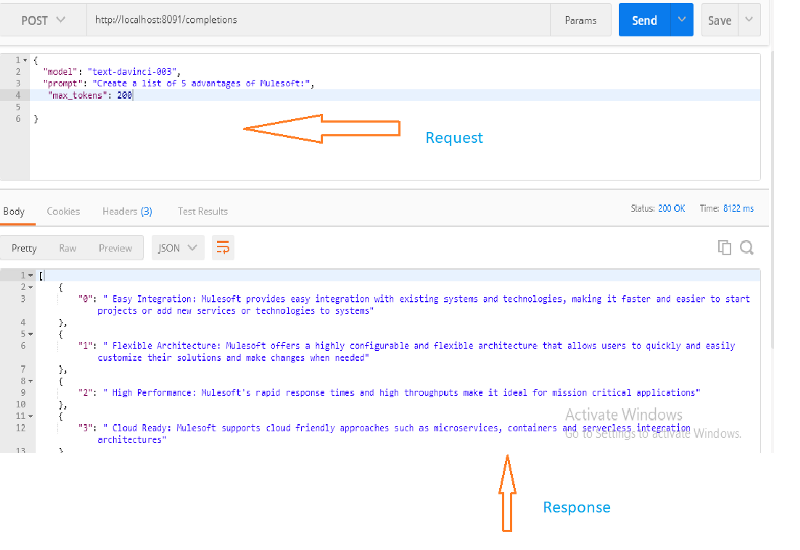

</mule>- Make a call to the Mule application through the API client. For example, I am using Postman.

- Request payload:

{
  "model": "text-davinci-003",
  "prompt": "Create a list of 5 advantages of MuleSoft:",
  "max_tokens": 150
}
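Instead of Postman, the same request payload can be sent from a short script. The sketch below only builds the request; it assumes the Mule app from this article is running locally on port 8091 with the `completions` path, and `build_request` is a hypothetical helper. No `Authorization` header is needed here because the Mule flow adds it when it forwards the call to OpenAI.

```python
import json
import urllib.request

# Assumption: the Mule application runs locally; its listener binds port 8091
# and the flow listens on the "completions" path.
MULE_URL = "http://localhost:8091/completions"


def build_request(prompt: str, model: str = "text-davinci-003",
                  max_tokens: int = 150) -> urllib.request.Request:
    """Build (but do not send) a POST with the same JSON body as the Postman call."""
    body = json.dumps({"model": model, "prompt": prompt, "max_tokens": max_tokens})
    return urllib.request.Request(
        MULE_URL,
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_request("Create a list of 5 advantages of MuleSoft:")
```

Passing `req` to `urllib.request.urlopen(req, timeout=60)` would send it and return the flow's JSON response.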

model: ID of the model to use, for example text-davinci-003. The OpenAI API is powered by a family of models with different capabilities and price points.

prompt: The prompt(s) to generate completions for, encoded as a string, array of strings, array of tokens, or array of token arrays.

max_tokens: The maximum number of tokens to generate in the completion.

For more details, refer to the API reference.
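The flow's Parse Response transform turns the completion text into a list of objects keyed by position ({"0": ...}, {"1": ...}). The sketch below is a rough Python equivalent for checking that logic outside Mule. `parse_completion` is a hypothetical name, and since Python's `str.split` may not treat empty fragments exactly like DataWeave's `splitBy`, empty fragments are dropped explicitly.

```python
def parse_completion(text: str) -> list:
    """Approximate the flow's Parse Response transform in plain Python."""
    fragments = text.split(".")
    # Numbering fragments such as "\n\n1" start with a newline; item texts do not.
    # Empty fragments (for example, the one after a final period) are dropped.
    items = [f for f in fragments if f and not f.startswith("\n")]
    # Key each item by its position, like DataWeave's map "$$": $.
    return [{str(i): item} for i, item in enumerate(items)]


sample = "\n\n1. Easy Integration: fast to start.\n\n2. Cloud Ready: supports containers."
print(parse_completion(sample))
# → [{'0': ' Easy Integration: fast to start'}, {'1': ' Cloud Ready: supports containers'}]
```

Because the split happens at every period, an item containing an abbreviation such as "e.g." would be cut into several entries; asking the model for one item per line and splitting on newlines would be a sturdier design.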

- Response payload:

[
  {
    "0": " Easy Integration: Mulesoft provides easy integration with existing systems and technologies, making it faster and easier to start projects or add new services or technologies to systems"
  },
  {
    "1": " Flexible Architecture: Mulesoft offers a highly configurable and flexible architecture that allows users to quickly and easily customize their solutions and make changes when needed"
  },
  {
    "2": " High Performance: Mulesoft's rapid response times and high throughputs make it ideal for mission critical applications"
  },
  {
    "3": " Cloud Ready: Mulesoft supports cloud friendly approaches such as microservices, containers and serverless integration architectures"
  },
  {
    "4": " Efficient Development Cycles: The Mulesoft Anypoint platform includes a range of tools and services that speed up and streamline development cycles, helping to reduce the time and effort associated with creating applications"
  }
]

Where GPT-3 Could Potentially Be Used

Content Creation

The API can be used to generate written material and to translate text from one language to another.

Software Code Generation

The API can be used to generate software code and to explain or simplify complicated code so that new developers can understand it more easily.

Sentiment Analysis

The API can be used to determine the sentiment of text, allowing businesses and organizations to understand the public's perception of their products, services, or brand.
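As an illustration of sentiment analysis with the completions API, the sketch below builds a request body that asks the model for a one-word label and normalizes the answer. The prompt wording, the label set, and the helper names are hypothetical choices for this example; `temperature` is a standard completions parameter, set to 0 here to make the answer as repeatable as possible.

```python
import json

LABELS = ("positive", "negative", "neutral")


def sentiment_request(text: str, model: str = "text-davinci-003") -> str:
    """Build a completions request body asking for a one-word sentiment label."""
    prompt = (
        "Classify the sentiment of the following text as Positive, Negative, or Neutral.\n\n"
        f"Text: {text}\nSentiment:"
    )
    # A one-word answer needs only a few tokens; temperature 0 reduces randomness.
    return json.dumps({"model": model, "prompt": prompt, "max_tokens": 3, "temperature": 0})


def parse_sentiment(completion_text: str) -> str:
    """Map the model's answer to a known label, or 'unknown' if it is unexpected."""
    words = completion_text.strip().split()
    if not words:
        return "unknown"
    label = words[0].strip(".").lower()
    return label if label in LABELS else "unknown"
```

Mapping anything unexpected to `unknown` keeps downstream reporting from silently miscounting when the model answers in an unexpected form.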

Complex Computation

The API can assist with processing large volumes of text, but results that involve complex calculations should be verified, because language models can make arithmetic mistakes.

Limitations

Like all natural language processing (NLP) systems, GPT-3 has limitations in its ability to understand and respond to user inputs. Here are a few potential limitations of GPT-3:

- Reliability: Chatbots may not always produce acceptable outputs, and determining the cause can be difficult. Complex queries may not be understood or answered appropriately.

- Interpretability: The chatbot may not recognize all variations of user input, leading to misunderstandings or inappropriate responses if not trained on diverse data.

- Accuracy: As a machine learning model, the chatbot can make mistakes, so regular review and testing are needed to ensure it works properly.

- Efficiency: Although GPT-3's test-time sample efficiency is closer to that of humans (it can pick up a task from a few examples in the prompt), its pre-training still requires far more text than a human sees in a lifetime.

Overall, GPT-3 is a useful tool that can improve communication and productivity, but it is important to be aware of its limitations and use it appropriately.

Conclusion

As chatbots continue to advance, they have the potential to significantly improve how we communicate and interact with technology. This is just an example of the various innovative approaches that are being developed to enhance chatbot performance and capabilities.

As we continue to explore the potential of GPT-3 and other language generation tools, it’s important that we remain authentic and responsible in our use of these tools. This means being transparent about their role in the writing process and ensuring that the resulting text is of high quality and accuracy.

