6

Kubernetes 1.25.4数据平面自带nginx负载均衡实现高可用 - 大雨转暴雨

source link: https://www.cnblogs.com/wdy001/p/16908711.html

Go to the source link to view the article. You can view the picture content, updated content and better typesetting reading experience. If the link is broken, please click the button below to view the snapshot at that time.

Kubernetes 1.25.4数据平面自带nginx负载均衡实现高可用

1、环境准备

要点: 1、使用一个FQDN统一作为API Server的接入点; 2、加入集群之前,每个节点都将该FQDN解析至第一个Master; 3、加入集群之后,每个Master节点将该FQDN都解析至自身的IP地址; 4、加入集群之后,在Worker上配置nginx以对API Server进行代理,并将该FQDN解析至自身的IP地址;

1-1、主机清单

| 主机名 | IP地址 | 系统版本 |

|---|---|---|

| k8s-master01 k8s-master01.wang.org kubeapi.wang.org kubeapi | 10.0.0.101 | Ubuntu2004 |

| k8s-master02 k8s-master02.wang.org | 10.0.0.102 | Ubuntu2004 |

| k8s-master03 k8s-master03.wang.org | 10.0.0.103 | Ubuntu2004 |

| k8s-node01 k8s-node01.wang.org | 10.0.0.104 | Ubuntu2004 |

| k8s-node02 k8s-node02.wang.org | 10.0.0.105 | Ubuntu2004 |

1-1、设置主机名

#所有节点执行:

[root@ubuntu2004 ~]#hostnamectl set-hostname k8s-master011-2、关闭防火墙

#所有节点执行:

[root@k8s-master01 ~]# ufw disable

[root@k8s-master01 ~]# ufw status1-3、时间同步

#所有节点执行:

[root@k8s-master01 ~]# apt install -y chrony

[root@k8s-master01 ~]# systemctl restart chrony

[root@k8s-master01 ~]# systemctl status chrony

[root@k8s-master01 ~]# chronyc sources1-4、主机名互相解析

#所有节点执行:

[root@k8s-master01 ~]#vim /etc/hosts

10.0.0.101 k8s-master01 k8s-master01.wang.org kubeapi.wang.org kubeapi

10.0.0.102 k8s-master02 k8s-master02.wang.org

10.0.0.103 k8s-master03 k8s-master03.wang.org

10.0.0.104 k8s-node01 k8s-node01.wang.org

10.0.0.105 k8s-node02 k8s-node02.wang.org1-5、禁用swap

#所有节点执行:

[root@k8s-master01 ~]# sed -r -i '/\/swap/s@^@#@' /etc/fstab

[root@k8s-master01 ~]# swapoff -a

[root@k8s-master01 ~]# systemctl --type swap

#若不禁用Swap设备,需要在后续编辑kubelet的配置文件/etc/default/kubelet,设置其忽略Swap启用的状态错误,内容:KUBELET_EXTRA_ARGS="--fail-swap-on=false"2、安装docker

#所有节点执行:

#安装必要的一些系统工具

[root@k8s-master01 ~]# apt update

[root@k8s-master01 ~]# apt -y install apt-transport-https ca-certificates curl software-properties-common

#安装GPG证书

[root@k8s-master01 ~]# curl -fsSL http://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | apt-key add -

OK

#写入软件源信息

[root@k8s-master01 ~]# add-apt-repository "deb [arch=amd64] http://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable"

#更新并安装Docker-CE

[root@k8s-master01 ~]# apt update

[root@k8s-master01 ~]# apt install -y docker-ce #所有节点执行:

kubelet需要让docker容器引擎使用systemd作为CGroup的驱动,其默认值为cgroupfs,因而,我们还需要编辑docker的配置文件/etc/docker/daemon.json,添加如下内容,其中的registry-mirrors用于指明使用的镜像加速服务。

[root@k8s-master01 ~]# mkdir /etc/docker #如果已存在,请忽略此步

[root@k8s-master01 ~]# vim /etc/docker/daemon.json

{

"registry-mirrors": [

"https://docker.mirrors.ustc.edu.cn",

"https://hub-mirror.c.163.com",

"https://reg-mirror.qiniu.com",

"https://registry.docker-cn.com",

"https://pgavrk5n.mirror.aliyuncs.com"

],

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "200m"

},

"storage-driver": "overlay2"

}

[root@k8s-master01 ~]#systemctl daemon-reload && systemctl enable --now docker && docker version

Client: Docker Engine - Community

Version: 20.10.21

#注:kubeadm部署Kubernetes集群的过程中,默认使用Google的Registry服务k8s.gcr.io上的镜像,由于2022年仓库已经改为registry.k8s.io,国内可以直接访问,所以现在不需要镜像加速或者绿色上网就可以拉镜像了,如果使用国内镜像请参考https://blog.51cto.com/dayu/58113073、安装cri-dockerd

#所有节点执行:

#下载地址:https://github.com/Mirantis/cri-dockerd

[root@k8s-master01 ~]# apt install ./cri-dockerd_0.2.6.3-0.ubuntu-focal_amd64.deb -y

#完成安装后,相应的服务cri-dockerd.service便会自动启动

[root@k8s-master01 ~]#systemctl restart cri-docker.service && systemctl status cri-docker.service4、安装kubeadm、kubelet和kubectl

#所有节点执行:

#在各主机上生成kubelet和kubeadm等相关程序包的仓库,可参考阿里云官网

[root@k8s-master01 ~]# apt update

[root@k8s-master01 ~]# apt install -y apt-transport-https curl

[root@k8s-master01 ~]# curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -

[root@k8s-master01 ~]#cat <<EOF >/etc/apt/sources.list.d/kubernetes.list

deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main

EOF

#更新仓库并安装

[root@k8s-master01 ~]# apt update

[root@k8s-master01 ~]# apt install -y kubelet kubeadm kubectl

#注意:先不要启动,只是设置开机自启动

[root@k8s-master01 ~]# systemctl enable kubelet

#确定kubeadm等程序文件的版本

[root@k8s-master01 ~]# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"25", GitVersion:"v1.25.4", GitCommit:"872a965c6c6526caa949f0c6ac028ef7aff3fb78", GitTreeState:"clean", BuildDate:"2022-11-09T13:35:06Z", GoVersion:"go1.19.3", Compiler:"gc", Platform:"linux/amd64"}5、整合kubelet和cri-dockerd

5-1、配置cri-dockerd

#所有节点执行:

[root@k8s-master01 ~]# vim /usr/lib/systemd/system/cri-docker.service

#ExecStart=/usr/bin/cri-dockerd --container-runtime-endpoint fd://

ExecStart=/usr/bin/cri-dockerd --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.8 --container-runtime-endpoint fd:// --network-plugin=cni --cni-bin-dir=/opt/cni/bin --cni-cache-dir=/var/lib/cni/cache --cni-conf-dir=/etc/cni/net.d

#说明:

需要添加的各配置参数(各参数的值要与系统部署的CNI插件的实际路径相对应):

--network-plugin:指定网络插件规范的类型,这里要使用CNI;

--cni-bin-dir:指定CNI插件二进制程序文件的搜索目录;

--cni-cache-dir:CNI插件使用的缓存目录;

--cni-conf-dir:CNI插件加载配置文件的目录;

配置完成后,重载并重启cri-docker.service服务。

[root@k8s-master01 ~]# systemctl daemon-reload && systemctl restart cri-docker.service

[root@k8s-master01 ~]# systemctl status cri-docker5-2、配置kubelet

#所有节点执行:

#配置kubelet,为其指定cri-dockerd在本地打开的Unix Sock文件的路径,该路径一般默认为“/run/cri-dockerd.sock“

[root@k8s-master01 ~]# mkdir /etc/sysconfig

[root@k8s-master01 ~]# vim /etc/sysconfig/kubelet

KUBELET_KUBEADM_ARGS="--container-runtime=remote --container-runtime-endpoint=/run/cri-dockerd.sock"

[root@k8s-master01 ~]# cat /etc/sysconfig/kubelet

KUBELET_KUBEADM_ARGS="--container-runtime=remote --container-runtime-endpoint=/run/cri-dockerd.sock"

#说明:该配置也可不进行,而是直接在后面的各kubeadm命令上使用“--cri-socket unix:///run/cri-dockerd.sock”选项6、初始化第一个主节点

#第一个主节点执行:

#列出k8s所需要的镜像

[root@k8s-master01 ~]#kubeadm config images list

registry.k8s.io/kube-apiserver:v1.25.4

registry.k8s.io/kube-controller-manager:v1.25.4

registry.k8s.io/kube-scheduler:v1.25.4

registry.k8s.io/kube-proxy:v1.25.4

registry.k8s.io/pause:3.8

registry.k8s.io/etcd:3.5.5-0

registry.k8s.io/coredns/coredns:v1.9.3

#使用阿里云拉取所需镜像

[root@k8s-master01 ~]#kubeadm config images pull --image-repository=registry.aliyuncs.com/google_containers --cri-socket unix:///run/cri-dockerd.sock

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-apiserver:v1.25.4

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-controller-manager:v1.25.4

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-scheduler:v1.25.4

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-proxy:v1.25.4

[config/images] Pulled registry.aliyuncs.com/google_containers/pause:3.8

[config/images] Pulled registry.aliyuncs.com/google_containers/etcd:3.5.5-0

[config/images] Pulled registry.aliyuncs.com/google_containers/coredns:v1.9.3

#kubeadm可通过配置文件加载配置,以定制更丰富的部署选项。获取内置的初始配置文件的命令

kubeadm config print init-defaults

[root@k8s-master01 ~]#vim kubeadm-config.yaml

apiVersion: kubeadm.k8s.io/v1beta3

bootstrapTokens:

kind: InitConfiguration

localAPIEndpoint:

# 这里的地址即为初始化的控制平面第一个节点的IP地址;

advertiseAddress: 10.0.0.101

bindPort: 6443

nodeRegistration:

criSocket: unix:///run/cri-dockerd.sock

imagePullPolicy: IfNotPresent

# 第一个控制平面节点的主机名称;

name: k8s-master01.wang.org

taints:

- effect: NoSchedule

key: node-role.kubernetes.io/master

- effect: NoSchedule

key: node-role.kubernetes.io/control-plane

---

apiServer:

timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta3

# 控制平面的接入端点,我们这里选择适配到kubeapi.wang.com这一域名上;

controlPlaneEndpoint: "kubeapi.wang.org:6443"

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controllerManager: {}

dns: {}

etcd:

local:

dataDir: /var/lib/etcd

imageRepository: registry.aliyuncs.com/google_containers

kind: ClusterConfiguration

kubernetesVersion: v1.25.4

networking:

dnsDomain: cluster.local

serviceSubnet: 10.96.0.0/12

podSubnet: 10.244.0.0/16

scheduler: {}

---

apiVersion: kubeproxy.config.k8s.io/v1alpha1

kind: KubeProxyConfiguration

# 用于配置kube-proxy上为Service指定的代理模式,默认为iptables;

mode: "ipvs"

[root@k8s-master01 ~]#kubeadm init --config kubeadm-config.yaml --upload-certs

#如提示以下信息,代表初始化完成,请记录信息,以便后续使用:

.....

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join kubeapi.wang.org:6443 --token c9r0oz.nfw6c83xm07hzwy6 \

--discovery-token-ca-cert-hash sha256:5a46d743466eac029eafae4a8204c769a7867c1e64d144f22a769c55e09da3bd \

--control-plane --certificate-key 900b6459a376f9000c49af401bcd12e70e55d3154aa7b71f04e891c914cf661c

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join kubeapi.wang.org:6443 --token c9r0oz.nfw6c83xm07hzwy6 \

--discovery-token-ca-cert-hash sha256:5a46d743466eac029eafae4a8204c769a7867c1e64d144f22a769c55e09da3bd

[root@k8s-master01 ~]# mkdir -p $HOME/.kube

[root@k8s-master01 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s-master01 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

7、部署网络插件flanner

#所有节点执行:

#下载链接:

https://github.com/flannel-io/flannel/releases

[root@k8s-master01 ~]# mkdir /opt/bin

[root@k8s-master01 ~]# cp flanneld-amd64 /opt/bin/flanneld

[root@k8s-master01 ~]# chmod +x /opt/bin/flanneld

[root@k8s-master01 ~]# ll /opt/bin/flanneld

-rwxr-xr-x 1 root root 39358256 11月 19 20:41 /opt/bin/flanneld*#第一个主节点执行:

#部署kube-flannel

[root@k8s-master01 ~]#kubectl apply -f https://raw.githubusercontent.com/flannel-io/flannel/master/Documentation/kube-flannel.yml

namespace/kube-flannel created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

#确认Pod的状态为“Running”

[root@k8s-master01 ~]#kubectl get pods -n kube-flannel

NAME READY STATUS RESTARTS AGE

kube-flannel-ds-7hqhk 1/1 Running 0 119s

#此时,k8s-master01已经就绪

[root@k8s-master01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01 Ready control-plane 20m v1.25.38、添加其他节点到集群中

#k8s-master02和k8s-master03执行:

#k8s-master02和k8s-master03加入集群

[root@k8s-master02 ~]#kubeadm join kubeapi.wang.org:6443 --token c9r0oz.nfw6c83xm07hzwy6 --discovery-token-ca-cert-hash sha256:5a46d743466eac029eafae4a8204c769a7867c1e64d144f22a769c55e09da3bd --control-plane --certificate-key 900b6459a376f9000c49af401bcd12e70e55d3154aa7b71f04e891c914cf661c --cri-socket unix:///run/cri-dockerd.sock

#注意,命令需要加上--cri-socket unix:///run/cri-dockerd.sock

#注意:token要复制对,要不然会报以下错误(本人因为复制少了一个字母,折腾了一个多小时):

Downloading the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

error execution phase control-plane-prepare/download-certs: error downloading certs: error decoding certificate key: encoding/hex: odd length hex string

To see the stack trace of this error execute with --v=5 or higher

# 使master02、master03也能管理集群:

[root@k8s-master02 ~]#mkdir -p $HOME/.kube

[root@k8s-master02 ~]#sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s-master02 ~]#sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@k8s-master02 ~]#kubectl config view

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: DATA+OMITTED

server: https://kubeapi.wang.org:6443

name: kubernetes

contexts:

- context:

cluster: kubernetes

user: kubernetes-admin

name: kubernetes-admin@kubernetes

current-context: kubernetes-admin@kubernetes

kind: Config

preferences: {}

users:

- name: kubernetes-admin

user:

client-certificate-data: REDACTED

client-key-data: REDACTED

#此时master02和master03已经可以管理集群了,但是是通过kubeapi.wang.org这个域名连接到101管理的,为了让master02和master03连接本机管理集群,所以需要修改hosts把域名指向本机(因为没有配置单独的dns和vip)

[root@k8s-master02 ~]#vim /etc/hosts

10.0.0.101 k8s-master01 k8s-master01.wang.org

10.0.0.102 k8s-master02 k8s-master02.wang.org kubeapi.wang.org kubeapi

10.0.0.103 k8s-master03 k8s-master03.wang.org#k8s-node01、k8s-node02执行

#node节点加入集群

[root@k8s-node01 ~]#kubeadm join kubeapi.wang.org:6443 --token c9r0oz.nfw6c83xm07hzwy6 --discovery-token-ca-cert-hash sha256:5a46d743466eac029eafae4a8204c769a7867c1e64d144f22a769c55e09da3bd --cri-socket unix:///run/cri-dockerd.sock

#注意,命令需要加上--cri-socket unix:///run/cri-dockerd.sock#master01节点验证:

[root@k8s-master01 ~]#kubectl get pods -n kube-flannel -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NO

kube-flannel-ds-92npq 1/1 Running 0 10m 10.0.0.103 k8s-master03 <none>

kube-flannel-ds-g9ch7 1/1 Running 0 5m22s 10.0.0.102 k8s-master02 <none>

kube-flannel-ds-nft4j 1/1 Running 0 10m 10.0.0.101 k8s-master01.wang.org <none>

kube-flannel-ds-t7z7k 1/1 Running 0 10m 10.0.0.104 k8s-node01 <none>

kube-flannel-ds-z9s8w 1/1 Running 0 10m 10.0.0.105 k8s-node02 <none> 9、node节点安装nginx,实现高可用

#node01、node02执行:

#数据平面使用nginx自带负载均衡:

[root@k8s-node01 ~]#apt install -y nginx

[root@k8s-node01 ~]#vim /etc/nginx/nginx.conf

#注意stream 适合http同级别

......

stream {

upstream apiservers {

server k8s-master01.wang.org:6443 max_fails=2 fail_timeout=30s;

server k8s-master02.wang.org:6443 max_fails=2 fail_timeout=30s;

server k8s-master03.wang.org:6443 max_fails=2 fail_timeout=30s;

}

server {

listen 6443;

proxy_pass apiservers;

}

}

......

[root@k8s-node01 ~]#nginx -t

[root@k8s-node01 ~]#nginx -s reload

[root@k8s-node01 ~]#vim /etc/hosts

10.0.0.104 k8s-node01 k8s-node01.wang.org kubeapi.wang.org kubeapi #node02解析kubeapi域名为自己

[root@k8s-node01 ~]#kubectl config view --kubeconfig=/etc/kubernetes/kubelet.conf

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: DATA+OMITTED

server: https://kubeapi.wang.org:6443

name: default-cluster

contexts:

- context:

cluster: default-cluster

namespace: default

user: default-auth

name: default-context

current-context: default-context

kind: Config

preferences: {}

users:

- name: default-auth

user:

client-certificate: /var/lib/kubelet/pki/kubelet-client-current.pem

client-key: /var/lib/kubelet/pki/kubelet-client-current.pem10、测试

[root@k8s-master01 ~]#kubectl create deployment demoapp --image=ikubernetes/demoapp:v1.0 --replicas=2

[root@k8s-master01 ~]#kubectl get pods -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

demoapp-55c5f88dcb-bvfds 0/1 ContainerCreating 0 11s <none> k8s-node02 <none> <none>

demoapp-55c5f88dcb-sv744 0/1 ContainerCreating 0 11s <none> k8s-node01 <none> <none>

[root@k8s-master01 ~]#kubectl get pods -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

demoapp-55c5f88dcb-bvfds 1/1 Running 0 84s 10.244.4.2 k8s-node02 <none> <none>

demoapp-55c5f88dcb-sv744 1/1 Running 0 84s 10.244.3.2 k8s-node01 <none> <none>

[root@k8s-master01 ~]#kubectl create svc nodeport demoapp --tcp=80:80

[root@k8s-master01 ~]#kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

demoapp NodePort 10.99.247.102 <none> 80:32606/TCP 4s

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 52m

[root@k8s-master01 ~]#kubectl get ep

NAME ENDPOINTS AGE

demoapp 10.244.3.2:80,10.244.4.2:80 12s

kubernetes 10.0.0.101:6443,10.0.0.102:6443,10.0.0.103:6443 52m

[root@k8s-master01 ~]#curl 10.99.247.102

iKubernetes demoapp v1.0 !! ClientIP: 10.244.0.0, ServerName: demoapp-55c5f88dcb-bvfds, ServerIP: 10.244.4.2!

[root@k8s-master01 ~]#curl 10.99.247.102

iKubernetes demoapp v1.0 !! ClientIP: 10.244.0.0, ServerName: demoapp-55c5f88dcb-sv744, ServerIP: 10.244.3.2!

[root@k8s-master01 ~]#curl 10.99.247.102

iKubernetes demoapp v1.0 !! ClientIP: 10.244.0.0, ServerName: demoapp-55c5f88dcb-bvfds, ServerIP: 10.244.4.2!

[root@k8s-master01 ~]#curl 10.99.247.102

iKubernetes demoapp v1.0 !! ClientIP: 10.244.0.0, ServerName: demoapp-55c5f88dcb-sv744, ServerIP: 10.244.3.2!11、命令补全

[root@k8s-master01 ~]#apt install -y bash-completion

[root@k8s-master01 ~]#source /usr/share/bash-completion/bash_completion

[root@k8s-master01 ~]#source <(kubectl completion bash)

[root@k8s-master01 ~]#echo "source <(kubectl completion bash)" >> ~/.bashrc12、部署cs动态置备

[root@k8s-master01 ~]#apt install nfs-server nfs-common

[root@k8s-master01 ~]#vim /etc/exports

/data/test 10.0.0.0/24(rw,no_subtree_check,no_root_squash)

[root@k8s-master01 ~]#mkdir /data/test -p

[root@k8s-master01 ~]#exportfs -ar

[root@k8s-master01 ~]#showmount -e 10.0.0.101

===============================================

[root@k8s-master01 ~]#kubectl create ns nfs

namespace/nfs created

[root@k8s-master01 ~]#kubectl get ns

NAME STATUS AGE

default Active 66m

kube-flannel Active 59m

kube-node-lease Active 66m

kube-public Active 66m

kube-system Active 66m

nfs Active 8s

[root@k8s-master01 ~]#kubectl create -f https://raw.githubusercontent.com/kubernetes-csi/csi-driver-nfs/master/deploy/example/nfs-provisioner/nfs-server.yaml -n nfs

[root@k8s-master01 ~]#kubectl get pod -n nfs

NAME READY STATUS RESTARTS AGE

nfs-server-5847b99d99-56fhg 1/1 Running 0 28s

[root@k8s-master01 ~]#curl -skSL https://raw.githubusercontent.com/kubernetes-csi/csi-driver-nfs/v3.1.0/deploy/install-driver.sh | bash -s v3.1.0 --

[root@k8s-master01 ~]#kubectl -n kube-system get pod -o wide -l app=csi-nfs-controller

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

csi-nfs-controller-65cf7d587-hfwk2 3/3 Running 0 2m11s 10.0.0.105 k8s-node02 <none> <none>

csi-nfs-controller-65cf7d587-n6hmk 3/3 Running 0 2m11s 10.0.0.104 k8s-node01 <none> <none>

[root@k8s-master01 ~]#kubectl -n kube-system get pod -o wide -l app=csi-nfs-node

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

csi-nfs-node-2mwfc 3/3 Running 0 2m23s 10.0.0.104 k8s-node01 <none> <none>

csi-nfs-node-9c2j4 3/3 Running 0 2m23s 10.0.0.102 k8s-master02 <none> <none>

csi-nfs-node-c4fll 3/3 Running 0 2m23s 10.0.0.103 k8s-master03 <none> <none>

csi-nfs-node-k2zcv 3/3 Running 0 2m23s 10.0.0.105 k8s-node02 <none> <none>

csi-nfs-node-vq2pv 3/3 Running 0 2m23s 10.0.0.101 k8s-master01.wang.org <none> <none>

[root@k8s-master01 ~]#vim nfs-csi-cs.yaml

apiVersion: storage.k8s.io/v1

kind: StorageClass

metadata:

name: nfs-csi

provisioner: nfs.csi.k8s.io

parameters:

# server: nfs-server.nfs.svc.cluster.local

server: 10.0.0.101 #此处用的是真实nfs,使用官网配置pvc无法bound,一直在pending状态

share: /data/test

# csi.storage.k8s.io/provisioner-secret is only needed for providing mountOptions in DeleteVolume

# csi.storage.k8s.io/provisioner-secret-name: "mount-options"

# csi.storage.k8s.io/provisioner-secret-namespace: "default"

#reclaimPolicy: Delete

reclaimPolicy: Retain

volumeBindingMode: Immediate

mountOptions:

- hard

- nfsvers=4.1

[root@k8s-master01 ~]#kubectl apply -f nfs-csi-cs.yaml

[root@k8s-master01 ~]#kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs-csi nfs.csi.k8s.io Retain Immediate false 3s

[root@k8s-master01 ~]#vim pvc-nfs-csi-dynamic.yaml

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: pvc-nfs-dynamic

spec:

accessModes:

- ReadWriteMany

resources:

requests:

storage: 1Gi

storageClassName: nfs-csi

[root@k8s-master01 ~]#kubectl apply -f pvc-nfs-csi-dynamic.yaml

[root@k8s-master01 ~]#kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

pvc-nfs-dynamic Bound pvc-a693d483-8fc6-4e91-8779-6d0097b2e075 1Gi RWX nfs-csi 5s13、部署ingress

[root@k8s-master01 ~]#kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.5.1/deploy/static/provider/cloud/deploy.yaml

[root@k8s-master01 ~]#kubectl get pod -n ingress-nginx

NAME READY STATUS RESTARTS AGE

ingress-nginx-admission-create-cv8zl 0/1 Completed 0 105s

ingress-nginx-admission-patch-nmjpg 0/1 Completed 0 105s

ingress-nginx-controller-8574b6d7c9-hxxj6 1/1 Running 0 105s

[root@k8s-master01 ~]#kubectl get all -n ingress-nginx

NAME READY STATUS RESTARTS AGE

pod/ingress-nginx-admission-create-cv8zl 0/1 Completed 0 7m5s

pod/ingress-nginx-admission-patch-nmjpg 0/1 Completed 0 7m5s

pod/ingress-nginx-controller-8574b6d7c9-hxxj6 1/1 Running 0 7m5s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/ingress-nginx-controller LoadBalancer 10.99.251.134 <pending> 80:30418/TCP,443:31389/TCP 7m6s

service/ingress-nginx-controller-admission ClusterIP 10.103.59.126 <none> 443/TCP 7m6s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/ingress-nginx-controller 1/1 1 1 7m5s

NAME DESIRED CURRENT READY AGE

replicaset.apps/ingress-nginx-controller-8574b6d7c9 1 1 1 7m5s

NAME COMPLETIONS DURATION AGE

job.batch/ingress-nginx-admission-create 1/1 16s 7m6s

job.batch/ingress-nginx-admission-patch 1/1 17s 7m6s14、部署metric server

[root@k8s-master01 ~]#kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

[root@k8s-master01 ~]#kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

......

metrics-server-8b7cc9967-ggmxc 0/1 Running 0 9s

......

#提示:如果状态一直是running但是READY状态一直未就绪,那么就执行下列操作:

===========如果状态一直是running但是READY状态一直未就绪,那么就执行下列操作===========

[root@k8s-master01 ~]#curl -LO https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

[root@k8s-master01 ~]#vim components.yaml

......

- args:

- --cert-dir=/tmp

- --secure-port=4443

- --kubelet-preferred-address-types=InternalIP #修改

- --kubelet-use-node-status-port

- --metric-resolution=15s

- --kubelet-insecure-tls #增加

image: k8s.gcr.io/metrics-server/metrics-server:v0.6.1

......

[root@k8s-master01 ~]#kubectl apply -f components.yaml

========================================================================================

#metric就绪后就可以查询node、pod的cpu内存等信息了

[root@k8s-master01 ~]#kubectl top nodes

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

k8s-master01.wang.org 817m 40% 1113Mi 60%

k8s-master02 735m 36% 1045Mi 56%

k8s-master03 673m 33% 1027Mi 55%

k8s-node01 314m 15% 877Mi 47%

k8s-node02 260m 13% 821Mi 44%

[root@k8s-master01 ~]#kubectl top pod -n ingress-nginx

NAME CPU(cores) MEMORY(bytes)

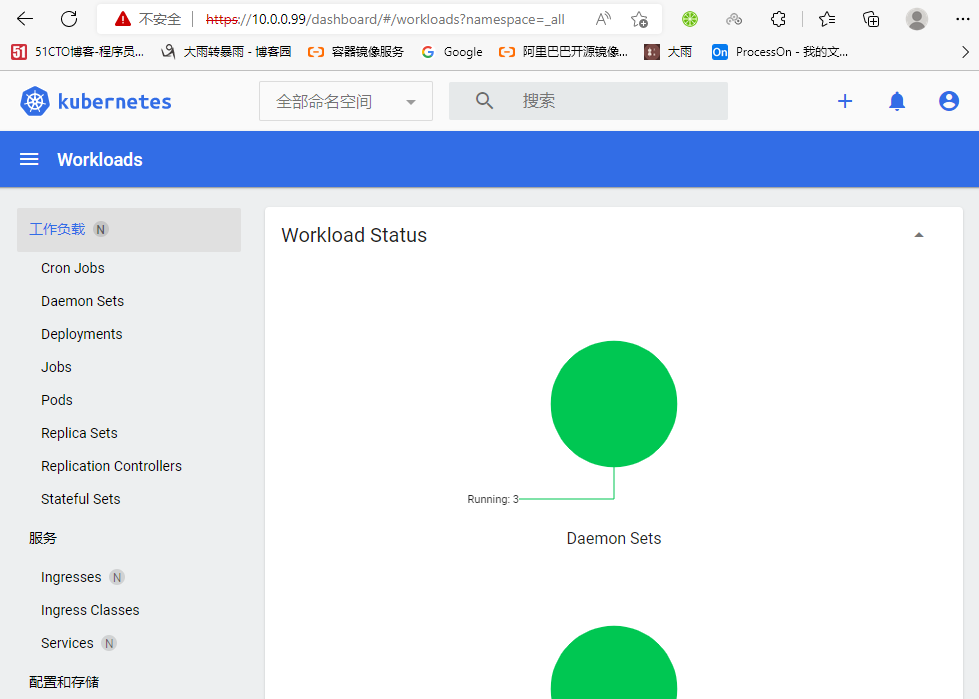

ingress-nginx-controller-8574b6d7c9-48pw6 2m 70Mi 15、部署dashboard

[root@k8s-master01 ~]#kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml

[root@k8s-master01 ~]#kubectl get ns

NAME STATUS AGE

default Active 13h

ingress-nginx Active 17m

kube-flannel Active 13h

kube-node-lease Active 13h

kube-public Active 13h

kube-system Active 13h

kubernetes-dashboard Active 9s

nfs Active 12h

[root@k8s-master01 ~]#kubectl edit svc ingress-nginx-controller -n ingress-nginx

......

externalTrafficPolicy: Cluster

externalIPs:

- 10.0.0.99

......

[root@k8s-master01 ~]#kubectl get svc -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller LoadBalancer 10.99.251.134 10.0.0.99 80:30418/TCP,443:31389/TCP 20m

ingress-nginx-controller-admission ClusterIP 10.103.59.126 <none> 443/TCP 20m

[root@k8s-master01 ~]#vim dashboard.yaml

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: dashboard

annotations:

kubernetes.io/ingress.class: "nginx"

ingress.kubernetes.io/ssl-passthrough: "true"

nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"

nginx.ingress.kubernetes.io/rewrite-target: /$2

namespace: kubernetes-dashboard

spec:

ingressClassName: nginx

rules:

- http:

paths:

- path: /dashboard(/|$)(.*)

backend:

service:

name: kubernetes-dashboard

port:

number: 443

pathType: Prefix

[root@k8s-master01 ~]#kubectl apply -f dashboard.yaml

ingress.networking.k8s.io/dashboard created

[root@k8s-master01 ~]#kubectl get ingress -n kubernetes-dashboard

NAME CLASS HOSTS ADDRESS PORTS AGE

dashboard nginx * 10.0.0.99 80 11s

#创建帐户

[root@k8s-master01 ~]#vim dashboard-admin-user.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

name: admin-user

namespace: kubernetes-dashboard

#绑定角色为集群管理员

[root@k8s-master01 ~]#vim dashboard-admin-binding.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: admin-user

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: cluster-admin

subjects:

- kind: ServiceAccount

name: admin-user

namespace: kubernetes-dashboard

#获取令牌

[root@k8s-master01 ~]#kubectl -n kubernetes-dashboard create token admin-user

eyJhbGciOiJSUzI1NiIsImtpZCI6InBVQUp4ckNnSmxyajhLR0FKQ0ZZazZjbmZpd2hoNjY0SDRPeUhnN2JiUGMifQ.eyJhdWQiOlsiaHR0cHM6Ly9rdWJlcm5ldGVzLmRlZmF1bHQuc3ZjLmNsdXN0ZXIubG9jYWwiXSwiZXhwIjoxNjY4OTMyMDAxLCJpYXQiOjE2Njg5Mjg0MDEsImlzcyI6Imh0dHBzOi8va3ViZXJuZXRlcy5kZWZhdWx0LnN2Yy5jbHVzdGVyLmxvY2FsIiwia3ViZXJuZXRlcy5pbyI6eyJuYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsInNlcnZpY2VhY2NvdW50Ijp7Im5hbWUiOiJhZG1pbi11c2VyIiwidWlkIjoiMjhhODM4NzgtM2NmZC00YmNjLWEyMzEtMDNlZDBmNWE5YzRlIn19LCJuYmYiOjE2Njg5Mjg0MDEsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDphZG1pbi11c2VyIn0.aZPqgYF-3-XYsATAaH6BNRJ8YpQNxoOZn5n-76UAUq_hEGzOUAqSGVkVyb8S1oK2XLMh24Ybf7bD-eB9h4JWoyptIcQmCaoeYlaoR-fglO5SfCDpfThPKGs1WI1TlZVi3mn92c4YlhweYX2mS60iQ4gdywMehj34nqoRqoqjCOf0AA8XGjUwDlcQEjgJJSOHp_XN7NP3t-4EZDCAbzfj5bvWEfhA8wxTULz-J0MDiJ9j8xBjQvx0M9GtQeXHmuCexg08o5IuBHVBoq5iAkN_t2AJJtC1VUMA9AVkSH1HbmMs4go6cGdrgM9kHvz7hpvQZ4Na5Gte52LFITHrvA3wiw

Recommend

About Joyk

Aggregate valuable and interesting links.

Joyk means Joy of geeK