So long and thanks for all the bits

source link: https://www.ncsc.gov.uk/blog-post/so-long-thanks-for-all-the-bits

Go to the source link to view the article. You can view the picture content, updated content and better typesetting reading experience. If the link is broken, please click the button below to view the snapshot at that time.

blog post

So long and thanks for all the bits

Ian Levy

It’s with a heavy heart that I’m announcing that I’m leaving the NCSC, GCHQ and Crown Service.

Being Technical Director of the NCSC has been the best job in the world, and truly an honour. I’ve spent more than two decades in GCHQ, with the last decade in this role (and its prior incarnations). I’ve met and worked with some of the most amazing people, including some of the brightest people on the planet, and learned an awful lot. I’ve got to give a special mention to everyone in the NCSC and wider GCHQ because they’re just awesome. And I’ve also had the pleasure of working with vendors, regulators, wider industry, academia, international partners and a whole bunch of others. I like to think I’ve done some good in this role, and I know I couldn’t have accomplished half as much without them.

Regardless, there’s a lot left to do. So, I thought I’d leave by sharing ten things I’ve learned and one idea for the future. This post is long and a bit self-indulgent, but it’s my leaving blog, so suck it up 😊

Yes! It’s a quantum state superposition joke to start us off. And who doesn’t love a bit of Dirac notation abuse?

As a community, cyber security folk are simultaneously incredibly smart and incredibly dumb. This superposition doesn’t seem to be unique to our community though.

In World War Two, the Boeing B-17 Flying Fortress was the workhorse bomber of the US. As you’d expect, a lot of them were lost during combat, but a lot were also lost when they were landing back at base. Pilots were blamed. The training was blamed. Poor maintenance was blamed. Even the quality of the runways was blamed. None of this was supported by any evidence.

After the war, someone actually did some research to try to understand the real problem. Imagine you’ve been on a stressful bombing raid for twelve hours. You’re tired. It’s dark. It’s raining and you’re on final approach to your base. You reach over to throw the switch that engages the landing gear and suddenly, with a lurch, your aircraft stalls and smashes into the ground, killing everyone.

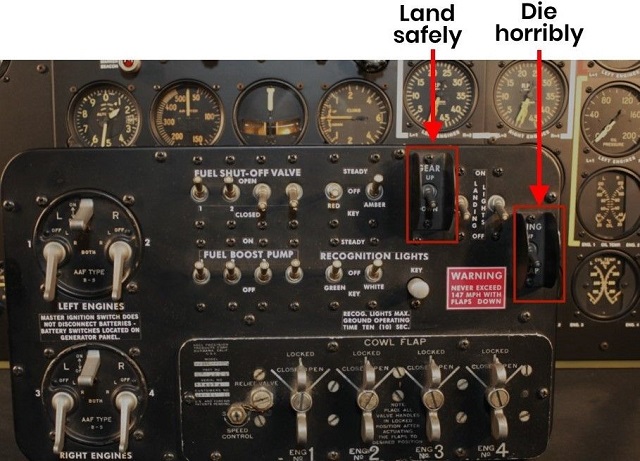

This picture of a B-17 cockpit instrument panel shows what the pilot would see:

B-17 cockpit instrument panel

There’s no amount of training in the world that could compensate for this design flaw. There’s nothing to stop the most obvious error turning into the catastrophic outcome. The ‘land safely’ switch (operates the landing gear) and the ‘die horribly’ switch (operates the flaps) are very close to each other and identical.

‘Blaming users for not being able to operate a terrible design safely.’ Sound familiar? But this investigation led to something called shape coding which persists as a design language today. Most modern aircraft have a lever to operate the landing gear and the little toggle on the end of the lever is wheel shaped. So, when you grab it, you know what you’ve got hold of. The flaps control is generally a big square knob and – guess what – they’re not next to each other. The aircraft world has learned from its mistakes.

Revised levers for landing gear, wing flaps

In cyber security, we come up with exquisite solutions to incredibly hard problems, manage risks that would make most people’s toes curl and get computers to do things nobody thought was possible (or in some cases desirable). But we also continue to place ridiculous demands on users (deep breath: must not mention clicking links in emails or password policies), implicitly expect arbitrarily complex implementations of technology to be perfect and vulnerability-free in the long term, and then berate those who build the stuff we use when they fail to properly defend themselves from everything a hostile state can throw at them.

I’ve always found this cognitive dissonance interesting, but I haven’t found a way to reliably and easily work out when we’re asking for daft things. The nearest I’ve got is to try to determine where the security burden lies and whether it’s reasonable to ask that party to shoulder it.

Is it reasonable to ask a ten-person software company to defend themselves from the Russians?

Nope.

Is it reasonable to ask a critical infrastructure company to not have their management systems connected to the internet with a password of ‘Super5ecre7P@ssw0rd!’?

Bloody right it is!

The trick for making cyber security scalable in the long term is to get the various security burdens in the right place and incentivise the entities that need to manage those burdens properly. Sometimes the obvious person to manage a burden won’t be the optimal one, so we may want to shift things around so they scale better. And let’s be honest, we do suffer a lot from groupthink in cyber security. So, here’s my plea. The cyber security community should:

- talk to people who aren’t like us and actually listen to them

- stop blaming people who don’t have our l33t skillz when something goes wrong

- build stuff that works for most people, most of the time (rather than the easy or shiny thing)

- put ourselves in the shoes of our users and ask if we’re really being sensible in our expectations

We haven’t got that right yet.

2. We’re still treating the symptoms, not the cause

This month marks the 50th anniversary of memory safety vulnerabilities. Back in October 1972, a USAF study first described a memory safety vulnerability (page 61, although the whole two-volume report is fascinating). In the 1990s, Aleph One published the seminal work ‘Smashing the Stack for Fun and Profit’ explaining how to exploit buffer overflows. In the first three months of 2022, there were about 400 memory safety vulnerabilities reported to the National Vulnerability Database (I used any of CWE-118 through CWE-131 as my approximation).

As Anderson et al say in their report, this is the result of a fundamental flaw in the design of the hardware and software we use today, the exact same fundamental flaw they identified in 1972.

And what is our response to this issue? Basically, we tell people to write better code and try harder. Sometimes we give them coping mechanisms (which we give cool-sounding names like ‘static and dynamic analysis’ or ‘taint tracking’). But in the end, we blame the programmer. That’s treating the symptoms, not the underlying cause and once again harks back to the B-17 design problem.

I think we do that because fixing the real problem is really hard.

Doing so breaks a bunch of existing stuff.

Doing so costs people money.

Doing so seems to be daft if you’re in the commercial ecosystem. This is where government can step in. Under the Digital Secure By Design programme, we’re funding the creation of a memory safe microprocessor and associated toolchain. If this works, it won’t fix the stuff we already have. But at least it’ll be possible to build systems that can’t be exploited through memory safety errors without expecting every programmer to be perfect all the time.

All of the Active Cyber Defence services are really treating symptoms, rather than underlying causes. I’m really proud of what we’ve achieved in the ACD programme and we've used it to force some systemic changes. But even that programme is about mitigating harm caused by the problems we see, rather than fixing the problems. We really need to get to the root causes and solutions to some of those really thorny issues. For example, one problem (in my opinion) is that it’s too easy to set up free hosting for your cybercrime site. There’s no friction and no risk to dissuade would-be-crims. Hosting companies aren’t incentivised to make it harder, because if one of them does it, they just lose custom to a competitor, which is commercial insanity.

Should government regulate the provision of hosting? Almost certainly not (at least in democratic countries). But there’s got to be some way of squaring this circle, and the many other apparent paradoxes that have dogged cyber security for years. I’ve just not had the chance to work out how, so someone else needs to (hint, hint).

3. Data is awesome . . .

The data we generate from the ACD services, from our incident work and other sources is awesome. It tells us lots of things and some of those things are even useful. But our data doesn’t always align with other people’s data, and not everyone is totally honest about what their data tells them, how biased it is (including selection and observation bias, which some ACD services suffer from) and so on. We have lots of data that could provide us with proxy metrics and ‘sort of’ understanding about cyber security problems. Lots of the metrics produced around cyber security are really input metrics (rather than understanding outcomes) and that’s very unsatisfactory. What we don’t have yet is a proper framework and data set for understanding what’s really important: are we actually reducing vulnerability, risk and harm by the interventions we perform?

Nearly two years ago, we started a set of ‘grand challenges’ in the NCSC and one of them was to understand the vulnerability of the UK, which is a first step in answering this really hard question. That team has been working diligently since then and we’re starting to see the beginnings of some results and an approach. We’ll start talking about that work in the coming weeks including, importantly, how we think the data should be presented to best help people make informed decisions from it.

Spoiler alert: we’re not going to describe the vulnerability or security of the UK using a single number 😊

4. . . . but there’s still not enough science

Back when we set up the NCSC, I said that we’d make cyber security more of a science. We set up the Research Institutes to bring academic rigour to some of the hard problems in cyber security and recognised the Academic Centres of Excellence for education and research to help generate a pipeline of skilled people and novel research to take things forward. And they’ve come up with some really cool stuff that has advanced the state of cyber security as a science.

In the NCSC, all the guidance and advice we put out has a rigorous evidence base and a logical argument behind it. That’s hard to do well, but we strive to do so and have a proper tech-QC process to try to make sure we give good advice. That’s really helpful when you’re publishing controversial advice, going against the accepted wisdom and orthodoxy or calling something dumb when it’s just dumb. Our rationale is published, the evidence we’ve gone on is mainly published and others can look at our arguments, logic and conclusions, and try to spot errors. That’s much more useful than shouting hyperbole from the rooftops and reminding everyone that ‘attackers are bad’, but the problem of lack of science is endemic across cyber security. Vendors make grandiose claims about their products without any supporting evidence. We have collectively got a bit better with the ‘winged ninja cyber monkey’ problem (ie, talking entirely in extremes and engendering fear) but it often pops back into discussions.

A winged ninja cyber monkey (courtesy of LEGO)

We still don’t have a way of reasoning about cyber security outcomes in a repeatable, evidence-based way across the community. I think we’ve shown that, in our little bit of the world, it is possible. The question is how we generalise that to the whole subject, so we’re not making hugely important decisions on gut feeling and instinct.

We’ve started to try to put methodologies together to help people better understand risk, including taking an existing complex risk management methodology and adapting it to cyber security. This methodology − STPA/STAMP − is used in lots of places already, so there should be support for people trying to use it for cyber security. I’m also sure that if we have more rigour behind those decisions, we’ll be able to explain them more easily to non-cyber security experts, whether that’s the CEO of a multinational company or just a person on the street trying to stay safe online.

5. “Much to learn you have, young Padawan!”

There have been a couple of big incidents in the last year or so that have rocked the cyber security world. The attack on SolarWinds, now attributed to the Russian state, was hailed by some as a heinous, unacceptable attack. The vulnerability in the widely deployed Log4J component caused mass panic (only some of which was justified) and has kicked off a load of knee-jerk reactions around understanding software supply chains that aren’t necessarily well thought through. Both of these attacks remind me of Groundhog Day.

The Log4J problem is equivalent to the problem we had with Heartbleed back in 2014, despite being a different sort of vulnerability. The community had alighted on a good solution to a problem and reused the hell out of it. But we hadn’t put the right support in place to make sure that component, OpenSSL, was developed and maintained like the security of the world depended on it. Because it did. The SolarWinds issue was a supply-chain attack (going after a supplier to get to your real target). It’s not the first time we’ve seen that, either. Back in 2002, someone borked the distribution server for the most popular mail server programme, sendmail, and for months, many, many servers on the internet were running compromised code. That same year, the OpenSSH distribution was borked to compromise people who built that product to provide secure access to their enterprise.

My point here is that we rarely learn from the past (or generalise from the point problems we see to the more general case) to get ahead of the attackers. Going back to the aircraft analogy, if that industry managed risk and vulnerability the way we do in cyber security, there would be planes literally falling out of the sky. We should learn from that sector and the safety industry more generally[1]. The US have set up the Cyber Safety Review Board, based on the National Transportation Safety Board, which will hopefully be a first step in systematising learnings from incidents and the like, to drive change in the cyber security ecosystem. At the moment, it’s only looking at major incidents, but it’s a start. I hope the community supports this (and similar efforts), and helps them scale and move from just looking at incidents to tackling some of the underlying issues we all know need fixing. Without some sort of feedback loop, we are destined to be a bit dumb forever.

6. Assurance usually isn’t

For many years, we’ve been trying to work out how to differentiate ‘good stuff’ from ‘not so good stuff’.

We have things like the Common Criteria scheme that intend to tell people something is ‘good’ by having a lab look at some documents provided by the vendor and, in some cases, actually look at a tiny bit of the software. In the general case, these schemes just don’t provide any useful information for risk management and (again in my humble opinion) actually end up reducing overall system security. This is because system owners feel they no longer need to think about security because they have some ‘certificate of goodness’.

One of the worst examples of this is the GSMA’s NESAS scheme, which intends to give governments confidence that the telecoms products they’re allowing to be used in their national networks are appropriately secure. It does this by giving us 21 letters describing whether 21 categories of stuff have been assessed as compliant or not (you can see the reports on the GSMA website). It provides no useful information to end users of the equipment to make risk management decisions.

The commercial model for almost all lab-based assurance schemes seems to incentivise a race to the bottom. Imagine you’re a product developer and you need to pass one of these schemes to sell to a big client. You go to the first lab and they say your product is a bag of spanners and it needs fundamental redesign to be secure. The second lab says that if you tweak the target of evaluation, they can give you a certificate. Unfortunately, this is all too real and, again, the incentives seem to be driving for something other than a good security outcome.

We published our new (principles-based) approach to assurance last year. It’s very different to traditional assurance schemes, but we think that it will drive fundamentally better risk management (and therefore security) in the long term. However, even in the best-case scenario, assurance activities only tell you so much. They don’t tell you a product is ‘secure’ or that it can be ‘trusted’. If you talk about ‘trusted vendors’ and ‘trusted marketplaces’, you’re implying something about the product that isn’t true. Even if you trust the vendor, you’re implying that no bad actor will ever be able to write code for them, either through a cyber attack or a dodgy developer on the take.

We've got to be much more honest about what assurance activities are worthwhile and what they tell us in the real world, rather than the best case. This is really hard as assurance certificates have become a government sales tax, a marketing tool and a crutch for people who don’t want to manage risk properly. We need to break that cycle and design systems where the security of the overall system isn’t reliant on every component acting perfectly all the time, so we can be more sensible about the confidence we need in individual elements of a system.

7. Incentives really matter

Imagine you’re about to switch broadband providers and you want to compare two companies. One offers to send you a 60-page document describing:

- how it secures the PE- and P-nodes in its MPLS network

- how the OLTs in the fibre network are managed

- how much effort it puts into making sure the ONT in your house is built and supported properly

The other one offers you free Netflix.

Of course, everyone chooses the ISP with free Netflix. In the telecoms sector, customers don’t pay for security, so there’s no incentive to do more than your competitors and that is what would drive the sector to get better. That’s where regulation can help and is one of the reasons that we, with DCMS, pushed so hard for what became the Telecoms Security Act. The detailed security requirements in the Code of Practice make it clear what’s expected of telecoms operators and the fines Ofcom can levy when that’s not met are material. We’re already seeing this drive better outcomes in the sector.

If you ask anyone in the intelligence community who was responsible for the SolarWinds attack I referenced earlier, they’ll say it was the Russian Foreign Intelligence Service, the SVR. But Matt Stoller makes an interesting argument in his article that it’s actually the ownership model for the company that’s really the underlying issue. The private equity house that owns SolarWinds acted like an entity that wanted to make money, rather than one that wanted to promote secure software development and operations. Who knew?

Now, I don’t agree with everything Matt says in this article, but it’s hard not to link the commercial approach of the company with the incentive and capability of the people in it. It’s then hard not to link the actions of those people, driven by their incentives and capabilities, with the set of circumstances that led to the attack being so easy for the Russians. Of course, the blame still sits with the SVR, but did we accidentally make it easier for them?

In both cases, the companies are doing exactly what they’re supposed to do – generate shareholder value. But we implicitly expect these companies to manage our national security risk by proxy, often without even telling them. Even in the best case, their commercial risk model is not the same as a national security risk model, and their commercial incentives are definitely not aligned with managing long-term national security. In the likely case, it’s worse. So, I think we need to stop just shouting “DO MORE SECURITY!” at companies and help incentivise them to do what we need long term. Sometimes that will be regulation, like the Telecoms Security Act, but not always. Sometimes we’re going to have to (shock, horror!) pay them to do what we need when it’s paranoid lunatic national security stuff. But making the market demand the right sort of security and rewarding those that do it well has got to be a decent place to start. Trying to manage cyber security completely devoid of the vendors’ commercial contexts doesn’t seem sensible to me.

8. Market shaping for fun and profit (and national security)

The transistor, invented in the US in 1947, is the foundational building block of the digital electronics that run the world today. The first commercial, interactive, transistorised computer, the DEC PDP-1, was released in 1960 and had a processor unit that weighed more than 500 kg, contained 2,700 transistors and could perform 100,000 additions in a second.

DEC PDP-1 (1960)

The main processor in the phone in your pocket probably contains about 15 billion transistors, with each square millimetre of the chip housing anything up to 200 million transistors. It can perform trillions of operations in a second. The science behind this evolution is astounding, but the underlying physics is the same, so I imagine those 1960 engineers could understand the basics of a modern microprocessor. What is more amazing is the scale of the market for this technology and its pervasiveness, from phones to missiles, from cars to energy grids.

Open science has driven the required fundamental advances in mathematics, chemistry, physics and engineering. But those technical advances alone couldn’t have driven the development of the digital revolution we now enjoy. Each small step forward needed a commercial driver or outlet, a way of turning the invention into revenue to grow companies and further drive innovation. That has been enabled by common technical standards that allow worldwide markets and globalisation of supply chains. Globalisation allows the selection of services and supplies from different companies and countries on purely economic and functional grounds, leading to specialisation in highly complex and expensive areas, like semiconductor manufacture.

The problem is that, left to its own devices, this ecosystem optimises for one variable only: cost. And cost is very fungible and can be arbitrarily affected by actors who don’t follow the implicit rules (for example, by providing a load of state funding to prop up companies and allow them to sell below cost to corner a market). Even without state aid, a company with a massive domestic market will always be lower cost than one with a smaller domestic market, just through economies of scale. So, China has an advantage in a globalised world.

If we are to achieve the ambition set out in the Integrated Review, if we are to become a science and technology superpower, if we are going to lead in technologies that are critical for our security, then just ‘doing more awesome research’ isn’t going to cut it. We need to get into market shaping, and that’s uncomfortable. We need the global market to demand the things we’re going to produce, and we need to protect them as they are developed and make sure people can’t just come and buy or steal them.

Also, if we build small groups of interdependent companies, we can make them into ‘sticky ecosystems’ that are harder to copy and more easily provide good gearing into the global market. We don’t need to control everything we rely on, but we need to have enough skin in the game to be a player on the world stage and we need options so we’re not overly dependent on singletons which helps us to build resilience. That leads you to a question of whether unicorns[2] are actually ever desirable from a national security point of view, but that question probably needs beer to help analysis!

9. Standards are really boring, but really, really important

The globalisation I‘ve just mentioned is driven by standards that govern how tech works in the real world. These standards are why we could all still communicate during the pandemic, regardless of what country we were in, what machine we used, what video calling software we preferred, or what ISP we used. These standards are why your mobile phone will work in almost every country on the planet. These standards are why you can use any browser on any device to access any website hosted anywhere in the world.

These standards are incredibly detailed and technical and are developed over many years by groups of companies, governments, civil society groups and individuals coming together in things called Standard Development Organisations (SDOs [3]). Most people that go to standards meetings have an agenda, and it’s usually to get something into a standard for commercial reasons. Many standards include technology that’s patented by the contributors and these Standard Essential Patents are critical to making money out of standards, so companies want to get their patented technologies into standards.

This means that, for big global standards like 5G, many companies from around the world own the patents in the standard, and you need a licence from them to build a product. That includes Chinese companies, and this gives us a weird interdependency (and not an insignificant amount of national security risk) when you actually try to implement. This is also why standards bodies are becoming a tool of great power competition – control the standards and you can stack the deck to make your technology more likely to be implemented. Sometimes that’s just about money, but sometimes there could be other reasons. It’s interesting that Chinese people or Chinese companies hold leadership positions in more than 80% of key working groups in the main telecoms SDOs. Just saying.

The other thing we’ve seen happen is individuals and companies making standards reflect their values in a narrow field, without much thought about what else could go wrong. Two examples here would be DNS-over-HTTPS (DOH) and the MASQUE protocols that underpin things like Apple Private Relay. Both of these were defined by the Internet Engineering Task Force, the organisation that sets the standards for the internet, which it does through humming[4]. They were intended to provide more privacy to users from all sorts of parties, but mainly government and big tech companies. The problem is that DOH makes enterprise cyber security very hard and also damages things like ISP parental controls, and some filtering for child sexual abuse images. Apple Private Relay makes law enforcement’s life much harder when looking at who’s visiting certain dodgy websites, but also potentially reduces the resilience of mobile networks because it messes with the caching strategies in place today and makes diagnosing problems harder. It also makes it impossible for those networks not to charge for certain data traffic because they can’t see which sites a phone is trying to visit.

In both cases, we’ve got third parties affecting our national security and our ability to do cyber security, driven by other intents. In the case of a great power competition being played out in the SDOs, we need to find a way to make sure we can still have the technology we want and need that also meets our vision of security without completely balkanising the internet, since it’s not clear we’d win in that case (remember the market-shaping stuff above). In the case of companies and individuals, we need to have a more widely accepted understanding of the balance between all the different characteristics we expect on the internet. I don’t want to start off a whole privacy-versus-security screaming match; we can (and should) have both. But neither should one axiomatically trump the other. We need to reassess the design goals that we want for the future services we’ll want and make sure they’re vested to the relevant standards' bodies. The alternative is that things like NewIP will dominate and then we’re all in the brown and smelly stuff.

'Brown and smelly stuff' (courtesy of @Sourdust)

10. Details matter

OK, I know I’ve been banging on about this forever, but the last few years have shown how important this mantra really is. Deep down, we all know how much details matter, but we also all seem to find it easy to fall back to generalisations and hyperbole.

It is critical that we get into the detail of things, whether deeply technical things that only three people on the planet care about, or discussions of policy that will affect everyone in the country. If we don’t, we'll eventually make a catastrophic error that will have real-world consequences.

While it’s not pure cyber security, the somewhat polarised discussions about child safety and end-to-end encryption typify this problem for me, but I see it in many places. I honestly think that getting into the real detail of hard problems is what helps us see pathways to solutions. As I said at the start, there’s a long way to go for cyber security and a lot of hard problems still to solve. Details are your friend!

Towards a grand unified theory of the cyberz

Well, maybe not that grand, but here’s an idea for something we could seek to build over the next few years to help us all do cyber security better, make it more repeatable and get ahead of the attackers.

At the moment, we (and as far as I can tell every other national incident response function) record incidents mainly in narrative form. That’s interesting but doesn’t help us do really useful things with the data. What if we had a global, machine-readable standard for incidents? Well, we’d be able to work out whether a particular product was really hard for people to configure properly, what attackers were particularly going after, work out which mitigations are systemically hard for people to get right and maybe even discover zero-day vulnerabilities that are being exploited much more quickly. We may even be able to put real costs on cyber security screw-ups.

We could do all of this in a semi-automated way, and also in a way that allows each incident response authority to protect the privacy of the victims they're helping, while still exchanging and discovering useful data between global authorities (think a bunch of high-function crypt primitives, or ‘hipster crypt’ as one of our people calls it). This isn’t that hard. It’s some combination of CVSS, CVE, CWE, MITRE ATT&CK paths and something to describe impact in various ways, including second and third-order impacts to cover things like citizens being defrauded through secondary attacks when a big company spills their personal data to cyber criminals.

Next, imagine you could model an entire network as a graph with the nodes representing functions, data sources, bits of kit and so on, and the edges representing the relationships between them. We’ve started this with the CNI Knowledgebase that we’ve talked about in the last few NCSC Annual Reviews, and that’s helping us reason about cascade failures in the UK’s critical infrastructure. So, it should be possible to do something similar with enterprise and ‘local’ control systems. We could then bring a system-theoretic approach to understanding the risks and impacts (or collectively hazards) through application of, for example, STAMP and STPA. Overlaying the MITRE ATT&CK paths that get you to those hazards should then help you understand which mitigations you need to put in place. We did something like this as part of the threat model for the Telecoms Security Act, although in a manual and non-scalable way.

If we had a database of products, features and required configurations and the MITRE ATT&CK paths that the vendor believed were mitigated, we could then automatically start to work out whether the products you'd deployed as some of those graph nodes actually mitigated the ways attackers could try to pop you and – importantly – make sure that you had at least one mitigation on each path, and understood what configuration was necessary for each to be effective. Some of the NCSC for Startups alumni are building products and services that get towards that. I’ve seen products deployed in ways that don’t mitigate the intended threat too many times . . .

From that, you’d understand what level of confidence you need in each of the things in your system. You could then use the principles-based assurance approach to get that confidence at the different levels necessary. For some components, you’d be OK with vendor assertions. For some, you’d want a lot more understanding, possibly even someone to validate the implementation for you. Maybe we’d use the MITRE D3FEND approach to objectively judge how effective a particular product feature was at defending against a particular ATT&CK path? In any case, we’d get away from saying "THIS PRODUCT IS AWESOME!" to "this feature of this product intends to mitigate the particular attack I’m using it for, I have confidence in the developer’s competence and approach, and I think I’ve configured it properly." That seems more doable.

Penetration testing has been around for decades, but we’ve really only used it to embarrass people when they don’t design or build a system as they'd intended. If we had machine-readable pentesting results, along the lines of the incident data, we’d be able to link that work with the graph of the system (and the rich data we have about it) to make sure that we hadn’t missed any attack paths, and that we’d actually built what we thought. It’d be much easier to understand what was going on.

Of course, something like this wouldn’t cope with people just not patching their stuff, but there are other ways of getting that data and feeding it into something like this. If you take the simplest approach, if vendors started providing machine-readable blobs with every vulnerability notification or patch that described the affected function (the versions, the ATT&CK path that was enabled and so on), we’d be able to automatically work out whether a patch was necessary and how risky something was in the context of this system, rather than the generic case. That would surely help operators to target their scarce effort more effectively.

Finally, it will all go wrong at some point, so we’d be back to the incident data. That feedback loop would enable us to evolve our approach, design patterns, assurance requirements, vendor security requirements and so on. And with that, we may finally get repeatable, evidence-based, rigorous cyber security (that doesn’t need an army of cyber ninjas to get right) that enables better business risk management. Which in the end is what cyber security is all about.

Signing off

If you’ve got this far, thanks for reading this very long blog. I hope there’s something useful in it. I know that the people in the NCSC will continue to work tirelessly to make the UK the safest place to live and work online, and to do their best to implement the national strategy. I know that whoever takes over as NCSC Technical Director will have the best team on the planet to help them.

I really hope that everyone outside the NCSC that has supported or challenged me will continue to do that for my successor. Whoever they are, they’ll have the best job in the world! I’ll be on enforced gardening leave for a few months, but any email to me at NCSC will be dealt with by the team.

It really has been a privilege and an honour to do this job but it’s time for me to move on. So, I’ll finish with a tweak to the genius of Douglas Adams: so long, and thanks for all the bits.

Ian Levy

[1]Yes, there are some real challenges in bringing safety and security culture together which we need to tackle, but that doesn’t mean we can’t learn in the meantime.

[2]Companies worth more than a billion pounds that normally corner a significant portion of a key market, rather than the weird horsey things with pokey bits on their heads.

[3]Usually. There are some instances of companies producing de facto standards outside of any process or SDOs that only consist of governments, but the point still stands.

[4]The IETF works on a ‘broad consensus’ system and pre-pandemic, votes were decided by how loud people hummed. Seriously, this is how the internet has been evolved over the years.

Recommend

About Joyk

Aggregate valuable and interesting links.

Joyk means Joy of geeK