Etcd Cluster on Kubernetes

source link: https://xigang.github.io/2019/10/11/etcd-cluster-on-kubernetes/


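Deploying an etcd cluster on Kubernetes

- High availability: the desired number of etcd instances is always kept running

- Flexibility: the number of etcd instances can be adjusted dynamically, and etcd can be upgraded smoothly by updating the image

- Ceph storage must be stable, with I/O latency as low as possible

- The Kubernetes cluster network must be highly reliable: a stable network is needed to prevent etcd cluster jitter and to keep pods in the cluster from interfering with one another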

Requirements

- Kubernetes (version 1.8+)

- CoreDNS

- etcd 3.2.13+

- Ceph RBD (https://kubernetes.io/docs/concepts/storage/storage-classes/#ceph-rbd)

Deploy etcd operator

- Clone the etcd-operator repo

$ git clone https://github.com/coreos/etcd-operator.git

- Set up the RBAC rules for the etcd operator

$ sh example/rbac/create_role.sh

- Install the etcd operator

$ kubectl create -f example/deployment.yaml

Check that the etcd-operator deployment started successfully:

$ kubectl get deployment etcd-operator

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

etcd-operator 1 1 1 1 10d

- The etcd operator automatically creates a Kubernetes Custom Resource Definition (CRD) named EtcdCluster:

$ kubectl get customresourcedefinitions

NAME KIND

etcdclusters.etcd.database.coreos.com CustomResourceDefinition.v1beta1.apiextensions.k8s.io

Create a TLS etcd cluster

Reference for generating the etcd certificates:

Store the certificates in Kubernetes secrets:

kubectl create secret generic etcd-addops-client-tls --from-file=etcd-client-ca.crt --from-file=etcd-client.crt --from-file=etcd-client.key

kubectl create secret generic etcd-addops-peer-tls --from-file=peer-ca.crt --from-file=peer.crt --from-file=peer.key

kubectl create secret generic etcd-addops-server-tls --from-file=server-ca.crt --from-file=server.crt --from-file=server.key
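The three commands above fail if any of the nine certificate files is missing from the working directory. As a pre-flight check, here is a minimal Python sketch that lists the missing files per secret. The file and secret names are copied from the commands above; the helper name `missing_cert_files` is hypothetical and not part of etcd-operator.

```python
from pathlib import Path

# Secret name -> file names, copied from the kubectl commands above.
SECRET_FILES = {
    "etcd-addops-client-tls": ["etcd-client-ca.crt", "etcd-client.crt", "etcd-client.key"],
    "etcd-addops-peer-tls": ["peer-ca.crt", "peer.crt", "peer.key"],
    "etcd-addops-server-tls": ["server-ca.crt", "server.crt", "server.key"],
}


def missing_cert_files(cert_dir):
    """Return {secret_name: [missing file names]} for files absent from cert_dir."""
    base = Path(cert_dir)
    missing = {}
    for secret, names in SECRET_FILES.items():
        absent = [name for name in names if not (base / name).is_file()]
        if absent:
            missing[secret] = absent
    return missing
```

Calling `missing_cert_files(".")` from the directory that holds the certificates returns an empty dict when every file is present.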

Edit example-etcd-cluster.yaml so that it reads as follows:

apiVersion: "etcd.database.coreos.com/v1beta2"
kind: "EtcdCluster"
metadata:
  name: "example" # etcd cluster name
  annotations:
    etcd.database.coreos.com/scope: clusterwide
spec:
  size: 3 # number of etcd cluster members
  pod:
    annotations: # expose etcd cluster metrics for monitoring
      prometheus.io/scrape: "true"
      prometheus.io/port: "2379"
    persistentVolumeClaimSpec: # persist etcd data to disk through a PVC
      storageClassName: rbd
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 1Gi
  TLS: # TLS authentication for the etcd cluster
    static:
      member:
        peerSecret: etcd-addops-peer-tls
        serverSecret: etcd-addops-server-tls
      operatorSecret: etcd-addops-client-tls

Once the file is edited, create the etcd cluster:

kubectl create -f example/example-etcd-cluster.yaml

For more on the EtcdCluster spec definition, see:

https://github.com/coreos/etcd-operator/blob/master/doc/user/spec_examples.md
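The `size` field determines how many member failures the cluster can survive, because etcd's Raft consensus needs a majority of members to be available. The following Python sketch shows the arithmetic; the function names are illustrative only.

```python
def quorum(size):
    """Smallest majority of a cluster with `size` voting members."""
    return size // 2 + 1


def fault_tolerance(size):
    """Number of members that can fail while a majority still remains."""
    return size - quorum(size)


for size in (1, 3, 4, 5):
    print(f"size={size} quorum={quorum(size)} tolerates={fault_tolerance(size)}")
```

With `size: 3` the quorum is 2, so one member can be lost. A fourth member raises the quorum to 3 without raising the tolerance, which is why odd sizes are the usual choice.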

Check the status of the etcd cluster pods:

# kubectl get pods

example-mn7zg95s8s 1/1 Running 0 95m

example-x6grchxhmb 1/1 Running 0 99m

example-xx8fskdwd6 1/1 Running 0 98m

Check the PVCs of the etcd cluster:

# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

example-mn7zg95s8s Bound pvc-288ae7d2-eb2e-11e9-9846-fa163e3101ea 1Gi RWO rbd 96m

example-x6grchxhmb Bound pvc-a1059994-eb2d-11e9-9846-fa163e3101ea 1Gi RWO rbd 100m

example-xx8fskdwd6 Bound pvc-c3c173b0-eb2d-11e9-9846-fa163e3101ea 1Gi RWO rbd 99m

Check the PVs of the etcd cluster:

# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pvc-288ae7d2-eb2e-11e9-9846-fa163e3101ea 1Gi RWO Delete Bound default/example-mn7zg95s8s rbd 97m

pvc-a1059994-eb2d-11e9-9846-fa163e3101ea 1Gi RWO Delete Bound default/example-x6grchxhmb rbd 100m

pvc-c3c173b0-eb2d-11e9-9846-fa163e3101ea 1Gi RWO Delete Bound default/example-xx8fskdwd6 rbd 99m

Accessing the etcd cluster

Access the etcd cluster from inside the Kubernetes cluster:

# docker exec -it 9136a005229e sh

/ # ETCDCTL_API=3 etcdctl \
      --cacert="/etc/etcdtls/operator/etcd-tls/etcd-client-ca.crt" \
      --cert="/etc/etcdtls/operator/etcd-tls/etcd-client.crt" \
      --key="/etc/etcdtls/operator/etcd-tls/etcd-client.key" \
      --endpoints="https://example-client.default.svc:2379" \
      member list

51c79f48887c71c3, started, example-mn7zg95s8s, https://example-mn7zg95s8s.example.default.svc:2380, https://example-mn7zg95s8s.example.default.svc:2379

589b77d81c2e267d, started, example-xx8fskdwd6, https://example-xx8fskdwd6.example.default.svc:2380, https://example-xx8fskdwd6.example.default.svc:2379

64f355e75da60cdb, started, example-x6grchxhmb, https://example-x6grchxhmb.example.default.svc:2380, https://example-x6grchxhmb.example.default.svc:2379
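To turn this output into an automated health check, the lines can be parsed into records. The Python sketch below assumes the five-field, comma-separated layout shown above (ID, status, name, peer URL, client URL) with exactly one peer URL and one client URL per member. Other etcdctl versions or multi-URL members may print a different layout, which this sketch simply skips.

```python
SAMPLE_OUTPUT = """\
51c79f48887c71c3, started, example-mn7zg95s8s, https://example-mn7zg95s8s.example.default.svc:2380, https://example-mn7zg95s8s.example.default.svc:2379
589b77d81c2e267d, started, example-xx8fskdwd6, https://example-xx8fskdwd6.example.default.svc:2380, https://example-xx8fskdwd6.example.default.svc:2379
64f355e75da60cdb, started, example-x6grchxhmb, https://example-x6grchxhmb.example.default.svc:2380, https://example-x6grchxhmb.example.default.svc:2379
"""


def parse_member_list(output):
    """Parse `etcdctl member list` text into a list of dicts."""
    members = []
    for line in output.splitlines():
        fields = [field.strip() for field in line.split(",")]
        if len(fields) != 5:
            continue  # blank line or a layout this sketch does not handle
        member_id, status, name, peer_url, client_url = fields
        members.append({
            "id": member_id,
            "status": status,
            "name": name,
            "peer_url": peer_url,
            "client_url": client_url,
        })
    return members


def all_started(members, expected_size):
    """True when exactly expected_size members exist and all report 'started'."""
    return len(members) == expected_size and all(
        member["status"] == "started" for member in members
    )


members = parse_member_list(SAMPLE_OUTPUT)
print(all_started(members, 3))
```

Here `expected_size` would be the `size` value from the EtcdCluster spec.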

Access the etcd cluster from outside the Kubernetes cluster:

The IPs of the etcd instances must be attached to an available LVS VIP. The etcd cluster running in Kubernetes can then be reached through the VIP or a domain name bound to it:

/ # ETCDCTL_API=3 etcdctl \
      --cacert="/etc/etcdtls/operator/etcd-tls/etcd-client-ca.crt" \
      --cert="/etc/etcdtls/operator/etcd-tls/etcd-client.crt" \
      --key="/etc/etcdtls/operator/etcd-tls/etcd-client.key" \
      --endpoints="https://<vip>:2379" \
      member list

51c79f48887c71c3, started, example-mn7zg95s8s, https://example-mn7zg95s8s.example.default.svc:2380, https://example-mn7zg95s8s.example.default.svc:2379

589b77d81c2e267d, started, example-xx8fskdwd6, https://example-xx8fskdwd6.example.default.svc:2380, https://example-xx8fskdwd6.example.default.svc:2379

64f355e75da60cdb, started, example-x6grchxhmb, https://example-x6grchxhmb.example.default.svc:2380, https://example-x6grchxhmb.example.default.svc:2379

/ #

etcd cluster data backup and restore

Backing up etcd cluster data

Deploy the etcd-backup-operator:

$ kubectl create -f example/etcd-backup-operator/deployment.yaml

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

etcd-backup-operator-68f847c4d9-k6pnj 1/1 Running 1 6h11m

Check that the etcd-backup-operator created the EtcdBackup CRD:

$ kubectl get crd

NAME KIND

etcdbackups.etcd.database.coreos.com CustomResourceDefinition.v1beta1.apiextensions.k8s.io

Configure the AWS secret (in this setup the data is uploaded to S3)

- Create the credentials and config files locally (the file names must be exactly credentials and config)

$ cat $AWS_DIR/credentials

[default]

aws_access_key_id = XXX

aws_secret_access_key = XXX

$ cat $AWS_DIR/config

[default]

region = <region>

- Create the aws secret:

kubectl create secret generic aws --from-file=$AWS_DIR/credentials --from-file=$AWS_DIR/config
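Because the file names matter, generating both files from a script avoids typos. The Python sketch below writes them in the layout shown above; the helper name `write_aws_files` is hypothetical, and the key and region values passed to it are placeholders you supply.

```python
from pathlib import Path


def write_aws_files(aws_dir, access_key_id, secret_access_key, region):
    """Write files named exactly `credentials` and `config` into aws_dir."""
    base = Path(aws_dir)
    base.mkdir(parents=True, exist_ok=True)

    credentials_path = base / "credentials"
    credentials_path.write_text(
        "[default]\n"
        f"aws_access_key_id = {access_key_id}\n"
        f"aws_secret_access_key = {secret_access_key}\n"
    )
    # Keep the secret key readable by the owner only.
    credentials_path.chmod(0o600)

    config_path = base / "config"
    config_path.write_text(f"[default]\nregion = {region}\n")

    return credentials_path, config_path
```

The directory passed as `aws_dir` plays the role of `$AWS_DIR` in the commands above.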

- Create the EtcdBackup CR by editing periodic_backup_cr.yaml as follows:

apiVersion: "etcd.database.coreos.com/v1beta2"
kind: "EtcdBackup"
metadata:
  name: example-etcd-cluster-periodic-backup
spec:
  etcdEndpoints: ["https://example-client.default.svc:2379"]
  clientTLSSecret: etcd-addops-client-tls
  storageType: S3
  backupPolicy:
    # a value greater than 0 enables periodic backup
    backupIntervalInSecond: 125
    maxBackups: 4
  s3:
    # The format of "path" must be: "<s3-bucket-name>/<path-to-backup-file>"
    # e.g: "mybucket/etcd.backup"
    path: etcd-bucket/etcd.backup
    awsSecret: aws
    endpoint: http://xx.xxx.xx.cn
    forcePathStyle: true

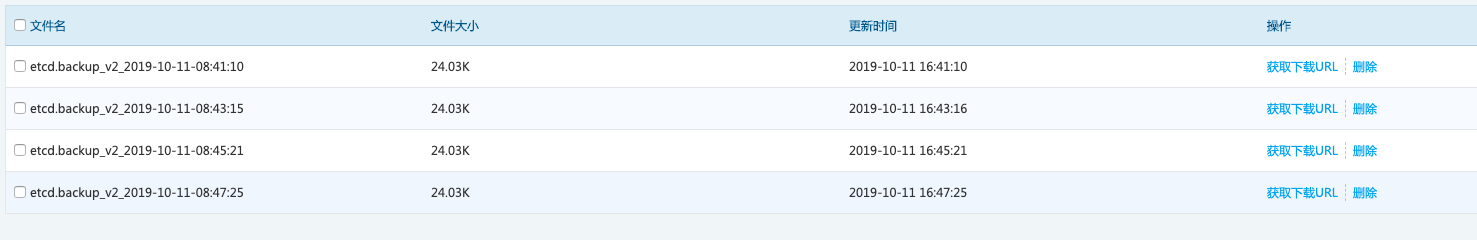

- 检查etcd需要备份的数据是否,已经备注到了S3上:
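The `backupIntervalInSecond` and `maxBackups` values together bound how far back a restore can reach. The Python sketch below computes the age range of the oldest retained backup. It assumes the operator takes a backup exactly every interval and keeps only the newest `maxBackups` files, and it only models positive values for both fields.

```python
def oldest_backup_age_bounds(backup_interval_in_second, max_backups):
    """Return (min_age, max_age) in seconds of the oldest retained backup."""
    if backup_interval_in_second <= 0 or max_backups <= 0:
        raise ValueError("this sketch only models positive values")
    # Right after a new backup, the oldest kept one is (max_backups - 1) intervals old.
    min_age = (max_backups - 1) * backup_interval_in_second
    # Just before the next backup, it has aged by one more interval.
    max_age = min_age + backup_interval_in_second
    return min_age, max_age


low, high = oldest_backup_age_bounds(125, 4)
print(f"oldest retained backup is between {low}s and {high}s old")
```

With the example values the history covers only a few minutes, so a production setup would likely use a longer interval, a larger `maxBackups`, or both.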

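Restoring etcd cluster data

The etcd-restore-operator uses backup data to restore an etcd cluster running on Kubernetes. The restore flow is: delete the existing etcd cluster, then create a new etcd cluster from the backup data to serve as the restored cluster.

Deploy the etcd-restore-operator

$ kubectl create -f example/etcd-restore-operator/deployment.yaml

Check that the etcd-restore-operator pod was created successfully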

# kubectl get pods

NAME READY STATUS RESTARTS AGE

etcd-restore-operator-859c9f479-lkzsm 1/1 Running 2 49m

Check that the etcd-restore-operator created the EtcdRestore CRD

$ kubectl get crd

NAME KIND

etcdrestores.etcd.database.coreos.com CustomResourceDefinition.v1beta1.apiextensions.k8s.io

Create the EtcdRestore CR by editing restore_cr.yaml

apiVersion: "etcd.database.coreos.com/v1beta2"
kind: "EtcdRestore"
metadata:
  # The restore CR name must be the same as spec.etcdCluster.name
  name: example
spec:
  etcdCluster:
    # The namespace is the same as this EtcdRestore CR
    name: example
  backupStorageType: S3
  s3:
    # The format of "path" must be: "<s3-bucket-name>/<path-to-backup-file>"
    # e.g: "mybucket/etcd.backup"
    path: etcd-bucket/etcd.backup_v2_2019-10-11-08:53:40
    awsSecret: aws
    endpoint: http://xx.xx.xx.cn
    forcePathStyle: true
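The restore `path` has to name one specific backup file, and periodic backups produce several. The Python sketch below picks the newest one from a list of object names. The naming pattern `<path>_v<number>_<YYYY-MM-DD-HH:MM:SS>` is inferred from the single file name in the CR above and is an assumption; the number after `_v` is treated as an opaque integer, and the second sample name is made up for illustration.

```python
import re
from datetime import datetime

# Pattern inferred from "etcd.backup_v2_2019-10-11-08:53:40" (an assumption).
BACKUP_NAME = re.compile(
    r"^(?P<prefix>.+)_v(?P<number>\d+)_(?P<stamp>\d{4}-\d{2}-\d{2}-\d{2}:\d{2}:\d{2})$"
)


def parse_backup_name(name):
    """Return a dict for a backup object name, or None if it does not match."""
    match = BACKUP_NAME.match(name)
    if match is None:
        return None
    return {
        "name": name,
        "v_number": int(match["number"]),
        "taken_at": datetime.strptime(match["stamp"], "%Y-%m-%d-%H:%M:%S"),
    }


def latest_backup(names):
    """Return the name with the newest timestamp, or None if none match."""
    parsed = [p for p in map(parse_backup_name, names) if p is not None]
    if not parsed:
        return None
    return max(parsed, key=lambda p: p["taken_at"])["name"]


names = [
    "etcd-bucket/etcd.backup_v2_2019-10-11-08:51:35",  # hypothetical earlier backup
    "etcd-bucket/etcd.backup_v2_2019-10-11-08:53:40",
]
print(latest_backup(names))
```

The returned string can be pasted into the `path` field of the EtcdRestore CR.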
