Applying machine learning to GitOps

source link: https://developers.redhat.com/articles/2021/06/29/applying-machine-learning-gitops



Machine learning helps us make better decisions by learning from existing data and using what it learns to make predictions about new inputs. In this article, we will explore how to apply machine learning in each phase of the GitOps life cycle. With machine learning and GitOps, we can:

- Improve deployment accuracy and predict which deployments will likely fail and need more attention.

- Streamline the deployment validation process.

- Enhance existing quality metrics and drive better deployment results.

Infrastructure automation with GitOps

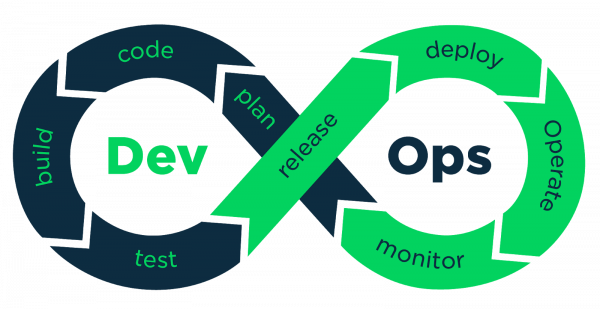

Git enables collaboration between developers by tracking changes in a commit history; changes to the desired state are recorded as version-controlled commits. DevOps speeds up the path from development to deployment in production. DevOps practitioners use CI/CD to automate builds and deliver iteratively, relying on tools like Jenkins and Argo CD to build and deploy version-controlled application and infrastructure code. Figure 1 shows the phases of the DevOps development cycle.

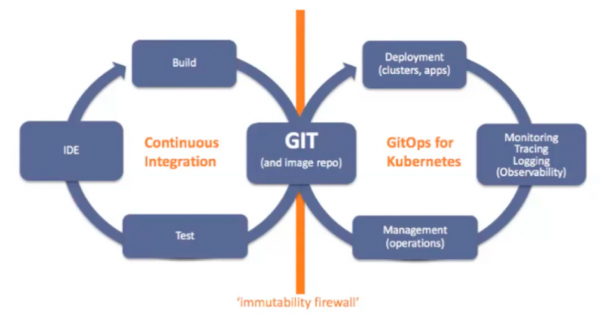

Figure 1: The DevOps development cycle: Plan, code, build, test, release, deploy, operate, and monitor.

When we combine Git and DevOps, we get GitOps. GitOps is DevOps for infrastructure automation. It is a developer workflow for automating operations. Figure 2 shows the phases of the GitOps development life cycle.

Figure 2: The GitOps development life cycle: Build, commit changes to a Git repository, test, integrated development environment (IDE) and Git development, monitoring, and management.

GitOps and machine learning

With GitOps, we track both code commits and configuration changes. Source code changes are built into container images and pushed to an image registry such as Docker Hub. This practice is called continuous integration and can be automated with Jenkins. Configuration changes, on the other hand, are driven by Git commits: we store the configuration files in Git and synchronize them to Kubernetes. Tools like Argo CD continuously monitor the applications running in the cluster and compare them against the target state in the Git repository. When the cluster differs from Git, it can be automatically updated or rolled back to match. With GitOps, developers can deploy many times a day, allowing for higher velocity.
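The compare-and-sync loop described above can be pictured with a toy sketch. It assumes hypothetical resources represented as plain dictionaries keyed by name, and the function `plan_sync` is illustrative only. It is not how Argo CD is implemented: real tools diff rendered Kubernetes manifests, and may only delete resources when configured to do so.

```python
from typing import Dict, List, Tuple

# Hypothetical representation: resource name -> manifest as a plain dict.
Manifests = Dict[str, dict]


def plan_sync(desired: Manifests, live: Manifests) -> List[Tuple[str, str]]:
    """Compare the desired state (from Git) with the live cluster state and
    return the actions that would make the cluster match Git."""
    actions: List[Tuple[str, str]] = []
    for name, spec in sorted(desired.items()):
        if name not in live:
            actions.append(("create", name))   # in Git, missing from the cluster
        elif live[name] != spec:
            actions.append(("update", name))   # drifted from Git
    for name in sorted(live):
        if name not in desired:
            actions.append(("delete", name))   # in the cluster, no longer in Git
    return actions


desired = {"web": {"image": "web:1.2", "replicas": 3}, "worker": {"image": "worker:0.9"}}
live = {"web": {"image": "web:1.1", "replicas": 3}, "cache": {"image": "redis:7"}}
print(plan_sync(desired, live))
# [('update', 'web'), ('create', 'worker'), ('delete', 'cache')]
```

Rolling back fits the same model: reverting the commit in Git changes `desired`, and the next comparison produces the actions that restore the previous state.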

Machine learning (ML) can accelerate each step in the GitOps cycle. It reduces the manual maintenance work involved in building, testing, deploying, monitoring, and security scanning, and it can automatically generate tests for validation.

Predictive builds

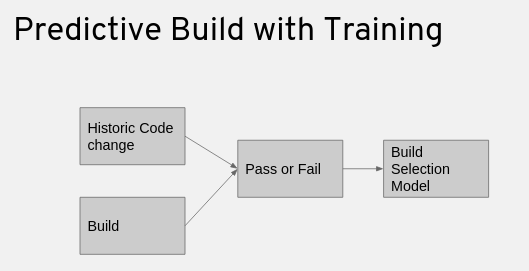

First, machine learning speeds up the build process. Traditionally, GitOps uses information extracted from build metadata to determine which packages to build for a particular code change. By analyzing the build dependencies in the code, GitOps identifies every package that depends on the modified code and rebuilds all of those dependent packages.
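The traditional, dependency-driven selection can be sketched as a reverse-dependency walk. This is a minimal sketch assuming a hypothetical dependency map (`package -> packages it depends on`); real build systems derive this graph from their own metadata.

```python
from collections import deque
from typing import Dict, List, Set


def packages_to_rebuild(deps: Dict[str, List[str]], changed: str) -> Set[str]:
    """Return the changed package plus every package that depends on it,
    directly or transitively. `deps` maps package -> packages it requires."""
    # Invert the graph: package -> packages that depend on it.
    dependents: Dict[str, List[str]] = {}
    for pkg, requires in deps.items():
        for req in requires:
            dependents.setdefault(req, []).append(pkg)

    # Breadth-first walk up the reverse edges from the changed package.
    to_rebuild = {changed}
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for parent in dependents.get(current, []):
            if parent not in to_rebuild:
                to_rebuild.add(parent)
                queue.append(parent)
    return to_rebuild


deps = {"app": ["api", "ui"], "api": ["core"], "ui": ["core"], "core": [], "docs": []}
print(sorted(packages_to_rebuild(deps, "core")))  # ['api', 'app', 'core', 'ui']
```

A change to a low-level package such as `core` pulls in almost everything above it, which is the cost that a learned build selection model tries to avoid.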

With machine learning, we can train the system to select the builds that are most likely to fail for a particular change, instead of rebuilding every dependent package. Using a large data set of historical code changes and the resulting build outcome for each package, as shown in Figure 3, the system learns from previous code changes to produce a build selection model.

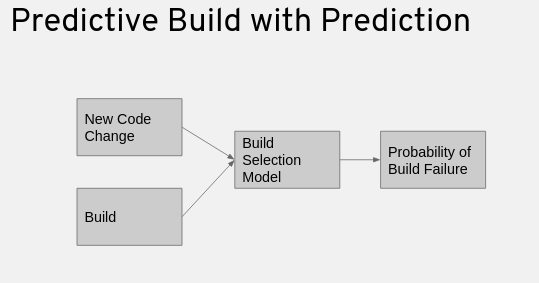

Figure 3: Predictive build with training.

The system learns whether the build passes or fails based on the features from the previous code changes, as shown in Figure 4. Then, when the system analyzes new code changes, it applies the learned model to the code change. For any particular build, we can use the build selection model to predict the likelihood of a build failure.
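The article does not say which model to use, so as a stand-in this minimal sketch trains a plain logistic regression with gradient descent, then uses it to pick the builds most likely to fail. The two features (whether the change touches a direct dependency, and the package's recent failure rate) and all the data are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict(w: List[float], x: Sequence[float]) -> float:
    """Probability of build failure for feature vector x (w[0] is the bias)."""
    return sigmoid(w[0] + sum(wj * xj for wj, xj in zip(w[1:], x)))


def train(X: List[Sequence[float]], y: List[int], lr: float = 1.0, epochs: int = 5000) -> List[float]:
    """Training: fit logistic-regression weights with batch gradient descent on log loss."""
    w = [0.0] * (len(X[0]) + 1)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for xi, yi in zip(X, y):
            err = predict(w, xi) - yi  # derivative of log loss w.r.t. the logit
            grad[0] += err
            for j, xj in enumerate(xi):
                grad[j + 1] += err * xj
        w = [wj - lr * g / len(X) for wj, g in zip(w, grad)]
    return w


def select_builds(w: List[float], candidates: Dict[str, Sequence[float]], threshold: float = 0.5) -> List[str]:
    """Prediction: packages whose failure probability >= threshold, riskiest first."""
    scored = sorted(((predict(w, f), pkg) for pkg, f in candidates.items()), reverse=True)
    return [pkg for p, pkg in scored if p >= threshold]


# Hypothetical history: [touches a direct dependency (0/1), package's recent failure rate]
X = [[1, 0.9], [1, 0.7], [1, 0.8], [0, 0.1], [0, 0.2], [1, 0.1], [0, 0.0], [0, 0.6]]
y = [1, 1, 1, 0, 0, 0, 0, 0]  # 1 = the build failed
w = train(X, y)
print(select_builds(w, {"api": [1, 0.85], "docs": [0, 0.05]}))  # ['api']
```

The threshold trades safety for speed: lowering it rebuilds more packages and misses fewer failures.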

Figure 4: Predictive build with prediction.

Security scanning

Machine learning can also be applied to security scanning. For example, JFrog Xray is a security scanning tool that integrates with Artifactory to identify vulnerabilities and security violations before production releases. Artifact security scanning is an important step in the CI/CD pipeline. The idea is to use historical data to prioritize artifact scanning and scan first the artifacts that are most likely to fail. This allows us to fail fast and recover fast.
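Prioritizing scans by history can be illustrated with a simple frequency heuristic standing in for a learned model. This is a sketch under assumptions: the scan history, artifact names, and functions are hypothetical, and it does not use any JFrog Xray or Artifactory API.

```python
from typing import Dict, List, Tuple

# Hypothetical scan history: artifact -> (failed scans, total scans)
History = Dict[str, Tuple[int, int]]


def scan_order(history: History, artifacts: List[str]) -> List[str]:
    """Order artifacts so those most likely to fail a scan are scanned first."""
    def failure_rate(name: str) -> float:
        # Laplace smoothing: an artifact with no history gets 0.5, so new
        # artifacts are scanned early rather than last.
        failed, total = history.get(name, (0, 0))
        return (failed + 1) / (total + 2)

    return sorted(artifacts, key=failure_rate, reverse=True)


history = {"payments:2.3": (4, 10), "frontend:1.8": (0, 20), "auth:5.1": (1, 4)}
print(scan_order(history, ["frontend:1.8", "auth:5.1", "reports:0.1", "payments:2.3"]))
# ['reports:0.1', 'payments:2.3', 'auth:5.1', 'frontend:1.8']
```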

We can also apply machine learning to build a model that filters out unwanted pull requests (PRs). Large projects receive a constant flow of pull requests, and to make predictions about them we need to create a predictor model. The steps to create a predictor are as follows:

- Define relevant features.

- Gather data from data sources.

- Perform data normalizations.

- Train models.

- Choose the best model.

- Evaluate the chosen predictor.

We use the extract, transform, and load (ETL) process to normalize our data, as shown in Figure 5.

Figure 5: The ETL process.
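The ETL step can be sketched for PR data as follows. The record fields (`additions`, `deletions`, `changed_files`, `paths`, `merged`) are hypothetical stand-ins for whatever a Git hosting API returns, and using "merged" as the label for a wanted PR is an assumption. Min-max scaling is one common choice of normalization.

```python
from typing import List, Tuple

FEATURES = ["lines_changed", "files_changed", "touches_tests"]


def extract(records: List[dict]) -> List[dict]:
    """Extract: copy only the raw fields the predictor needs."""
    keys = ("additions", "deletions", "changed_files", "paths", "merged")
    return [{k: r[k] for k in keys} for r in records]


def transform(rows: List[dict]) -> List[dict]:
    """Transform: derive numeric features, then min-max normalize each to [0, 1]."""
    feats = [
        {
            "lines_changed": r["additions"] + r["deletions"],
            "files_changed": r["changed_files"],
            "touches_tests": int(any(p.startswith("tests/") for p in r["paths"])),
            "label": int(r["merged"]),
        }
        for r in rows
    ]
    for f in FEATURES:
        lo = min(row[f] for row in feats)
        hi = max(row[f] for row in feats)
        span = (hi - lo) or 1  # a constant column would otherwise divide by zero
        for row in feats:
            row[f] = (row[f] - lo) / span
    return feats


def load(rows: List[dict]) -> Tuple[List[List[float]], List[int]]:
    """Load: emit feature vectors and labels in the shape a trainer expects."""
    return [[row[f] for f in FEATURES] for row in rows], [row["label"] for row in rows]


raw_prs = [
    {"additions": 120, "deletions": 30, "changed_files": 6,
     "paths": ["src/api.py", "tests/test_api.py"], "merged": True, "title": "Add endpoint"},
    {"additions": 5, "deletions": 5, "changed_files": 1,
     "paths": ["README.md"], "merged": True, "title": "Fix typo"},
    {"additions": 900, "deletions": 400, "changed_files": 40,
     "paths": ["src/core.py"], "merged": False, "title": "Rewrite core"},
]
X, y = load(transform(extract(raw_prs)))
print(X[1], X[2], y)  # [0.0, 0.0, 0.0] [1.0, 1.0, 0.0] [1, 1, 0]
```

The resulting `X` and `y` are what the training, model selection, and evaluation steps would consume.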

The steps to create the predictor are shown in Figure 6.

Figure 6: Building the predictor using the ETL process.

Validating deployments

We can also apply machine learning to validating website deployments. Many websites depend on external components, such as JavaScript injected by third parties. In this situation, the CI/CD deployment can report success while the third-party injected components introduce defects into the website.

To use the machine learning approach, we capture the entire web page as an image and divide that image into multiple UX components. These UX component images provide the training and test data for the learning model. With the model in place, we can test any new UX components across different browser resolutions and dimensions by feeding their images into the model. Incorrect images, text, and layouts are classified as defects.
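Dividing a page capture into pieces can be sketched with a fixed grid. This is a simplification: the article splits the page into UX components (which in practice might come from element bounding boxes), and a real pipeline would crop an actual screenshot, for example with Pillow's `Image.crop`. Here the image is a hypothetical nested list of grayscale pixels.

```python
from typing import List

Image = List[List[int]]  # grayscale pixel rows; a stand-in for a real screenshot


def split_into_tiles(image: Image, tile_h: int, tile_w: int) -> List[Image]:
    """Cut an image into non-overlapping tile_h x tile_w tiles, left to right,
    top to bottom. Tiles on the right and bottom edges may be smaller."""
    tiles: List[Image] = []
    for top in range(0, len(image), tile_h):
        for left in range(0, len(image[0]), tile_w):
            tiles.append([row[left:left + tile_w] for row in image[top:top + tile_h]])
    return tiles


page = [[r * 6 + c for c in range(6)] for r in range(4)]  # a 4 x 6 "screenshot"
tiles = split_into_tiles(page, 2, 3)
print(len(tiles), tiles[0])  # 4 [[0, 1, 2], [6, 7, 8]]
```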

Figure 7 shows an example of the deployment validation model used by machine learning. Once training is complete, we can use the same model to check other pages on the website. Each image is classified with a score between 0 and 1. A score closer to 0 signifies that the model predicts a potential test case failure, such as a UI defect. A score closer to 1 signifies a prediction that the test case meets the quality criteria. A cutoff threshold sets the score below which an image is flagged as a potential UI defect.
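Applying the cutoff to the model's scores is straightforward. In this sketch the component names, scores, and the cutoff of 0.7 are hypothetical; choosing the cutoff is a trade-off between missed defects and false alarms.

```python
from typing import Dict, List


def flag_defects(scores: Dict[str, float], cutoff: float) -> List[str]:
    """Return the UX components whose score falls below the cutoff.
    Scores near 1 meet the quality criteria; scores near 0 suggest a UI defect."""
    if not 0.0 <= cutoff <= 1.0:
        raise ValueError("cutoff must be between 0 and 1")
    return sorted(name for name, score in scores.items() if score < cutoff)


# Hypothetical model scores for the components of one page.
scores = {"header": 0.97, "nav": 0.91, "ad-slot": 0.12, "footer": 0.64}
print(flag_defects(scores, cutoff=0.7))  # ['ad-slot', 'footer']
```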

Figure 7: Deployment validation model.

The supervised learning algorithm takes a set of labeled training images as its training data and uses them to learn the desired function. The learned model is then validated against separate test data. This process of learning from training data and validating against test data is called modeling. The process of assigning each image a pass or fail status is called classification.
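Modeling, validation, and classification can be shown end to end with a deliberately small classifier. The article does not name its algorithm; this sketch swaps in a nearest-centroid classifier for brevity, and the two image features (fraction of blank pixels, edge density) and all samples are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

Sample = Tuple[Sequence[float], str]  # (feature vector, "pass" or "fail")


def fit_centroids(train: List[Sample]) -> Dict[str, List[float]]:
    """Modeling: learn the mean feature vector (centroid) of each label."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for x, label in train:
        acc = sums.setdefault(label, [0.0] * len(x))
        for i, v in enumerate(x):
            acc[i] += v
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def classify(centroids: Dict[str, List[float]], x: Sequence[float]) -> str:
    """Classification: assign the label whose centroid is nearest to x."""
    return min(centroids, key=lambda label: math.dist(centroids[label], x))


def accuracy(centroids: Dict[str, List[float]], test: List[Sample]) -> float:
    """Validation: fraction of held-out samples that are classified correctly."""
    return sum(classify(centroids, x) == label for x, label in test) / len(test)


train = [((0.1, 0.8), "pass"), ((0.2, 0.7), "pass"), ((0.9, 0.1), "fail"), ((0.8, 0.2), "fail")]
test = [((0.15, 0.9), "pass"), ((0.95, 0.05), "fail")]
model = fit_centroids(train)
print(accuracy(model, test), classify(model, (0.85, 0.3)))  # 1.0 fail
```

Keeping the test samples out of training is what makes the accuracy figure meaningful.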

Figure 8 shows an example of HTML injections by third-party JavaScript. We can leverage machine learning to validate HTML injections.

Figure 8: HTML injection by third-party JavaScript.

The corresponding HTML code inside the iframe object after the HTML injection is shown in Figure 9.

Figure 9: HTML code generated by injection.

The high-level view of the image segmentation and processing workflow using machine learning is shown in Figure 10.

Figure 10: The image segmentation workflow.

Alerting and monitoring

Machine learning can also be applied to alerting and monitoring. For example, using disk usage data from Prometheus, machine learning can predict an hour in advance when disk usage will hit its upper limit. The system can then notify the team early about potential production issues. For proactive production monitoring, we need to understand the key performance indicator (KPI) metrics. Predicting disk outages also requires tuning the algorithms in production: production data feeds back into the model to refine the data set, so prediction accuracy improves over time based on what has worked in the past.

Figure 11 is an example of disk usage monitoring using machine learning prediction.

Figure 11: Disk usage monitoring with prediction.
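A one-hour-ahead disk forecast can be sketched with a least-squares trend line. This is a minimal sketch, assuming time-sorted `(timestamp, usage)` samples and a known capacity; the sample values are hypothetical, and a production model would also need to handle seasonality and noise.

```python
from typing import List, Optional, Tuple

Sample = Tuple[float, float]  # (unix time in seconds, disk usage in GB), sorted by time


def fit_line(samples: List[Sample]) -> Tuple[float, float]:
    """Ordinary least-squares fit of usage = slope * t + intercept."""
    n = len(samples)
    mean_t = sum(t for t, _ in samples) / n
    mean_u = sum(u for _, u in samples) / n
    var_t = sum((t - mean_t) ** 2 for t, _ in samples)
    if var_t == 0:
        raise ValueError("need samples at two or more distinct timestamps")
    cov = sum((t - mean_t) * (u - mean_u) for t, u in samples)
    slope = cov / var_t
    return slope, mean_u - slope * mean_t


def seconds_until_full(samples: List[Sample], capacity_gb: float) -> Optional[float]:
    """Forecast seconds from the latest sample until usage reaches capacity.
    Returns None when the trend is flat or decreasing."""
    slope, intercept = fit_line(samples)
    if slope <= 0:
        return None
    t_full = (capacity_gb - intercept) / slope
    return max(0.0, t_full - samples[-1][0])


def should_alert(samples: List[Sample], capacity_gb: float, horizon_s: float = 3600.0) -> bool:
    """Alert when the disk is forecast to fill within the horizon (one hour by default)."""
    eta = seconds_until_full(samples, capacity_gb)
    return eta is not None and eta <= horizon_s


# Samples every 10 minutes: usage grows 1 GB per sample on an 80 GB disk.
samples = [(600.0 * i, 70.0 + i) for i in range(6)]
print(round(seconds_until_full(samples, 80.0)), should_alert(samples, 80.0))  # 3000 True
```

Prometheus itself offers a similar linear forecast through its `predict_linear()` query function, for example `predict_linear(node_filesystem_avail_bytes[6h], 3600) < 0`, which can serve as a baseline before adding a learned model.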

Testing

Traditionally, regression testing uses information extracted from build metadata to determine which tests to run for a particular code change. By analyzing the build dependencies in the code, one can determine every test that needs to run. However, if a change touches one of the low-level libraries, rerunning every test for every dependent package is inefficient.

Machine learning also plays an important role in making testing more efficient. We can use machine learning to calculate the probability that a given test will find a regression for a particular code change, and then make an informed decision to rule out tests that are extremely unlikely to uncover an issue. This offers a new way to select and prioritize tests.

The approach uses a large data set containing test results for historical code changes and applies standard machine learning techniques. During training, the system learns a model based on features derived from previous code changes and the test results for those changes. When the system analyzes a new code change, it applies the learned model to that change. For any particular test, the model predicts the likelihood that the test will find a regression. The system can then select the tests that are most likely to fail for that change and run them first.

We will often encounter unstable (flaky) tests that do not produce consistent results: their outcomes change from pass to fail even though the code under test has not changed. The model may not be able to predict the outcomes of such tests accurately, so it is important to segregate unstable tests from the machine learning data.
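Segregating unstable tests and ordering the rest by predicted failure probability can be sketched as follows. The run history, test names, and probabilities are hypothetical; in practice the probabilities would come from a trained model rather than a hard-coded dictionary.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple

Run = Tuple[str, str, bool]  # (test name, code revision, passed?)


def find_flaky(runs: Iterable[Run]) -> Set[str]:
    """A test is unstable if it both passed and failed on the same revision."""
    outcomes: Dict[Tuple[str, str], Set[bool]] = defaultdict(set)
    for test, revision, passed in runs:
        outcomes[(test, revision)].add(passed)
    return {test for (test, _), seen in outcomes.items() if len(seen) == 2}


def prioritize(fail_prob: Dict[str, float], flaky: Set[str]) -> List[str]:
    """Order stable tests by predicted failure probability, highest first.
    Flaky tests are set aside instead of being trusted for prediction."""
    stable = [t for t in fail_prob if t not in flaky]
    return sorted(stable, key=lambda t: fail_prob[t], reverse=True)


runs = [
    ("test_login", "abc1", True), ("test_login", "abc1", False),
    ("test_cart", "abc1", True), ("test_cart", "abc2", False),
    ("test_search", "abc2", True),
]
flaky = find_flaky(runs)
fail_prob = {"test_login": 0.9, "test_cart": 0.7, "test_search": 0.05, "test_checkout": 0.4}
print(flaky, prioritize(fail_prob, flaky))
# {'test_login'} ['test_cart', 'test_checkout', 'test_search']
```

Note that `test_cart` is not flagged: it failed on a different revision, which is exactly the kind of signal the model should learn from.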

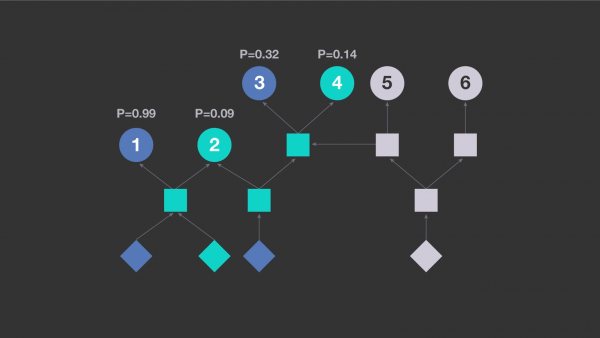

The test dependency diagram based on six test cases at the top level is shown in Figure 12.

Figure 12: Test dependency diagram.

With predictive test case training, machine learning can predict the probability of failure for each test case and prioritize test cases using probability (see Figure 13).

Figure 13: Test cases with probability of failure.

Conclusion

Machine learning can be applied to GitOps to evaluate failures quickly. Developers can fix failures and commit code back to the CI/CD pipeline iteratively, and the process avoids spending computational resources on unnecessary builds and tests. Many organizations face limitations as their release cycles get shorter. When deployment results depend on external components and change dynamically, GitOps combined with machine learning can help teams make better decisions and predictions based on historical data, using predictive models built from that data.
