Redis In Depth (2): Cluster Architecture

source link: https://blog.duval.top/2021/04/26/Redis-%E6%B7%B1%E5%85%A5-2-%E9%9B%86%E7%BE%A4%E6%9E%B6%E6%9E%84/


A summary of several Redis cluster deployment architectures commonly used in production.

Master-Replica Architecture

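The simplest Redis cluster architecture is master-replica. Applications write data through the master node and read data from the replica nodes, which gives read/write splitting, as shown in the figure below: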

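Drawbacks:

- Once the master node goes down, the cluster can no longer serve writes;

- Scaling is limited: the number of replicas cannot grow without bound, because replicating data to every replica would eventually overwhelm the master.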
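The read/write split described above is usually done on the client side. The sketch below is a hypothetical, network-free router: the `ReadWriteRouter` class and its small set of read-only commands are illustrative assumptions and do not come from any Redis client library. Writes go to the master, and reads are spread round-robin across the replicas.

```python
from __future__ import annotations

from itertools import cycle

# Hypothetical, deliberately incomplete set of read-only commands, for illustration only.
READ_COMMANDS = {"GET", "MGET", "EXISTS", "TTL", "HGET", "HGETALL", "LRANGE", "SMEMBERS"}


class ReadWriteRouter:
    """Picks the node address a command should go to; performs no network I/O."""

    def __init__(self, master: str, replicas: list[str]) -> None:
        self.master = master
        # Round-robin iterator over replicas, or None when there are no replicas.
        self._replicas = cycle(replicas) if replicas else None

    def route(self, command: str) -> str:
        if command.upper() in READ_COMMANDS and self._replicas is not None:
            return next(self._replicas)
        # Anything not known to be read-only is treated as a write and sent to the master.
        return self.master


router = ReadWriteRouter("127.0.0.1:6379", ["127.0.0.1:6380", "127.0.0.1:6381"])
print(router.route("SET"))  # 127.0.0.1:6379
print(router.route("get"))  # 127.0.0.1:6380
print(router.route("GET"))  # 127.0.0.1:6381
```

Replication is asynchronous, so a read routed to a replica can briefly return data that is older than the last write on the master.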

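- Master node 6379:

```bash
## Run Redis in the background
daemonize yes
## Port to listen on (without it, every instance would use the default 6379)
port 6379
## Data directory for logs, the PID file, RDB and AOF files
dir "./data/6379"
## Log file name
logfile "6379.log"
## PID file
pidfile "redis_6379.pid"
## Password
requirepass mypassword
## Password for authenticating to a master, needed if this node is later demoted to a replica (e.g. by Sentinel)
masterauth mypassword
```

- Replica node 6380:

```bash
## Run Redis in the background
daemonize yes
## Port to listen on
port 6380
## Data directory for logs, the PID file, RDB and AOF files
dir "./data/6380"
## Log file name
logfile "6380.log"
## PID file
pidfile "redis_6380.pid"
## Replicate from the master
replicaof 127.0.0.1 6379
## Password for authenticating to the master (required because the master sets requirepass)
masterauth mypassword
## Password
requirepass mypassword
```

- Replica node 6381:

```bash
## Run Redis in the background
daemonize yes
## Port to listen on
port 6381
## Data directory for logs, the PID file, RDB and AOF files
dir "./data/6381"
## Log file name
logfile "6381.log"
## PID file
pidfile "redis_6381.pid"
## Replicate from the master
replicaof 127.0.0.1 6379
## Password for authenticating to the master (required because the master sets requirepass)
masterauth mypassword
## Password
requirepass mypassword
```

Multi-level Master-Replica Architecture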

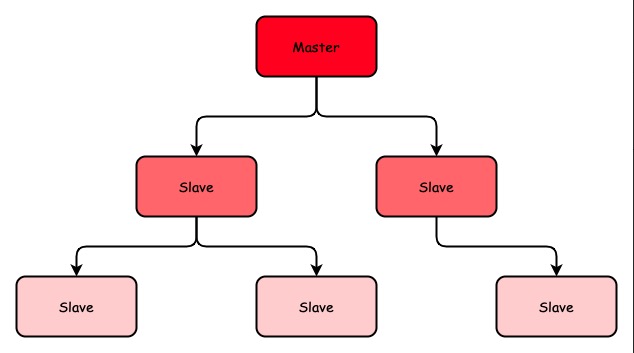

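An improvement on the master-replica architecture is the multi-level master-replica architecture. Some replicas replicate from other replicas instead of from the master, which keeps replication from becoming a bottleneck on the master: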

Drawbacks:

- Once the master node goes down, the cluster can no longer serve writes;

- Each extra level in the chain adds replication lag.
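The trade-off can be made concrete with a small model. The sketch below describes a replication topology as a hypothetical `parent` map in which each node points at the node it replicates from. The extra ports 6382 and 6383 are also hypothetical. The model counts how many replicas pull directly from the master and how many hops each node is from it. Every hop is one more asynchronous copy, and that is where the extra lag comes from.

```python
from __future__ import annotations


def direct_replicas(parent: dict[str, str], node: str) -> int:
    """Number of nodes that replicate directly from `node`."""
    return sum(1 for p in parent.values() if p == node)


def depth(parent: dict[str, str], node: str) -> int:
    """Replication hops between `node` and the root master (the master has depth 0)."""
    hops = 0
    while node in parent:
        node = parent[node]
        hops += 1
    return hops


# Flat: all four replicas sync from the master 6379.
flat = {"6380": "6379", "6381": "6379", "6382": "6379", "6383": "6379"}
# Multi-level: 6382 and 6383 sync from 6380 and 6381 instead of from the master.
chained = {"6380": "6379", "6381": "6379", "6382": "6380", "6383": "6381"}

for name, topo in (("flat", flat), ("chained", chained)):
    worst = max(depth(topo, n) for n in topo)
    print(name, direct_replicas(topo, "6379"), worst)
# flat 4 1
# chained 2 2
```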

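Configuration: the same as the master-replica architecture above. The only difference is that a lower-level replica points `replicaof` at an upper-level replica instead of at the master.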

Sentinel Architecture

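Neither of the two architectures above makes the master highly available. Once the master fails, the cluster can no longer accept writes, so neither architecture suits services with high reliability requirements.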

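The Sentinel architecture was introduced to make the cluster highly available. Sentinel is a separate process that monitors the state of Redis server processes. When the Redis master goes down, the Sentinels elect a new master from the replicas and write the change into the configuration files. Clients ask the Sentinels for the address of the current master and use it to read and write data.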

The architecture is shown below:

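Drawbacks:

- The Redis replicas only act as backups and do not serve clients;

- The single Redis master handles all reads and writes, which makes it a performance bottleneck.

The Sentinel architecture is therefore suited only to services that need high availability but carry a relatively light load.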

The Redis master-replica configuration is the same as above. In addition, you need to configure a Sentinel cluster, for example:

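- sentinel-26379.conf

```bash
## Run in the background
daemonize yes
## Sentinel port (without it, every Sentinel would use the default 26379)
port 26379
## Data directory for the PID file, logs, etc.
dir "./data/26379"
## PID file name
pidfile "sentinel-26379.pid"
## Log file name
logfile "26379.log"
## sentinel-master is the name given to the monitored master;
## 127.0.0.1 6379 is the master's IP and port;
## 2 is the quorum: the number of Sentinels that must agree the master is down before a failover starts.
## It is usually set to more than half of the number of Sentinel nodes.
sentinel monitor sentinel-master 127.0.0.1 6379 2
## Password of the monitored Redis nodes (needed because they set requirepass)
sentinel auth-pass sentinel-master mypassword
```

- sentinel-26380.conf

```bash
## Run in the background
daemonize yes
## Sentinel port
port 26380
## Data directory for the PID file, logs, etc.
dir "./data/26380"
## PID file name
pidfile "sentinel-26380.pid"
## Log file name
logfile "26380.log"
## sentinel-master is the name given to the monitored master;
## 127.0.0.1 6379 is the master's IP and port;
## 2 is the quorum: the number of Sentinels that must agree the master is down before a failover starts.
## It is usually set to more than half of the number of Sentinel nodes.
sentinel monitor sentinel-master 127.0.0.1 6379 2
## Password of the monitored Redis nodes (needed because they set requirepass)
sentinel auth-pass sentinel-master mypassword
```

- sentinel-26381.conf

```bash
## Run in the background
daemonize yes
## Sentinel port
port 26381
## Data directory for the PID file, logs, etc.
dir "./data/26381"
## PID file name
pidfile "sentinel-26381.pid"
## Log file name
logfile "26381.log"
## sentinel-master is the name given to the monitored master;
## 127.0.0.1 6379 is the master's IP and port;
## 2 is the quorum: the number of Sentinels that must agree the master is down before a failover starts.
## It is usually set to more than half of the number of Sentinel nodes.
sentinel monitor sentinel-master 127.0.0.1 6379 2
## Password of the monitored Redis nodes (needed because they set requirepass)
sentinel auth-pass sentinel-master mypassword
```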
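The quorum in `sentinel monitor` only decides when the master counts as objectively down. According to the Redis Sentinel documentation, the failover itself also needs one Sentinel to be elected leader. That election needs votes from a majority of Sentinels, or from `quorum` Sentinels if the quorum is set higher than a majority. The function below is a simplified model of these two checks. Its names are hypothetical.

```python
def majority(n: int) -> int:
    """Smallest number of votes that is more than half of n."""
    return n // 2 + 1


def can_failover(total_sentinels: int, quorum: int, agree_down: int, leader_votes: int) -> bool:
    """Simplified model of the two Sentinel checks that precede a failover.

    agree_down:   Sentinels that currently consider the master unreachable.
    leader_votes: votes collected by the Sentinel trying to lead the failover.
    """
    objectively_down = agree_down >= quorum
    authorized = leader_votes >= max(quorum, majority(total_sentinels))
    return objectively_down and authorized


# Three Sentinels with quorum 2, as in the configuration above.
print(can_failover(3, 2, agree_down=2, leader_votes=2))  # True
print(can_failover(3, 2, agree_down=1, leader_votes=3))  # False
print(can_failover(5, 2, agree_down=2, leader_votes=2))  # False
```

The last case shows why the quorum is not "the number of Sentinels needed to fail over". With 5 Sentinels and quorum 2, two Sentinels can flag the master as down, but the failover still needs 3 votes.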

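When a master-replica switchover happens, Sentinel automatically rewrites the master/replica settings in redis-xxx.conf and sentinel-xxx.conf. It also appends some cluster state to the end of sentinel-xxx.conf. For example, after Redis 6380 became the master, the following lines were appended to sentinel-26379.conf: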

```bash
user default on nopass ~* &* +@all
sentinel myid bf4850100209679d8287350390e79ee0556df18d
sentinel config-epoch sentinel-master 1
sentinel leader-epoch sentinel-master 1
sentinel current-epoch 1
sentinel known-replica sentinel-master 127.0.0.1 6381
sentinel known-replica sentinel-master 127.0.0.1 6379
sentinel known-sentinel sentinel-master 127.0.0.1 26381 a827326703986e36617dfe5f16ef601cb8e983cd
sentinel known-sentinel sentinel-master 127.0.0.1 26380 95e1de44a18a86774f922ff96cf364b03d1f835e
```

Cluster Architecture

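Redis Cluster divides the whole keyspace into slots and spreads them evenly across several small Redis master-replica groups (shards). Each shard is a master with its own replicas, and the nodes of all shards communicate with one another over the gossip protocol. The architecture is shown below: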

Slot Partitioning and Redirection

The cluster is divided into 16384 slots, which are spread evenly across the cluster. Each shard is responsible for only a subset of the slots.
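The Redis Cluster specification defines a key's slot as CRC16(key) mod 16384, using the CRC16-XMODEM variant (polynomial 0x1021, initial value 0). There is one twist: if the key contains a non-empty `{...}` section (a hash tag), only that section is hashed. The sketch below reimplements this in plain Python for illustration only. In practice, `CLUSTER KEYSLOT <key>` returns the same number from a live node.

```python
def crc16_xmodem(data: bytes) -> int:
    """Bitwise CRC16-XMODEM: polynomial 0x1021, initial value 0, no reflection."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def hash_tag(key: bytes) -> bytes:
    """Return the part of the key that is actually hashed, honouring {hash tags}."""
    start = key.find(b"{")
    if start != -1:
        end = key.find(b"}", start + 1)
        # Only a non-empty tag counts; with "{}" the whole key is hashed.
        if end != -1 and end != start + 1:
            return key[start + 1:end]
    return key


def key_slot(key: str) -> int:
    return crc16_xmodem(hash_tag(key.encode())) % 16384


print(key_slot("name1"))       # 12933, matching the redirect in the example below
print(key_slot("{d1}:name"))   # 8604
print(key_slot("{d1}:name2"))  # 8604
```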

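After connecting, a Redis Cluster client keeps a local copy of the slot assignment. If the cluster changes and the cached mapping no longer matches, the client's request is redirected to the node that now owns the slot. For example: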

```bash
## Note: redis-cli only follows redirections when started with -c
redis-cli -c -a mypassword -p 6804
Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.
127.0.0.1:6804> get name1
-> Redirected to slot [12933] located at 127.0.0.1:6802
"\"ddddd334333\""
```

Gossip Protocol

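All Redis nodes in the cluster communicate over the cluster bus using the gossip protocol. By default, the bus port is the Redis client port plus 10000. Gossip message types include PING, PONG, FAIL and MEET.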

For details, see 《一万字详解 Redis Cluster Gossip 协议》 ("Redis Cluster Gossip Protocol Explained in Ten Thousand Words").
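The "FAIL message received" line in the failover log further below comes from gossip. Each node marks an unresponsive peer as PFAIL (possible failure) after `cluster-node-timeout` and piggybacks that opinion on its PING/PONG messages. According to the cluster specification, a node promotes PFAIL to FAIL once a majority of masters have reported the peer within a validity window. That window is the node timeout multiplied by a factor the specification calls FAIL_REPORT_VALIDITY_MULT, which is 2. The function below is a simplified model of that rule. The `reports` dictionary is a hypothetical stand-in, not a Redis data structure.

```python
from __future__ import annotations

NODE_TIMEOUT_MS = 15000        # cluster-node-timeout used in this article's config
FAIL_REPORT_VALIDITY_MULT = 2  # validity factor described in the cluster specification


def should_mark_fail(
    cluster_masters: int,
    i_see_pfail: bool,
    i_am_master: bool,
    reports: dict[str, int],
    now_ms: int,
) -> bool:
    """Decide whether to promote a peer from PFAIL to FAIL (simplified model).

    reports maps the ID of another master to the time (ms) at which it last
    reported the peer as PFAIL or FAIL through gossip.
    """
    if not i_see_pfail:
        return False
    window = NODE_TIMEOUT_MS * FAIL_REPORT_VALIDITY_MULT
    fresh = sum(1 for t in reports.values() if now_ms - t <= window)
    if i_am_master:
        fresh += 1  # this node's own PFAIL opinion counts as one master's report
    return fresh >= cluster_masters // 2 + 1


# View of master 6801 after 6800 stops responding, in a cluster with 3 masters.
print(should_mark_fail(3, True, True, {"6802": 100_000}, now_ms=110_000))  # True
print(should_mark_fail(3, True, True, {}, now_ms=110_000))                 # False
print(should_mark_fail(3, True, True, {"6802": 50_000}, now_ms=110_000))   # False: stale report
```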

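Wrapping part of a key in curly braces (a hash tag) controls which slot it maps to, because only the part inside the braces is hashed. This is commonly used for multi-key commands, which require all of their keys to be in the same slot: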

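```bash
127.0.0.1:6802> mset {d1}:name name1 {d1}:name2 name2
-> Redirected to slot [8604] located at 127.0.0.1:6801
OK
127.0.0.1:6801> keys *
1) "{d1}:name2"
2) "name"
3) "{d1}:name"
127.0.0.1:6801> get {d1}:name2
"name2"
```

When a master fails, one of its replicas is promoted through an election:

- After detecting that its master is down, the replica waits for a short delay, then increments its currentEpoch and broadcasts a FAILOVER_AUTH_REQUEST message;

- The other masters reply with FAILOVER_AUTH_ACK, and each master votes at most once per epoch;

- The replica counts the FAILOVER_AUTH_ACK messages it receives. If it gets ACKs from more than half of the masters (which is why the number of masters is usually odd), it promotes itself to master and broadcasts a PONG message to notify all nodes.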
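The Redis Cluster specification gives the election delay as 500 ms, plus a random 0 to 500 ms, plus 1000 ms per rank. Rank 0 is the replica with the most recent replication offset. This is consistent with the `Start of election delayed for 544 milliseconds (rank #0 ...)` line in the log further below. The sketch models the delay and the majority check only. The function names are hypothetical, and no messages are exchanged.

```python
import random


def election_delay_ms(rank: int, rng: random.Random) -> int:
    """Delay before a replica starts its election (formula from the cluster spec).

    rank 0 is the replica with the most up-to-date replication offset.
    """
    return 500 + rng.randint(0, 500) + rank * 1000


def wins_election(acks: int, masters: int) -> bool:
    """Promotion needs FAILOVER_AUTH_ACKs from a majority of the masters that serve
    slots (the failed master still counts towards that total)."""
    return acks >= masters // 2 + 1


rng = random.Random(42)
print(500 <= election_delay_ms(0, rng) <= 1000)  # True
print(wins_election(acks=2, masters=3))          # True
print(wins_election(acks=1, masters=3))          # False
```

The rank term is why the replica with the freshest data usually wins: replicas with older offsets wait at least one extra second per rank before asking for votes.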

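To build the cluster, each node needs its own configuration with cluster mode enabled. For a Redis node on port 6800, the configuration is: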

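```bash
## Run Redis in the background
daemonize yes
## Port
port 6800
## PID file
pidfile /var/run/redis_6800.pid
## Log file
logfile "cluster-6800.log"
## Data directory
dir "./data/cluster-6800/"
## Enable cluster mode
cluster-enabled yes
## Cluster node state file (created and maintained by the Redis node itself; each node needs its own file)
cluster-config-file nodes-6800.conf
## Node timeout in milliseconds: a node unreachable for longer than this is considered failing
cluster-node-timeout 15000
## Addresses to bind to (the "-" prefix means Redis still starts if ::1 is unavailable)
bind 127.0.0.1 -::1
## Protected mode
protected-mode yes
## Enable AOF persistence
appendonly yes
appendfilename "appendonly.aof"
## Password (the redis-cli commands below pass -a mypassword)
requirepass mypassword
## Password replicas use to authenticate to their master
masterauth mypassword
```

For the other nodes, change the port-specific values (port, pidfile, logfile, dir and cluster-config-file) to match each node's port.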
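Because the node configurations differ only in their port-specific values, they are easy to generate. The sketch below renders the directives shown above for ports 6800 to 6805 and writes each one to a hypothetical `conf/redis-<port>.conf`. It also includes `requirepass`/`masterauth`, because the `redis-cli -a mypassword` commands in this article assume password-protected nodes. The file layout and the password are assumptions to adapt.

```python
from __future__ import annotations

from pathlib import Path

TEMPLATE = """\
daemonize yes
port {port}
pidfile /var/run/redis_{port}.pid
logfile "cluster-{port}.log"
dir "./data/cluster-{port}/"
cluster-enabled yes
cluster-config-file nodes-{port}.conf
cluster-node-timeout 15000
bind 127.0.0.1 -::1
protected-mode yes
appendonly yes
appendfilename "appendonly.aof"
requirepass {password}
masterauth {password}
"""


def render(port: int, password: str = "mypassword") -> str:
    """Fill the template with the values for one node."""
    return TEMPLATE.format(port=port, password=password)


def write_configs(ports: range, out_dir: Path) -> list[Path]:
    """Write one config file per port and return the paths written."""
    out_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for port in ports:
        path = out_dir / f"redis-{port}.conf"
        path.write_text(render(port))
        written.append(path)
    return written


if __name__ == "__main__":
    for p in write_configs(range(6800, 6806), Path("conf")):
        print(p)
```

Each node can then be started with `redis-server conf/redis-6800.conf` and so on. The directories named by `dir` must exist beforehand, because Redis will not start if it cannot change into its data directory.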

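Start all of the Redis nodes, then create the cluster (`--cluster-replicas 1` asks for one replica per master):

```bash
redis-cli -a mypassword --cluster create --cluster-replicas 1 127.0.0.1:6800 127.0.0.1:6801 127.0.0.1:6802 127.0.0.1:6803 127.0.0.1:6804 127.0.0.1:6805
```

This example uses 6 nodes (3 masters and 3 replicas). The output of creating the cluster is: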

```bash
>>> Performing hash slots allocation on 6 nodes...
Master[0] -> Slots 0 - 5460
Master[1] -> Slots 5461 - 10922
Master[2] -> Slots 10923 - 16383
Adding replica 127.0.0.1:6804 to 127.0.0.1:6800
Adding replica 127.0.0.1:6805 to 127.0.0.1:6801
Adding replica 127.0.0.1:6803 to 127.0.0.1:6802
>>> Trying to optimize slaves allocation for anti-affinity
[WARNING] Some slaves are in the same host as their master
M: 2130527167330eee433e4a85e23667e1f3e7f18c 127.0.0.1:6800
   slots:[0-5460] (5461 slots) master
M: 838e70efaa99a1e5be69e984548f546f684f3bb4 127.0.0.1:6801
   slots:[5461-10922] (5462 slots) master
M: ef87bb7b2838bcd02a7debd206136817c9e02a97 127.0.0.1:6802
   slots:[10923-16383] (5461 slots) master
S: f6b807ce2328586c86e8ce3190750a9519d99850 127.0.0.1:6803
   replicates 838e70efaa99a1e5be69e984548f546f684f3bb4
S: 6d7bd796300a8b977df1691d4be8927b6c7da20a 127.0.0.1:6804
   replicates ef87bb7b2838bcd02a7debd206136817c9e02a97
S: 80a4eeac5a4f45656af66f77c37fed724ff186e9 127.0.0.1:6805
   replicates 2130527167330eee433e4a85e23667e1f3e7f18c
Can I set the above configuration? (type 'yes' to accept): yes
>>> Nodes configuration updated
>>> Assign a different config epoch to each node
>>> Sending CLUSTER MEET messages to join the cluster
Waiting for the cluster to join
>>> Performing Cluster Check (using node 127.0.0.1:6800)
M: 2130527167330eee433e4a85e23667e1f3e7f18c 127.0.0.1:6800
   slots:[0-5460] (5461 slots) master
   1 additional replica(s)
S: 80a4eeac5a4f45656af66f77c37fed724ff186e9 127.0.0.1:6805
   slots: (0 slots) slave
   replicates 2130527167330eee433e4a85e23667e1f3e7f18c
M: 838e70efaa99a1e5be69e984548f546f684f3bb4 127.0.0.1:6801
   slots:[5461-10922] (5462 slots) master
   1 additional replica(s)
M: ef87bb7b2838bcd02a7debd206136817c9e02a97 127.0.0.1:6802
   slots:[10923-16383] (5461 slots) master
   1 additional replica(s)
S: f6b807ce2328586c86e8ce3190750a9519d99850 127.0.0.1:6803
   slots: (0 slots) slave
   replicates 838e70efaa99a1e5be69e984548f546f684f3bb4
S: 6d7bd796300a8b977df1691d4be8927b6c7da20a 127.0.0.1:6804
   slots: (0 slots) slave
   replicates ef87bb7b2838bcd02a7debd206136817c9e02a97
[OK] All nodes agree about slots configuration.
>>> Check for open slots...
>>> Check slots coverage...
[OK] All 16384 slots covered.
```

Failover: Redis 6800 goes down and 6805 is elected master

After 6800 is stopped, `cluster nodes` shows 6800 as `master,fail` and 6805 as the master for slots 0-5460:

```bash
127.0.0.1:6804> cluster nodes
ef87bb7b2838bcd02a7debd206136817c9e02a97 127.0.0.1:6802@16802 master - 0 1619599971259 3 connected 10923-16383
f6b807ce2328586c86e8ce3190750a9519d99850 127.0.0.1:6803@16803 slave 838e70efaa99a1e5be69e984548f546f684f3bb4 0 1619599972264 2 connected
2130527167330eee433e4a85e23667e1f3e7f18c 127.0.0.1:6800@16800 master,fail - 1619599400385 1619599396000 1 disconnected
838e70efaa99a1e5be69e984548f546f684f3bb4 127.0.0.1:6801@16801 master - 0 1619599970000 2 connected 5461-10922
80a4eeac5a4f45656af66f77c37fed724ff186e9 127.0.0.1:6805@16805 master - 0 1619599973268 7 connected 0-5460
6d7bd796300a8b977df1691d4be8927b6c7da20a 127.0.0.1:6804@16804 myself,slave ef87bb7b2838bcd02a7debd206136817c9e02a97 0 1619599972000 3 connected
```

Election log on 6805:

```bash
62735:S 28 Apr 2021 16:43:17.110 # Connection with master lost.
62735:S 28 Apr 2021 16:43:17.111 * Caching the disconnected master state.
62735:S 28 Apr 2021 16:43:17.111 * Reconnecting to MASTER 127.0.0.1:6800
62735:S 28 Apr 2021 16:43:17.113 * MASTER <-> REPLICA sync started
62735:S 28 Apr 2021 16:43:17.114 # Error condition on socket for SYNC: Connection refused
62735:S 28 Apr 2021 16:43:17.317 * Connecting to MASTER 127.0.0.1:6800
62735:S 28 Apr 2021 16:43:17.321 * MASTER <-> REPLICA sync started
62735:S 28 Apr 2021 16:43:17.322 # Error condition on socket for SYNC: Connection refused
### (part of the log omitted)...
62735:S 28 Apr 2021 16:43:36.483 * Connecting to MASTER 127.0.0.1:6800
62735:S 28 Apr 2021 16:43:36.501 * MASTER <-> REPLICA sync started
62735:S 28 Apr 2021 16:43:36.508 # Error condition on socket for SYNC: Connection refused
62735:S 28 Apr 2021 16:43:36.625 * FAIL message received from 838e70efaa99a1e5be69e984548f546f684f3bb4 about 2130527167330eee433e4a85e23667e1f3e7f18c
62735:S 28 Apr 2021 16:43:36.625 # Cluster state changed: fail
62735:S 28 Apr 2021 16:43:36.709 # Start of election delayed for 544 milliseconds (rank #0, offset 23072).
62735:S 28 Apr 2021 16:43:37.314 # Starting a failover election for epoch 7.
62735:S 28 Apr 2021 16:43:37.325 # Failover election won: I'm the new master.
62735:S 28 Apr 2021 16:43:37.326 # configEpoch set to 7 after successful failover
62735:M 28 Apr 2021 16:43:37.326 * Discarding previously cached master state.
62735:M 28 Apr 2021 16:43:37.327 # Setting secondary replication ID to 044c1d04537e505407a6f80634583698e24e0b94, valid up to offset: 23073. New replication ID is fa8198769c1b1214d9b16ee07deccde429e98c3a
62735:M 28 Apr 2021 16:43:37.331 # Cluster state changed: ok
```

Adding a Redis Node

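For example, to add a new node on port 6806 (the first address is the new node, the second is any node already in the cluster):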

```bash
~/Applications/redis-6.2.1 redis-cli -a mypassword --cluster add-node 127.0.0.1:6806 127.0.0.1:6800
Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.
>>> Adding node 127.0.0.1:6806 to cluster 127.0.0.1:6800
>>> Performing Cluster Check (using node 127.0.0.1:6800)
S: 2130527167330eee433e4a85e23667e1f3e7f18c 127.0.0.1:6800
   slots: (0 slots) slave
   replicates 80a4eeac5a4f45656af66f77c37fed724ff186e9
M: 80a4eeac5a4f45656af66f77c37fed724ff186e9 127.0.0.1:6805
   slots:[0-5460] (5461 slots) master
   1 additional replica(s)
S: f6b807ce2328586c86e8ce3190750a9519d99850 127.0.0.1:6803
   slots: (0 slots) slave
   replicates 838e70efaa99a1e5be69e984548f546f684f3bb4
M: 838e70efaa99a1e5be69e984548f546f684f3bb4 127.0.0.1:6801
   slots:[5461-10922] (5462 slots) master
   1 additional replica(s)
M: ef87bb7b2838bcd02a7debd206136817c9e02a97 127.0.0.1:6802
   slots:[10923-16383] (5461 slots) master
   1 additional replica(s)
S: 6d7bd796300a8b977df1691d4be8927b6c7da20a 127.0.0.1:6804
   slots: (0 slots) slave
   replicates ef87bb7b2838bcd02a7debd206136817c9e02a97
[OK] All nodes agree about slots configuration.
>>> Check for open slots...
>>> Check slots coverage...
[OK] All 16384 slots covered.
>>> Send CLUSTER MEET to node 127.0.0.1:6806 to make it join the cluster.
[OK] New node added correctly.
```

The new node automatically becomes a master, but no slots are assigned to it yet. Also note that 6800, which is back online, has rejoined as a replica of 6805:

```bash
127.0.0.1:6802> cluster nodes
6d7bd796300a8b977df1691d4be8927b6c7da20a 127.0.0.1:6804@16804 slave ef87bb7b2838bcd02a7debd206136817c9e02a97 0 1619600907256 3 connected
80a4eeac5a4f45656af66f77c37fed724ff186e9 127.0.0.1:6805@16805 master - 0 1619600904000 7 connected 0-5460
6d52725b2d1f4bb561dacabe285091c11d17cbc5 127.0.0.1:6806@16806 master - 0 1619600906000 0 connected
2130527167330eee433e4a85e23667e1f3e7f18c 127.0.0.1:6800@16800 slave 80a4eeac5a4f45656af66f77c37fed724ff186e9 0 1619600906000 7 connected
ef87bb7b2838bcd02a7debd206136817c9e02a97 127.0.0.1:6802@16802 myself,master - 0 1619600904000 3 connected 10923-16383
838e70efaa99a1e5be69e984548f546f684f3bb4 127.0.0.1:6801@16801 master - 0 1619600906248 2 connected 5461-10922
f6b807ce2328586c86e8ce3190750a9519d99850 127.0.0.1:6803@16803 slave 838e70efaa99a1e5be69e984548f546f684f3bb4 0 1619600908264 2 connected
```

Resharding

Move slots to the new node with `--cluster reshard`. Here 3000 slots are moved to 6806, which is entered as the receiving node, and all existing masters are used as sources:

```bash
~/Applications/redis-6.2.1 redis-cli -a mypassword --cluster reshard 127.0.0.1:6800
Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.
>>> Performing Cluster Check (using node 127.0.0.1:6800)
S: 2130527167330eee433e4a85e23667e1f3e7f18c 127.0.0.1:6800
   slots: (0 slots) slave
   replicates 80a4eeac5a4f45656af66f77c37fed724ff186e9
M: 6d52725b2d1f4bb561dacabe285091c11d17cbc5 127.0.0.1:6806
   slots: (0 slots) master
M: 80a4eeac5a4f45656af66f77c37fed724ff186e9 127.0.0.1:6805
   slots:[0-5460] (5461 slots) master
   1 additional replica(s)
S: f6b807ce2328586c86e8ce3190750a9519d99850 127.0.0.1:6803
   slots: (0 slots) slave
   replicates 838e70efaa99a1e5be69e984548f546f684f3bb4
M: 838e70efaa99a1e5be69e984548f546f684f3bb4 127.0.0.1:6801
   slots:[5461-10922] (5462 slots) master
   1 additional replica(s)
M: ef87bb7b2838bcd02a7debd206136817c9e02a97 127.0.0.1:6802
   slots:[10923-16383] (5461 slots) master
   1 additional replica(s)
S: 6d7bd796300a8b977df1691d4be8927b6c7da20a 127.0.0.1:6804
   slots: (0 slots) slave
   replicates ef87bb7b2838bcd02a7debd206136817c9e02a97
[OK] All nodes agree about slots configuration.
>>> Check for open slots...
>>> Check slots coverage...
[OK] All 16384 slots covered.
How many slots do you want to move (from 1 to 16384)? 3000
What is the receiving node ID? 6d52725b2d1f4bb561dacabe285091c11d17cbc5
Please enter all the source node IDs.
Type 'all' to use all the nodes as source nodes for the hash slots.
Type 'done' once you entered all the source nodes IDs.
Source node #1: all
# (part of the output omitted)
Moving slot 11917 from ef87bb7b2838bcd02a7debd206136817c9e02a97
Moving slot 11918 from ef87bb7b2838bcd02a7debd206136817c9e02a97
Moving slot 11919 from ef87bb7b2838bcd02a7debd206136817c9e02a97
Moving slot 11920 from ef87bb7b2838bcd02a7debd206136817c9e02a97
Moving slot 11921 from ef87bb7b2838bcd02a7debd206136817c9e02a97
Do you want to proceed with the proposed reshard plan (yes/no)? yes
Moving slot 11918 from 127.0.0.1:6802 to 127.0.0.1:6806:
Moving slot 11919 from 127.0.0.1:6802 to 127.0.0.1:6806:
Moving slot 11920 from 127.0.0.1:6802 to 127.0.0.1:6806:
Moving slot 11921 from 127.0.0.1:6802 to 127.0.0.1:6806:
# (part of the output omitted)
```

Result after resharding:

```bash
127.0.0.1:6802> cluster nodes
6d7bd796300a8b977df1691d4be8927b6c7da20a 127.0.0.1:6804@16804 slave ef87bb7b2838bcd02a7debd206136817c9e02a97 0 1619602397000 3 connected
80a4eeac5a4f45656af66f77c37fed724ff186e9 127.0.0.1:6805@16805 master - 0 1619602397000 7 connected 999-5460
6d52725b2d1f4bb561dacabe285091c11d17cbc5 127.0.0.1:6806@16806 master - 0 1619602398000 8 connected 0-998 5461-6461 10923-11921
2130527167330eee433e4a85e23667e1f3e7f18c 127.0.0.1:6800@16800 slave 80a4eeac5a4f45656af66f77c37fed724ff186e9 0 1619602398274 7 connected
ef87bb7b2838bcd02a7debd206136817c9e02a97 127.0.0.1:6802@16802 myself,master - 0 1619602396000 3 connected 11922-16383
838e70efaa99a1e5be69e984548f546f684f3bb4 127.0.0.1:6801@16801 master - 0 1619602399281 2 connected 6462-10922
f6b807ce2328586c86e8ce3190750a9519d99850 127.0.0.1:6803@16803 slave 838e70efaa99a1e5be69e984548f546f684f3bb4 0 1619602397000 2 connected
```
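To check the outcome of a reshard, it helps to total the slots each master owns. The sketch below parses `CLUSTER NODES` output lines. Each line holds the ID, the address `ip:port@cluster-bus-port`, flags, the master ID, ping/pong timestamps, the config epoch and the link state, followed by slot ranges. The sketch then sums the slots per master. It is a simplified parser: it handles plain slot ranges and skips the bracketed entries that Redis shows for slots in the middle of a migration. The sample input is the final output above, copied verbatim.

```python
from __future__ import annotations


def slot_count(ranges: list[str]) -> int:
    """Count slots in entries such as '0-998' or '5'."""
    total = 0
    for r in ranges:
        if r.startswith("["):
            continue  # slot being imported/migrated, e.g. "[1234->-<node id>]"
        lo, _, hi = r.partition("-")
        total += int(hi or lo) - int(lo) + 1
    return total


def slots_per_master(cluster_nodes: str) -> dict[str, int]:
    """Map 'ip:port' of every master to the number of slots it serves."""
    result = {}
    for line in cluster_nodes.strip().splitlines():
        fields = line.split()
        addr, flags = fields[1], fields[2].split(",")
        if "master" in flags:
            ip_port = addr.split("@")[0]  # drop the cluster bus port (client port + 10000)
            result[ip_port] = slot_count(fields[8:])
    return result


OUTPUT = """
6d7bd796300a8b977df1691d4be8927b6c7da20a 127.0.0.1:6804@16804 slave ef87bb7b2838bcd02a7debd206136817c9e02a97 0 1619602397000 3 connected
80a4eeac5a4f45656af66f77c37fed724ff186e9 127.0.0.1:6805@16805 master - 0 1619602397000 7 connected 999-5460
6d52725b2d1f4bb561dacabe285091c11d17cbc5 127.0.0.1:6806@16806 master - 0 1619602398000 8 connected 0-998 5461-6461 10923-11921
2130527167330eee433e4a85e23667e1f3e7f18c 127.0.0.1:6800@16800 slave 80a4eeac5a4f45656af66f77c37fed724ff186e9 0 1619602398274 7 connected
ef87bb7b2838bcd02a7debd206136817c9e02a97 127.0.0.1:6802@16802 myself,master - 0 1619602396000 3 connected 11922-16383
838e70efaa99a1e5be69e984548f546f684f3bb4 127.0.0.1:6801@16801 master - 0 1619602399281 2 connected 6462-10922
f6b807ce2328586c86e8ce3190750a9519d99850 127.0.0.1:6803@16803 slave 838e70efaa99a1e5be69e984548f546f684f3bb4 0 1619602397000 2 connected
"""

counts = slots_per_master(OUTPUT)
print(counts)
print(sum(counts.values()))  # 16384
```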
