Ultra-fast generative visual intelligence model creates images in just 2 seconds

source link: https://techxplore.com/news/2024-02-ultra-fast-generative-visual-intelligence.html

February 22, 2024

by National Research Council of Science and Technology

ETRI's researchers have unveiled a technology that combines generative AI and visual intelligence to create images from text inputs in just 2 seconds, propelling the field of ultra-fast generative visual intelligence.

Electronics and Telecommunications Research Institute (ETRI) announced the public release of five models. These include three 'KOALA' models, which generate images from text inputs five times faster than existing methods, and two conversational visual-language 'Ko-LLaVA' models, which can answer questions about images or videos.

The 'KOALA' model uses knowledge distillation to cut the parameter count from the 2.56B (2.56 billion) of the publicly available model to 700M (700 million). A high number of parameters typically means more computation, leading to longer processing times and higher operating costs. The researchers shrank the model to roughly a third of its original size and made high-resolution image generation twice as fast as before and five times faster than DALL-E 3.
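As a rough illustration of the idea behind knowledge distillation, the sketch below trains a tiny "student" to reproduce the outputs of a fixed "teacher". It is not ETRI's training code: the toy `teacher` and `student` functions, the single student weight, the learning rate, and the sample inputs are all assumptions chosen only to show the mechanism.

```python
# Toy knowledge distillation: a small "student" learns to mimic a fixed "teacher".
# Everything here is illustrative; none of it is taken from the KOALA code base.

def teacher(x: float) -> float:
    """Stand-in for a large pretrained model (hypothetical)."""
    return 3.0 * x

def student(x: float, w: float) -> float:
    """Stand-in for a much smaller model with a single weight w."""
    return w * x

def distillation_loss(inputs, w: float) -> float:
    """Mean squared error between student and teacher outputs."""
    return sum((student(x, w) - teacher(x)) ** 2 for x in inputs) / len(inputs)

def distill(inputs, w: float = 0.0, lr: float = 0.05, steps: int = 200) -> float:
    """Plain gradient descent on the distillation loss with respect to w."""
    for _ in range(steps):
        # d/dw of mean((w*x - t)^2) is mean(2 * (w*x - t) * x)
        grad = sum(2 * (student(x, w) - teacher(x)) * x for x in inputs) / len(inputs)
        w -= lr * grad
    return w

inputs = [-2.0, -1.0, 0.5, 1.0, 2.0]
w_trained = distill(inputs)
print(f"student weight after distillation: {w_trained:.4f}")
print(f"remaining loss: {distillation_loss(inputs, w_trained):.2e}")
```

In a real text-to-image setting the teacher and student would be full neural networks and the loss would typically compare their predictions (and possibly intermediate features) on training data, but the principle of fitting a smaller model to a larger model's outputs is the same.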

Amid intense domestic and international competition in text-to-image generation, ETRI has considerably reduced the model size (1.7B (Large), 1B (Base), 700M (Small)) and brought generation time down to around 2 seconds, allowing the models to run on low-cost GPUs with only 8GB of memory.
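A back-of-the-envelope calculation helps put these sizes in context. The sketch below uses only the parameter counts quoted in the article; the choice of 16-bit weights (2 bytes per parameter) is an assumption, and the estimate covers the quoted weights alone, not any other pipeline components or the memory used during generation.

```python
# Parameter counts quoted in the article.
REFERENCE_PARAMS = 2.56e9  # the public model that KOALA was distilled from
KOALA_PARAMS = {"Large": 1.7e9, "Base": 1.0e9, "Small": 700e6}

BYTES_PER_PARAM_FP16 = 2  # assumption: weights stored as 16-bit floats
GIB = 1024 ** 3

def size_ratio(params: float) -> float:
    """Fraction of the reference model's parameter count."""
    return params / REFERENCE_PARAMS

def weight_memory_gib(params: float, bytes_per_param: int = BYTES_PER_PARAM_FP16) -> float:
    """Approximate memory needed just to hold the weights."""
    return params * bytes_per_param / GIB

for name, params in KOALA_PARAMS.items():
    print(
        f"KOALA {name}: {size_ratio(params):.0%} of the reference size, "
        f"about {weight_memory_gib(params):.2f} GiB of weights in fp16"
    )
```

Under these assumptions the quoted weights of all three variants fit well within 8GB, which is consistent with the article's claim, although actual memory use depends on the full pipeline and image resolution.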

ETRI's three 'KOALA' models, developed in-house, have been released in the HuggingFace environment.

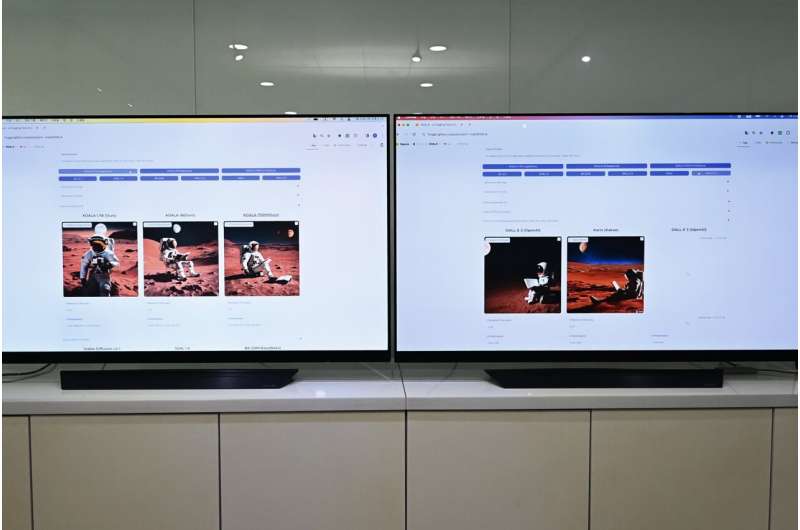

In practice, when the research team input the sentence "a picture of an astronaut reading a book under the moon on Mars," the ETRI-developed KOALA 700M model created the image in just 1.6 seconds, significantly faster than Kakao Brain's Karlo (3.8 seconds), OpenAI's DALL-E 2 (12.3 seconds), and DALL-E 3 (13.7 seconds).
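The relative speed-ups implied by these timings can be worked out directly. The short sketch below uses only the four figures reported for this single prompt; the function and variable names are illustrative, and the results describe this one measurement rather than a general benchmark.

```python
# Generation times in seconds, as reported in the article for one prompt.
TIMES = {
    "KOALA 700M": 1.6,
    "Karlo": 3.8,
    "DALL-E 2": 12.3,
    "DALL-E 3": 13.7,
}

def speedup_over(baseline: str, reference: str = "KOALA 700M") -> float:
    """How many times faster the reference model is than the baseline."""
    return TIMES[baseline] / TIMES[reference]

for model in TIMES:
    if model != "KOALA 700M":
        print(f"KOALA 700M vs {model}: {speedup_over(model):.2f}x faster")
```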

ETRI also launched a website where users can directly try and compare a total of 9 models: the two publicly available Stable Diffusion models, BK-SDM, Karlo, DALL-E 2, DALL-E 3, and the three KOALA models.

Furthermore, the research team unveiled the conversational visual-language model 'Ko-LLaVA,' which adds visual intelligence to conversational AI like ChatGPT. This model can retrieve images or videos and perform question-answering in Korean about them.

'Ko-LLaVA' builds on the open-source LLaVA (Large Language and Vision Assistant), which offers image interpretation capabilities at the level of GPT-4. LLaVA was developed in an international joint research project between the University of Wisconsin-Madison and ETRI and was presented at the prestigious AI conference NeurIPS'23.

Building on the LLaVA model, which is emerging as an alternative among multimodal models that handle images, the researchers are conducting follow-up research to improve Korean language understanding and to introduce unprecedented video interpretation capabilities.

Additionally, ETRI pre-released its own compact Korean language understanding and generation model (KEByT5). The released models (330M (Small), 580M (Base), 1.23B (Large)) apply token-free technology capable of handling neologisms and words not seen during training. Training speed improved by more than 2.7 times, and inference speed by more than 1.4 times.

The research team anticipates a gradual shift in the generative AI market from text-centric generative models to multimodal generative models, along with an emerging trend toward smaller, more efficient models as competition over model size continues.
