Fighting deepfakes, shallowfakes and media manipulation

source link: https://techxplore.com/news/2024-01-deepfakes-shallowfakes-media.html


January 30, 2024


Photo, audio and video technologies have advanced significantly in recent years, making it easier to create convincing fake multimedia content, like politicians singing popular songs or saying silly things to get a laugh or a click. With a few easily accessible applications and some practice, the average person can alter the face and voice of just about anyone.

But University of Maryland Assistant Professor of Computer Science Nirupam Roy says media manipulation isn't just fun and games: a bit of video and audio editing can quickly lead to life-changing consequences in today's world. Using increasingly sophisticated technologies like artificial intelligence and machine learning, bad actors can blur the line between fiction and reality more easily than ever.

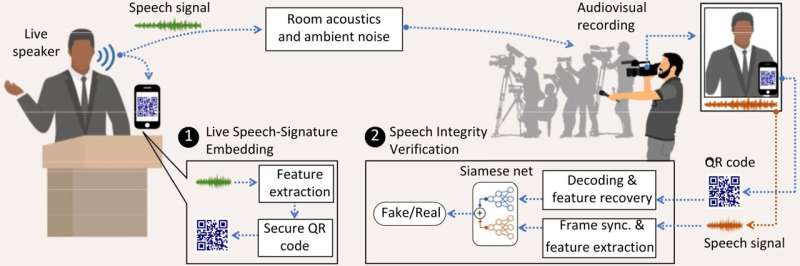

To combat this growing threat, Roy is developing TalkLock, a cryptographic QR code-based system that can verify whether content has been edited from its original form.
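The article does not describe TalkLock's internals, so what follows is only a generic illustration of cryptographic edit detection, not Roy's method. It is a minimal Python sketch, assuming the recording is available as raw bytes and split into fixed-length segments. The functions `tag_segments` and `find_tampered`, the 4-byte segment size and the shared secret key are all hypothetical. For simplicity it uses HMAC-SHA256 from the standard library. A deployed system would more plausibly use public-key signatures, so that anyone can check a clip without holding the secret. Encoding the tags into a QR code is omitted.

```python
import hashlib
import hmac

# Hypothetical segment length in bytes; real audio would use much longer segments.
CHUNK = 4


def tag_segments(samples: bytes, key: bytes, chunk: int = CHUNK) -> list[str]:
    """Return one HMAC-SHA256 tag (hex) per fixed-length segment of the recording."""
    tags = []
    for start in range(0, len(samples), chunk):
        segment = samples[start:start + chunk]
        # Bind the segment's offset into the tag so segments cannot be reordered unnoticed.
        msg = start.to_bytes(8, "big") + segment
        tags.append(hmac.new(key, msg, hashlib.sha256).hexdigest())
    return tags


def find_tampered(samples: bytes, key: bytes, tags: list[str], chunk: int = CHUNK) -> list[int]:
    """Return indices of segments whose recomputed tag differs from the published one."""
    fresh = tag_segments(samples, key, chunk)
    # Constant-time comparison of each recomputed tag against the published tag.
    bad = [i for i, (a, b) in enumerate(zip(fresh, tags)) if not hmac.compare_digest(a, b)]
    # Audio spliced in or cut out changes the segment count; flag the unmatched indices.
    bad.extend(range(min(len(fresh), len(tags)), max(len(fresh), len(tags))))
    return bad
```

For example, tagging `bytes(range(16))` produces four tags. Flipping byte 5 and re-checking flags segment `1`, while truncating the clip to 12 bytes flags the missing segment `3`. Because the tags are computed over the content itself rather than stored in editable metadata, stripping or rewriting the file's metadata does not hide an edit.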

"In 2022, a doctored video of Ukrainian President Volodymyr Zelenskyy was circulated on the internet by hackers. In that fake video, he appeared to tell his soldiers to lay down their arms and give up fighting for Ukraine," said Roy, who holds a joint appointment in the University of Maryland Institute for Advanced Computer Studies.

"The clip was debunked, but there was already an impact on morale, on democracy, on people. You can imagine the consequences if it had stayed up for longer, or if viewers couldn't verify its authenticity."

Roy explained that the fake Zelenskyy video is just one of many maliciously edited video and audio clips circulating on the web, thanks to recent surges in multimedia content called deepfakes and shallowfakes.

"While deepfakes use artificial intelligence to seamlessly alter faces, mimic voices or even fabricate actions in videos, shallowfakes rely less on complex editing techniques and more on connecting partial truths to small lies," Roy said. "Shallowfakes are equally—if not more—dangerous because they end up snowballing and becoming easier for people to accept smaller fabrications as the truth. They make us raise questions about how accurate our usual sources of information can be."

Finding a way to tamper-proof recordings of live events

After observing the fallout from the viral falsified Zelenskyy video and others like it, Roy realized that fighting deepfakes and shallowfakes was essential to preventing the rapid spread of dangerous disinformation.

"We already have a few ways of counteracting deepfakes and other audio-video alterations," Roy said. "Beyond just looking for obvious discrepancies in the videos, websites like Facebook can automatically verify the metadata of uploaded content to see if it's altered or not."

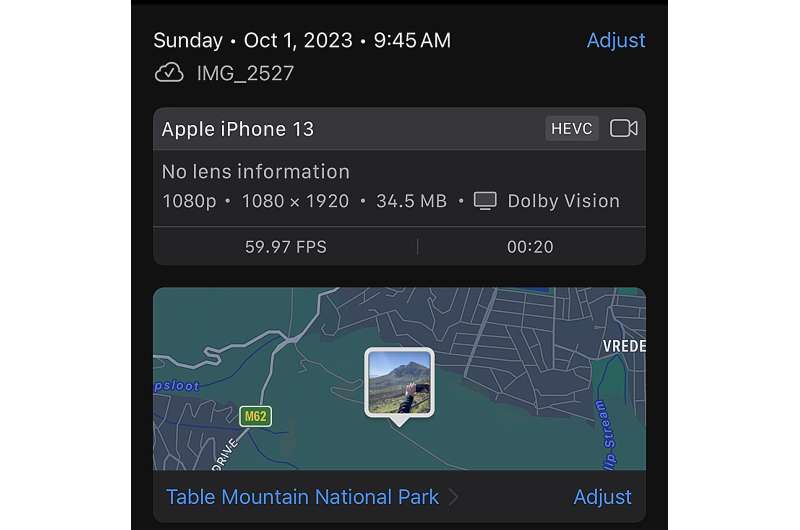

Metadata contains information about a piece of media, such as when it was recorded and on what device. Image editors like Photoshop also leave editing history in a photo's metadata. The metadata embedded in a file can be used to cross-check the origins of the media, but this commonly used authentication technique isn't foolproof.

Some types of metadata can be added manually after a video or audio clip is recorded, while other types can be stripped entirely. These shortfalls make default metadata unreliable as a sole authenticator, especially for recordings of live events.

More information: Irtaza Shahid et al, "Is this my president speaking?" Tamper-proofing Speech in Live Recordings, Proceedings of the 21st Annual International Conference on Mobile Systems, Applications and Services (2023). DOI: 10.1145/3581791.3596862
