Automated Integration with Flask and Kafka and API - DZone

source link: https://dzone.com/articles/application-integration-with-flask-kakfa-and-api-l


Automated Application Integration With Flask, Kafka, and API Logic Server

Create APIs instantly with API Logic Server. Use your IDE to produce and consume messages, and to declare rules for logic and security that are 40X more concise.

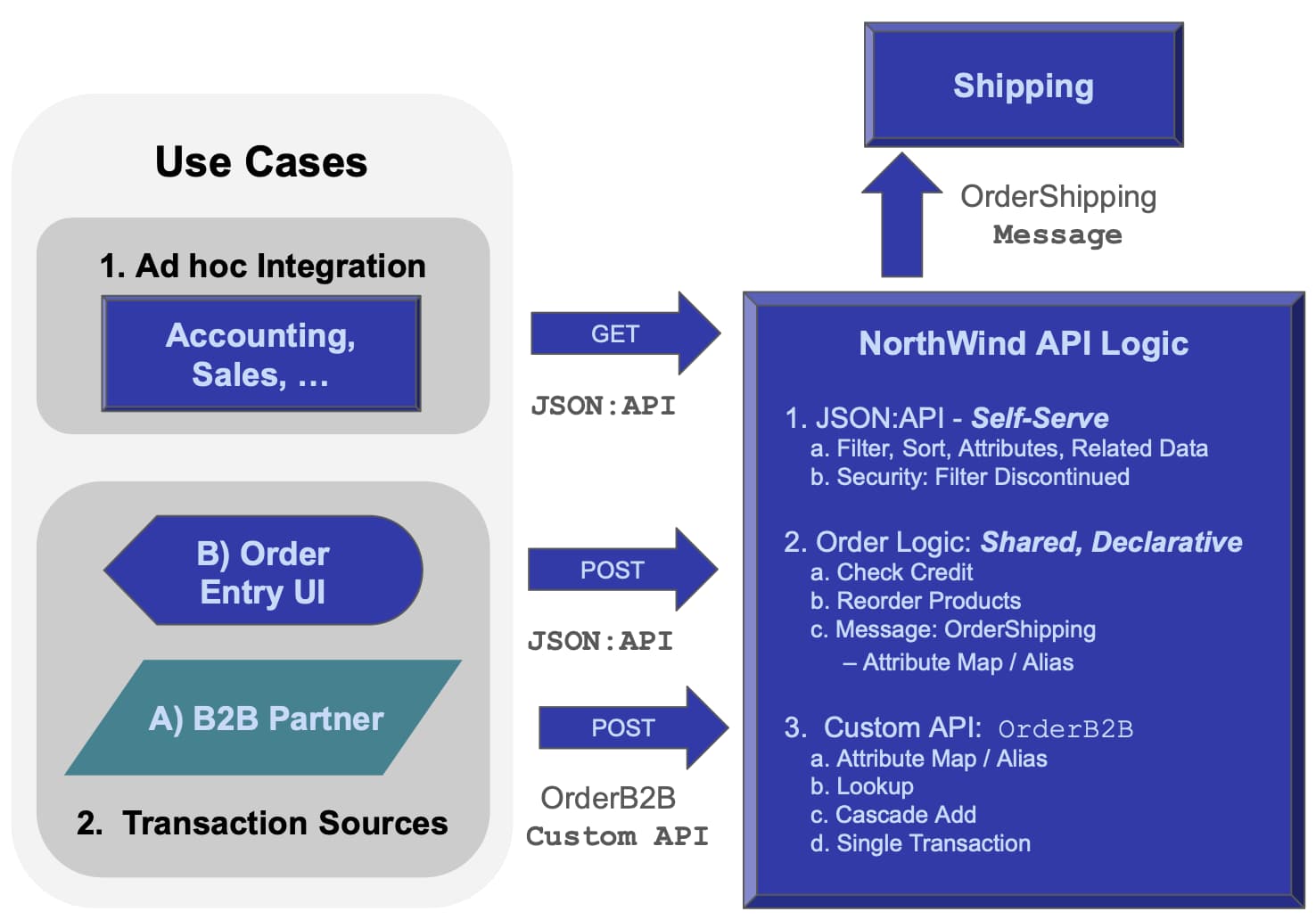

This tutorial illustrates B2B push-style application integration with APIs and internal integration with messages. We have the following use cases:

- Ad Hoc Requests for information (Sales, Accounting) that cannot be anticipated in advance.

- Two Transaction Sources: A) internal Order Entry UI, and B) B2B partner OrderB2B API.

The Northwind API Logic Server provides APIs and logic for both transaction sources:

- Self-Serve APIs to support ad hoc integration and UI dev, providing security (e.g., customers see only their accounts).

- Order Logic: enforcing database integrity and Application Integration (alert shipping).

- A custom API to match an agreed-upon format for B2B partners.

The Shipping API Logic Server listens to Kafka and processes the message.

Key Architectural Requirements: Self-Serve APIs and Shared Logic

This sample illustrates some key architectural considerations:

| Requirement | Poor Practice | Good Practice | Best Practice | Ideal |
|---|---|---|---|---|
| Ad Hoc Integration | ETL | APIs | Self-Serve APIs | Automated Self-Serve APIs |
| Logic | Logic in UI | | Reusable Logic | Declarative Rules .. Extensible with Python |
| Messages | | | Kafka | Kafka Logic Integration |

We'll further expand on these topics as we build the system, but we note some best practices:

APIs should be self-serve, not requiring continuing server development.

- APIs avoid the nightly Extract, Transform, and Load (ETL) overhead.

Logic should be re-used over the UI and API transaction sources.

- Logic in UI controls is undesirable since it cannot be shared with APIs and messages.

Using This Guide

This guide was developed with API Logic Server, which is open-source and available here. The guide shows the highlights of creating the system.

The complete Tutorial in the Appendix contains detailed instructions to create the entire running system. The information here is abbreviated for clarity.

Development Overview

This overview shows the key code and procedures used to create the system above.

We'll be using API Logic Server, which consists of a CLI plus a set of runtimes for automating APIs, logic, messaging, and an admin UI. It's an open-source Python project with a standard pip install.

1. ApiLogicServer Create: Instant Project

The CLI command below creates an ApiLogicProject by reading your schema. The database is Northwind (Customer, Orders, Items, and Product), as shown in the Appendix.

Note: the db_url value is an abbreviation; you normally supply a SQLAlchemy URL. The sample NW SQLite database is included in ApiLogicServer for demonstration purposes.

$ ApiLogicServer create --project_name=ApiLogicProject --db_url=nw-

The created project is executable; it can be opened in an IDE and executed.
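For a database other than the bundled sample, db_url takes a full SQLAlchemy URL. As a small sketch, the helper below builds SQLite URLs in the documented SQLAlchemy form: three slashes precede a relative path, and an absolute POSIX path adds a fourth. The helper name and file paths are hypothetical and not part of ApiLogicServer.

```python
from pathlib import PurePosixPath


def sqlite_url(db_path: str) -> str:
    """Build a SQLAlchemy-style SQLite URL for a POSIX file path.

    Hypothetical helper for illustration; not part of ApiLogicServer.
    """
    path = PurePosixPath(db_path)
    # "sqlite:///" + "relative/path", or "sqlite:///" + "/absolute/path"
    # (the latter yields four slashes in total).
    return f"sqlite:///{path}"


print(sqlite_url("data/nw.sqlite"))        # relative path
print(sqlite_url("/srv/data/nw.sqlite"))   # absolute path
```

Other databases follow the same dialect://user:password@host/database pattern, with the dialect and driver depending on what is installed.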

One command has created meaningful elements of our system: an API for ad hoc integration and an Admin App. Let's examine these below.

API: Ad Hoc Integration

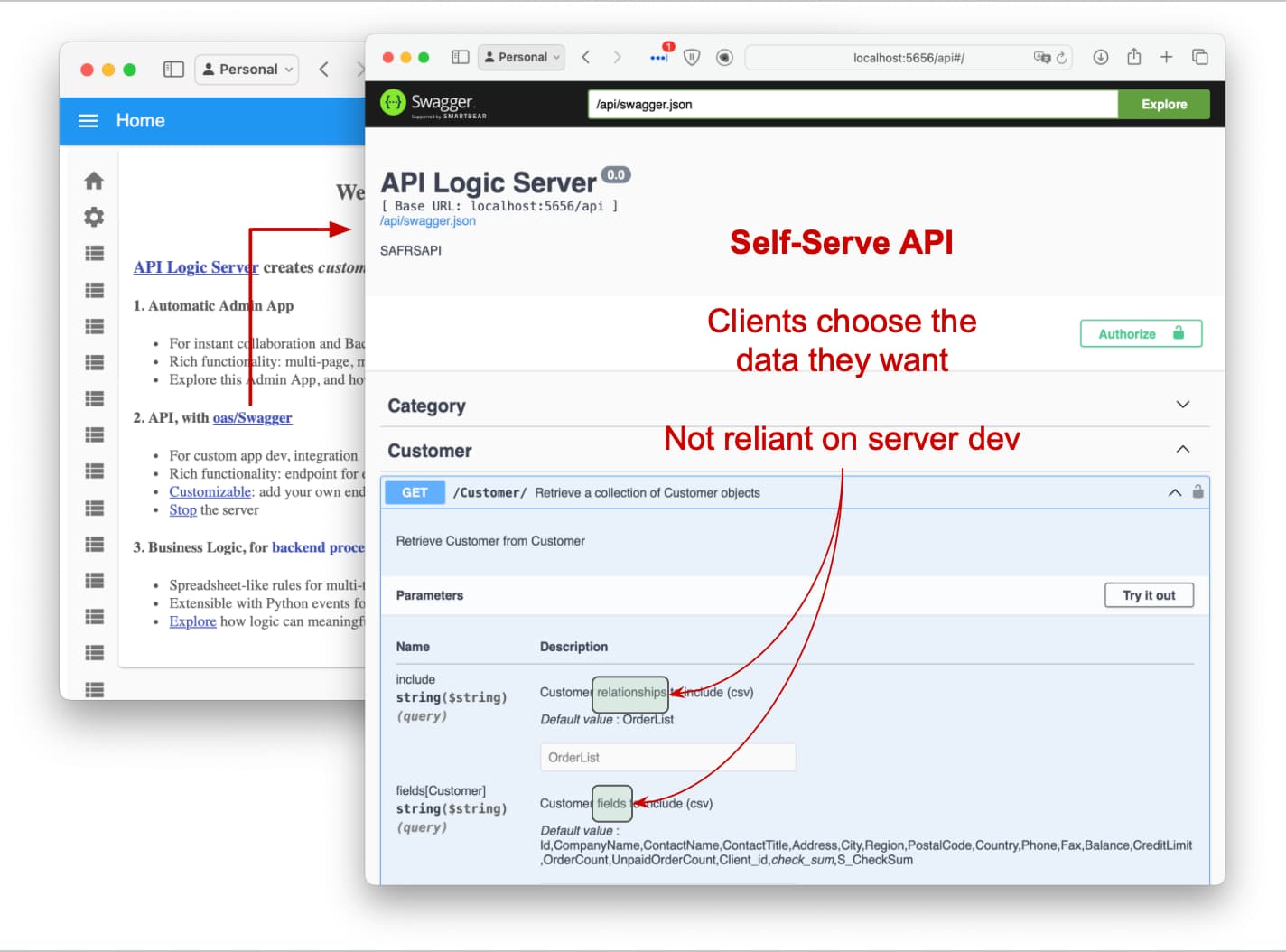

The system creates a JSON API with endpoints for each table, providing filtering, sorting, pagination, optimistic locking, and related data access.

JSON:APIs are self-serve: consumers can select their attributes and related data, eliminating reliance on custom API development.

In this sample, our self-serve API meets our Ad Hoc Integration needs and unblocks Custom UI development.
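To make "self-serve" concrete, here is a minimal sketch of how a consumer might compose a request that selects attributes, pulls in related data, sorts, filters, and paginates. It assumes JSON:API-style query parameters (fields[...], include, sort, filter[...], page[...]). The /api/Customer endpoint, the attribute names, and the OrderList relationship name are also assumptions for illustration, so check the generated API documentation for the exact names your project exposes.

```python
from urllib.parse import urlencode, urlsplit, parse_qs

# Assumed base URL: the article only shows port 5656 and the /api prefix.
BASE_URL = "http://localhost:5656/api"


def build_query(resource, fields=None, include=None, sort=None,
                filters=None, offset=0, limit=10):
    """Compose a JSON:API-style GET URL for one resource collection."""
    params = {"page[offset]": offset, "page[limit]": limit}
    if fields:
        params[f"fields[{resource}]"] = ",".join(fields)   # choose attributes
    if include:
        params["include"] = ",".join(include)              # related data
    if sort:
        params["sort"] = sort                              # "-" means descending
    for attr, value in (filters or {}).items():
        params[f"filter[{attr}]"] = value
    return f"{BASE_URL}/{resource}?{urlencode(params)}"


# Hypothetical attribute and relationship names.
url = build_query("Customer", fields=["Id", "CompanyName", "Balance"],
                  include=["OrderList"], sort="-Balance",
                  filters={"Country": "Germany"}, limit=5)
print(url)
```

The point of the design is that the consumer, not the server team, decides the shape of the response, so no new endpoint is needed for each new question.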

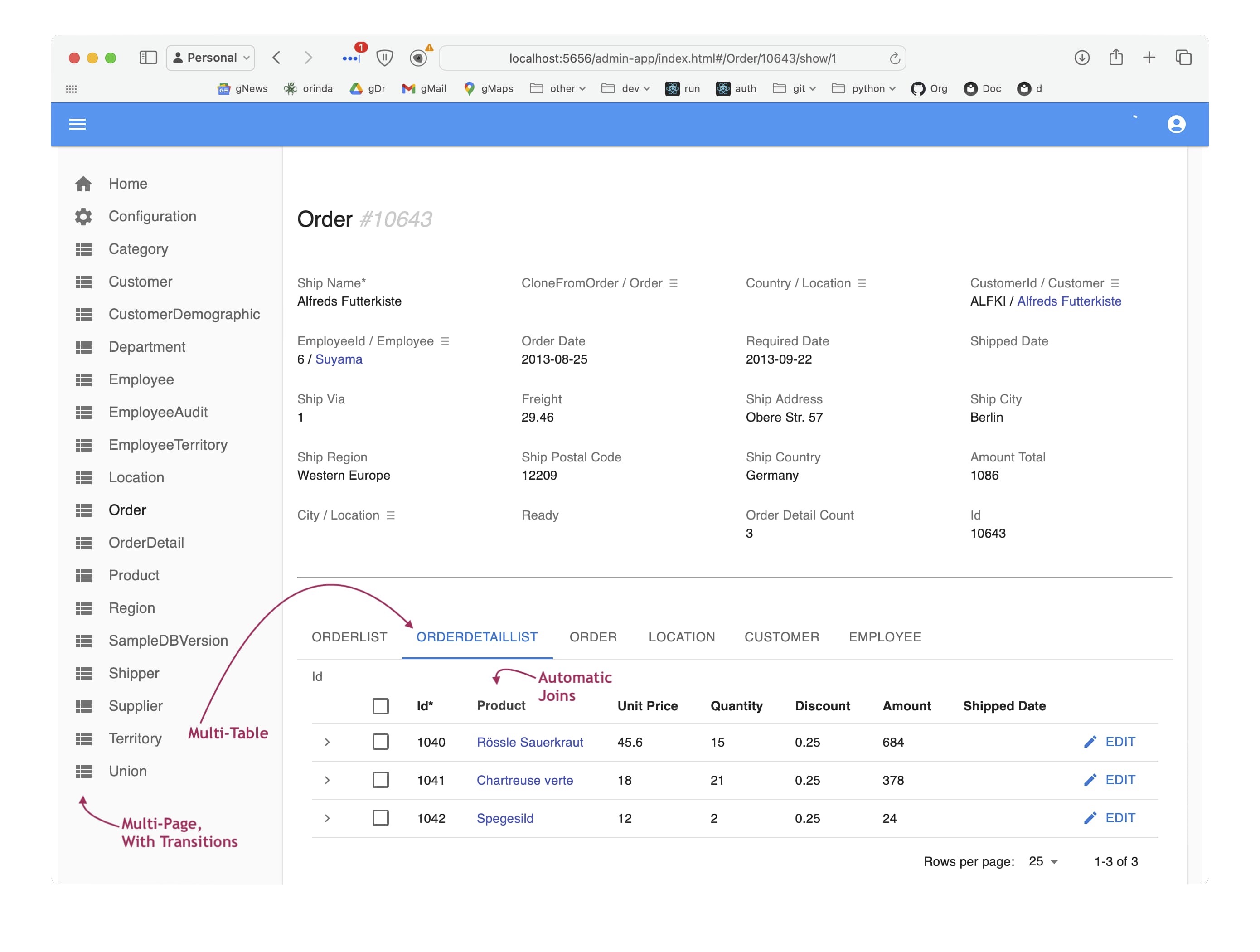

Admin App: Order Entry UI

The create command also creates an Admin App: multi-page, multi-table with automatic joins, ready for business user agile collaboration and back office data maintenance. This complements custom UIs you can create with the API.

Multi-page navigation controls enable users to explore data and relationships. For example, they might click the first Customer and see their Orders and Items:

We created an executable project with one command that completes our ad hoc integration with a self-serve API.

2. Customize: In Your IDE

While API/UI automation is a great start, we now require Custom APIs, Logic, and Security.

Such customizations are added in your IDE, leveraging all its services for code completion, debugging, etc. Let's examine these.

Declare UI Customizations

The admin app is not built with complex HTML and JavaScript. Instead, it is configured with the ui/admin/admin.yml, automatically created from your data model by the ApiLogicServer create command.

You can customize this file in your IDE to control which fields are shown (including joins), hide/show conditions, help text, etc.

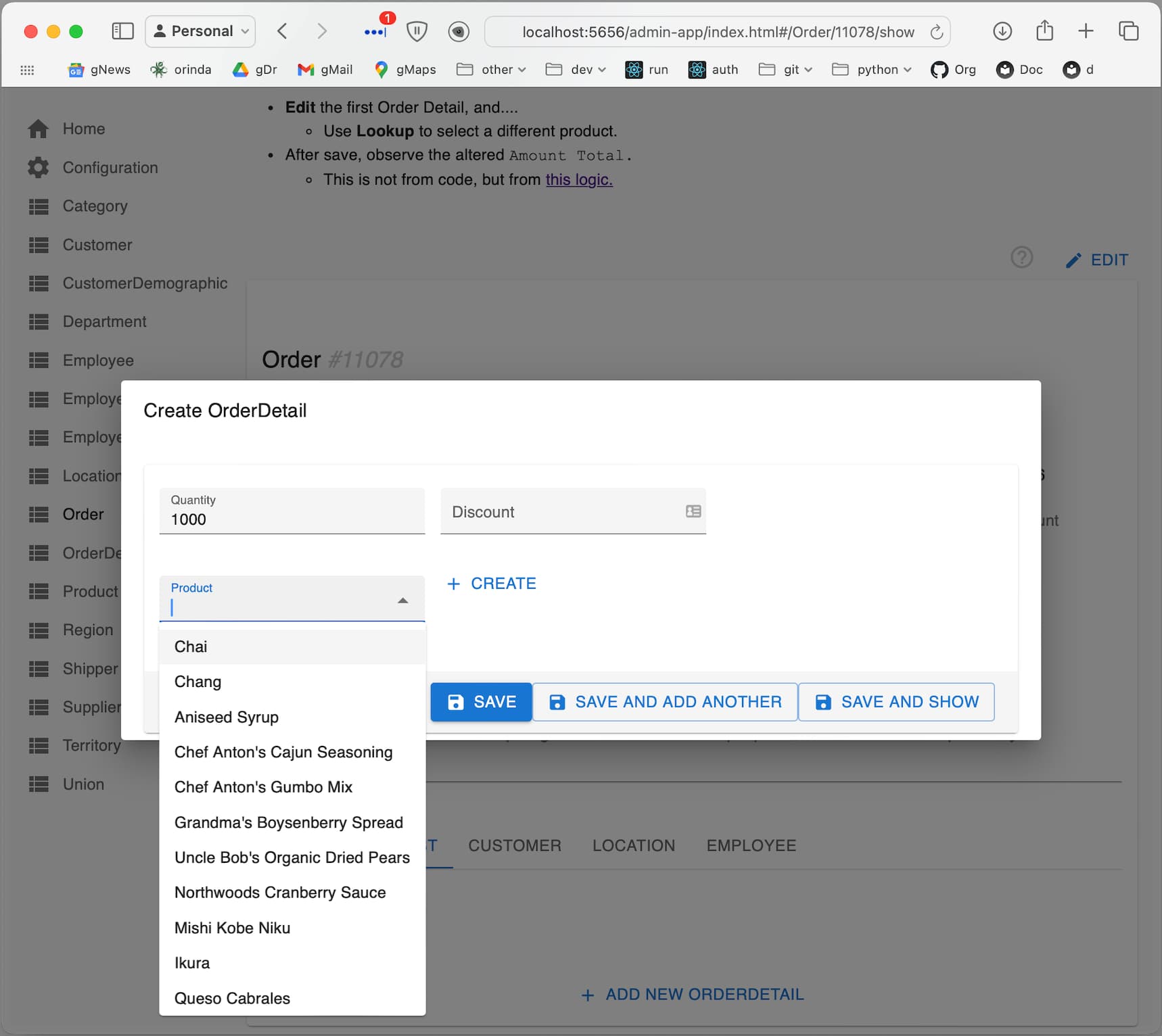

This makes it convenient to use the Admin App to enter an Order and OrderDetails:

Note the automation for automatic joins (Product Name, not ProductId) and lookups (select from a list of Products to obtain the foreign key). If we attempt to order too much Chai, the transaction properly fails due to the Check Credit logic described below.

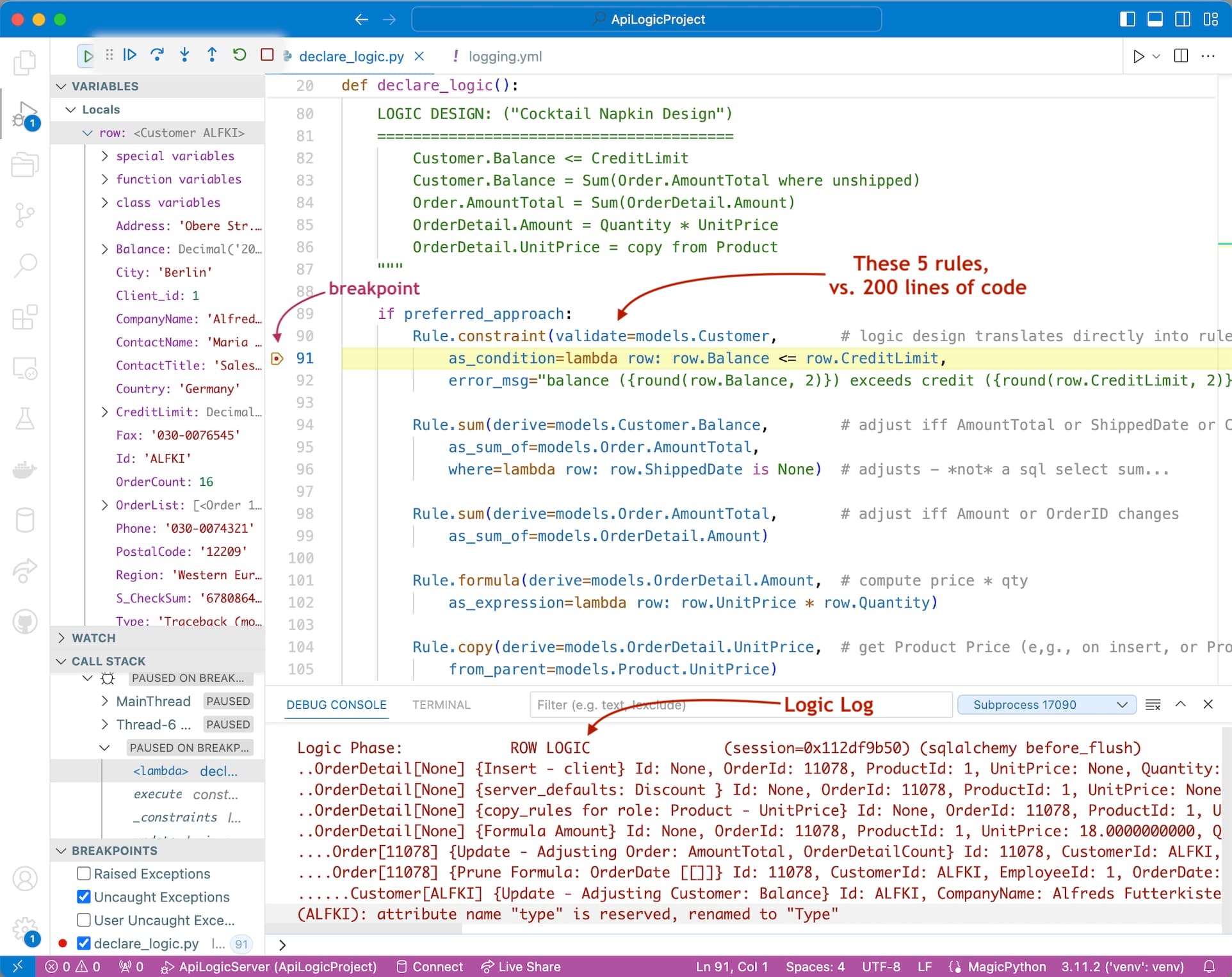

Check Credit Logic: Multi-Table Derivation and Constraint Rules, 40X More Concise.

Such logic (multi-table derivations and constraints) is a significant portion of a system, typically nearly half. API Logic Server provides spreadsheet-like rules that dramatically simplify and accelerate logic development.

The five check credit rules below represent the same logic as 200 lines of traditional procedural code.

Rules are 40X more concise than traditional code, as shown here.

Rules are declared in Python and simplified with IDE code completion. Rules can be debugged using standard logging and the debugger:

Rules operate by handling SQLAlchemy events, so they apply to all ORM access, whether by the API engine or your custom code. Once declared, you don't need to remember to call them, which promotes quality.

The above rules prevented the too-big order with multi-table logic: copying the Product Price, computing the Amount, rolling it up to the AmountTotal and Balance, and checking the credit.

These five rules also govern changing orders, deleting them, picking different parts, and so on: about nine automated transactions in all. Implementing all this by hand would otherwise require about 200 lines of code.
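For contrast, the sketch below hand-codes the same chain of derivations for just one of those transactions, placing a new order. It is plain Python and not the rules API. The class and field names are hypothetical, and treating Balance as the total of unshipped orders is an assumption. Every other transaction (changing a quantity, deleting an order, choosing a different product) would need its own, similar procedure, which is where the procedural line count grows.

```python
from dataclasses import dataclass, field
from decimal import Decimal


@dataclass
class Product:
    name: str
    unit_price: Decimal


@dataclass
class Item:
    product: Product
    quantity: int
    unit_price: Decimal = Decimal("0")
    amount: Decimal = Decimal("0")


@dataclass
class Order:
    items: list = field(default_factory=list)
    amount_total: Decimal = Decimal("0")
    shipped: bool = False


@dataclass
class Customer:
    credit_limit: Decimal
    orders: list = field(default_factory=list)
    balance: Decimal = Decimal("0")


def place_order(customer: Customer, order: Order) -> None:
    """Hand-coded derivations and constraint for the 'add order' case only."""
    for item in order.items:
        item.unit_price = item.product.unit_price       # copy the Product price
        item.amount = item.unit_price * item.quantity   # compute the Amount
    # Roll the item amounts up to the order total.
    order.amount_total = sum((i.amount for i in order.items), Decimal("0"))
    # Roll unshipped order totals up to the balance (assumed definition).
    unshipped = [o for o in customer.orders + [order] if not o.shipped]
    new_balance = sum((o.amount_total for o in unshipped), Decimal("0"))
    if new_balance > customer.credit_limit:             # check the credit
        raise ValueError("balance exceeds credit limit")
    customer.orders.append(order)
    customer.balance = new_balance


# Illustrative values only.
chai = Product("Chai", Decimal("18.00"))
alfki = Customer(credit_limit=Decimal("100.00"))
place_order(alfki, Order(items=[Item(chai, 2)]))
print(alfki.balance)
```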

Rules are a unique and significant innovation, providing meaningful improvements over procedural logic:

| CHARACTERISTIC | PROCEDURAL | DECLARATIVE | WHY IT MATTERS |
|---|---|---|---|
| Reuse | Not Automatic | Automatic - all Use Cases | 40X Code Reduction |
| Invocation | Passive - only if called | Active - call not required | Quality |
| Ordering | Manual | Automatic | Agile Maintenance |
| Optimizations | Manual | Automatic | Agile Design |

For more on the rules, click here.

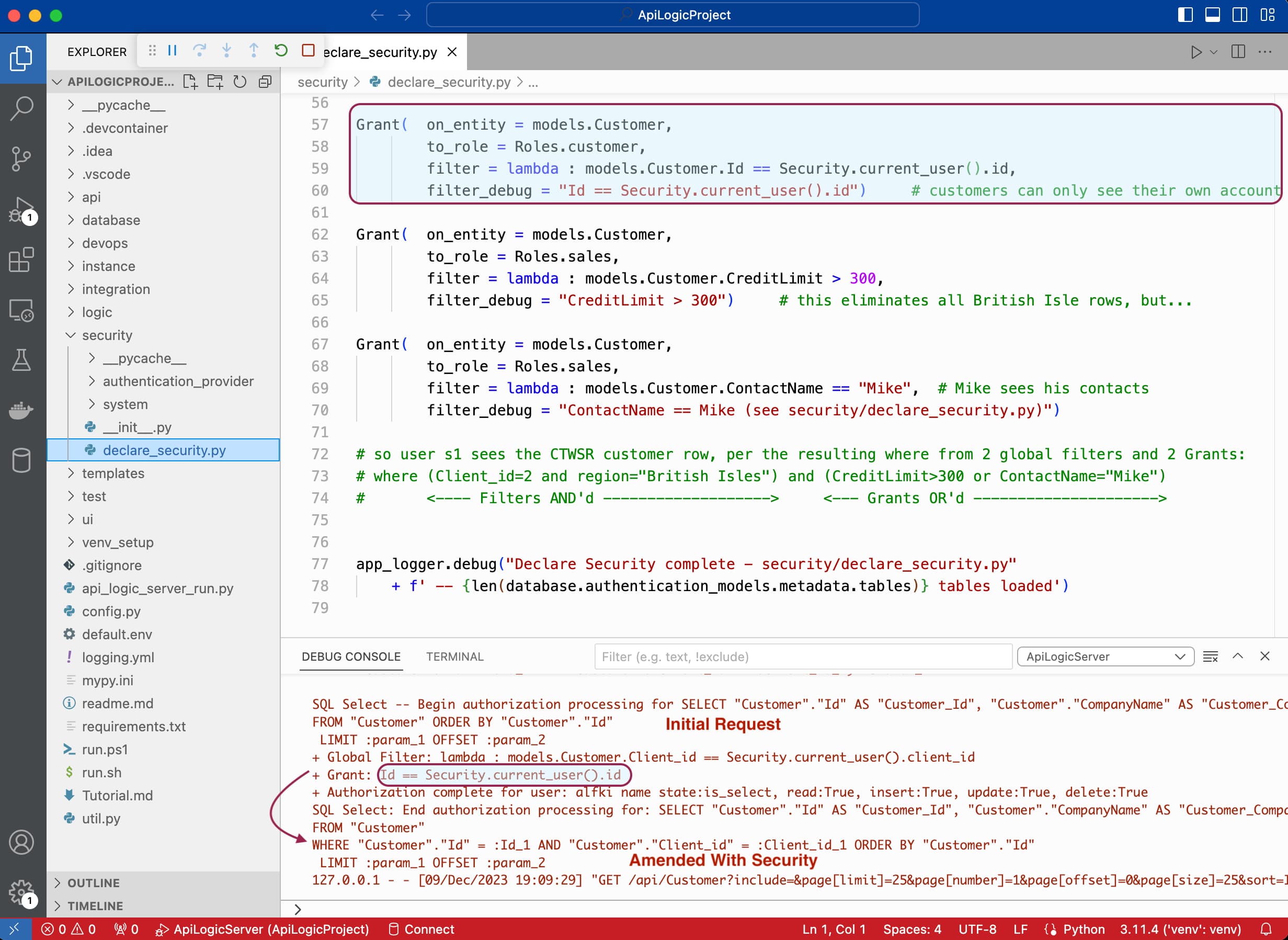

Declare Security: Customers See Only Their Own Row

Declare row-level security using your IDE to edit security/declare_security.py (see screenshot below). An automatically created admin app enables you to configure roles, users, and user roles.

If users now log in as ALFKI (configured with role customer), they see only their customer row. Observe that the console log at the bottom shows how the filter worked.

Declarative row-level security ensures users see only the rows authorized for their roles.
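The idea behind a role-based row filter can be sketched in plain Python. This is not the API Logic Server security API. The grant table, the sales role, and the second customer row are hypothetical and only show how a per-role predicate narrows the rows a user sees.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    id: str
    roles: tuple


# Hypothetical grant table: role name -> predicate(user, row) deciding visibility.
GRANTS = {
    "customer": lambda user, row: row["Id"] == user.id,   # own row only
    "sales": lambda user, row: True,                      # all rows
}


def visible_rows(user: User, rows: list) -> list:
    """Return the rows that at least one of the user's roles may see."""
    predicates = [GRANTS[role] for role in user.roles if role in GRANTS]
    return [row for row in rows if any(p(user, row) for p in predicates)]


customers = [{"Id": "ALFKI"}, {"Id": "ANATR"}]   # illustrative rows
alfki = User(id="ALFKI", roles=("customer",))
print(visible_rows(alfki, customers))
```

In the real system the filter is applied to the database query rather than to a list in memory, so unauthorized rows are never read at all.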

3. Integrate: B2B and Shipping

We now have a running system, an API, logic, security, and a UI. Now, we must integrate with the following:

- B2B partners: We'll create a B2B Custom Resource.

- OrderShipping: We add logic to Send an OrderShipping Message.

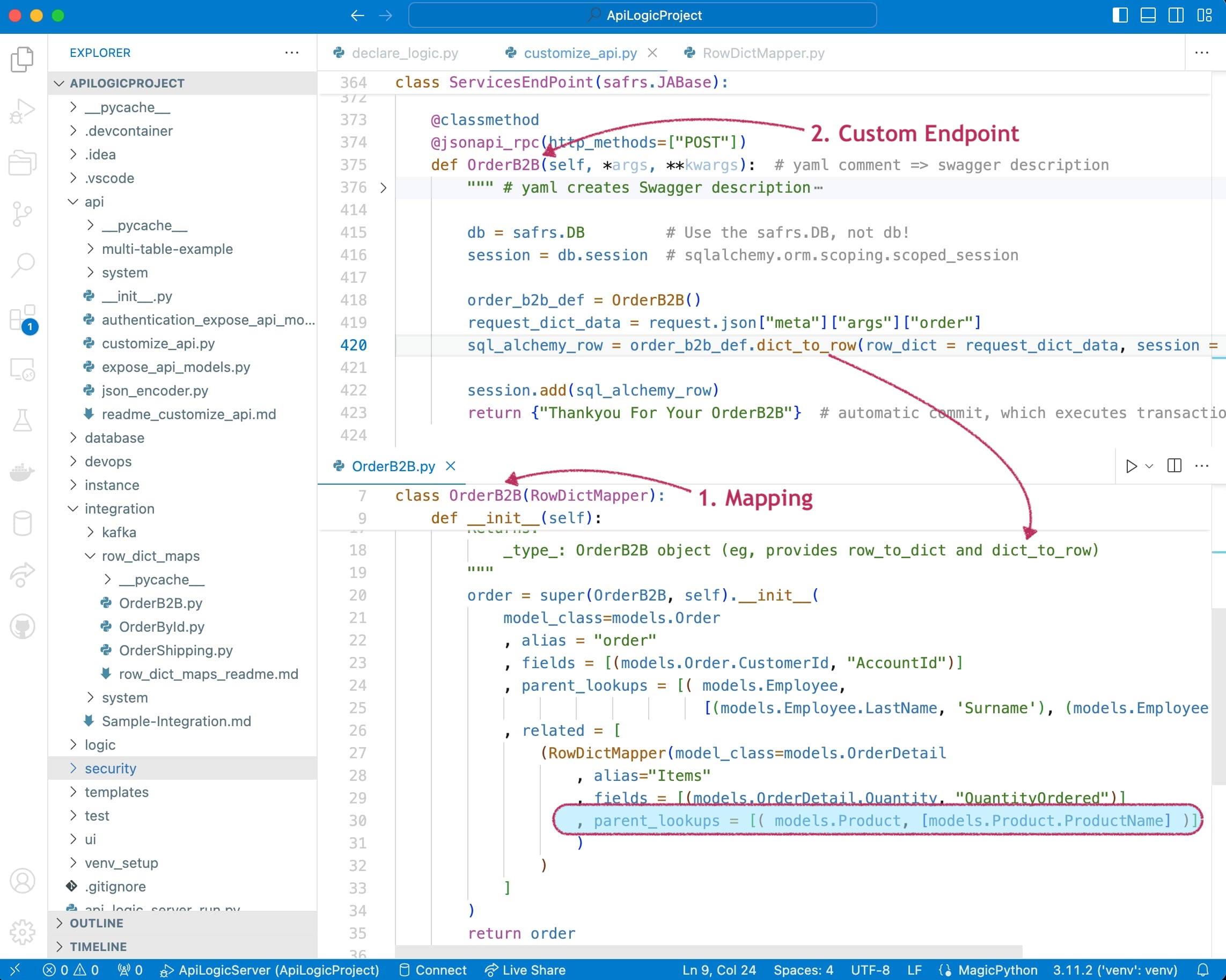

B2B Custom Resource

The self-serve API does not conform to the format required for a B2B partnership. We need to create a custom resource.

You can create custom resources by editing customize_api.py using standard Python, Flask, and SQLAlchemy. A custom OrderB2B endpoint is shown below.

The main task here is to map a B2B payload onto our logic-enabled SQLAlchemy rows. API Logic Server provides a declarative RowDictMapper class you can use as follows:

1. Declare the row/dict mapping; see the OrderB2B class in the lower pane:

   - Note the support for lookup so that partners can send ProductNames, not ProductIds.

2. Create the custom API endpoint; see the upper pane:

   - Add def OrderB2B to customize_api.py to create a new endpoint.

   - Use the OrderB2B class to transform API request data to SQLAlchemy rows (dict_to_row).

   - The automatic commit initiates the shared logic described above to check credit and reorder products.

Our custom endpoint required under ten lines of code and the mapper configuration.
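The mapping step can be sketched as follows. This is a simplified stand-in, not the RowDictMapper class itself. The payload field names come from the test request later in this article, while the row classes, the column names (CustomerId, ProductId, Quantity), and the product id table are assumptions for illustration.

```python
from dataclasses import dataclass, field

# Stand-in for a Product table lookup; the ids here are made up.
PRODUCT_IDS = {"Chai": 1, "Chang": 2}


@dataclass
class OrderDetailRow:
    ProductId: int
    Quantity: int


@dataclass
class OrderRow:
    CustomerId: str
    details: list = field(default_factory=list)


def order_from_dict(payload: dict) -> OrderRow:
    """Map a partner payload onto row objects, resolving names to keys."""
    order = OrderRow(CustomerId=payload["AccountId"])    # alias: AccountId
    for item in payload["Items"]:
        product_id = PRODUCT_IDS[item["ProductName"]]    # lookup by name
        order.details.append(
            OrderDetailRow(product_id, item["QuantityOrdered"]))
    return order


payload = {"AccountId": "ALFKI",
           "Items": [{"ProductName": "Chai", "QuantityOrdered": 1},
                     {"ProductName": "Chang", "QuantityOrdered": 2}]}
print(order_from_dict(payload))
```

Keeping the partner's field names in a mapping layer means the internal schema can change without renegotiating the B2B format.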

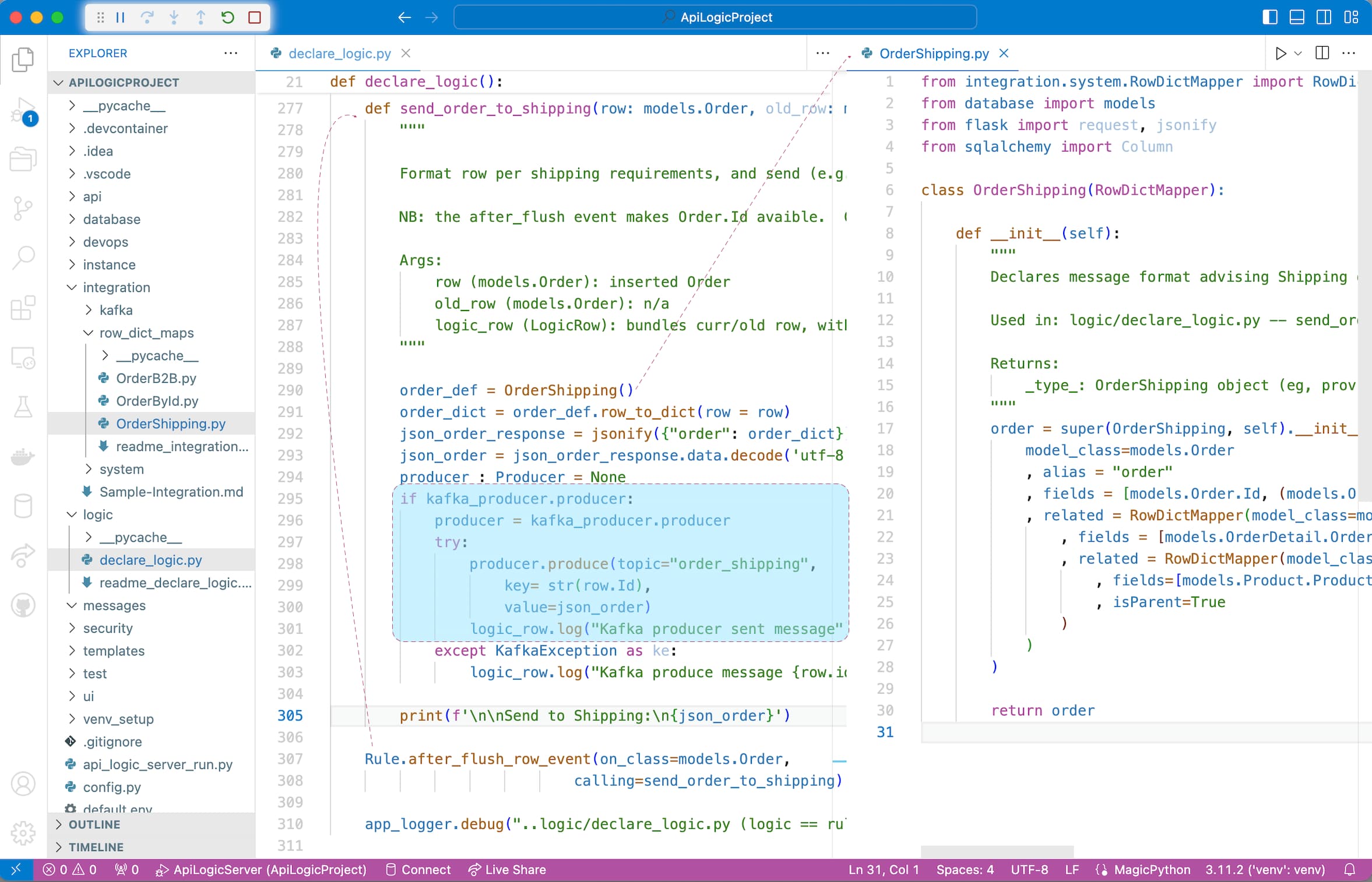

Produce OrderShipping Message

Successful orders must be sent to Shipping in a predesignated format.

We could certainly POST to an API, but Messaging (here, Kafka) provides significant advantages:

- Async: Our system will not be impacted if the Shipping system is down. Kafka will save the message and deliver it when Shipping is back up.

- Multi-cast: We can send a message that multiple systems (e.g., Accounting) can consume.

The content of the message is a JSON string, just like an API.

Just as you can customize APIs, you can complement rule-based logic using Python events:

1. Declare the mapping; see the OrderShipping class in the right pane. This formats our Kafka message content in the format agreed upon with Shipping.

2. Define an after_flush event, which invokes send_order_to_shipping. This is called by the logic engine, which passes the SQLAlchemy models.Order row.

3. send_order_to_shipping uses OrderShipping.row_to_dict to map our SQLAlchemy order row to a dict and uses the Kafka producer to publish the message.

Rule-based logic is customizable with Python, producing a Kafka message with 20 lines of code here.
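The shape of that event handler can be sketched without a running broker. Below, a recording stub stands in for the Kafka producer. Its produce(topic, value) method is modeled on the common Kafka client style, but it is an assumption here, as are the order_shipping topic name and the message field names.

```python
import json


class RecordingProducer:
    """Stand-in for a Kafka producer; it only records what would be sent."""

    def __init__(self):
        self.sent = []

    def produce(self, topic: str, value: bytes) -> None:
        self.sent.append((topic, value))


def order_to_dict(order: dict) -> dict:
    """Pick and rename the fields agreed with Shipping (names are assumed)."""
    return {
        "AccountId": order["CustomerId"],
        "Items": [{"ProductId": d["ProductId"],
                   "QuantityOrdered": d["Quantity"]}
                  for d in order["details"]],
    }


def send_order_to_shipping(order: dict, producer) -> None:
    """Serialize the mapped order as JSON and publish it to the topic."""
    message = json.dumps(order_to_dict(order)).encode("utf-8")
    producer.produce("order_shipping", value=message)   # assumed topic name


producer = RecordingProducer()
send_order_to_shipping(
    {"CustomerId": "ALFKI", "details": [{"ProductId": 1, "Quantity": 1}]},
    producer,
)
print(producer.sent[0])
```

Passing the producer in as a parameter keeps the mapping and serialization testable without Kafka.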

4. Consume Messages

The Shipping system illustrates how to consume messages. The sections below show how to create and start the shipping server, and how to use our IDE to add the consuming logic.

Create/Start the Shipping Server

The shipping database was created with AI. To simplify matters, API Logic Server has installed the shipping database automatically. We can, therefore, create the project from this database and start it:

1. Create the Shipping Project:

ApiLogicServer create --project_name=shipping --db_url=shipping

2. Start your IDE (e.g., code shipping) and establish your venv.

3. Start the Shipping Server: F5 (configured to use a different port).

The core Shipping system was automated by ChatGPT and ApiLogicServer create. We add 15 lines of code to consume Kafka messages, as shown below.

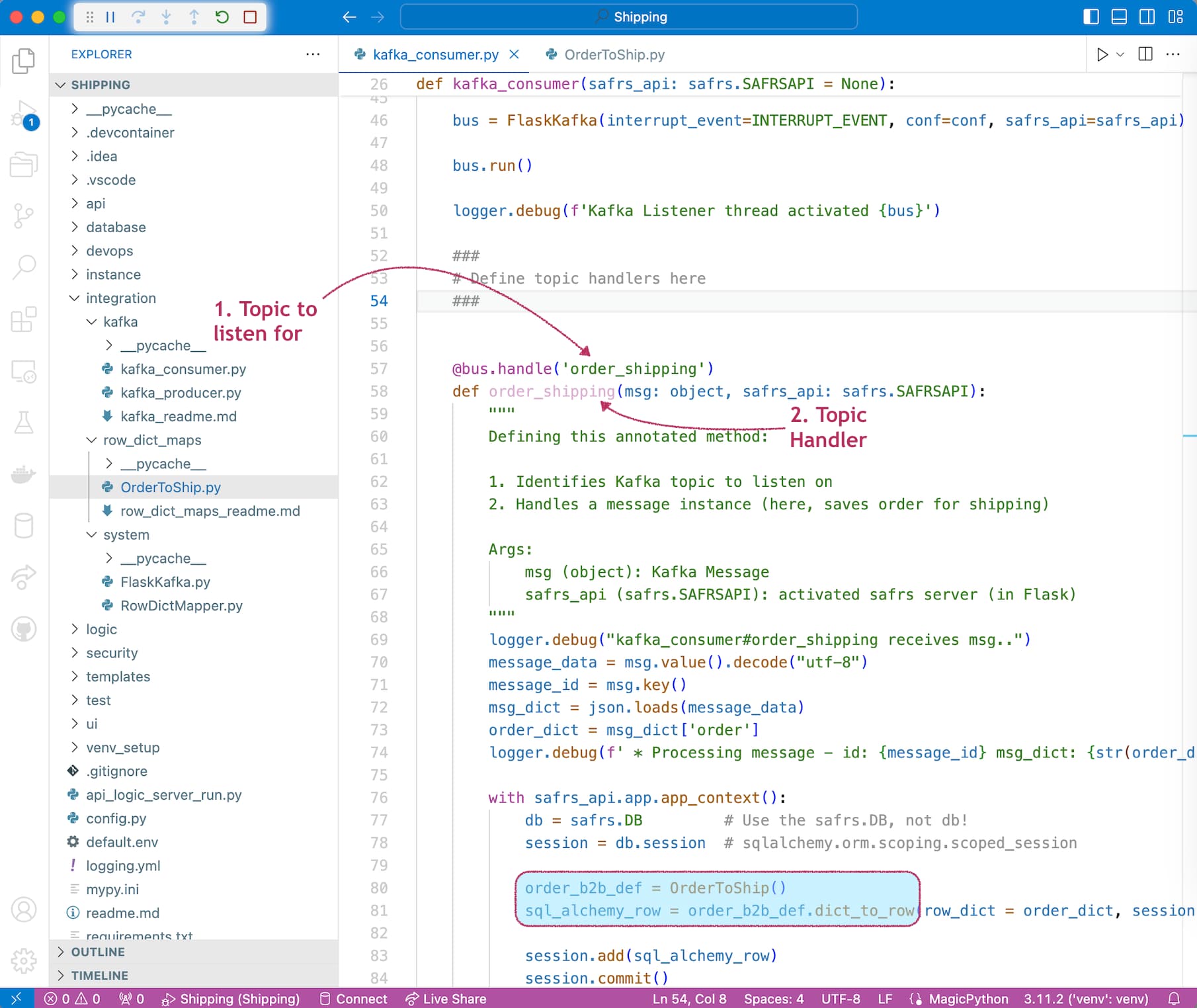

Consuming Logic

To consume messages, we enable message consumption, configure a mapping, and provide a message handler as follows.

1. Enable Consumption

Shipping is pre-configured to enable message consumption with a setting in config.py:

KAFKA_CONSUMER = '{"bootstrap.servers": "localhost:9092", "group.id": "als-default-group1", "auto.offset.reset":"smallest"}'

When the server is started, it invokes flask_consumer() (shown below). This calls the pre-supplied FlaskKafka, which handles the Kafka consumption (listening), thread management, and the handle annotation used below. This housekeeping task is pre-created automatically.

FlaskKafka was inspired by the work of Nimrod (Kevin) Maina in this project. Many thanks!
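Because the setting is a JSON string rather than a dict, it has to be parsed before it can be handed to a Kafka client. The sketch below shows one way to do that. The helper name, the rule that an empty value disables consumption, and the required-key check are assumptions for illustration, not the project's actual startup code.

```python
import json

KAFKA_CONSUMER = ('{"bootstrap.servers": "localhost:9092", '
                  '"group.id": "als-default-group1", '
                  '"auto.offset.reset": "smallest"}')


def load_consumer_settings(raw):
    """Return the settings dict, or None when no setting is supplied.

    Hypothetical helper: treating an empty value as "disabled" is an assumption.
    """
    if not raw:
        return None
    settings = json.loads(raw)
    missing = {"bootstrap.servers", "group.id"} - settings.keys()
    if missing:
        raise ValueError(f"missing Kafka settings: {sorted(missing)}")
    return settings


settings = load_consumer_settings(KAFKA_CONSUMER)
print(settings["bootstrap.servers"])
```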

2. Configure a Mapping

As we did for our OrderB2B Custom Resource, we configured an OrderToShip mapping class to map the message onto our SQLAlchemy Order object.

3. Provide a Consumer Message Handler

We provide the order_shipping handler in kafka_consumer.py:

1. Annotate the topic handler method, providing the topic name.

   - This is used by FlaskKafka to establish a Kafka listener.

2. Provide the topic handler code, leveraging the mapper noted above. It is called by FlaskKafka per the method annotations.
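The annotation pattern can be illustrated with a toy registry. This is not FlaskKafka: there is no Kafka listener or threading here, and the TopicRouter class, its dispatch method, and the order_shipping topic name are hypothetical. It only shows how a decorator can bind a topic name to a handler that is later invoked with the raw message.

```python
import json


class TopicRouter:
    """Toy registry that maps topic names to handler functions."""

    def __init__(self):
        self.handlers = {}

    def handle(self, topic: str):
        """Decorator: register the decorated function for `topic`."""
        def register(func):
            self.handlers[topic] = func
            return func
        return register

    def dispatch(self, topic: str, raw_message: bytes):
        """Invoke the handler for `topic`, as a listener loop would."""
        return self.handlers[topic](raw_message)


bus = TopicRouter()
received = []


@bus.handle("order_shipping")
def order_shipping(raw_message: bytes):
    order = json.loads(raw_message)   # the message body is a JSON string
    received.append(order)            # a real handler would map and save a row
    return order["AccountId"]


bus.dispatch("order_shipping", b'{"AccountId": "ALFKI", "Items": []}')
print(received)
```

In the real server, the handler body would use the mapping class to turn the dict into SQLAlchemy rows and commit them, which in turn runs any Shipping logic.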

Test It

You can use your IDE terminal window to simulate a business partner posting a B2BOrder. You can set breakpoints in the code described above to explore system operation.

ApiLogicServer curl "'POST' 'http://localhost:5656/api/ServicesEndPoint/OrderB2B'" --data '
{"meta": {"args": {"order": {
    "AccountId": "ALFKI",
    "Surname": "Buchanan",
    "Given": "Steven",
    "Items": [
        {
        "ProductName": "Chai",
        "QuantityOrdered": 1
        },
        {
        "ProductName": "Chang",
        "QuantityOrdered": 2
        }
        ]
    }
}}}'

Use Shipping's Admin App to verify the Order was processed.
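The same request can be built from Python using only the standard library, which is handy for scripted tests. This is a sketch: the URL and payload are copied from the command above, while the Content-Type header is an assumption. The server may expect a different media type or an authorization header, and the helper name is hypothetical. The request is constructed but not sent.

```python
import json
from urllib.request import Request

# URL taken from the curl command above.
ORDER_B2B_URL = "http://localhost:5656/api/ServicesEndPoint/OrderB2B"


def build_order_request(account_id: str, items: list) -> Request:
    """Build (but do not send) the POST request for a partner order."""
    body = {"meta": {"args": {"order": {
        "AccountId": account_id,
        "Surname": "Buchanan",
        "Given": "Steven",
        "Items": items,
    }}}}
    return Request(
        ORDER_B2B_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},   # assumed media type
        method="POST",
    )


request = build_order_request("ALFKI", [
    {"ProductName": "Chai", "QuantityOrdered": 1},
    {"ProductName": "Chang", "QuantityOrdered": 2},
])
print(request.get_method(), request.full_url)
# To send it against a running server: urllib.request.urlopen(request)
```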

Summary

These applications have demonstrated several types of application integration:

- Ad Hoc integration via self-serve APIs.

- Custom integration via custom APIs to support business agreements with B2B partners.

- Message-based integration to decouple internal systems, removing the requirement that all systems always be running.

We have also illustrated several technologies noted in the ideal column:

| Requirement | Poor Practice | Good Practice | Best Practice | Ideal |
|---|---|---|---|---|
| Ad Hoc Integration | ETL | APIs | Self-Serve APIs | Automated Creation of Self-Serve APIs |
| Logic | Logic in UI | | Reusable Logic | Declarative Rules .. Extensible with Python |
| Messages | | | Kafka | Kafka Logic Integration |

API Logic Server provides automation for the ideal practices noted above:

1. Creation: instant ad hoc API (and Admin UI) with the ApiLogicServer create command.

2. Declarative Rules: Security and multi-table logic reduce the backend half of your system by 40X.

3. Kafka Logic Integration

   - Produce messages from logic events.

   - Consume messages by extending kafka_consumer.

   - Services, including:

     - RowDictMapper to transform rows and dict.

     - FlaskKafka for Kafka consumption, threading, and annotation invocation.

4. Standards-based Customization

- Standard packages: Python, Flask, SQLAlchemy, Kafka...

- Using standard IDEs.

Creation, logic, and integration automation have enabled us to build two non-trivial systems with a remarkably small amount of code:

| Type | Code |
|---|---|
| Custom B2B API | 10 lines |
| Check Credit Logic | 5 rules |
| Row Level Security | 1 security declaration |
| Send Order to Shipping | 20 lines |
| Process Order in Shipping | 30 lines |
| Mapping configurations to transform rows and dicts | 45 lines |

Automation dramatically reduces time to market, with standards-based customization using your IDE, Python, Flask, SQLAlchemy, and Kafka.

For more information on API Logic Server, click here.

Appendix

Full Tutorial

To recreate this system and explore running code, including Kafka, click here. It should take 30-60 minutes, depending on whether you already have Python and an IDE installed.

Sample Database

The sample database is an SQLite version of Northwind (Customer, Order, OrderDetail, and Product). To see a database diagram, click here. This database is included when you pip install ApiLogicServer.
