The Power of Caching - DZone

source link: https://dzone.com/articles/the-power-of-caching-boosting-api-performance-and

The Power of Caching: Boosting API Performance and Scalability

Learn the power of caching for optimal API and web app performance. Explore client-side, server-side, and CDN caching techniques for faster, more reliable experiences.

Caching is the process of storing frequently accessed data or resources in a temporary storage location, such as memory or disk, to improve retrieval speed and reduce the need for repetitive processing.

Benefits of Caching

- Improved performance: Caching eliminates the need to retrieve data from the original source every time, resulting in faster response times and reduced latency.

- Reduced server load: By serving cached content, the load on the server is reduced, allowing it to handle more requests and improving overall scalability.

- Bandwidth optimization: Caching reduces the amount of data transferred over the network, minimizing bandwidth usage and improving efficiency.

- Enhanced user experience: Faster load times and responsiveness lead to a better user experience, reducing user frustration and increasing engagement.

- Cost savings: Caching can reduce the computational resources required for data processing and lower infrastructure costs by minimizing the need for expensive server resources.

- Improved availability: Caching can help maintain service availability during high-traffic periods or in case of temporary server failures by serving content from the cache.

Types of Caching

Client-Side Caching

Client-side caching refers to the process of storing web resources, like HTML pages, CSS files, JavaScript scripts, and images, on the user's device, typically in their web browser. The purpose of client-side caching is to speed up web page loading by reducing the need to fetch resources from the web server every time a user visits a page.

When a user visits a website, their browser makes requests to the web server for the required resources. The server responds with HTTP headers that guide the browser on how to handle caching. These headers include Cache-Control, Expires, ETag (Entity Tag), and Last-Modified.

The browser stores the resources in its cache based on the caching rules provided by the server. On subsequent requests to the same page or resources, the browser checks its cache first. If the resource is still valid based on the caching headers, the browser retrieves it from the local cache, saving time and reducing the need for additional server requests.

Client-side caching significantly improves website performance, especially for returning users, as resources can be loaded directly from the cache. However, developers need to manage cache control headers carefully to ensure users receive updated content when needed and to avoid potential issues with outdated or stale cached resources.

Benefits of Client-Side Caching

Client-side caching offers multiple advantages that enhance web performance and user experience. Firstly, it enables faster load times for returning visitors: resources already stored in the browser cache do not have to be requested from the server again, so pages load more quickly. Secondly, it reduces server load and bandwidth consumption by minimizing the number of requests sent to the server for unchanged resources, which is particularly valuable for high-traffic websites. Lastly, the improved performance leads to a better user experience, which can reduce bounce rates and increase user retention.

How Client-Side Caching Works

Client-side caching relies on HTTP caching headers like Cache-Control, Expires, ETag, and Last-Modified to facilitate resource storage in web browsers. When a user visits a website, these headers dictate whether resources can be cached and for how long. The browser stores these resources locally, and on subsequent visits, it checks the cache for validity. If the resources are still valid, the browser retrieves them from the cache, leading to faster load times and reduced server requests.
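The validity check described above can be sketched in Python. This is a simplified illustration, not how any particular browser implements it: the function name `is_fresh` and its parameters are assumptions, and only the `max-age`, `no-cache`, and `no-store` directives of Cache-Control are considered. Real browsers also take Expires, the Age header, and heuristic freshness into account.

```python
def is_fresh(cache_control: str, stored_at: float, now: float) -> bool:
    """Return True if a cached response may be reused without contacting the server.

    stored_at and now are timestamps in seconds (hypothetical parameters).
    """
    directives = [d.strip().lower() for d in cache_control.split(",")]
    if "no-store" in directives or "no-cache" in directives:
        # no-store: must not be kept; no-cache: must revalidate before reuse.
        return False
    for directive in directives:
        name, _, value = directive.partition("=")
        if name == "max-age" and value.isdigit():
            # Fresh while the age of the copy is below the allowed lifetime.
            return (now - stored_at) < int(value)
    return False  # no explicit lifetime: treated as stale in this sketch


# A response stored at t=0 with a 60-second lifetime, checked at t=30.
reusable = is_fresh("public, max-age=60", stored_at=0, now=30)
```

When the check fails, the browser does not necessarily download the resource again; it can first ask the server whether its copy is still current.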

When a cached resource's freshness lifetime has expired, the browser cannot tell on its own whether the resource has changed, so it sends a conditional request to the server. The request carries the validators the browser stored earlier: the ETag value in an "If-None-Match" header, or the Last-Modified date in an "If-Modified-Since" header. The server compares these with the current version of the resource. If it is unchanged, the server responds with a "304 Not Modified" status and no body, and the browser continues using the cached version; otherwise, the server returns the updated resource, which the browser then caches. This process ensures efficient content delivery to users while maintaining up-to-date resources when needed.
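The server side of this validation exchange can be sketched as follows. It is a minimal illustration under stated assumptions: `make_etag` and `respond` are hypothetical helpers, the ETag is derived from a hash of the body, and the "If-None-Match" header is assumed to hold a single ETag (the real header may also carry a list of values or `*`).

```python
import hashlib


def make_etag(body: bytes) -> str:
    """Derive an ETag from the response body (quoted, as HTTP requires)."""
    return '"' + hashlib.sha256(body).hexdigest()[:16] + '"'


def respond(request_headers: dict, body: bytes) -> tuple:
    """Return (status, headers, body) for a GET, honouring If-None-Match."""
    etag = make_etag(body)
    headers = {"ETag": etag, "Cache-Control": "no-cache"}
    if request_headers.get("If-None-Match") == etag:
        # The client's copy matches the current version: send no body.
        return 304, headers, b""
    return 200, headers, body


stylesheet = b"body { color: black; }"

# First visit: the browser has no validator yet, so the full body is sent.
first_status, first_headers, _ = respond({}, stylesheet)

# Later visit: the browser echoes the ETag it stored with its cached copy.
second_status, _, second_body = respond(
    {"If-None-Match": first_headers["ETag"]}, stylesheet
)
```

In this sketch the second response has status 304 and an empty body, so only headers cross the network when the resource is unchanged.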

Best Practices for Client-Side Caching

Setting Appropriate Cache-Control Headers: Configure Cache-Control headers to specify caching rules for resources. Use values like "public" to allow caching by both browsers and CDNs, "private" for browser caching only, or "no-cache" to ensure resources are revalidated with the server before each use.

Handling Dynamic Content and User-Specific Data: Be cautious when caching dynamic content and user-specific data. Keep pages or resources that display personalized information out of shared caches, where one user's data could be served to another, and limit how long browsers may reuse them, as users may otherwise see outdated content. Implement caching strategies that consider the unique nature of dynamic content.

Handling Cache Busting for Resource Updates: When updating resources, such as CSS or JavaScript files, implement cache-busting techniques to ensure users receive the latest version. Methods like adding version numbers or unique hashes to the resource URLs force the browser to fetch the updated content instead of relying on the cached version.

By following these best practices, you can optimize client-side caching to enhance website performance, reduce server load, and deliver an improved user experience.
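The header and cache-busting practices above can be combined in a short Python sketch. The helper names `fingerprint` and `cache_control_for`, the eight-character hash length, and the one-year `max-age` are assumptions chosen for illustration; the idea is that a URL containing a content hash can be cached for a long time because the URL itself changes whenever the file does.

```python
import hashlib
from pathlib import PurePosixPath


def fingerprint(path: str, content: bytes) -> str:
    """Insert a short content hash before the file extension."""
    digest = hashlib.sha256(content).hexdigest()[:8]
    p = PurePosixPath(path)
    return str(p.with_name(f"{p.stem}.{digest}{p.suffix}"))


def cache_control_for(fingerprinted: bool = False, personalized: bool = False) -> str:
    """Pick a Cache-Control value for a response (illustrative policy)."""
    if personalized:
        # Browser cache only, and revalidate with the server before each use.
        return "private, no-cache"
    if fingerprinted:
        # Safe to cache for a long time: new content gets a new URL.
        return "public, max-age=31536000"
    # May be stored, but must be revalidated before each use.
    return "no-cache"


old_url = fingerprint("static/app.css", b"body { color: black; }")
new_url = fingerprint("static/app.css", b"body { color: red; }")
```

Because `old_url` and `new_url` differ, a browser holding the old stylesheet requests the new one as soon as the page references the new URL.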

Common Pitfalls and Challenges

- Ensuring Cache Consistency: One of the challenges with client-side caching is maintaining cache consistency. Each browser keeps its own copy of a resource, so users who cached it at different times may see different versions until their copies expire or are revalidated. It's essential to implement cache validation mechanisms and set appropriate expiration times to strike a balance between performance and freshness.

- Dealing with Outdated Cached Resources: Cached resources may become outdated, especially when updates occur on the server side. This can lead to users experiencing stale content. Implement cache revalidation methods, such as conditional requests using ETag or Last-Modified headers, to check if cached resources are still valid before serving them to users.

- Balancing Caching with Security Considerations: Caching sensitive or private data on the client side can pose security risks, since cached responses may remain readable on shared or compromised devices. Avoid caching sensitive information, for example by marking such responses with "Cache-Control: no-store", and use proper encryption and authentication measures when necessary. Consider employing a combination of client-side and server-side caching techniques to strike a balance between performance and security.

Navigating these pitfalls and challenges requires careful planning and a comprehensive caching strategy. By addressing cache consistency, handling outdated resources, and considering security implications, you can optimize client-side caching for an efficient and secure user experience.

Server-Side Caching

Server-side caching refers to the practice of temporarily storing frequently requested data or computations on the server's memory or storage. The primary goal of server-side caching is to optimize server response times and reduce the need for redundant processing, leading to improved overall system performance and reduced latency.

Overview of Caching Mechanisms

Server-side caching employs various caching mechanisms to store and retrieve data efficiently. One common approach is to use in-memory caches such as Redis and Memcached. These caching systems store data directly in RAM, enabling lightning-fast access times. They are ideal for storing frequently accessed data, such as database query results or API responses. By keeping data in memory, server-side applications can quickly retrieve and serve cached content, reducing the need for repeated, expensive database queries or computation.

Another caching mechanism, specifically for PHP-based web applications, is the use of opcode caches like OPcache. Opcode caches store precompiled PHP code in memory, eliminating the need to reprocess PHP scripts on every request. This results in significant performance improvements for PHP applications, as it bypasses the repetitive parsing and compilation steps, reducing server load and response times.

By utilizing server-side caching mechanisms like in-memory caches (Redis, Memcached) and opcode caches (OPcache), applications can optimize server performance, minimize redundant computations, and provide faster and more efficient responses to client requests. This, in turn, leads to a better overall user experience and a more responsive web application.

Benefits of Server-Side Caching

Server-side caching offers several key benefits that significantly improve the performance and scalability of web applications:

Reduced Database and Backend Processing Load: By caching frequently requested data in memory, server-side caching reduces the need for repeated database queries and backend processing. This reduction in data retrieval and computation leads to lighter database and server loads, allowing resources to be allocated efficiently and improving the overall responsiveness of the application.

Faster Response Times for Frequently Requested Data: With data stored in an in-memory cache like Redis or Memcached, the server can quickly retrieve and serve the cached content in milliseconds. As a result, users experience faster response times for data that is frequently accessed, enhancing the user experience and reducing wait times.

Scalability and Load Balancing Advantages: Server-side caching plays a crucial role in improving the scalability and load-balancing capabilities of web applications. By reducing the backend processing load, cached data can be served rapidly, allowing servers to handle a higher number of concurrent requests without sacrificing performance. This enables applications to easily scale to meet increasing demand, ensuring a seamless experience for users during traffic spikes or high-volume usage periods.

Overall, server-side caching provides a robust solution to enhance application performance, optimize resource utilization, and maintain responsiveness, making it an essential component in building high-performing and scalable web applications.

Implementing Server-Side Caching

Implementing server-side caching involves various strategies to store and manage cached data effectively. One approach is caching data at the application level, where frequently accessed data is stored directly in memory using data structures like dictionaries or arrays. This method works well for smaller-scale caching or when the data doesn't change frequently. However, it's crucial to consider memory limitations and data consistency when using this approach.
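As a minimal sketch of application-level caching in Python, the standard library's `functools.lru_cache` keeps results in an in-process dictionary and caps the number of entries, which addresses the memory concern mentioned above. The function `country_name` and its data are hypothetical stand-ins for an expensive lookup.

```python
from functools import lru_cache


@lru_cache(maxsize=256)  # bound the cache so it cannot grow without limit
def country_name(code: str) -> str:
    """Stand-in for an expensive lookup (file, database, or remote call)."""
    return {"DE": "Germany", "JP": "Japan"}.get(code, "Unknown")


country_name("DE")  # miss: runs the function body and stores the result
country_name("DE")  # hit: served from the in-process cache

stats = country_name.cache_info()  # reports hits, misses, and current size
```

Such a cache lives inside a single process, so each application instance holds its own copy; calling `country_name.cache_clear()` empties it when the underlying data changes.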

Another effective technique is caching database query results. When a query is executed, its result is stored in the cache. Subsequent requests for the same query can be served from the cache, reducing the load on the database and improving response times. To keep the cached data up-to-date with changes in the database, developers need to define cache invalidation policies.
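The following Python sketch illustrates query result caching with the standard library's `sqlite3` module and an in-memory database. The table, the helper names `cached_query` and `update_price`, and the invalidation policy (clear the whole cache on any write) are assumptions chosen for brevity; a production policy would usually be more selective.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT, price REAL)")
conn.execute("INSERT INTO products VALUES (1, 'keyboard', 49.0)")

query_cache = {}  # (sql, params) -> list of result rows
db_reads = []     # records each query that actually reached the database


def cached_query(sql: str, params: tuple = ()) -> list:
    """Run a read query, serving repeated calls from the cache."""
    key = (sql, params)
    if key not in query_cache:
        db_reads.append(key)
        query_cache[key] = conn.execute(sql, params).fetchall()
    return query_cache[key]


def update_price(product_id: int, price: float) -> None:
    """Write to the database and invalidate cached results."""
    conn.execute("UPDATE products SET price = ? WHERE id = ?", (price, product_id))
    query_cache.clear()  # crude policy: drop everything on any write
```

Calling `cached_query` twice with the same SQL and parameters reaches the database once; after `update_price`, the next call reads the fresh value again.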

Cache expiration and eviction strategies are also essential to ensure that cached data remains relevant and doesn't consume excessive memory. Cache expiration sets a time limit for cached data, after which it is considered stale and discarded upon the next request. Eviction policies, on the other hand, determine which data gets removed when the cache reaches its capacity limit. Common eviction algorithms include Least Recently Used (LRU) and Least Frequently Used (LFU).
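Expiration and LRU eviction can be combined in one small structure. The Python sketch below is illustrative only: the class name `TTLLRUCache` and its parameters are assumptions, and the injectable `clock` exists so the behaviour can be demonstrated without waiting for real time to pass.

```python
import time
from collections import OrderedDict


class TTLLRUCache:
    """Cache with a per-entry time limit and least-recently-used eviction."""

    def __init__(self, capacity: int, ttl_seconds: float, clock=time.monotonic):
        self.capacity = capacity
        self.ttl = ttl_seconds
        self.clock = clock
        self._items = OrderedDict()  # key -> (expires_at, value), oldest use first

    def get(self, key, default=None):
        entry = self._items.get(key)
        if entry is None:
            return default
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self._items[key]  # expired: discard on access
            return default
        self._items.move_to_end(key)  # mark as most recently used
        return value

    def set(self, key, value):
        self._items[key] = (self.clock() + self.ttl, value)
        self._items.move_to_end(key)
        while len(self._items) > self.capacity:
            self._items.popitem(last=False)  # evict the least recently used entry
```

With a capacity of two, storing a third key removes whichever of the first two was used least recently, and any entry older than `ttl_seconds` is dropped the next time it is requested.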

When implementing server-side caching, developers need to consider the nature of the data, the application's specific requirements, and the available caching mechanisms to optimize caching performance effectively. By combining appropriate caching strategies and tools, applications can harness the benefits of server-side caching to deliver faster response times, reduce database load, and achieve more efficient data management.

Optimizing Cache Invalidation

Cache invalidation is a critical aspect of server-side caching to ensure that stale data doesn't persist in the cache. Implementing effective cache invalidation techniques is essential for maintaining data accuracy and consistency. One common approach to removing stale cache entries is through the use of expiration times. By setting an appropriate expiration time for cached data, the cache automatically removes outdated entries, forcing the application to fetch fresh data for the next request.

Another powerful technique for cache invalidation is utilizing cache tags and granular invalidation. Cache tags allow associating multiple cache entries with a specific tag or label. When related data is updated or invalidated, the cache can selectively remove all entries associated with that tag, ensuring that all affected data is evicted from the cache.

Granular invalidation allows developers to target specific cache entries for removal rather than clearing the entire cache. This fine-grained approach minimizes the risk of unnecessarily removing frequently accessed and still valid data from the cache. By employing cache tags and granular invalidation, developers can achieve more precise control over cache invalidation, resulting in more efficient cache management and improved data consistency.
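Tag-based and granular invalidation can be sketched in a few lines of Python. The class `TaggedCache` and its method names are hypothetical, not the API of any specific caching library; the sketch keeps an index from each tag to the keys that carry it.

```python
from collections import defaultdict


class TaggedCache:
    """In-memory cache whose entries can be invalidated one by one or by tag."""

    def __init__(self):
        self._values = {}
        self._keys_by_tag = defaultdict(set)  # tag -> keys stored under that tag

    def set(self, key, value, tags=()):
        self._values[key] = value
        for tag in tags:
            self._keys_by_tag[tag].add(key)

    def get(self, key, default=None):
        return self._values.get(key, default)

    def invalidate_key(self, key):
        """Granular invalidation: remove a single entry."""
        self._values.pop(key, None)

    def invalidate_tag(self, tag):
        """Remove every entry associated with the given tag."""
        for key in self._keys_by_tag.pop(tag, set()):
            self._values.pop(key, None)


cache = TaggedCache()
cache.set("product:1", {"name": "keyboard"}, tags=["products"])
cache.set("product:2", {"name": "mouse"}, tags=["products"])
cache.set("user:7", {"name": "Ada"}, tags=["users"])

cache.invalidate_tag("products")  # both product entries go, the user entry stays
```

Only the entries tagged "products" are removed here, so unrelated and still valid data such as "user:7" remains available.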

Server-Side Caching Tools

Several powerful caching tools and libraries are available to implement server-side caching effectively. As one example, Toro Cloud’s Martini exposes its cache functions through a Cache class, and caches can be created with providers such as the following:

- Guava Cache: Google's Guava Cache is a caching utility that uses an in-memory-only caching mechanism. Caches created by this provider are local to a single run of an application only (or, in this case, a single run of a Martini package).

- Ehcache: Ehcache is a full-featured Java-based cache provider. It supports caches that store data on disk or in memory. It is also built to be scalable and can be tuned for loads requiring high concurrency.

- Redis: Redis is an in-memory data structure store that implements a distributed key-value database with optional durability. It has built-in replication, Lua scripting, LRU eviction, transactions, and different levels of on-disk persistence, and it provides high availability via Redis Sentinel and automatic partitioning with Redis Cluster.

By leveraging these caching tools and integrating them with web frameworks and CMS platforms, developers can optimize server response times, reduce backend processing, and enhance the overall performance and scalability of their applications.

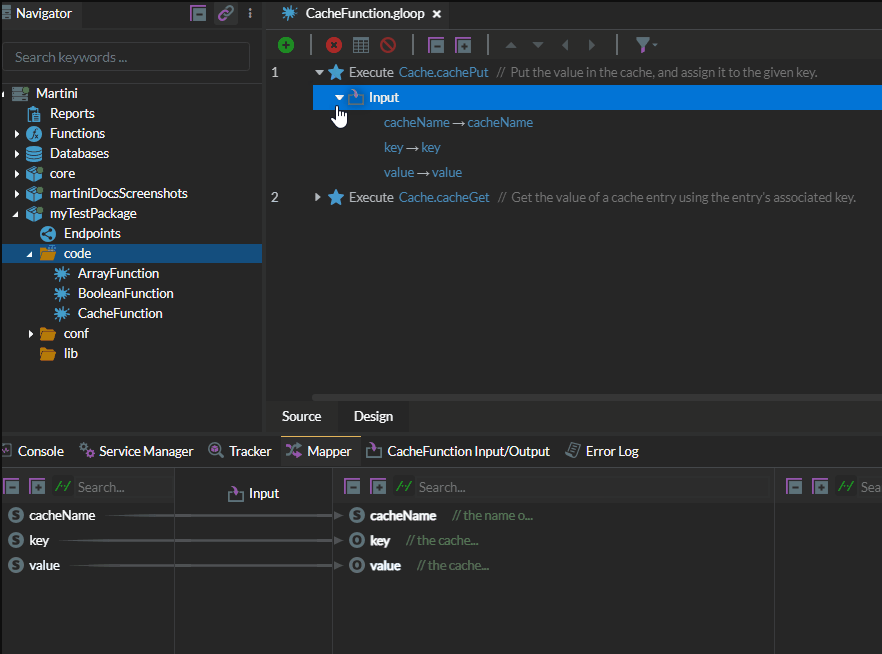

Cache Functions

Enterprise-grade integration platforms often come equipped with caching capabilities to enable the storage of dynamic or static data for quicker retrieval. Below is an example snippet demonstrating the utilization of the Cache function within the Martini integration platform.

Screenshot of Martini Cache Functions.

Caching Strategies and Considerations

Cache coherency and consistency are critical considerations when implementing caching strategies. Maintaining cache coherency ensures that the cached data remains consistent with the data in the source of truth (e.g., your database or backend server). When updates are made to the source data, cached copies should be invalidated or updated accordingly to prevent serving stale or outdated content.

Handling cache invalidation across different caching layers can be challenging. This involves managing caches on both the client side and server side. Coordinating cache invalidation to ensure consistency across all caching layers requires careful planning and implementation.

By addressing cache coherency and handling cache invalidation effectively, you can maintain data consistency throughout your caching infrastructure, providing users with up-to-date and accurate content while optimizing performance.

Combining Caching Methods

Implementing a hybrid caching strategy involves leveraging the strengths of both client-side and server-side caching to maximize performance and user experience.

Utilize client-side caching for static resources that can be stored locally in the user's browser. Set appropriate Cache-Control headers to specify caching durations and optimize the use of browser cache for faster load times on subsequent visits.

Employ server-side caching for dynamic content that is expensive to generate on each request. Use in-memory caches like Redis or Memcached to store frequently accessed data. Implement cache expiration and eviction strategies to keep the data up-to-date.

By combining these caching methods effectively, you can reduce server load, minimize data transfer, and enhance the overall performance and scalability of your application, delivering an optimal user experience globally.
