微型神经网络库MicroGrad-基于标量自动微分的类pytorch接口的深度学习框架 - LeonYi

source link: https://www.cnblogs.com/justLittleStar/p/17521734.html

Go to the source link to view the article. You can view the picture content, updated content and better typesetting reading experience. If the link is broken, please click the button below to view the snapshot at that time.

一、MicroGrad

MicroGrad是大牛Andrej Karpathy写的一个非常轻量级别的神经网络库(框架),其基本构成为一个90行python代码的标量反向传播(自动微分)引擎,以及在此基础上实现的神经网络层。

其介绍如下:

A tiny scalar-valued autograd engine and a neural net library on top of it with PyTorch-like API

Andrej Karpathy时长2.5小时的通俗易懂的讲解,一步一步教你构建MicroGrad。学习完视频,相信可以一窥现代深度学习框架的底层实现。Bili视频链接:https://www.bilibili.com/video/BV1aB4y13761/?vd_source=e5f3442199b63a8df006d57974ad4e23

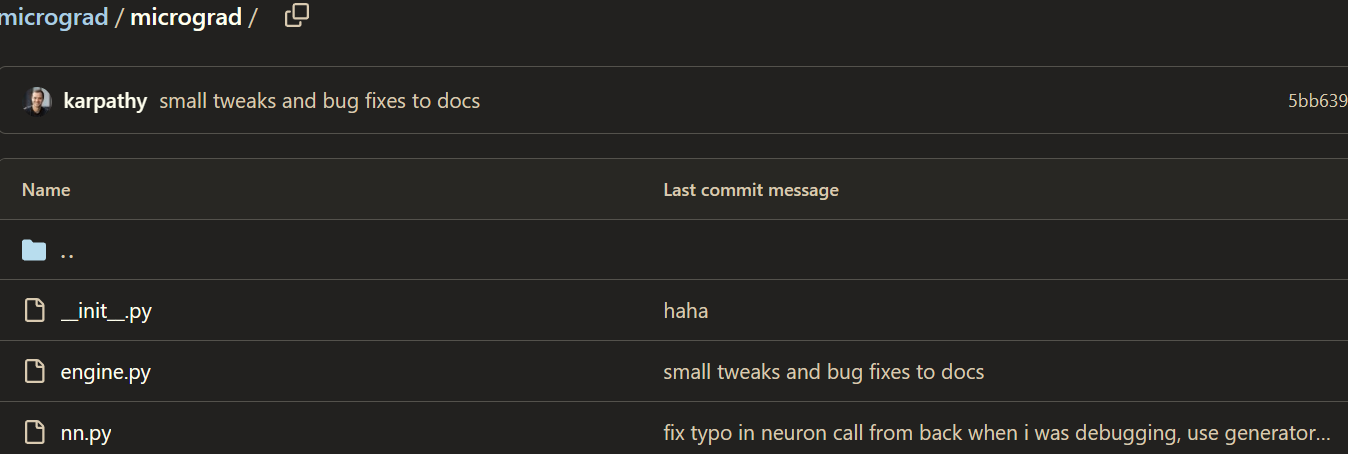

Github链接:https://github.com/karpathy/micrograd

项目除了框架源码外,给出了基于该库在一个二分类数据集上训练了一个2层MLP,源码为demo.ipynb。

此外,给出了基于graphviz库的神经网络计算图可视化notebooktrace_graph.ipynb,可以形象地观察前向和反向传播过程。

接下来将简要介绍一下其标量反向传播引擎engine.py和神经网络层nn.py。

二、标量反向传播引擎Engine

2.1 反向传播与自动微分

反向传播的核心就是链式法则,而深度神经网络的反向传播最多再加一个矩阵求导。

在反向传播的过程,本质是求网络的每个参数关于最终损失函数的梯度,而该梯度可以成是回传的全局梯度和局部梯度之乘。

形象地说,梯度代表了当前层参数的变化,对最终预测损失的影响(变化率),而该变化率实际取决于当前层参数对下一层输入的影响,以及下一层输入对最终预测损失的影响。两个变化一乘,不就是当前层参数对对最终预测损失的影响。

神经网络本质上可视为一个复杂函数,而该函数的计算公式无论多复杂,都可分解为一系列基本的算数运算(加减乘除等)和基本函数(exp,log,sin,cos,等)等。对相关操作进行分解,同时应用链式求导法则,就可以实现自动微分。

形如:c = 3a + b, o = 2 * c。直观的说,每个参与运行的变量以及运算符都要建模成一个节点,从而构成计算图。

2.2 标量Value类

为了实现自动微分,作者具体实现了一个Engine引擎。

Engine的核心其实就是实现了一个标量Value类,其关键就是在标量值的基础上实现基础运算和其它复杂运算(算子)的前向和反向传播(对基本运行进行了重写)。

为了构建计算图,并在其基础上执行从输出到各个运算节点的梯度反向回传,它绑定了相应的运算关系。为此,每个最基本的计算操作都会生成一个标量Value对象,同时记录产生该对象的运算类型以及参与运算的对象(children)。

每个Value对象都可以视为一个计算节点,在每次计算过程中,中间变量也会被建模成一个计算节点。

class Value:

""" stores a single scalar value and its gradient """

def __init__(self, data, _children=(), _op=''):

self.data = data # 标量数据

self.grad = 0 # 对应梯度值,初始为0

# 用于构建自动微分图的内部变量

self._backward = lambda: None # 计算梯度的函数

self._prev = set(_children) # 前向节点(参与该运算的Value对象集合),将用于反向传播

self._op = _op # 产生这个计算节点的运算类型

例如对于如下的+运算:

x = Value(1.0)

y = x + 2

首先,构建Value对象x, 然后在执行+的过程中,调用x.add(self, other)方法。此时,2也被构建为一个Value对象。然后执行如下操作: out = Value(self.data + other.data, (self, other), '+')

最终,返回一个代表计算结果(记录了前向节点)的新Value对象y。

废话不多说,直接上完整源码。

class Value:

""" stores a single scalar value and its gradient """

def __init__(self, data, _children=(), _op=''):

self.data = data

self.grad = 0

# internal variables used for autograd graph construction

self._backward = lambda: None

self._prev = set(_children)

self._op = _op # the op that produced this node, for graphviz / debugging / etc

def __add__(self, other):

other = other if isinstance(other, Value) else Value(other)

out = Value(self.data + other.data, (self, other), '+')

def _backward():

self.grad += out.grad

other.grad += out.grad

out._backward = _backward

return out

def __mul__(self, other):

other = other if isinstance(other, Value) else Value(other)

out = Value(self.data * other.data, (self, other), '*')

def _backward():

self.grad += other.data * out.grad

other.grad += self.data * out.grad

out._backward = _backward

return out

def __pow__(self, other):

assert isinstance(other, (int, float)), "only supporting int/float powers for now"

out = Value(self.data**other, (self,), f'**{other}')

def _backward():

self.grad += (other * self.data**(other-1)) * out.grad

out._backward = _backward

return out

def relu(self):

out = Value(0 if self.data < 0 else self.data, (self,), 'ReLU')

def _backward():

self.grad += (out.data > 0) * out.grad

out._backward = _backward

return out

def backward(self):

# topological order all of the children in the graph

topo = []

visited = set()

def build_topo(v):

if v not in visited:

visited.add(v)

for child in v._prev:

build_topo(child)

topo.append(v)

build_topo(self)

# go one variable at a time and apply the chain rule to get its gradient

self.grad = 1

for v in reversed(topo):

v._backward()

def __neg__(self): # -self

return self * -1

def __radd__(self, other): # other + self

return self + other

def __sub__(self, other): # self - other

return self + (-other)

def __rsub__(self, other): # other - self

return other + (-self)

def __rmul__(self, other): # other * self

return self * other

def __truediv__(self, other): # self / other

return self * other**-1

def __rtruediv__(self, other): # other / self

return other * self**-1

def __repr__(self):

return f"Value(data={self.data}, grad={self.grad})"

有了该框架后,就可构建出计算图。然后,通过基于拓扑排序的backward(self)方法,来进行反向传播。

Value类其实很类似于Pytorh中的Variable类,基于它就可构造复杂的神经网络,而不必手动的计算梯度。

三、简易网络

import random

from micrograd.engine import Value

class Module:

def zero_grad(self):

for p in self.parameters():

p.grad = 0

def parameters(self):

return []

class Neuron(Module):

def __init__(self, nin, nonlin=True):

self.w = [Value(random.uniform(-1,1)) for _ in range(nin)]

self.b = Value(0)

self.nonlin = nonlin

def __call__(self, x):

act = sum((wi*xi for wi,xi in zip(self.w, x)), self.b)

return act.relu() if self.nonlin else act

def parameters(self):

return self.w + [self.b]

def __repr__(self):

return f"{'ReLU' if self.nonlin else 'Linear'}Neuron({len(self.w)})"

class Layer(Module):

def __init__(self, nin, nout, **kwargs):

self.neurons = [Neuron(nin, **kwargs) for _ in range(nout)]

def __call__(self, x):

out = [n(x) for n in self.neurons]

return out[0] if len(out) == 1 else out

def parameters(self):

return [p for n in self.neurons for p in n.parameters()]

def __repr__(self):

return f"Layer of [{', '.join(str(n) for n in self.neurons)}]"

class MLP(Module):

def __init__(self, nin, nouts):

sz = [nin] + nouts

self.layers = [Layer(sz[i], sz[i+1], nonlin=i!=len(nouts)-1) for i in range(len(nouts))]

def __call__(self, x):

for layer in self.layers:

x = layer(x)

return x

def parameters(self):

return [p for layer in self.layers for p in layer.parameters()]

def __repr__(self):

return f"MLP of [{', '.join(str(layer) for layer in self.layers)}]"

Recommend

About Joyk

Aggregate valuable and interesting links.

Joyk means Joy of geeK