If a hammer was like AI…

source link: https://axbom.com/hammer-ai/

It will mostly “guess” your aim, tend to miss the nail and push for a different design. Often unnoticeably.

Per Axbom

Photo montage using PlaceIt and Clipdrop. Hence the weird-looking truck / subway in the background.

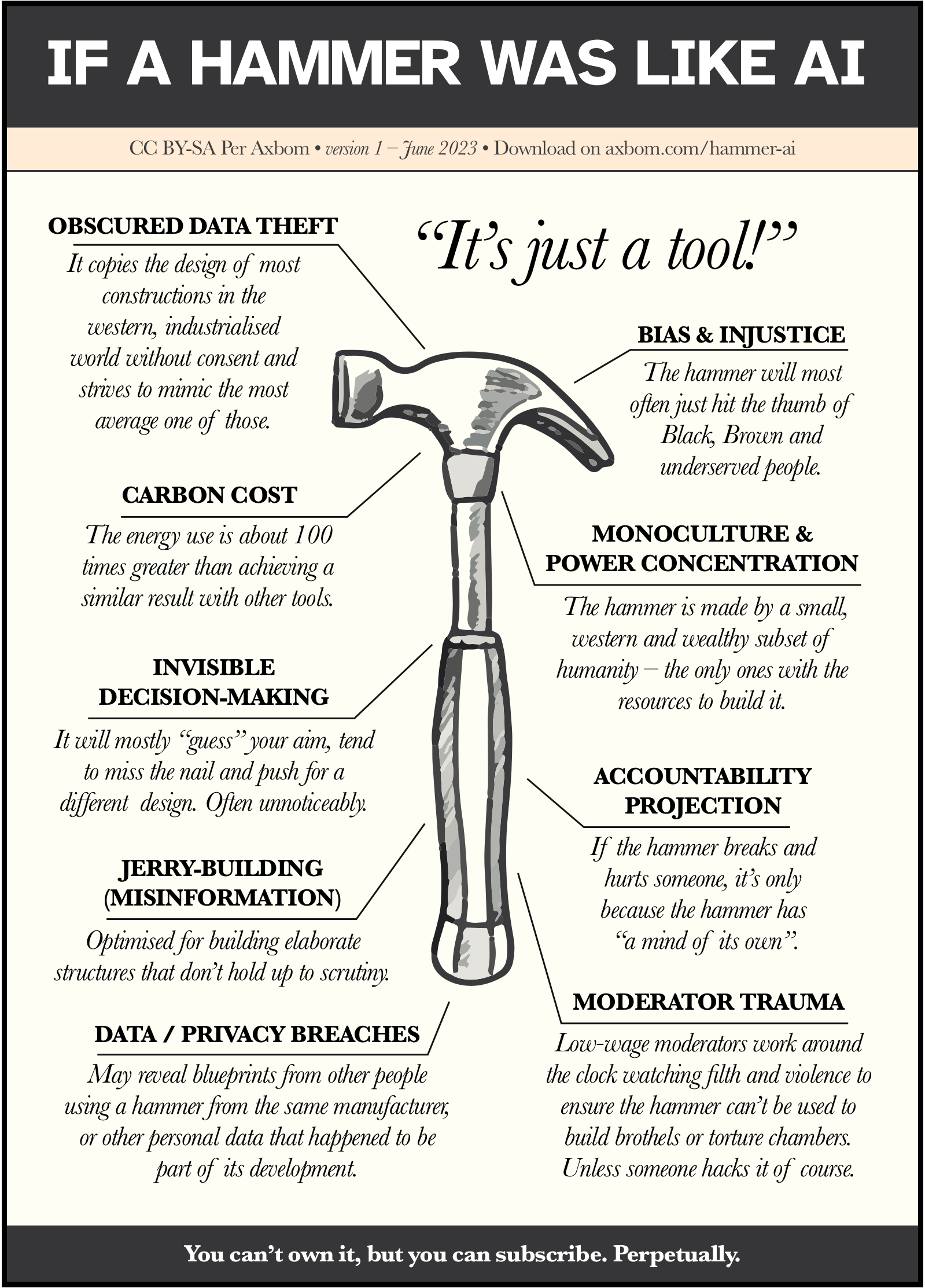

A common argument I come across when talking about ethics in AI is that it's just a tool, and like any tool it can be used for good or for evil. One familiar declaration is this one: "It's really no different from a hammer". I was compelled to make a poster to address these claims. Steal it, share it, print it and use it where you see fit.

Note that this poster primarily addresses the features of generative AI and deep learning, which are the main technologies receiving attention in 2023.

Poster: If a hammer was like AI

It's just a tool!

Obscured data theft

It copies the design of most constructions in the western, industrialised world without consent and strives to mimic the most average one of those.

» Read more about obscured data theft.

Bias & injustice

The hammer will most often just hit the thumb of Black, Brown and underserved people.

No, this does not mean that the hammer has a mind of its own. It means that the hammer is built in a way that has inherent biases which will tend to disfavor people who are already underserved and disenfranchised. AI tools will for example fail to reduce bias in recruitment, and contribute to racist and sexist performance reviews.

It's relevant to compare this topic with a touchless soap dispenser that won't react to dark skin. It's not the fault of the user that it does not react, it's the fault of the manufacturer and how it was built. It doesn't "act that way" because it has a mind, but because the manufacturing process was unmindful. You can't tell the user to educate themself about using it to make it work better. See also Twitter's image cropping algorithm, Google's "inability" to find gorillas, webcams unable to recognize dark-skinned faces, and I'm sure there are many more examples that have been shared with me over the years.

» Read more about bias and injustice.

Carbon cost

The energy use is about 100 times greater than achieving a similar result with other tools.

This is of course an inexact number but there are indications that the carbon cost of training, maintaining and using AI tools is significant.

» Read more about carbon costs.

Monoculture and power concentration

The hammer is made by a small western, wealthy subset of humanity who are the only ones who have the resources to build it.

» Read more about monoculture and power concentration.

Invisible decision-making

It will mostly “guess” your aim, tend to miss the nail and push for a different design. Often unnoticeably.

» Read more about invisible decision-making.

Accountability projection

If the hammer breaks and hurts someone, it’s only because the hammer has “a mind of its own”.

» Read more about accountability projection.

Jerry-building (Misinformation)

Optimised for building elaborate structures that don’t hold up to scrutiny.

» Read more about misinformation.

Data/privacy breaches

May reveal blueprints from other people using a hammer from the same manufacturer, or other personal data that happened to be part of its development.

» Read more about data/privacy breaches.

Moderator trauma

Low-wage moderators work around the clock watching filth and violence to ensure the hammer can’t be used to build brothels or torture chambers. Unless someone hacks it of course.

» Read more about moderator trauma.

You can’t own it, but you can subscribe. Perpetually.

"Not all AI!"

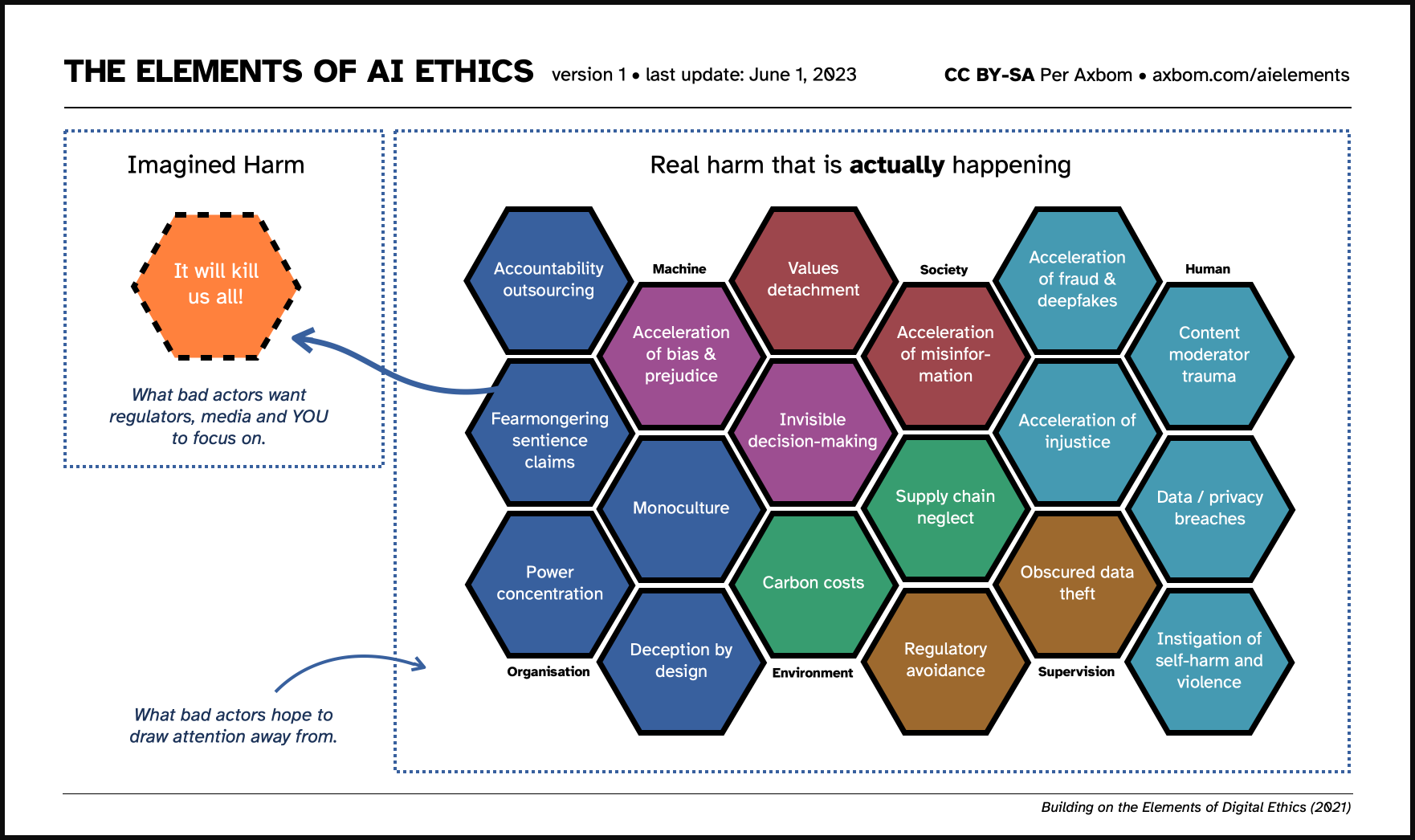

Yes, this is a very tongue-in-cheek poster and you likely want more insight into the background of the topics I bring up. You can get some of that background in my chart The Elements of AI Ethics.
