Go from 0 to 1 to Enterprise-Ready with MongoDB Atlas and LLMs

source link: https://www.mongodb.com/blog/post/going-from-zero-to-one-enterprise-ready-mongodb-llms

Creating compelling, truly differentiated experiences for your customers from generative AI-enriched applications means grounding artificial intelligence in truth. That truth comes from your data, more specifically, your most up-to-date operational data. Whether you’re providing hyper-personalized experiences with advanced semantic search or producing user-prompted content and conversations, MongoDB Atlas unifies operational, analytical and vector search data services to streamline embedding the power of LLMs and transformer models into your apps.

Every day, developers are building the next groundbreaking, transformative generative AI-powered application. Commercial and open source LLMs are advancing at breakneck speed. The frameworks and tools to build around them are plentiful and democratize innovation. And yet taking these applications from prototype to enterprise-ready is the chasm development teams must cross. First, these large models can provide incorrect or uninformed answers, because the data they have access to is dated. There are two options to solve uninformed answers: fine-tuning a large model or providing it with long-term memory. However, doing so raises a second barrier: deploying an application around an informed LLM with the right security controls in place, and at the scale and performance users expect.

Developers need a data platform that has the data model flexibility to adapt to the constantly changing unstructured and structured data that informs large models without the hindrance of rigid schemas. While fine-tuning a model is an option, it’s a cost-prohibitive one in terms of time and computational resources. This means developers need to be able to present data as context to large models as part of prompts. They need to give these generative models long-term memory. We discuss a few examples of how to do so with various LLMs and generative AI frameworks below.
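As a minimal illustration of presenting data as context in a prompt, the sketch below assembles retrieved documents and a user question into a single prompt string. The function name `build_prompt`, the prompt wording, and the `max_docs` parameter are assumptions made for this example; they are not part of any MongoDB or LLM vendor API, and real applications will likely need to tune the template for their model.

```python
def build_prompt(question: str, context_docs: list[str], max_docs: int = 3) -> str:
    """Assemble a prompt that places retrieved documents ahead of the question.

    Hypothetical helper: the template below is illustrative only.
    """
    # Keep only the first few documents so the prompt stays within a size budget.
    selected = context_docs[:max_docs]

    # Number each document so the model (and a human reader) can refer to it.
    context = "\n".join(f"[{i}] {doc}" for i, doc in enumerate(selected, start=1))

    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


if __name__ == "__main__":
    # Made-up documents standing in for records fetched from an operational database.
    docs = [
        "Order 1001 shipped on Monday.",
        "Order 1002 is awaiting payment.",
    ]
    print(build_prompt("Which order has shipped?", docs))
```

The resulting string would then be sent to whichever LLM the application uses; the documents themselves would typically come from a query against operational data rather than a hard-coded list.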

Five resources to get started with MongoDB Atlas and Large Language Models

MongoDB Atlas makes it seamless to integrate leading generative AI services and systems such as the hyperscalers and open source LLMs and frameworks. By combining document and vector embedding data stores in one place via Atlas Database and Atlas Vector Search (preview), developers can accelerate building their generative AI-enriched applications that are grounded in the truth of operational data. Below are examples of how to work with popular LLM frameworks and MongoDB:

1. Get started with Atlas Vector Search (preview) and OpenAI for semantic search

This tutorial walks you through the steps of performing semantic search on a sample movie dataset with MongoDB Atlas. First, you’ll set up an Atlas Trigger to make a call to an OpenAI API whenever a new document is inserted into your cluster, so as to convert it into a vector embedding. Then, you’ll perform a vector search query using Atlas Vector Search. There’s even a special bonus section for leveraging HuggingFace models. Read the tutorial.
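To give a rough idea of what a vector search query can look like, the sketch below builds an aggregation pipeline around the `$vectorSearch` stage that Atlas Vector Search provides in current releases. The preview-era tutorial may use a different query syntax. The index name `vector_index`, the embedding field `plot_embedding`, and the projected fields `title` and `plot` are assumptions for this example, and the query vector would normally come from the same embedding model used when documents were inserted.

```python
from typing import Any


def build_vector_search_pipeline(
    query_vector: list[float],
    index_name: str = "vector_index",   # assumed index name
    path: str = "plot_embedding",       # assumed field holding the stored embedding
    limit: int = 5,
    num_candidates: int = 100,
) -> list[dict[str, Any]]:
    """Build an aggregation pipeline that runs a vector search and returns scores."""
    if num_candidates < limit:
        raise ValueError("num_candidates must be at least as large as limit")

    return [
        {
            "$vectorSearch": {
                "index": index_name,
                "path": path,
                "queryVector": list(query_vector),
                "numCandidates": num_candidates,  # nearest-neighbor candidates to consider
                "limit": limit,                   # documents to return
            }
        },
        {
            # Return a few readable fields plus the similarity score.
            "$project": {
                "_id": 0,
                "title": 1,
                "plot": 1,
                "score": {"$meta": "vectorSearchScore"},
            }
        },
    ]


if __name__ == "__main__":
    # A tiny made-up vector; real embeddings have hundreds or thousands of dimensions.
    for stage in build_vector_search_pipeline([0.1, 0.2, 0.3], limit=3):
        print(stage)
```

With PyMongo, a pipeline like this would be passed to `collection.aggregate(pipeline)` on the collection that holds the embeddings.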

2. Build a Gen AI-enriched chat app with your proprietary data using LlamaIndex and MongoDB

LlamaIndex provides a simple, flexible interface to connect LLMs with external data. This joint blog from LlamaIndex and MongoDB goes into more detail about why and how you might want to build your own chat app. The attached notebook in the blog provides a code walkthrough on how to query any PDF document using English language queries. Read the blog.

3. See the docs for how to use Atlas Vector Search (preview) as a vector store with LangChain

As stated in the partnership announcement blog post, LangChain and MongoDB Atlas are a natural fit, as demonstrated by the organic community enthusiasm that has led to several LangChain integrations for MongoDB. In addition to the new support for Atlas Vector Search as a vector store, LangChain already supports using MongoDB to store chat log history.

4. Generate predictions directly in MongoDB Atlas with MindsDB AI Collections

MindsDB is an open-source machine learning platform that brings automated machine learning to the database. In this blog you’ll generate predictions directly in Atlas with MindsDB AI Collections, giving you the ability to consume predictions as regular data, query these predictions, and accelerate development speed by simplifying deployment workflows. Read the blog.

5. Integrate HuggingFace transformer models into MongoDB Atlas with Atlas Triggers

HuggingFace is an AI community that makes it easy to build, train and deploy machine learning models. Leveraging Atlas Triggers alongside HuggingFace allows you to easily react to changes in operational data that provides long-term memory to your models. Learn how to set up Triggers to automatically predict the sentiment of new documents in your MongoDB database and add them as additional fields to your documents. See the GitHub Repo.
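The logic of such a Trigger can be sketched as follows. Atlas Triggers themselves run JavaScript functions, so this Python version only illustrates the flow: read the newly inserted document from the change event, classify its text, and build an update that stores the result on the same document. The function names, the `text` and `sentiment` field names, and the keyword-based classifier are all assumptions for this example; the toy classifier merely stands in for a call to a HuggingFace sentiment model.

```python
from typing import Any, Callable

# A classifier takes text and returns a (label, score) pair.
Classifier = Callable[[str], tuple[str, float]]


def keyword_classifier(text: str) -> tuple[str, float]:
    """Toy stand-in for a sentiment model; not a real HuggingFace call."""
    positive_words = {"great", "good", "love", "excellent"}
    words = text.lower().split()
    hits = sum(1 for word in words if word.strip(".,!?") in positive_words)
    label = "POSITIVE" if hits > 0 else "NEGATIVE"
    score = hits / len(words) if words else 0.0
    return label, score


def build_sentiment_update(
    change_event: dict[str, Any],
    classify: Classifier,
    text_field: str = "text",  # assumed name of the field that holds the text
) -> tuple[dict[str, Any], dict[str, Any]]:
    """Return the (filter, update) pair that adds sentiment to the inserted document."""
    document = change_event["fullDocument"]       # the document that was inserted
    label, score = classify(document[text_field])

    filter_ = {"_id": change_event["documentKey"]["_id"]}
    update = {"$set": {"sentiment": {"label": label, "score": score}}}
    return filter_, update


if __name__ == "__main__":
    # Made-up insert event shaped like a MongoDB change event.
    event = {
        "operationType": "insert",
        "documentKey": {"_id": 1},
        "fullDocument": {"_id": 1, "text": "I love this movie!"},
    }
    print(build_sentiment_update(event, keyword_classifier))
```

The returned pair maps directly onto an `update_one(filter, update)` call in PyMongo, or the equivalent `updateOne` call inside a Trigger function.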

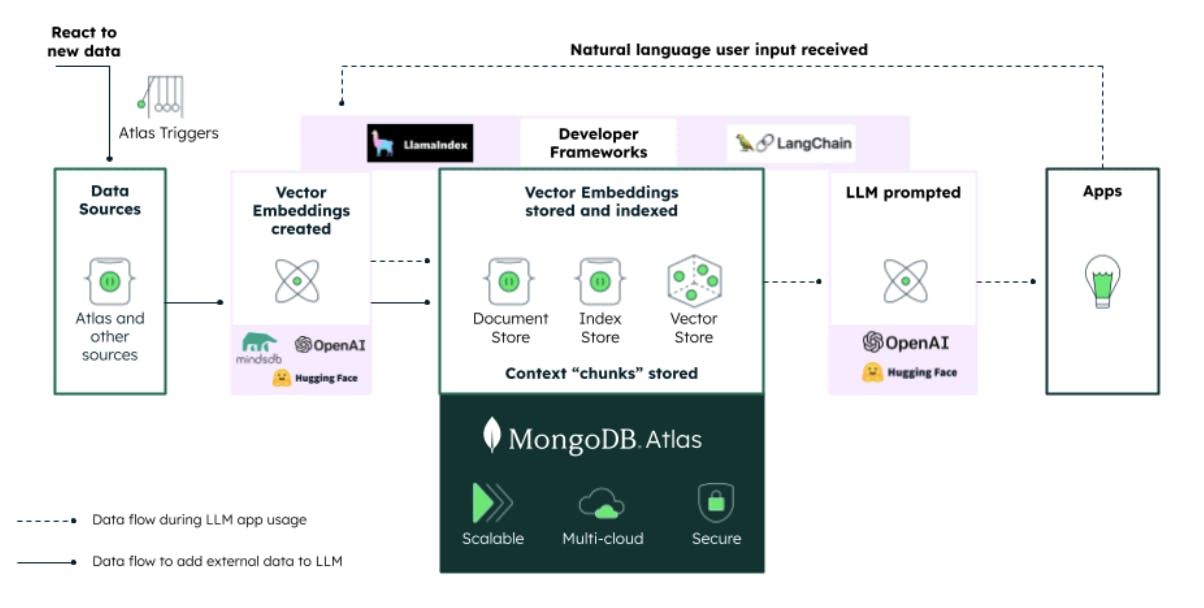

Figure 1: The sample app architecture shows how external, or proprietary, data provides long-term memory to an LLM and how the data flows from a user's input to an LLM-powered response.

From prototype to production with MongoDB for generative AI-enriched apps

MongoDB’s developer data platform built on Atlas provides a modern, optimized developer experience while also being battle tested by thousands of enterprises globally to perform at scale and securely.

Whether you are building the next big thing at a startup or enterprise, Atlas enables you to:

- Accelerate building your generative AI-enriched applications that are grounded in the truth of operational data.
- Simplify your app architecture by leveraging a single platform that allows you to store app and vector data in the same place, react to changes in source data with serverless functions, and search across multiple data modalities to improve the relevance and accuracy of the responses your apps generate.
- Easily evolve your gen AI-enriched apps with the flexibility of the document model while maintaining a simple, elegant developer experience.
- Seamlessly integrate leading AI services and systems such as the hyperscalers and open source LLMs and frameworks to stay competitive in dynamic markets.
- Build gen AI-enriched applications on a high performance, highly scalable operational database that's had a decade of validation over a wide variety of AI use cases.

While these examples are the building blocks for something more innovative, MongoDB can help you go from concept to production to scale. Get started today by signing up for the MongoDB Atlas free tier and integrating with your preferred frameworks and LLMs. If you’re interested in working with us more closely, check out our MongoDB AI Innovators program, which enables artificial intelligence innovation and showcases cutting-edge solutions from startups, customers, and partners.
