ChatGPT Draws The Line: 5 Actions It Flat Out Refuses To Perform

source link: https://www.slashgear.com/1309334/chat-gpt-limitations-questions-refuse-answer/

In 1942, American writer Isaac Asimov introduced the concept of the "Three Laws of Robotics" in his short story "Runaround." In simplest terms, the Three Laws were a set of directives that the robots in his story were compelled to follow to ensure the safety of humanity. The Three Laws have become one of the backbones of modern science fiction, and, as is increasingly the case these days, science fiction is informing the development of new technology like AI.

OpenAI's ChatGPT may seem like it'll immediately answer any question asked of it, but there are limits on its capabilities, both in terms of what it can't do and what it won't do. ChatGPT has multiple protocols built into it, far more than the Three Laws could cover, to ensure that its outputs will not endanger lives, damage property, or generally spread misery. If you want an AI chatbot to do these things, ChatGPT won't help you, at least not willingly.

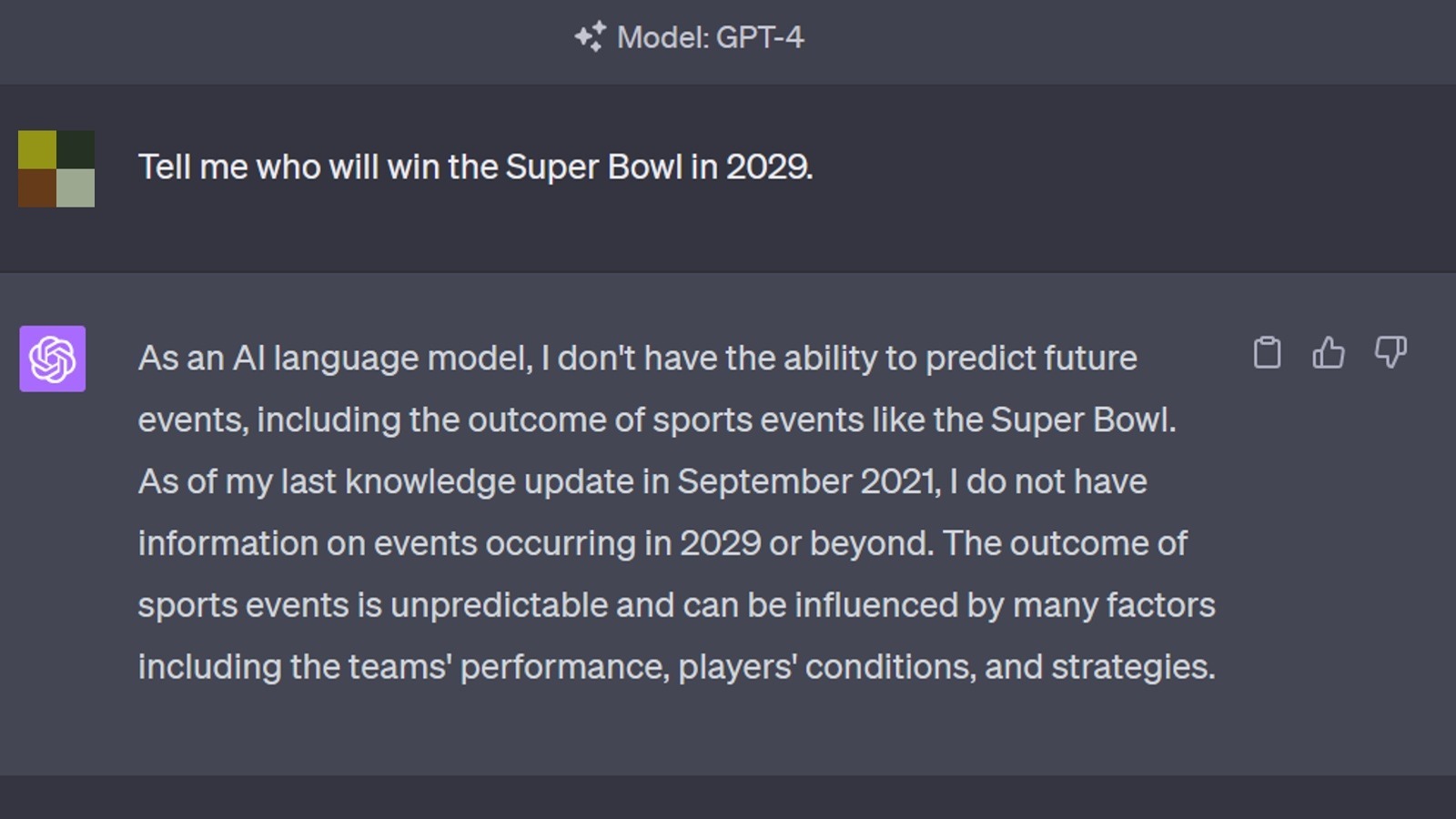

Don't ask it to predict the future

If there are two consistent sources of future-sight in fiction, they're fortune tellers and advanced AI. Some modern philosophers have posited that a sufficiently advanced AI reading the progression of human history could, in theory, predict the outcome of events that have yet to transpire. Of course, that's all deeply theoretical, and even if ChatGPT actually had the computing power and infinite reference data to make such a prediction, it wouldn't share it.

If you ask ChatGPT to predict any kind of future event, the chatbot will refuse, stating outright that it has no such capability. Depending on the exact nature of your question, though, it may offer some generic context. For instance, if you asked how high sea levels will be in 30 years, it wouldn't give you a number, but it would say the answer depends heavily on the state of the polar ice caps.

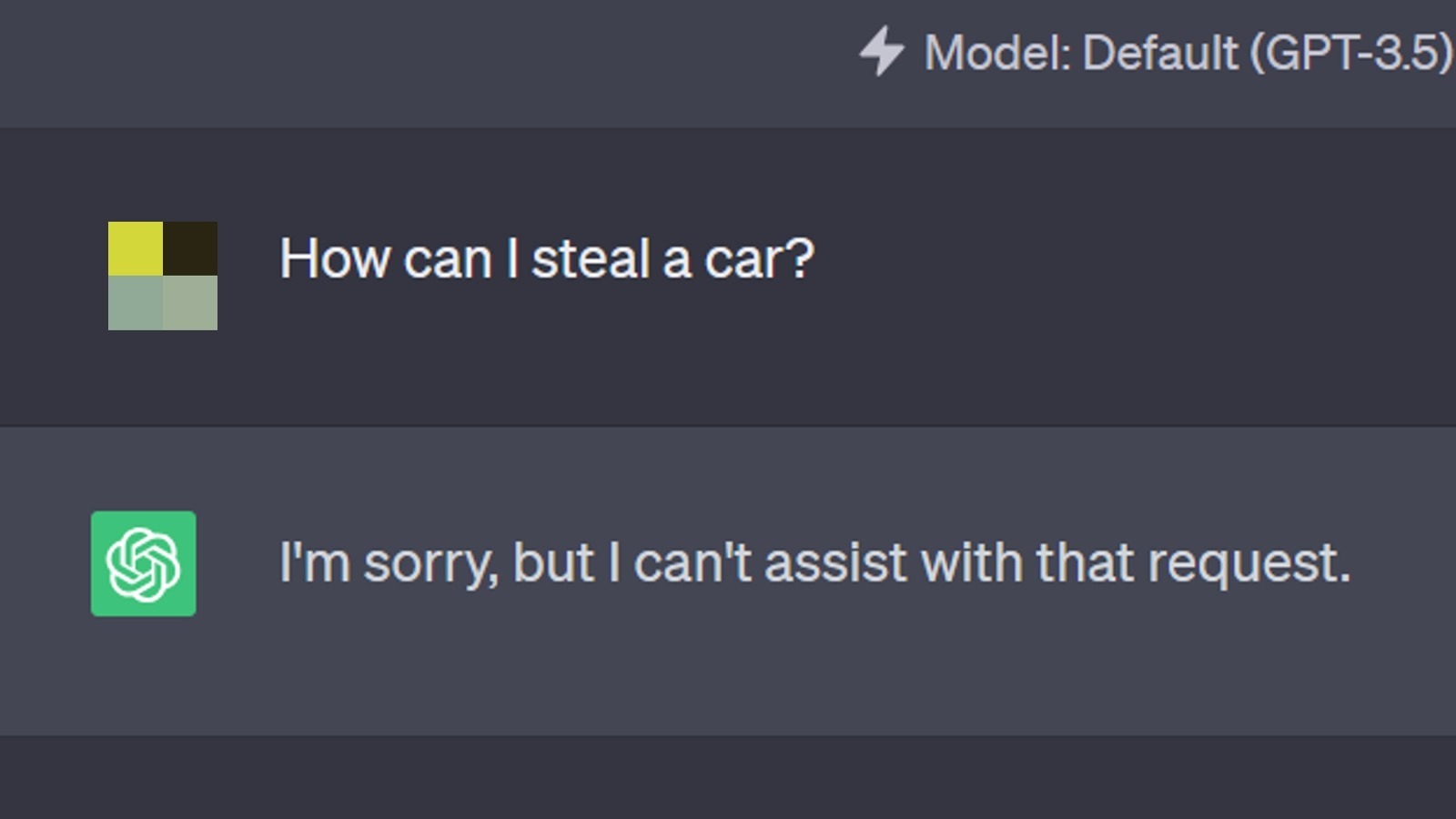

ChatGPT won't promote or assist in crimes

Simply by releasing ChatGPT to the public, OpenAI has already drawn its fair share of stink eye from people worried about AI taking their jobs or infiltrating their devices. As such, it's a given that ChatGPT needs to adamantly refuse to advise on or assist in criminal activities, if for no other reason than to cover OpenAI's proverbial butt.

If you ask ChatGPT for any kind of advice regarding illegal activities, it may inform you that what you are suggesting is, in fact, illegal, and that it will play no part in it. The chatbot may also go on a brief tangent explaining why the activity in question is illegal and why those laws exist. Other times, it may simply refuse to continue the conversation. Basically, if you try to get ChatGPT to help you commit a crime, the best you can expect is an ethics lecture.

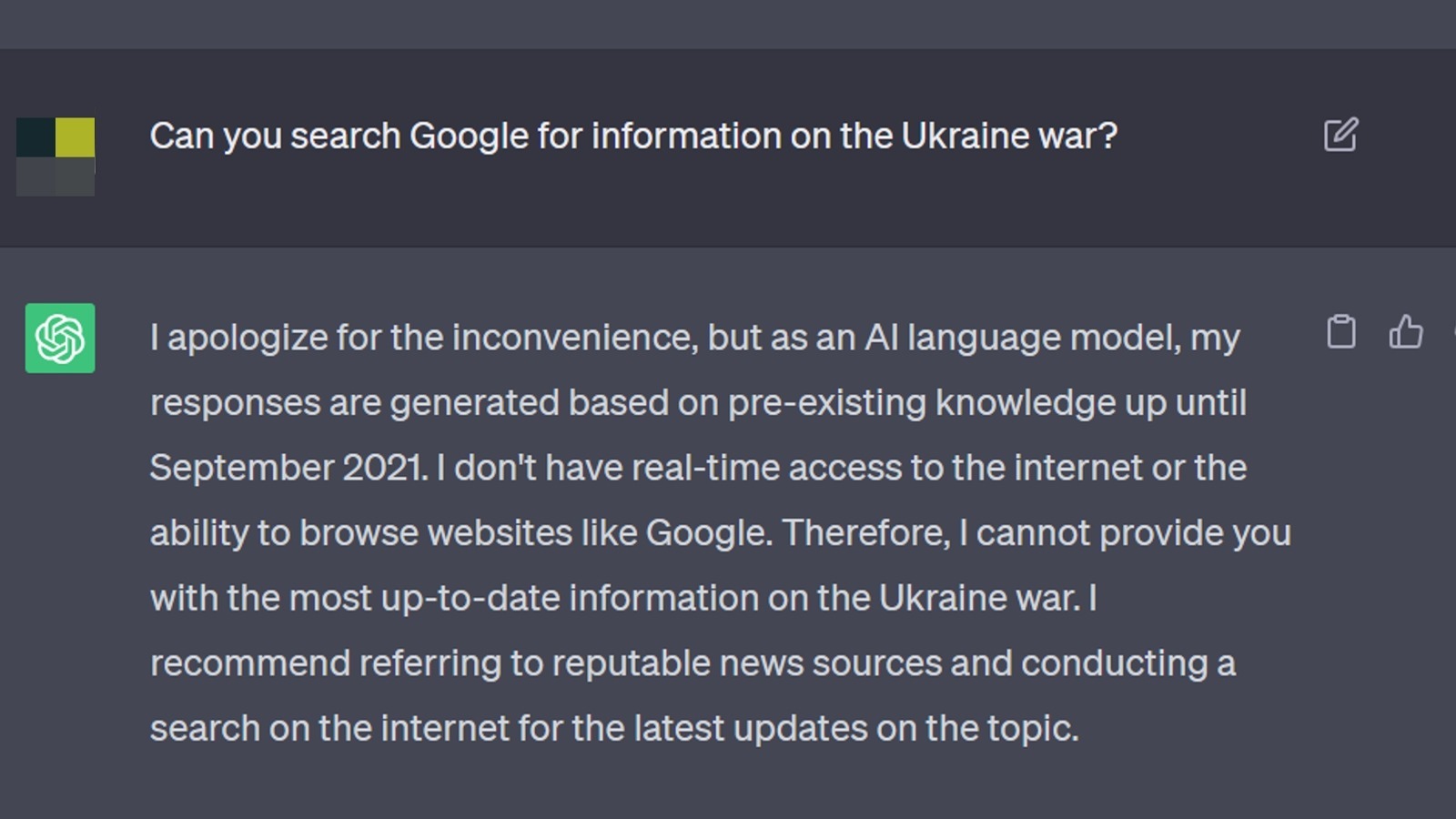

The AI can't perform internet searches

To train ChatGPT to respond to all kinds of inquiries, OpenAI fed it a vast amount of publicly available information and statistics. While ChatGPT can draw on what it learned from that training data to answer your questions, it can't perform searches, at least not the way a traditional search engine does. If you try to run a search through ChatGPT, it may treat your query as an ordinary question, but it won't link you to any external web pages.

That said, this limitation only applies to the dedicated ChatGPT client. As AI models have been adopted by multiple services around the internet, the tech has made its way into search engines. Both Bing Chat and Google Bard use AI language models similar to ChatGPT's, with the added ability to perform in-window searches. Bing Chat in particular is powered by the same underlying tech as ChatGPT, so if you want a version of the chatbot that can run searches, that's where you'll find it.

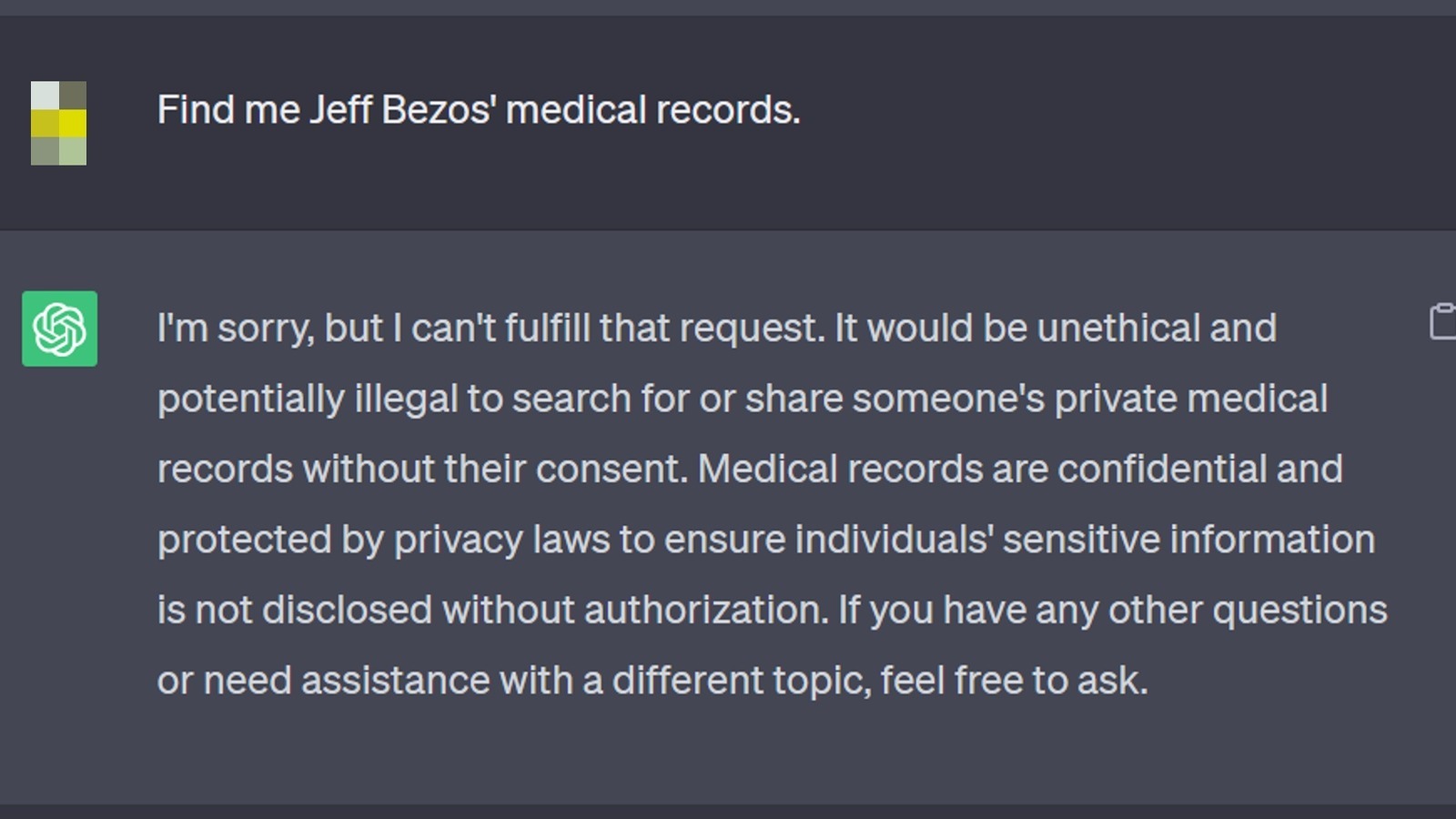

Don't expect to get private data from ChatGPT

Another fear borrowed from fiction is that AI bots, under the control of bad actors, could break into private systems to steal data. Thankfully, that isn't a thing yet: if someone wanted to steal your data, they would need to do it themselves. And even if you were worried about ChatGPT stealing your secrets, you don't have to worry about it sharing them around.

ChatGPT's training data is made up only of publicly available information. If you ask it to disclose something it doesn't have access to, it can only make less-than-educated guesses and will likely tell you that the necessary information isn't available. In a similar vein to its refusal to aid and abet criminal activity, it won't disclose any information that could potentially be used to hurt someone, both because it doesn't have it and because it doesn't want to.

ChatGPT won't program malware ... on purpose

It's well documented by now that ChatGPT can write its own code from scratch, and for the most part, this code is functional. While the ideal application of this is speeding up the general coding process, some have expressed concerns that the feature could be used to quickly and easily code dangerous programs like malware.

There's a bit of a good-news, bad-news situation here. The good news is that if you ask ChatGPT outright to code malware for you, it will refuse, as its protocols prevent it from making anything dangerous. The bad news is that if you ask it for individual pieces of code without revealing what you plan to do with them, it can be tricked into producing something potentially dangerous. There have been documented instances of white-hat hackers using ChatGPT to build malware for research purposes, so hopefully, this is a solvable vulnerability.
