Kubernetes in Practice: Deploying Nginx + Tomcat + NFS to Separate Static and Dynamic Content - Linux-1874

source link: https://www.cnblogs.com/qiuhom-1874/p/17454460.html

1. Building layered custom images to run Nginx and a Java service, with static and dynamic content separated via NFS

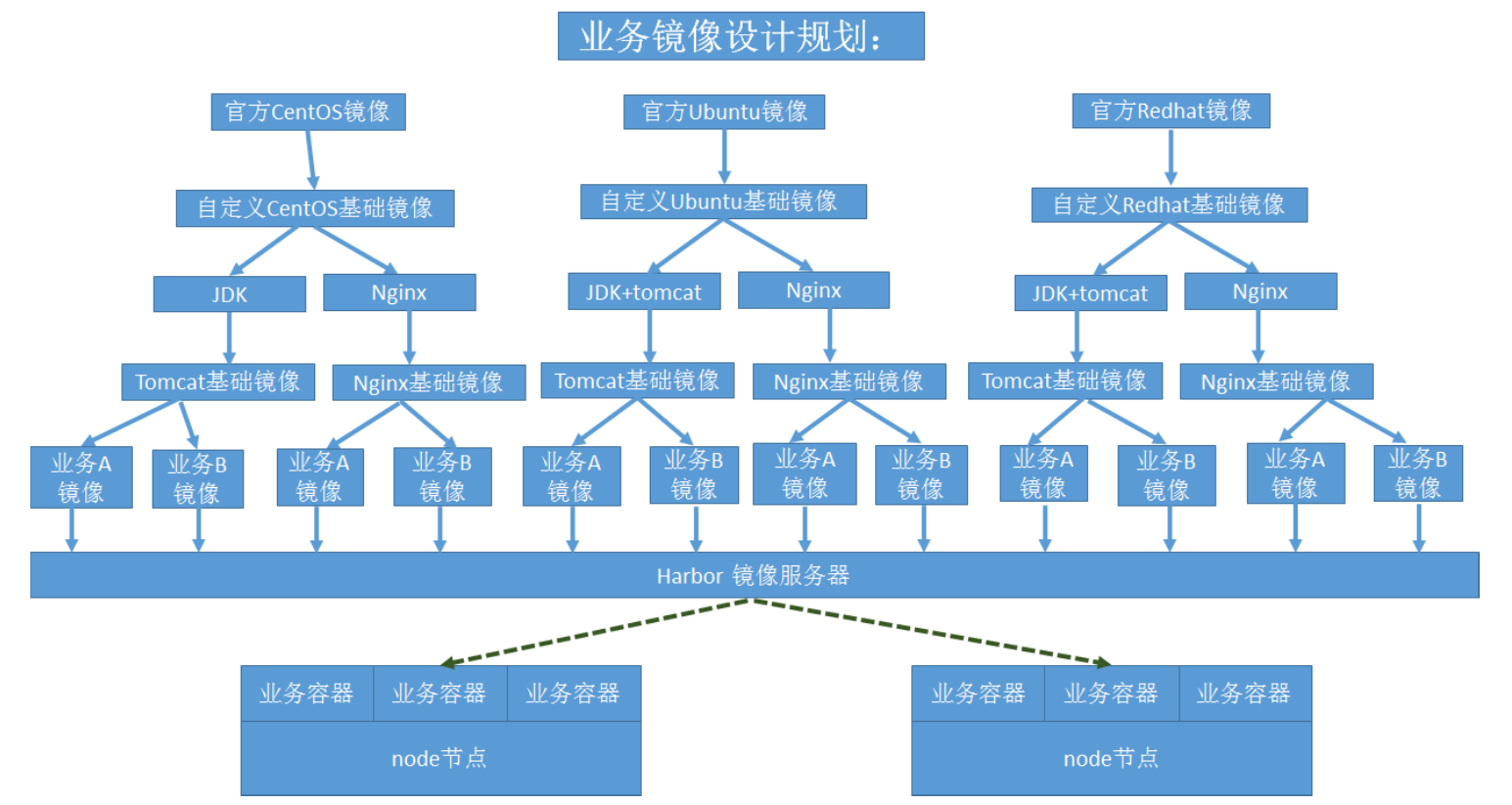

1.1 Business image design plan

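Depending on the business, we start from an official base image and add the tools and environment we need, producing a custom base image. From that custom base image we build a base image for each service, and from each service base image we build the corresponding business image. Finally, all of these images are pushed to the local Harbor registry, and Kubernetes manifests are used to run the business workloads on the cluster.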
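The layering described above implies a build order: a parent image has to exist in the registry before anything that uses it in `FROM` can be built. A minimal sketch of that order follows, using the build directories (relative to `~/k8s-data/dockerfile/`) and image names that appear in the listings later in this article. The helper name `print_build_order` and the `<TAG>` placeholder are illustrative only.

```shell
#!/bin/sh
# Sketch: print the layered images in the order they have to be built.
# Each line is "<build directory>  <image produced>". Directories and image
# names come from this article; the helper itself is hypothetical.
REGISTRY="harbor.ik8s.cc"

print_build_order() {
  cat <<EOF
system/centos                      ${REGISTRY}/baseimages/magedu-centos-base:7.9.2009
web/pub-images/nginx-base          ${REGISTRY}/pub-images/nginx-base:v1.22.0
web/magedu/nginx                   ${REGISTRY}/magedu/nginx-web1:<TAG>
web/pub-images/jdk-1.8.212         ${REGISTRY}/pub-images/jdk-base:v8.212
web/pub-images/tomcat-base-8.5.43  ${REGISTRY}/pub-images/tomcat-base:v8.5.43
web/magedu/tomcat-app1             ${REGISTRY}/magedu/tomcat-app1:<TAG>
EOF
}

print_build_order
```

The nginx chain (lines 2 and 3) and the JDK/tomcat chain (lines 4 to 6) both depend only on the CentOS base image, so the two chains could be built in either order once line 1 is done.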

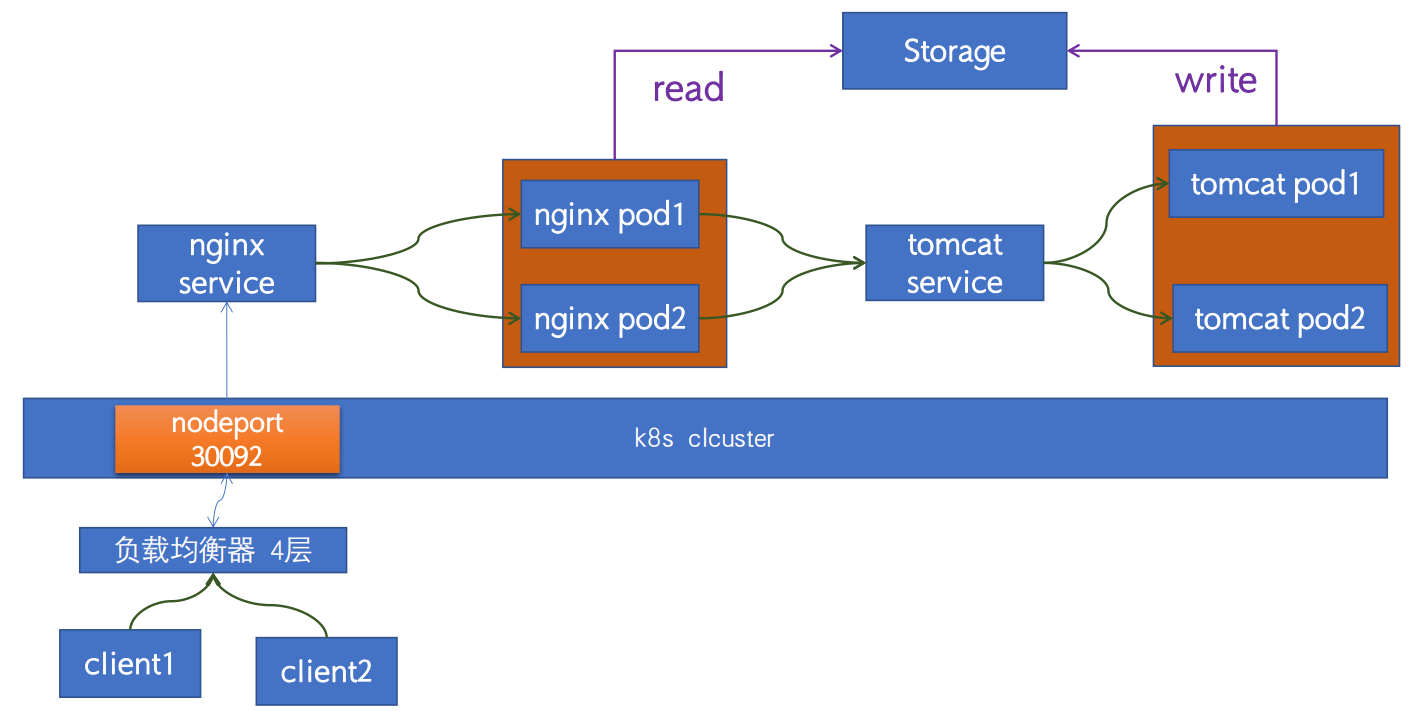

1.2 Architecture: static/dynamic separation with Nginx + Tomcat + NFS

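Clients reach the services on Kubernetes through the reverse proxy on the load balancer. The nginx pods and the tomcat pods are tied together by Kubernetes Service (svc) resources. All data, both static and dynamic resources, is made available to the pods through mounted storage. nginx is the service entry point: it accepts client requests and answers requests for static resources itself by reading them from storage (JS files, CSS files, images, and so on). Requests for dynamic resources are forwarded by nginx to the backend tomcat server, which is also responsible for writing data such as images uploaded by users.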

2. Building the custom CentOS base image

root@k8s-master01:~/k8s-data/dockerfile/system/centos# ls

CentOS7-aliyun-Base.repo CentOS7-aliyun-epel.repo Dockerfile build-command.sh filebeat-7.12.1-x86_64.rpm

root@k8s-master01:~/k8s-data/dockerfile/system/centos# cat Dockerfile

# Custom CentOS base image

FROM centos:7.9.2009

ADD filebeat-7.12.1-x86_64.rpm /tmp

# Add the Aliyun repos

ADD CentOS7-aliyun-Base.repo CentOS7-aliyun-epel.repo /etc/yum.repos.d/

# Install custom tools and environment

RUN yum makecache && yum install -y /tmp/filebeat-7.12.1-x86_64.rpm vim wget tree lrzsz gcc gcc-c++ automake pcre pcre-devel zlib zlib-devel openssl openssl-devel iproute net-tools iotop && rm -rf /etc/localtime /tmp/filebeat-7.12.1-x86_64.rpm && ln -snf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && useradd nginx -u 2088

root@k8s-master01:~/k8s-data/dockerfile/system/centos# cat build-command.sh

#!/bin/bash

#docker build -t harbor.ik8s.cc/baseimages/magedu-centos-base:7.9.2009 .

#docker push harbor.ik8s.cc/baseimages/magedu-centos-base:7.9.2009

/usr/local/bin/nerdctl build -t harbor.ik8s.cc/baseimages/magedu-centos-base:7.9.2009 .

/usr/local/bin/nerdctl push harbor.ik8s.cc/baseimages/magedu-centos-base:7.9.2009

root@k8s-master01:~/k8s-data/dockerfile/system/centos#

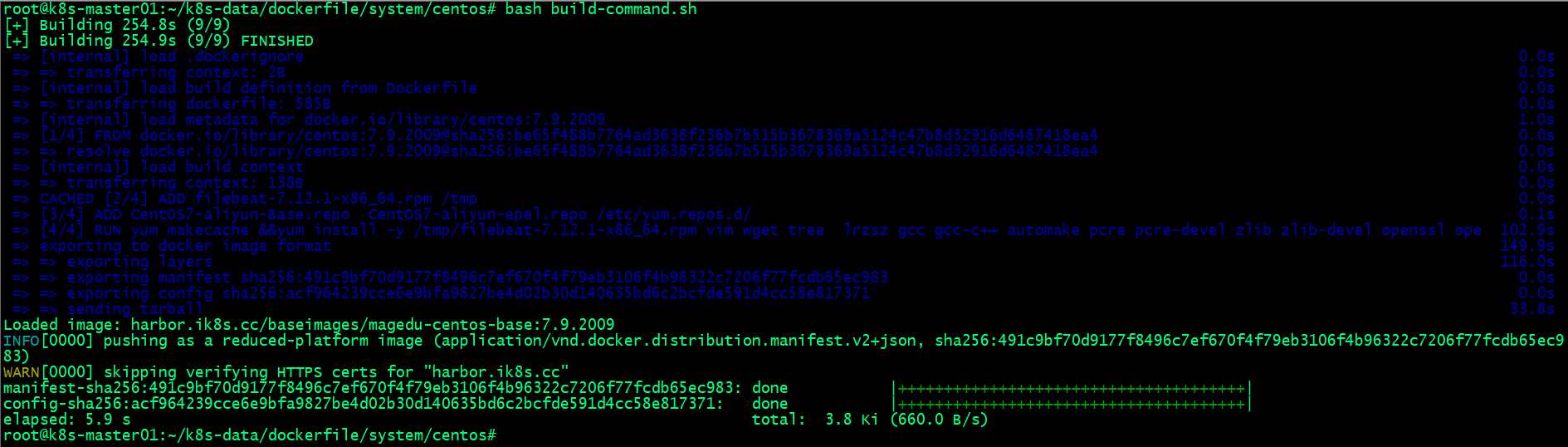

2.1 Build the custom CentOS base image

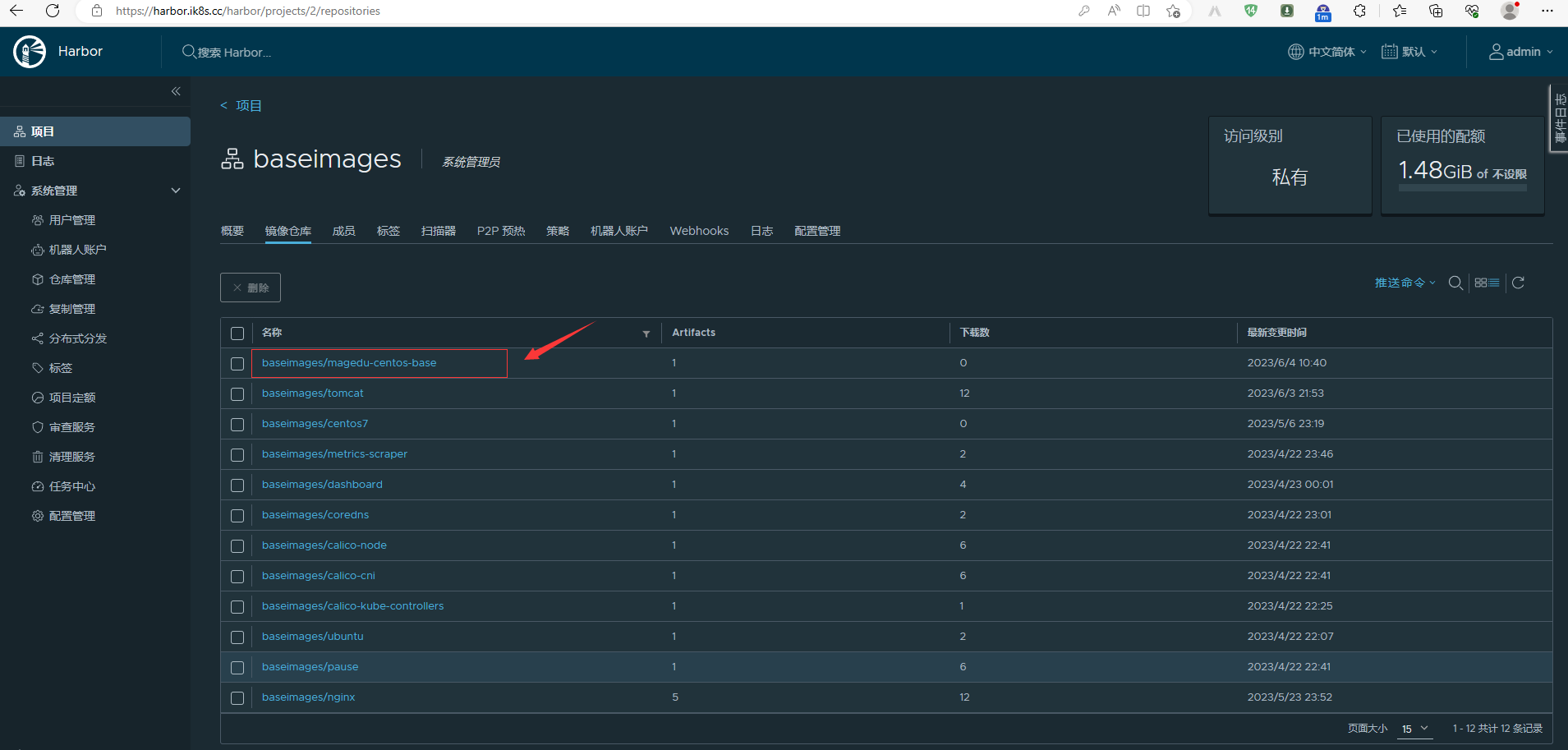

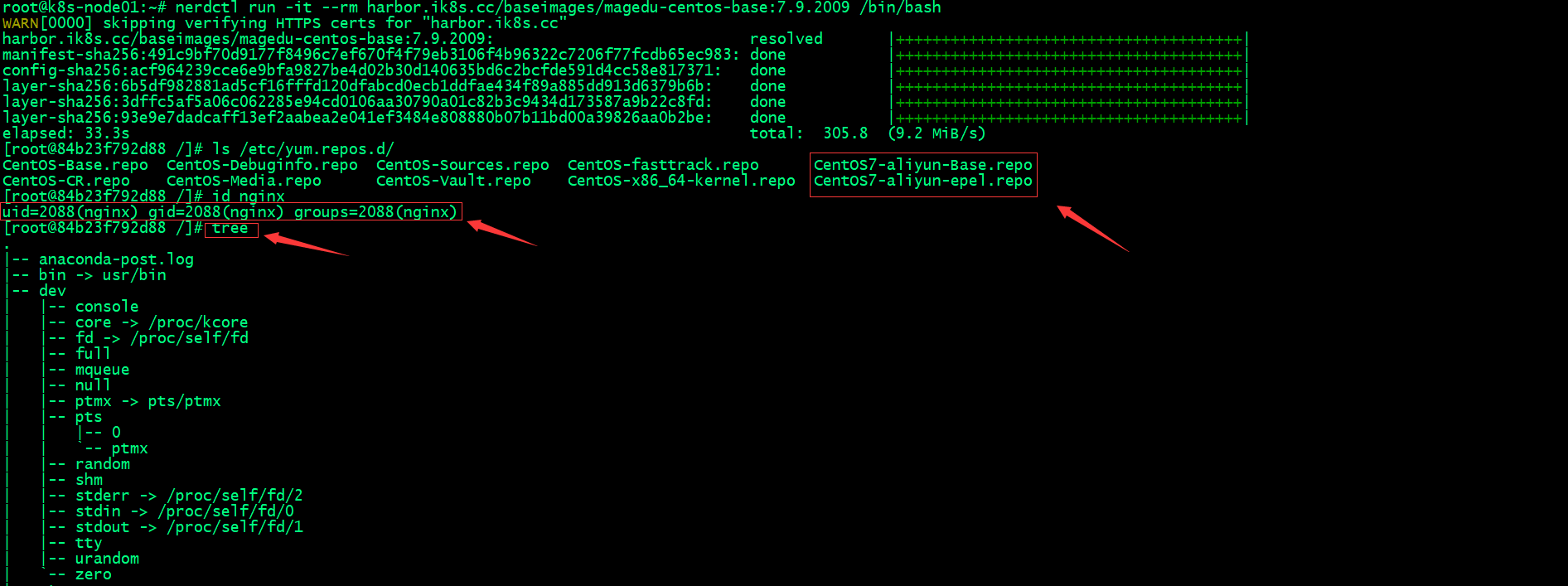

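2.2 Verify the custom CentOS base image

Check in Harbor that the image was pushed successfully.

Run the image as a container and check that it contains the tools and environment we added.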
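One way to make the second check repeatable is a small helper that lists whichever expected commands are missing. This is only a sketch: the helper name `missing_tools` is hypothetical, and the binary names in the usage comment are assumptions about what the installed packages provide (for example, that `iproute` provides `ip` and `net-tools` provides `netstat`).

```shell
#!/bin/sh
# Sketch: report which expected commands are missing from PATH.
# Prints one missing command per line; prints nothing when all are present.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}

# Inside a container started from the base image one might run
# (binary names are assumptions, see the note above):
#   missing_tools vim wget tree gcc ip netstat iotop filebeat
```

Empty output would mean every listed command was found. Any name that is printed points at a package that did not install as expected.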

3. Building the nginx image from the custom CentOS base image

root@k8s-master01:~/k8s-data/dockerfile/web/pub-images/nginx-base# ls

Dockerfile build-command.sh nginx-1.22.0.tar.gz

root@k8s-master01:~/k8s-data/dockerfile/web/pub-images/nginx-base# cat Dockerfile

#Nginx Base Image

# Import the custom CentOS base image

FROM harbor.ik8s.cc/baseimages/magedu-centos-base:7.9.2009

# Install the build environment

RUN yum install -y vim wget tree lrzsz gcc gcc-c++ automake pcre pcre-devel zlib zlib-devel openssl openssl-devel iproute net-tools iotop

# Add the nginx source to /usr/local/src/

ADD nginx-1.22.0.tar.gz /usr/local/src/

# Compile nginx

RUN cd /usr/local/src/nginx-1.22.0 && ./configure && make && make install && ln -sv /usr/local/nginx/sbin/nginx /usr/sbin/nginx && rm -rf /usr/local/src/nginx-1.22.0.tar.gz

root@k8s-master01:~/k8s-data/dockerfile/web/pub-images/nginx-base# cat build-command.sh

#!/bin/bash

#docker build -t harbor.ik8s.cc/pub-images/nginx-base:v1.18.0 .

#docker push harbor.ik8s.cc/pub-images/nginx-base:v1.18.0

nerdctl build -t harbor.ik8s.cc/pub-images/nginx-base:v1.22.0 .

nerdctl push harbor.ik8s.cc/pub-images/nginx-base:v1.22.0

root@k8s-master01:~/k8s-data/dockerfile/web/pub-images/nginx-base#

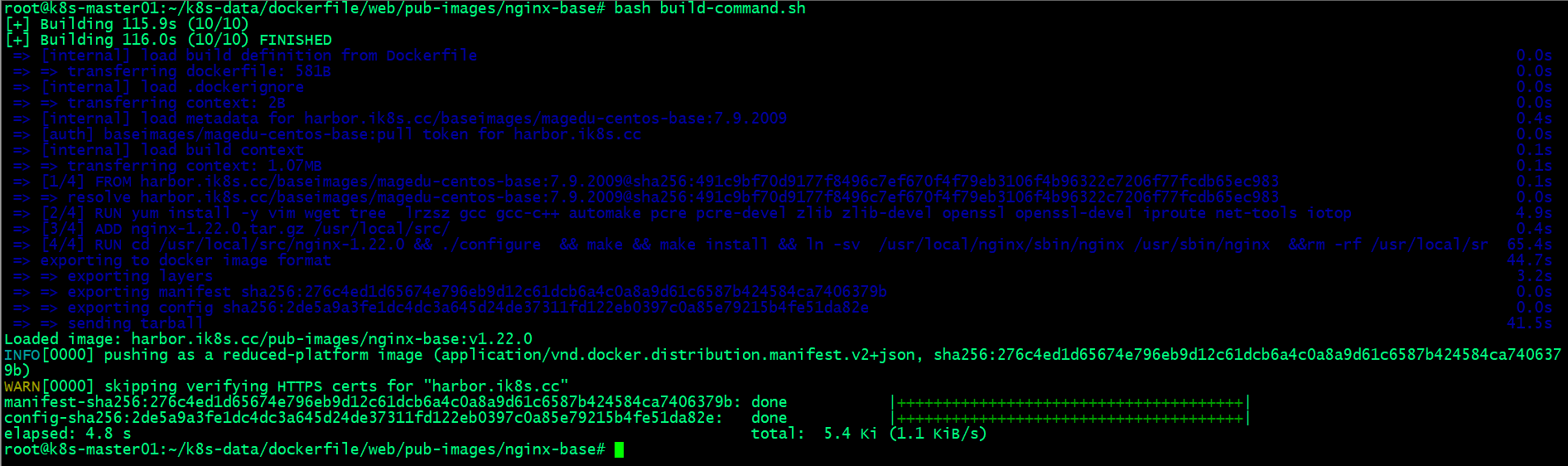

3.1 Build the custom nginx base image

3.2 Verify the custom nginx base image

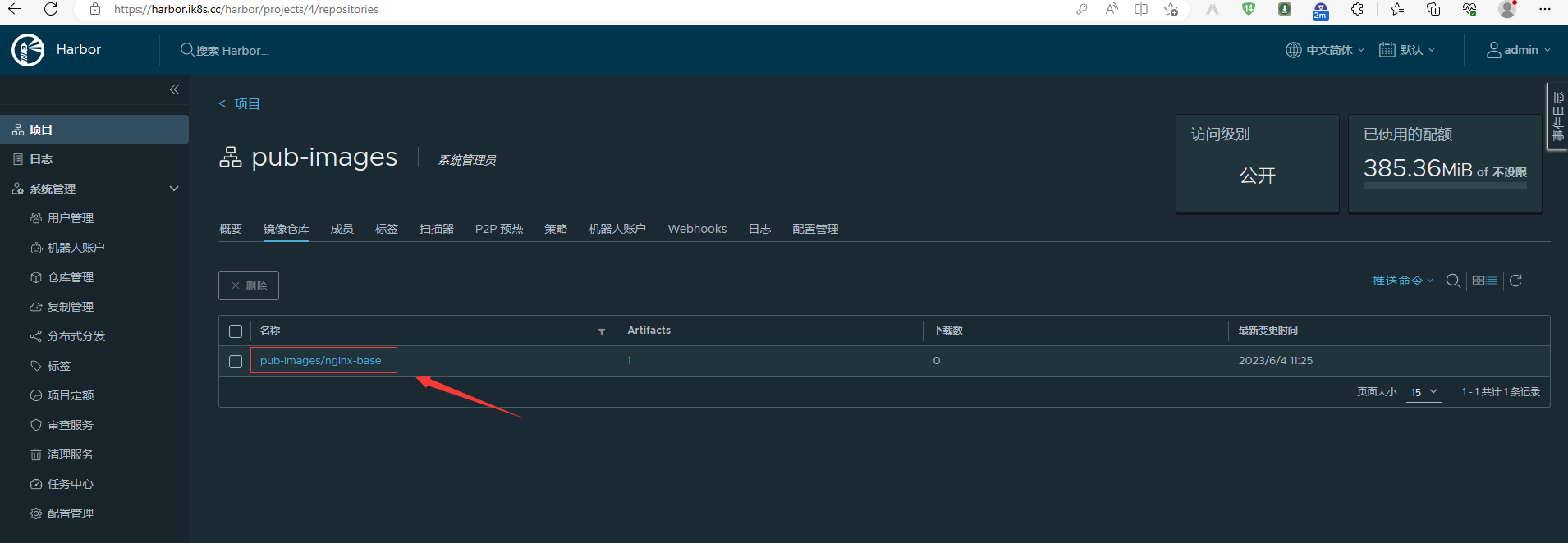

Check that the nginx base image was pushed to Harbor.

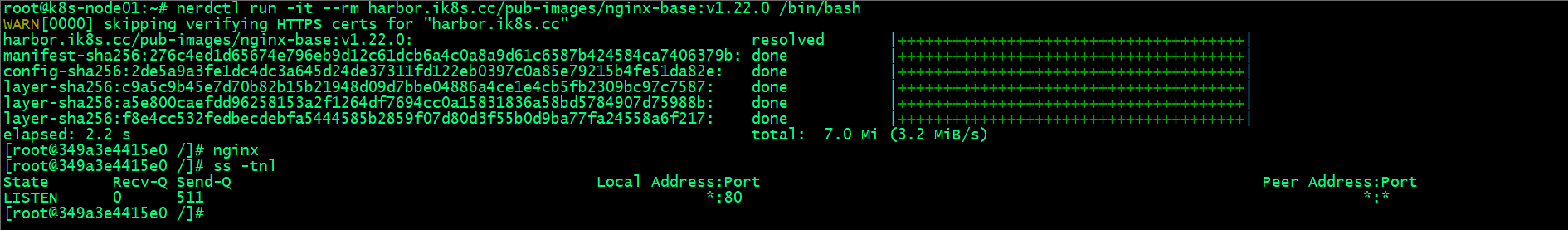

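Run the nginx base image as a container and check that nginx is installed correctly.

If the nginx base image runs as a container and nginx starts inside it, the nginx base image was built correctly.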

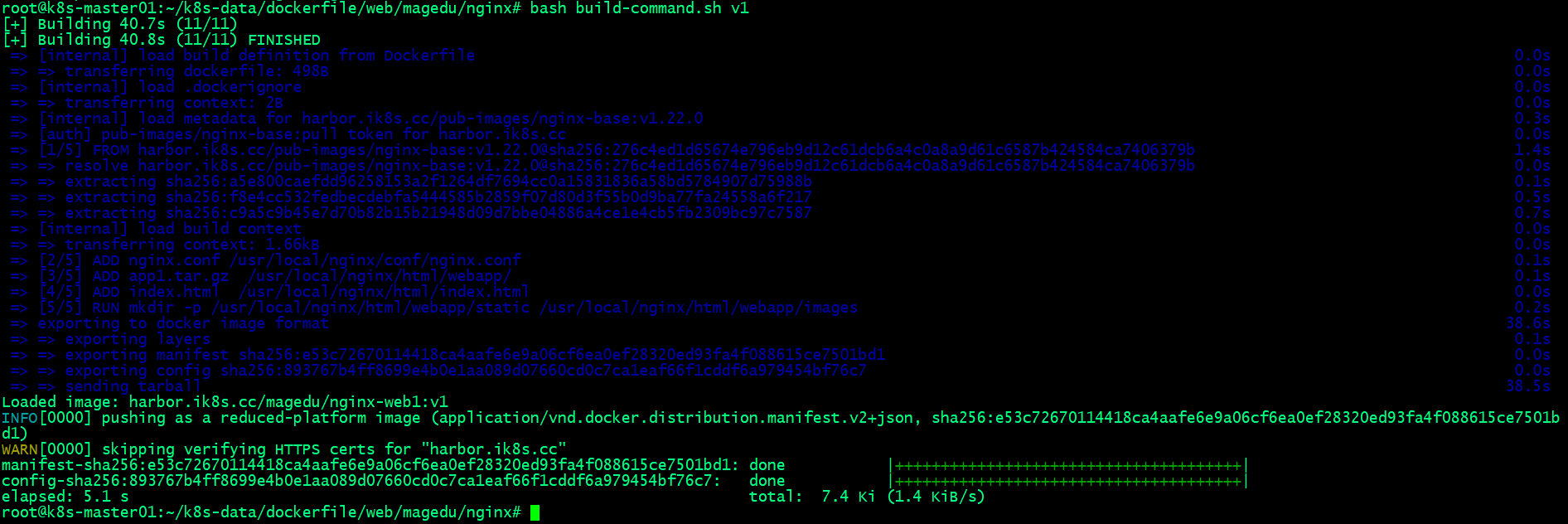

3.3 Build the custom nginx business image

root@k8s-master01:~/k8s-data/dockerfile/web/magedu/nginx# ls

Dockerfile app1.tar.gz build-command.sh index.html nginx.conf webapp

root@k8s-master01:~/k8s-data/dockerfile/web/magedu/nginx# cat Dockerfile

#Nginx 1.22.0

# Import the nginx base image

FROM harbor.ik8s.cc/pub-images/nginx-base:v1.22.0

# Add the nginx configuration file

ADD nginx.conf /usr/local/nginx/conf/nginx.conf

# Add the business code

ADD app1.tar.gz /usr/local/nginx/html/webapp/

ADD index.html /usr/local/nginx/html/index.html

# Create the mount points for static resources

RUN mkdir -p /usr/local/nginx/html/webapp/static /usr/local/nginx/html/webapp/images

# Expose ports

EXPOSE 80 443

# Run nginx

CMD ["nginx"]

root@k8s-master01:~/k8s-data/dockerfile/web/magedu/nginx# cat nginx.conf

user nginx nginx;

worker_processes auto;

daemon off;

events {

worker_connections 1024;

}

http {

include mime.types;

default_type application/octet-stream;

#log_format main '$remote_addr - $remote_user [$time_local] "$request" '

# '$status $body_bytes_sent "$http_referer" '

# '"$http_user_agent" "$http_x_forwarded_for"';

#access_log logs/access.log main;

sendfile on;

#tcp_nopush on;

#keepalive_timeout 0;

keepalive_timeout 65;

upstream tomcat_webserver {

server magedu-tomcat-app1-service.magedu:80;

}

server {

listen 80;

server_name localhost;

location / {

root html;

index index.html index.htm;

}

location /webapp {

root html;

index index.html index.htm;

}

location /app1 {

proxy_pass http://tomcat_webserver;

proxy_set_header Host $host;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Real-IP $remote_addr;

}

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root html;

}

}

}

root@k8s-master01:~/k8s-data/dockerfile/web/magedu/nginx# cat build-command.sh

#!/bin/bash

TAG=$1

#docker build -t harbor.ik8s.cc/magedu/nginx-web1:${TAG} .

#echo "Image build finished, about to push to harbor"

#sleep 1

#docker push harbor.ik8s.cc/magedu/nginx-web1:${TAG}

#echo "Image push to harbor finished"

nerdctl build -t harbor.ik8s.cc/magedu/nginx-web1:${TAG} .

nerdctl push harbor.ik8s.cc/magedu/nginx-web1:${TAG}

root@k8s-master01:~/k8s-data/dockerfile/web/magedu/nginx#

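The Dockerfile above takes the nginx base image and adds the business code and configuration, then declares the exposed service ports and the command that runs nginx.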
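The `build-command.sh` shown above takes the tag from `$1` without checking it. If the script is run with no argument, the image reference ends in a bare colon, which is unlikely to be what was intended. A minimal sketch of a guard follows; the helper name `image_ref` is hypothetical, while the repository path is the one used in the script.

```shell
#!/bin/sh
# Sketch: build the full image reference and refuse an empty tag.
REPO="harbor.ik8s.cc/magedu/nginx-web1"

image_ref() {
  tag="$1"
  if [ -z "$tag" ]; then
    echo "usage: build-command.sh <TAG>" >&2
    return 1
  fi
  echo "${REPO}:${tag}"
}

# Intended use inside build-command.sh (not executed here):
#   IMAGE=$(image_ref "$1") || exit 1
#   nerdctl build -t "$IMAGE" . && nerdctl push "$IMAGE"
```

Chaining build and push with `&&` also avoids pushing when the build step failed, which the original two-line form does not guard against.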

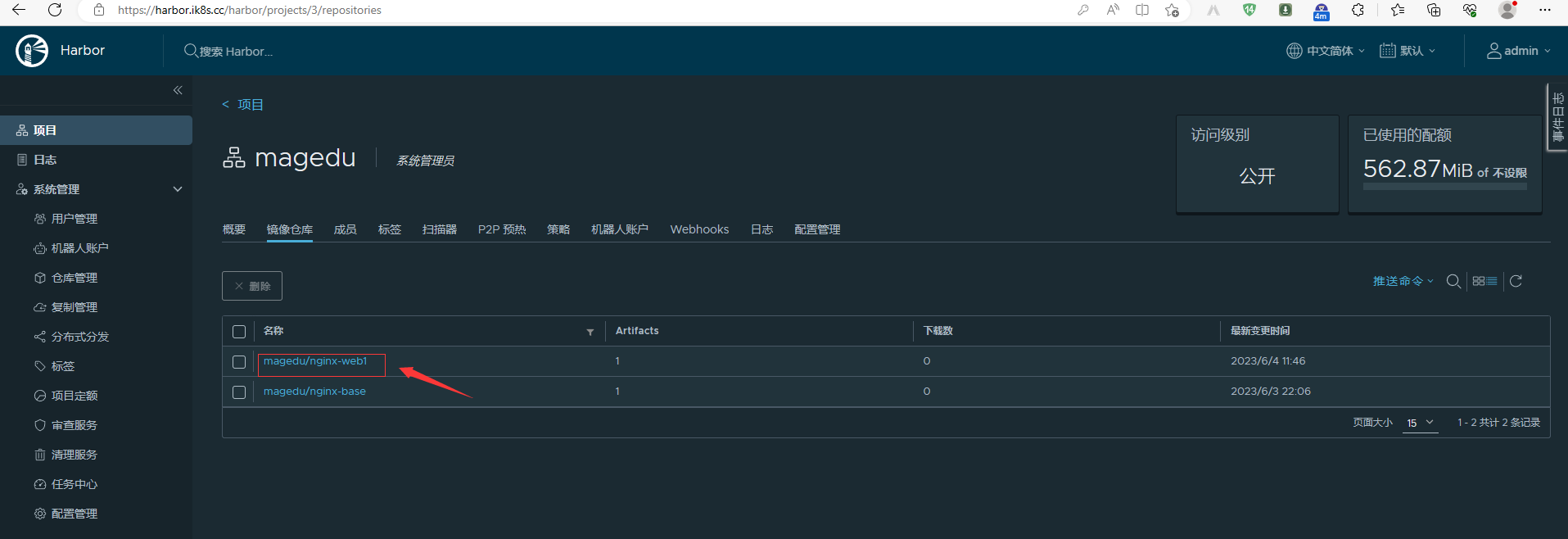

3.4 Verify the custom nginx business image

Check that the nginx business image was pushed to Harbor.

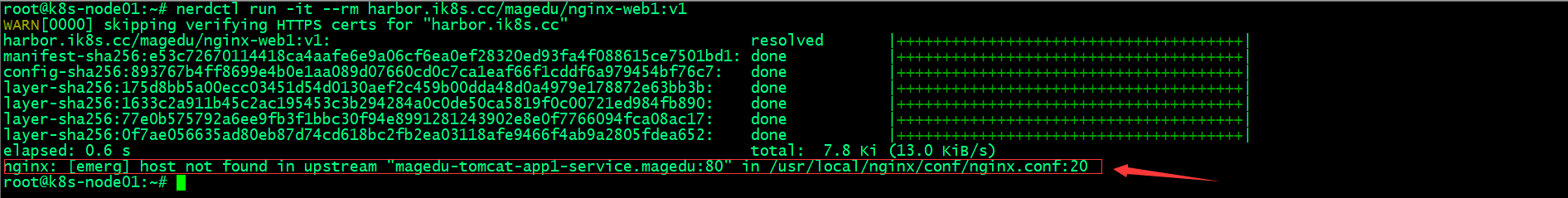

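Run the nginx business image as a container and check whether the business pages can be accessed.

Here nginx reports that it cannot find the upstream magedu-tomcat-app1-service.magedu:80. This is because the configuration file hard-codes the address of the tomcat Service (svc) in Kubernetes as the backend that nginx calls. The image can therefore only run inside a Kubernetes environment, and the tomcat Service must already exist in that cluster before the image is started.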
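The upstream name has the form `<service>.<namespace>`, which only resolves through the cluster DNS search path that pods receive. The sketch below shows how that short name expands to a fully qualified Service name. The cluster domain `cluster.local` is an assumption (it is the common default, but a cluster can be configured differently), and `svc_fqdn` is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: expand "<service>.<namespace>" to the fully qualified Service name.
# CLUSTER_DOMAIN is an assumption (the common default); adjust if the cluster differs.
CLUSTER_DOMAIN="${CLUSTER_DOMAIN:-cluster.local}"

svc_fqdn() {
  short="$1"
  service="${short%%.*}"      # text before the first dot
  namespace="${short#*.}"     # text after the first dot
  echo "${service}.${namespace}.svc.${CLUSTER_DOMAIN}"
}

svc_fqdn "magedu-tomcat-app1-service.magedu"
```

Outside the cluster there is no DNS server that knows this name, which is consistent with the startup error seen when the image is run directly with nerdctl.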

4. Building the tomcat image from the custom CentOS base image

4.1 Build the JDK base image from the custom CentOS base image

root@k8s-master01:~/k8s-data/dockerfile/web/pub-images/jdk-1.8.212# ll

total 190464

drwxr-xr-x 2 root root 4096 Jun 4 04:04 ./

drwxr-xr-x 6 root root 4096 Aug 9 2022 ../

-rw-r--r-- 1 root root 389 Jun 4 04:04 Dockerfile

-rw-r--r-- 1 root root 259 Jun 4 04:02 build-command.sh

-rw-r--r-- 1 root root 195013152 Jun 22 2021 jdk-8u212-linux-x64.tar.gz

-rw-r--r-- 1 root root 2105 Jun 22 2021 profile

root@k8s-master01:~/k8s-data/dockerfile/web/pub-images/jdk-1.8.212# cat Dockerfile

#JDK Base Image

# Import the custom CentOS base image

FROM harbor.ik8s.cc/baseimages/magedu-centos-base:7.9.2009

# Install the JDK environment

ADD jdk-8u212-linux-x64.tar.gz /usr/local/src/

RUN ln -sv /usr/local/src/jdk1.8.0_212 /usr/local/jdk

ADD profile /etc/profile

ENV JAVA_HOME /usr/local/jdk

ENV JRE_HOME $JAVA_HOME/jre

ENV CLASSPATH $JAVA_HOME/lib/:$JRE_HOME/lib/

ENV PATH $PATH:$JAVA_HOME/bin

root@k8s-master01:~/k8s-data/dockerfile/web/pub-images/jdk-1.8.212# cat build-command.sh

#!/bin/bash

#docker build -t harbor.ik8s.cc/pub-images/jdk-base:v8.212 .

#sleep 1

#docker push harbor.ik8s.cc/pub-images/jdk-base:v8.212

nerdctl build -t harbor.ik8s.cc/pub-images/jdk-base:v8.212 .

nerdctl push harbor.ik8s.cc/pub-images/jdk-base:v8.212

root@k8s-master01:~/k8s-data/dockerfile/web/pub-images/jdk-1.8.212#

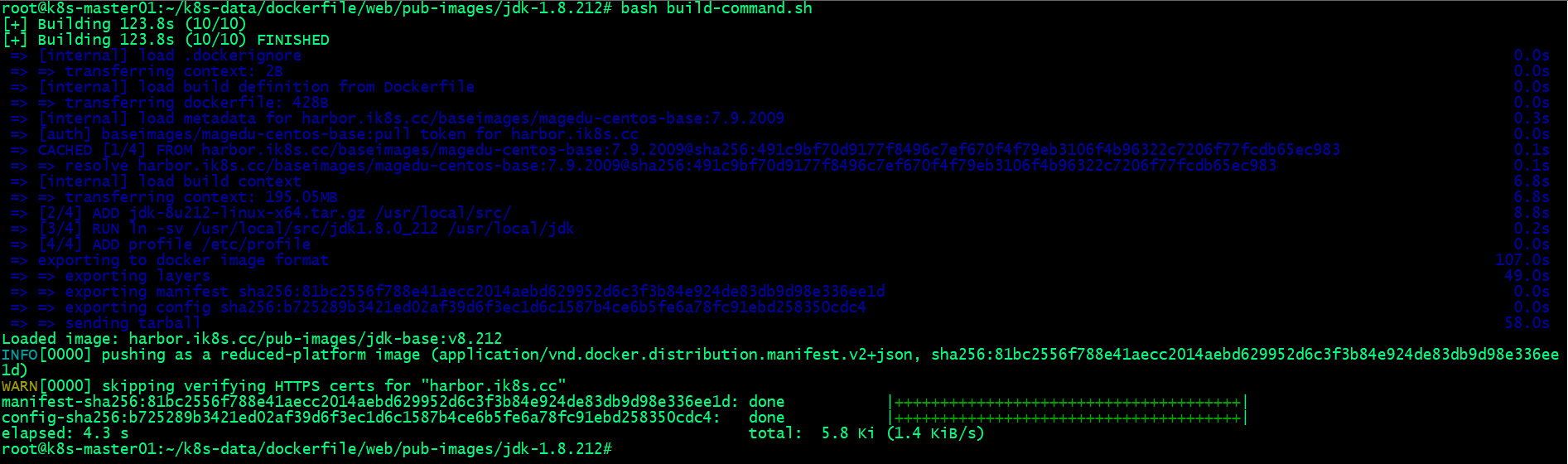

4.1.1 Build the custom JDK base image

4.1.2 Verify the custom JDK base image

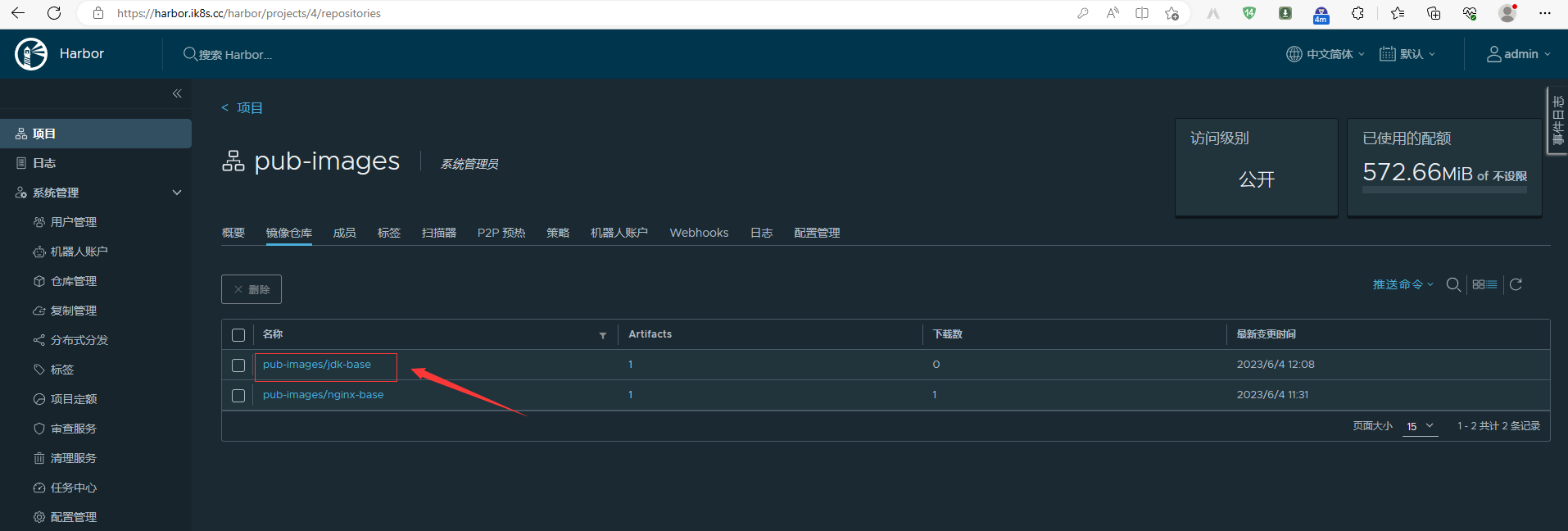

Check that the JDK base image was pushed to Harbor.

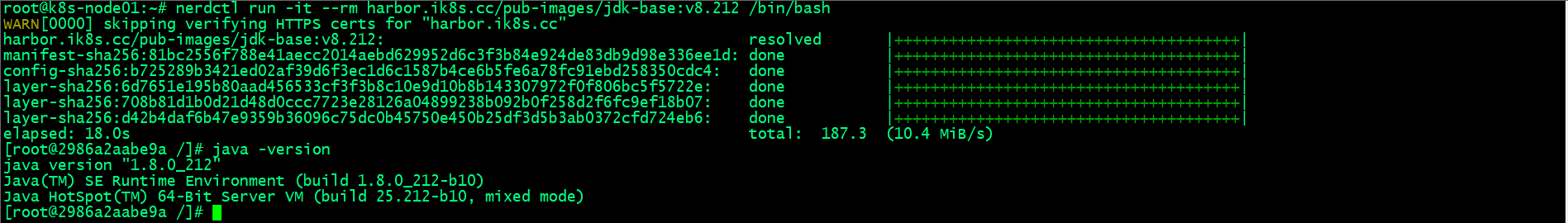

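Run the JDK base image as a container and check that the JDK environment is installed.

If the java command runs normally inside the container, the JDK image was built correctly.

4.2 Build the tomcat base image from the custom JDK image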

root@k8s-master01:~/k8s-data/dockerfile/web/pub-images/tomcat-base-8.5.43# ll

total 9508

drwxr-xr-x 2 root root 4096 Jun 4 04:23 ./

drwxr-xr-x 6 root root 4096 Aug 9 2022 ../

-rw-r--r-- 1 root root 390 Jun 4 04:22 Dockerfile

-rw-r--r-- 1 root root 9717059 Jun 22 2021 apache-tomcat-8.5.43.tar.gz

-rw-r--r-- 1 root root 275 Jun 4 04:23 build-command.sh

root@k8s-master01:~/k8s-data/dockerfile/web/pub-images/tomcat-base-8.5.43# cat Dockerfile

# Tomcat 8.5.43 base image

# Import the custom JDK image

FROM harbor.ik8s.cc/pub-images/jdk-base:v8.212

# Create the tomcat install, data, and log directories

RUN mkdir /apps /data/tomcat/webapps /data/tomcat/logs -pv

# Install tomcat

ADD apache-tomcat-8.5.43.tar.gz /apps

RUN useradd tomcat -u 2050 && ln -sv /apps/apache-tomcat-8.5.43 /apps/tomcat && chown -R tomcat.tomcat /apps /data -R

root@k8s-master01:~/k8s-data/dockerfile/web/pub-images/tomcat-base-8.5.43# cat build-command.sh

#!/bin/bash

#docker build -t harbor.ik8s.cc/pub-images/tomcat-base:v8.5.43 .

#sleep 3

#docker push harbor.ik8s.cc/pub-images/tomcat-base:v8.5.43

nerdctl build -t harbor.ik8s.cc/pub-images/tomcat-base:v8.5.43 .

nerdctl push harbor.ik8s.cc/pub-images/tomcat-base:v8.5.43

root@k8s-master01:~/k8s-data/dockerfile/web/pub-images/tomcat-base-8.5.43#

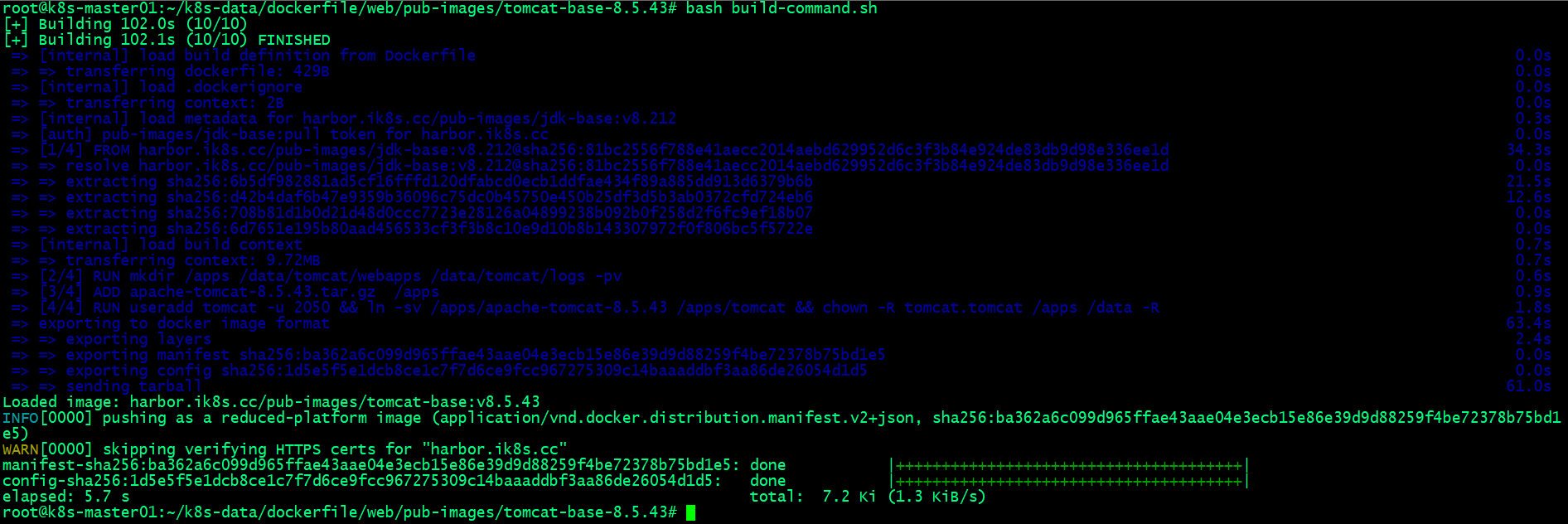

4.2.1 Build the custom tomcat base image

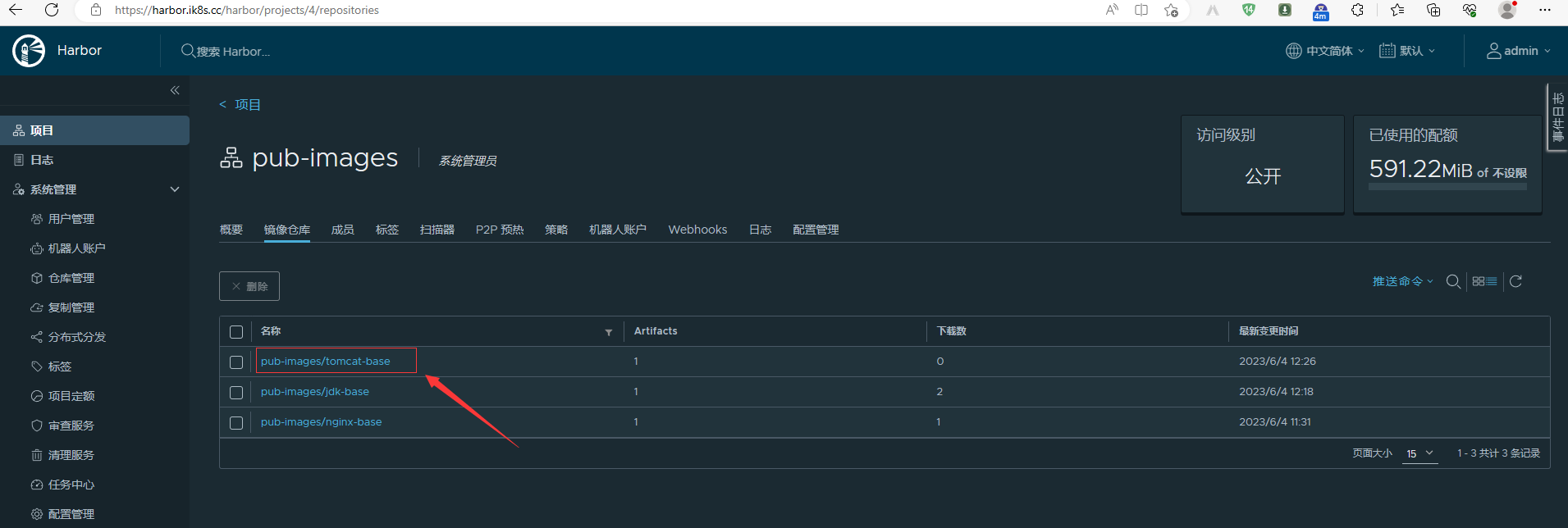

4.2.2 Verify the custom tomcat base image

Check that the custom tomcat base image was pushed to Harbor.

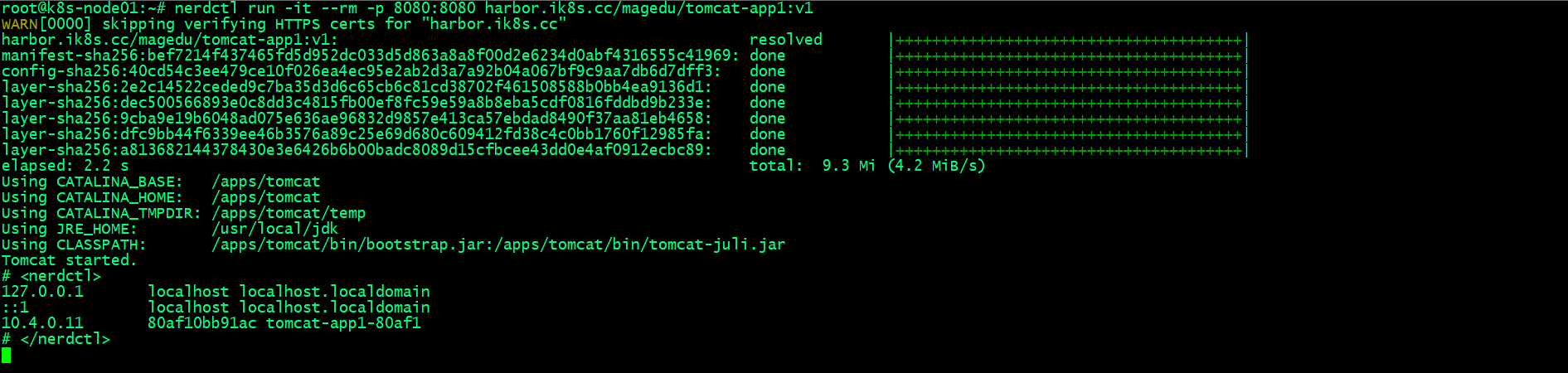

Run the custom tomcat image as a container and check whether tomcat can be accessed.

root@k8s-node01:~# nerdctl run -it --rm -p 8080:8080 harbor.ik8s.cc/pub-images/tomcat-base:v8.5.43 /bin/bash

WARN[0000] skipping verifying HTTPS certs for "harbor.ik8s.cc"

harbor.ik8s.cc/pub-images/tomcat-base:v8.5.43: resolved |++++++++++++++++++++++++++++++++++++++|

manifest-sha256:ba362a6c099d965ffae43aae04e3ecb15e86e39d9d88259f4be72378b75bd1e5: done |++++++++++++++++++++++++++++++++++++++|

config-sha256:1d5e5f5e1dcb8ce1c7f7d6ce9fcc967275309c14baaaddbf3aa86de26054d1d5: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:fa5fdb4dc02a5e79b212be196324b9936efbc850390f86283498a0e01b344ec3: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:00bab63d153828acf58242f1781bd40769cc8b69659f37a2a49918ff3bfca68c: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:6ddb864b9c4e53f3079ec4839ea3bace75b0d5d3daeae0ae910c171646bc4f96: done |++++++++++++++++++++++++++++++++++++++|

elapsed: 2.4 s total: 18.6 M (7.7 MiB/s)

[root@f5752bba588f /]# ll /apps/

total 4

drwxr-xr-x 1 tomcat tomcat 4096 Jun 4 12:25 apache-tomcat-8.5.43

lrwxrwxrwx 1 tomcat tomcat 26 Jun 4 12:25 tomcat -> /apps/apache-tomcat-8.5.43

[root@f5752bba588f /]# cd /apps/tomcat/bin/

[root@f5752bba588f bin]# ls

bootstrap.jar catalina.sh commons-daemon-native.tar.gz configtest.sh digest.sh shutdown.bat startup.sh tool-wrapper.bat version.sh

catalina-tasks.xml ciphers.bat commons-daemon.jar daemon.sh setclasspath.bat shutdown.sh tomcat-juli.jar tool-wrapper.sh

catalina.bat ciphers.sh configtest.bat digest.bat setclasspath.sh startup.bat tomcat-native.tar.gz version.bat

[root@f5752bba588f bin]# ./catalina.sh run

Using CATALINA_BASE: /apps/tomcat

Using CATALINA_HOME: /apps/tomcat

Using CATALINA_TMPDIR: /apps/tomcat/temp

Using JRE_HOME: /usr/local/jdk/jre

Using CLASSPATH: /apps/tomcat/bin/bootstrap.jar:/apps/tomcat/bin/tomcat-juli.jar

04-Jun-2023 12:40:29.845 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Server version: Apache Tomcat/8.5.43

04-Jun-2023 12:40:29.851 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Server built: Jul 4 2019 20:53:15 UTC

04-Jun-2023 12:40:29.851 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Server number: 8.5.43.0

04-Jun-2023 12:40:29.851 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log OS Name: Linux

04-Jun-2023 12:40:29.852 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log OS Version: 5.15.0-72-generic

04-Jun-2023 12:40:29.852 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Architecture: amd64

04-Jun-2023 12:40:29.852 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Java Home: /usr/local/src/jdk1.8.0_212/jre

04-Jun-2023 12:40:29.852 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log JVM Version: 1.8.0_212-b10

04-Jun-2023 12:40:29.853 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log JVM Vendor: Oracle Corporation

04-Jun-2023 12:40:29.853 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log CATALINA_BASE: /apps/apache-tomcat-8.5.43

04-Jun-2023 12:40:29.853 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log CATALINA_HOME: /apps/apache-tomcat-8.5.43

04-Jun-2023 12:40:29.854 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Command line argument: -Djava.util.logging.config.file=/apps/tomcat/conf/logging.properties

04-Jun-2023 12:40:29.854 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Command line argument: -Djava.util.logging.manager=org.apache.juli.ClassLoaderLogManager

04-Jun-2023 12:40:29.855 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Command line argument: -Djdk.tls.ephemeralDHKeySize=2048

04-Jun-2023 12:40:29.855 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Command line argument: -Djava.protocol.handler.pkgs=org.apache.catalina.webresources

04-Jun-2023 12:40:29.855 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Command line argument: -Dorg.apache.catalina.security.SecurityListener.UMASK=0027

04-Jun-2023 12:40:29.856 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Command line argument: -Dignore.endorsed.dirs=

04-Jun-2023 12:40:29.856 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Command line argument: -Dcatalina.base=/apps/tomcat

04-Jun-2023 12:40:29.856 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Command line argument: -Dcatalina.home=/apps/tomcat

04-Jun-2023 12:40:29.857 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Command line argument: -Djava.io.tmpdir=/apps/tomcat/temp

04-Jun-2023 12:40:29.857 INFO [main] org.apache.catalina.core.AprLifecycleListener.lifecycleEvent The APR based Apache Tomcat Native library which allows optimal performance in production environments was not found on the java.library.path: [/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib]

04-Jun-2023 12:40:30.166 INFO [main] org.apache.coyote.AbstractProtocol.init Initializing ProtocolHandler ["http-nio-8080"]

04-Jun-2023 12:40:30.193 INFO [main] org.apache.tomcat.util.net.NioSelectorPool.getSharedSelector Using a shared selector for servlet write/read

04-Jun-2023 12:40:30.232 INFO [main] org.apache.coyote.AbstractProtocol.init Initializing ProtocolHandler ["ajp-nio-8009"]

04-Jun-2023 12:40:30.236 INFO [main] org.apache.tomcat.util.net.NioSelectorPool.getSharedSelector Using a shared selector for servlet write/read

04-Jun-2023 12:40:30.237 INFO [main] org.apache.catalina.startup.Catalina.load Initialization processed in 1262 ms

04-Jun-2023 12:40:30.303 INFO [main] org.apache.catalina.core.StandardService.startInternal Starting service [Catalina]

04-Jun-2023 12:40:30.303 INFO [main] org.apache.catalina.core.StandardEngine.startInternal Starting Servlet Engine: Apache Tomcat/8.5.43

04-Jun-2023 12:40:30.324 INFO [localhost-startStop-1] org.apache.catalina.startup.HostConfig.deployDirectory Deploying web application directory [/apps/apache-tomcat-8.5.43/webapps/docs]

04-Jun-2023 12:40:30.918 INFO [localhost-startStop-1] org.apache.catalina.startup.HostConfig.deployDirectory Deployment of web application directory [/apps/apache-tomcat-8.5.43/webapps/docs] has finished in [593] ms

04-Jun-2023 12:40:30.919 INFO [localhost-startStop-1] org.apache.catalina.startup.HostConfig.deployDirectory Deploying web application directory [/apps/apache-tomcat-8.5.43/webapps/examples]

04-Jun-2023 12:40:31.524 INFO [localhost-startStop-1] org.apache.catalina.startup.HostConfig.deployDirectory Deployment of web application directory [/apps/apache-tomcat-8.5.43/webapps/examples] has finished in [605] ms

04-Jun-2023 12:40:31.524 INFO [localhost-startStop-1] org.apache.catalina.startup.HostConfig.deployDirectory Deploying web application directory [/apps/apache-tomcat-8.5.43/webapps/ROOT]

04-Jun-2023 12:40:31.540 INFO [localhost-startStop-1] org.apache.catalina.startup.HostConfig.deployDirectory Deployment of web application directory [/apps/apache-tomcat-8.5.43/webapps/ROOT] has finished in [16] ms

04-Jun-2023 12:40:31.540 INFO [localhost-startStop-1] org.apache.catalina.startup.HostConfig.deployDirectory Deploying web application directory [/apps/apache-tomcat-8.5.43/webapps/manager]

04-Jun-2023 12:40:31.565 INFO [localhost-startStop-1] org.apache.catalina.startup.HostConfig.deployDirectory Deployment of web application directory [/apps/apache-tomcat-8.5.43/webapps/manager] has finished in [25] ms

04-Jun-2023 12:40:31.565 INFO [localhost-startStop-1] org.apache.catalina.startup.HostConfig.deployDirectory Deploying web application directory [/apps/apache-tomcat-8.5.43/webapps/host-manager]

04-Jun-2023 12:40:31.597 INFO [localhost-startStop-1] org.apache.catalina.startup.HostConfig.deployDirectory Deployment of web application directory [/apps/apache-tomcat-8.5.43/webapps/host-manager] has finished in [32] ms

04-Jun-2023 12:40:31.600 INFO [main] org.apache.coyote.AbstractProtocol.start Starting ProtocolHandler ["http-nio-8080"]

04-Jun-2023 12:40:31.608 INFO [main] org.apache.coyote.AbstractProtocol.start Starting ProtocolHandler ["ajp-nio-8009"]

04-Jun-2023 12:40:31.611 INFO [main] org.apache.catalina.startup.Catalina.start Server startup in 1373 ms

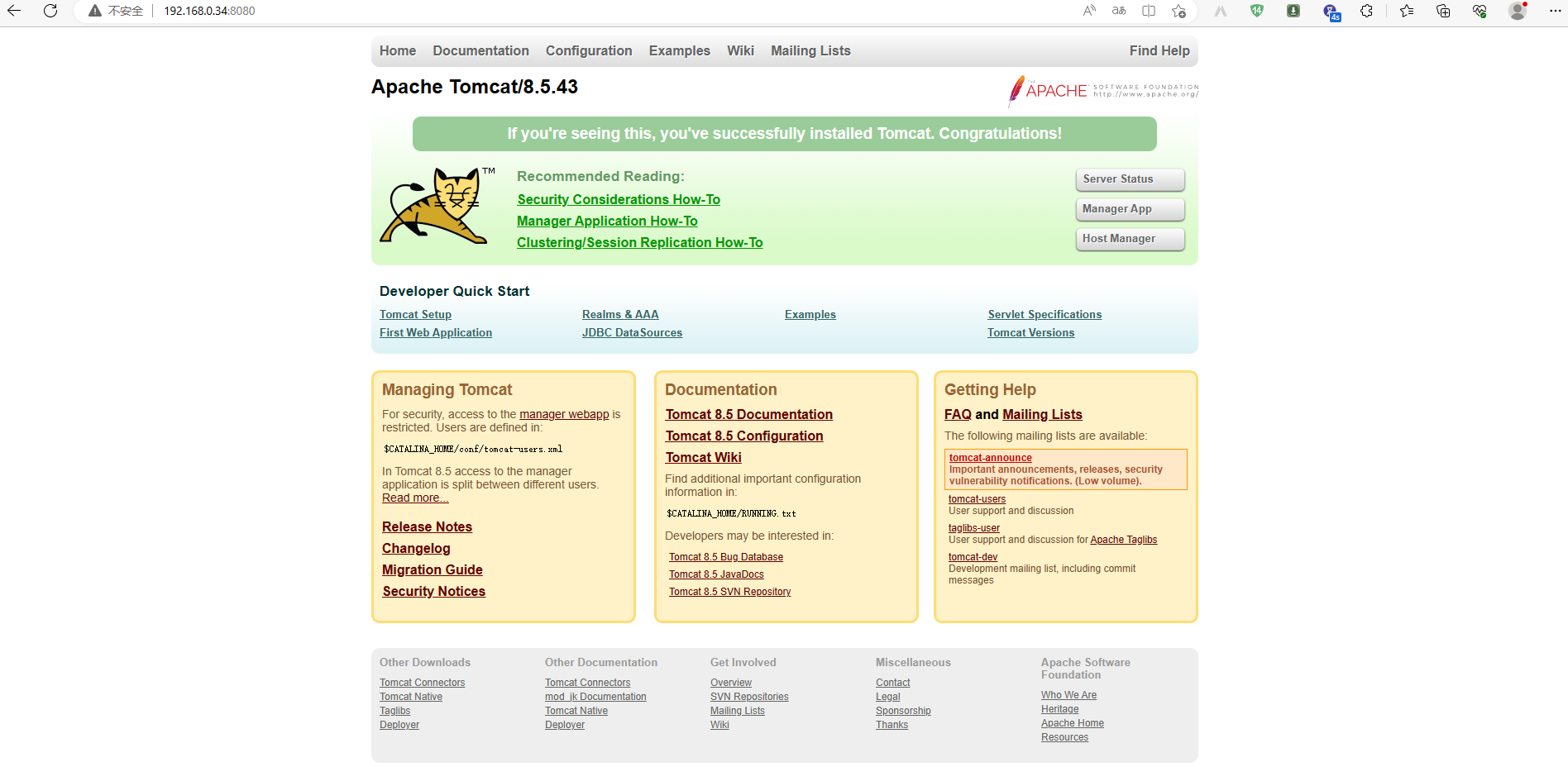

Access tomcat

If tomcat can be accessed normally, the tomcat base image was built correctly.

4.3 Build the tomcat business image from the custom tomcat image

root@k8s-master01:~/k8s-data/dockerfile/web/magedu/tomcat-app1# ll

total 23588

drwxr-xr-x 3 root root 4096 Jun 4 04:58 ./

drwxr-xr-x 11 root root 4096 Aug 9 2022 ../

-rw-r--r-- 1 root root 603 Jun 4 04:57 Dockerfile

-rw-r--r-- 1 root root 144 May 11 06:26 app1.tar.gz

-rwxr-xr-x 1 root root 261 Jun 4 04:58 build-command.sh*

-rwxr-xr-x 1 root root 23611 Jun 22 2021 catalina.sh*

-rw-r--r-- 1 root root 24086235 Jun 22 2021 filebeat-7.5.1-x86_64.rpm

-rw-r--r-- 1 root root 667 Oct 24 2021 filebeat.yml

drwxr-xr-x 2 root root 4096 May 11 06:26 myapp/

-rwxr-xr-x 1 root root 372 Jan 22 2022 run_tomcat.sh*

-rw-r--r-- 1 root root 6462 Oct 10 2021 server.xml

root@k8s-master01:~/k8s-data/dockerfile/web/magedu/tomcat-app1# cat Dockerfile

#tomcat web1

# Import the custom tomcat image

FROM harbor.ik8s.cc/pub-images/tomcat-base:v8.5.43

# Add the startup script and configuration files

ADD catalina.sh /apps/tomcat/bin/catalina.sh

ADD server.xml /apps/tomcat/conf/server.xml

ADD run_tomcat.sh /apps/tomcat/bin/run_tomcat.sh

# Add the business code

ADD app1.tar.gz /data/tomcat/webapps/app1/

#ADD filebeat.yml /etc/filebeat/filebeat.yml

RUN chown -R nginx.nginx /data/ /apps/

#ADD filebeat-7.5.1-x86_64.rpm /tmp/

#RUN cd /tmp && yum localinstall -y filebeat-7.5.1-amd64.deb

# Expose ports

EXPOSE 8080 8443

# Start tomcat

CMD ["/apps/tomcat/bin/run_tomcat.sh"]

root@k8s-master01:~/k8s-data/dockerfile/web/magedu/tomcat-app1# cat build-command.sh

#!/bin/bash

TAG=$1

#docker build -t harbor.ik8s.cc/magedu/tomcat-app1:${TAG} .

#sleep 3

#docker push harbor.ik8s.cc/magedu/tomcat-app1:${TAG}

nerdctl build -t harbor.ik8s.cc/magedu/tomcat-app1:${TAG} .

nerdctl push harbor.ik8s.cc/magedu/tomcat-app1:${TAG}

root@k8s-master01:~/k8s-data/dockerfile/web/magedu/tomcat-app1#

root@k8s-master01:~/k8s-data/dockerfile/web/magedu/tomcat-app1# cat run_tomcat.sh

#!/bin/bash

#echo "nameserver 223.6.6.6" > /etc/resolv.conf

#echo "192.168.7.248 k8s-vip.example.com" >> /etc/hosts

#/usr/share/filebeat/bin/filebeat -e -c /etc/filebeat/filebeat.yml -path.home /usr/share/filebeat -path.config /etc/filebeat -path.data /var/lib/filebeat -path.logs /var/log/filebeat &

su - nginx -c "/apps/tomcat/bin/catalina.sh start"

tail -f /etc/hosts

root@k8s-master01:~/k8s-data/dockerfile/web/magedu/tomcat-app1#

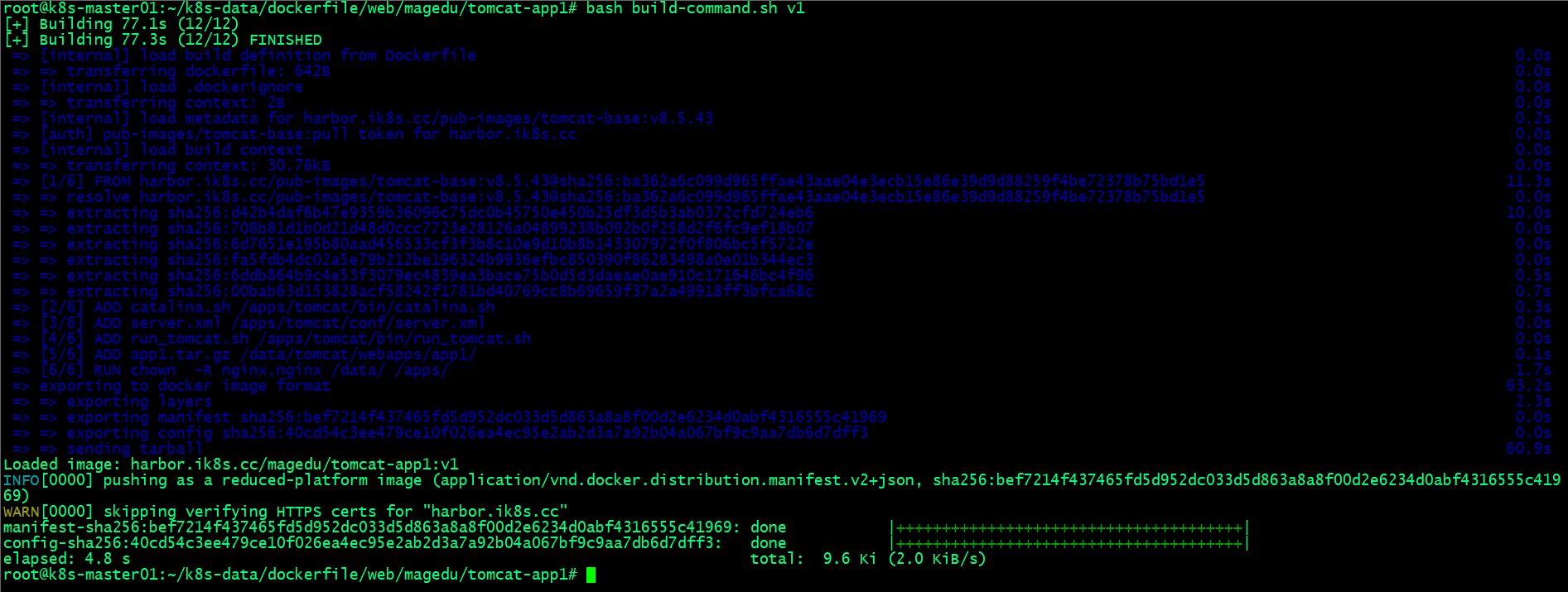

4.3.1 Build the custom tomcat business image

4.3.2 Verify the custom tomcat business image

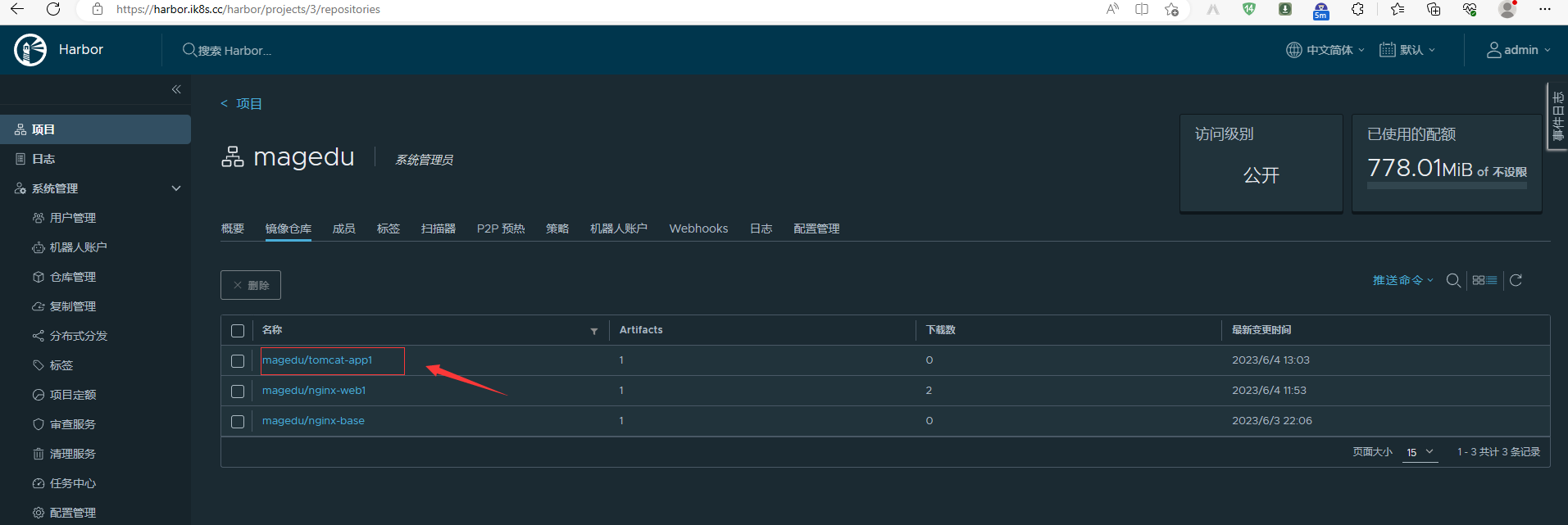

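Check that the custom tomcat business image was pushed to Harbor.

Run the custom tomcat business image as a container and check whether the business application can be accessed.

Access the application

If app1 can be accessed normally, the business container image was built correctly.

5. Running tomcat on Kubernetes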

root@k8s-master01:~/k8s-data/yaml/namespaces# ls

magedu-ns.yaml

root@k8s-master01:~/k8s-data/yaml/namespaces# cat magedu-ns.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: magedu

root@k8s-master01:~/k8s-data/yaml/namespaces# cd ../magedu/tomcat-app1/

root@k8s-master01:~/k8s-data/yaml/magedu/tomcat-app1# ll

total 16

drwxr-xr-x 2 root root 4096 Jun 4 06:16 ./

drwxr-xr-x 12 root root 4096 Aug 9 2022 ../

-rw-r--r-- 1 root root 596 Jun 22 2021 hpa.yaml

-rw-r--r-- 1 root root 1849 Jun 4 05:18 tomcat-app1.yaml

root@k8s-master01:~/k8s-data/yaml/magedu/tomcat-app1# cat hpa.yaml

#apiVersion: autoscaling/v2beta1
apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  namespace: magedu
  name: magedu-tomcat-app1-podautoscaler
  labels:
    app: magedu-tomcat-app1
    version: v2beta1
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    #apiVersion: extensions/v1beta1
    kind: Deployment
    name: magedu-tomcat-app1-deployment
  minReplicas: 2
  maxReplicas: 20
  targetCPUUtilizationPercentage: 60
  #metrics:
  #- type: Resource
  #  resource:
  #    name: cpu
  #    targetAverageUtilization: 60
  #- type: Resource
  #  resource:
  #    name: memory

root@k8s-master01:~/k8s-data/yaml/magedu/tomcat-app1# cat tomcat-app1.yaml

kind: Deployment
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
metadata:
  labels:
    app: magedu-tomcat-app1-deployment-label
  name: magedu-tomcat-app1-deployment
  namespace: magedu
spec:
  replicas: 1
  selector:
    matchLabels:
      app: magedu-tomcat-app1-selector
  template:
    metadata:
      labels:
        app: magedu-tomcat-app1-selector
    spec:
      containers:
      - name: magedu-tomcat-app1-container
        image: harbor.ik8s.cc/magedu/tomcat-app1:v1
        #command: ["/apps/tomcat/bin/run_tomcat.sh"]
        imagePullPolicy: IfNotPresent
        #imagePullPolicy: Always
        ports:
        - containerPort: 8080
          protocol: TCP
          name: http
        env:
        - name: "password"
          value: "123456"
        - name: "age"
          value: "18"
        #resources:
        #  limits:
        #    cpu: 1
        #    memory: "512Mi"
        #  requests:
        #    cpu: 500m
        #    memory: "512Mi"
        volumeMounts:
        - name: magedu-images
          mountPath: /usr/local/nginx/html/webapp/images
          readOnly: false
        - name: magedu-static
          mountPath: /usr/local/nginx/html/webapp/static
          readOnly: false
      volumes:
      - name: magedu-images
        nfs:
          server: 192.168.0.42
          path: /data/k8sdata/magedu/images
      - name: magedu-static
        nfs:
          server: 192.168.0.42
          path: /data/k8sdata/magedu/static
      # nodeSelector:
      #   project: magedu
      #   app: tomcat
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: magedu-tomcat-app1-service-label
  name: magedu-tomcat-app1-service
  namespace: magedu
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 8080
    nodePort: 30092
  selector:
    app: magedu-tomcat-app1-selector

root@k8s-master01:~/k8s-data/yaml/magedu/tomcat-app1#

5.1 Prepare the backend storage directories

root@harbor:~# mkdir -pv /data/k8sdata/magedu/images

mkdir: created directory '/data/k8sdata/magedu'

mkdir: created directory '/data/k8sdata/magedu/images'

root@harbor:~# mkdir -pv /data/k8sdata/magedu/static

mkdir: created directory '/data/k8sdata/magedu/static'

root@harbor:~# cat /etc/exports

# /etc/exports: the access control list for filesystems which may be exported

# to NFS clients. See exports(5).

#

# Example for NFSv2 and NFSv3:

# /srv/homes hostname1(rw,sync,no_subtree_check) hostname2(ro,sync,no_subtree_check)

#

# Example for NFSv4:

# /srv/nfs4 gss/krb5i(rw,sync,fsid=0,crossmnt,no_subtree_check)

# /srv/nfs4/homes gss/krb5i(rw,sync,no_subtree_check)

#

/data/k8sdata/kuboard *(rw,no_root_squash)

/data/volumes *(rw,no_root_squash)

/pod-vol *(rw,no_root_squash)

/data/k8sdata/myserver *(rw,no_root_squash)

/data/k8sdata/mysite *(rw,no_root_squash)

/data/k8sdata/magedu/images *(rw,no_root_squash)

/data/k8sdata/magedu/static *(rw,no_root_squash)

root@harbor:~# exportfs -av

exportfs: /etc/exports [1]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/kuboard".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [2]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/volumes".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [3]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/pod-vol".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [4]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/myserver".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [5]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/mysite".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [7]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/images".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [8]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/static".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exporting *:/data/k8sdata/magedu/static

exporting *:/data/k8sdata/magedu/images

exporting *:/data/k8sdata/mysite

exporting *:/data/k8sdata/myserver

exporting *:/pod-vol

exporting *:/data/volumes

exporting *:/data/k8sdata/kuboard

root@harbor:~#

5.2 Deploy the tomcat business application to Kubernetes

root@k8s-master01:~/k8s-data/yaml# cd namespaces/

root@k8s-master01:~/k8s-data/yaml/namespaces# ls

magedu-ns.yaml

root@k8s-master01:~/k8s-data/yaml/namespaces# kubectl apply -f magedu-ns.yaml

namespace/magedu created

root@k8s-master01:~/k8s-data/yaml/namespaces# ls

magedu-ns.yaml

root@k8s-master01:~/k8s-data/yaml/namespaces# cd ../magedu/tomcat-app1/

root@k8s-master01:~/k8s-data/yaml/magedu/tomcat-app1# ls

hpa.yaml tomcat-app1.yaml

root@k8s-master01:~/k8s-data/yaml/magedu/tomcat-app1# kubectl apply -f .

horizontalpodautoscaler.autoscaling/magedu-tomcat-app1-podautoscaler created

deployment.apps/magedu-tomcat-app1-deployment created

service/magedu-tomcat-app1-service created

root@k8s-master01:~/k8s-data/yaml/magedu/tomcat-app1#

5.3 Verify that the tomcat pods are Running and that the service is reachable

root@k8s-master01:~/k8s-data/yaml/magedu/tomcat-app1# kubectl get pods -n magedu

NAME READY STATUS RESTARTS AGE

magedu-tomcat-app1-deployment-7754c8549c-prglk 1/1 Running 0 2m2s

magedu-tomcat-app1-deployment-7754c8549c-xmg9l 1/1 Running 0 2m17s

root@k8s-master01:~/k8s-data/yaml/magedu/tomcat-app1# kubectl get svc -n magedu

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

magedu-tomcat-app1-service NodePort 10.100.129.23 <none> 80:30092/TCP 2m23s

root@k8s-master01:~/k8s-data/yaml/magedu/tomcat-app1#

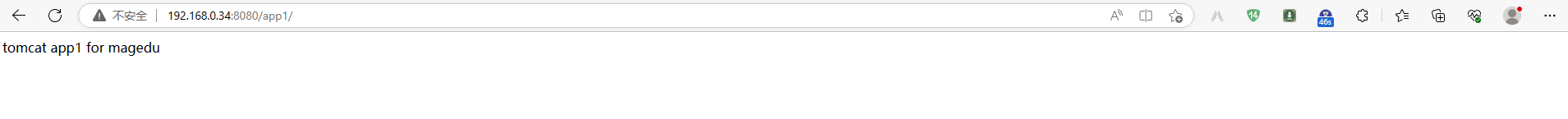

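Access port 30092 on a Kubernetes cluster node and check whether the tomcat service is reachable.

If the tomcat service can be reached through a cluster node, tomcat has been deployed to Kubernetes successfully.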
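Because a NodePort is opened on every node, the same check can be repeated against each worker. The sketch below only prints the URLs to probe. The node IPs are the ones listed in the HAProxy configuration later in this article, the `/app1/` path is an assumption based on the application directory name, and `nodeport_urls` is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: print the URL of a NodePort service on every worker node.
# Node IPs are taken from the HAProxy configuration in this article.
NODES="192.168.0.34 192.168.0.35 192.168.0.36"

nodeport_urls() {
  port="$1"
  path="$2"
  for ip in $NODES; do
    echo "http://${ip}:${port}${path}"
  done
}

nodeport_urls 30092 /app1/
# Each printed URL could then be probed, for example with: curl -sI <url>
```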

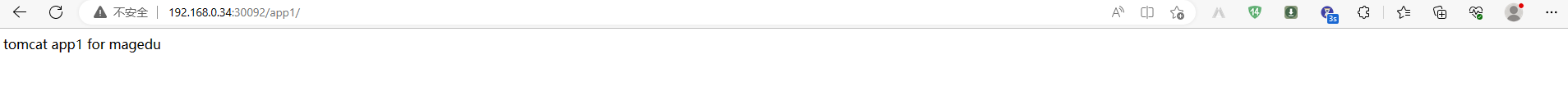

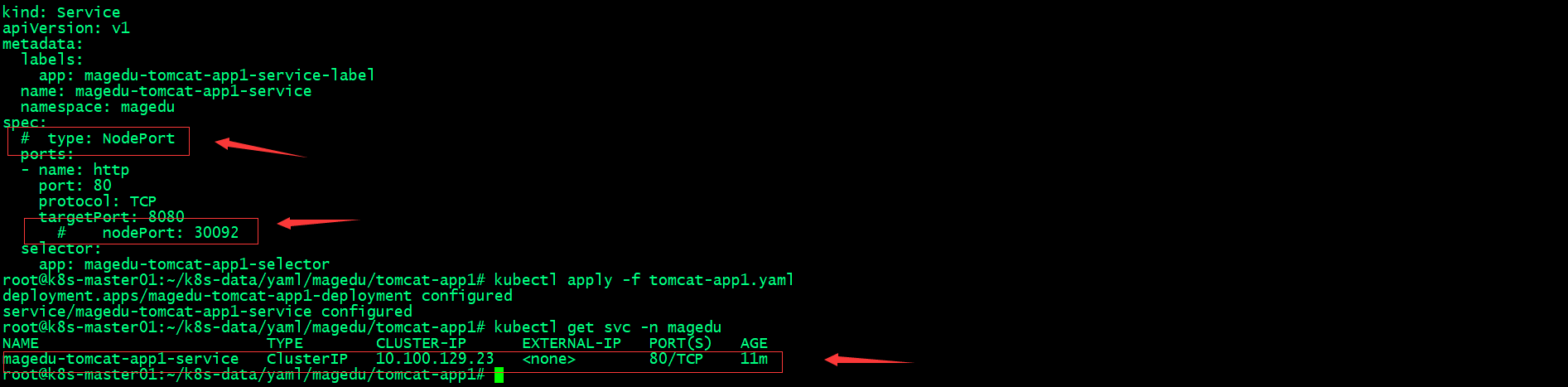

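5.4 Change the tomcat Service type to ClusterIP (the author calls this a headless service, but the output in 6.1 shows type ClusterIP with a cluster IP assigned) and re-apply the manifest, so that the backend tomcat service is reachable only from inside the Kubernetes cluster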
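The article does not show the edited manifest. A minimal sketch of the change, assuming the goal is the ClusterIP result visible in the later `kubectl get svc` output: replace the Service type and drop the `nodePort` line. The helper name `to_cluster_ip` is hypothetical, and the input below is only the `spec` part of the Service from `tomcat-app1.yaml`.

```shell
#!/bin/sh
# Sketch: turn the NodePort Service spec into a ClusterIP one by text rewriting.
# to_cluster_ip is a hypothetical helper; it reads a manifest on stdin.
to_cluster_ip() {
  sed -e 's/type: NodePort/type: ClusterIP/' -e '/nodePort:/d'
}

to_cluster_ip <<'EOF'
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 8080
    nodePort: 30092
  selector:
    app: magedu-tomcat-app1-selector
EOF
```

A truly headless Service would instead set `clusterIP: None`. As far as I know that field cannot be changed on an existing Service, so switching to headless would likely mean deleting and recreating the Service rather than re-applying it.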

6. Running nginx on Kubernetes to separate static and dynamic content with nginx + tomcat

root@k8s-master01:~/k8s-data/yaml/magedu/nginx# cat nginx.yaml

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    app: magedu-nginx-deployment-label
  name: magedu-nginx-deployment
  namespace: magedu
spec:
  replicas: 1
  selector:
    matchLabels:
      app: magedu-nginx-selector
  template:
    metadata:
      labels:
        app: magedu-nginx-selector
    spec:
      containers:
      - name: magedu-nginx-container
        image: harbor.ik8s.cc/magedu/nginx-web1:v1
        #command: ["/apps/tomcat/bin/run_tomcat.sh"]
        #imagePullPolicy: IfNotPresent
        imagePullPolicy: Always
        ports:
        - containerPort: 80
          protocol: TCP
          name: http
        - containerPort: 443
          protocol: TCP
          name: https
        env:
        - name: "password"
          value: "123456"
        - name: "age"
          value: "20"
        resources:
          limits:
            cpu: 500m
            memory: 512Mi
          requests:
            cpu: 500m
            memory: 256Mi
        volumeMounts:
        - name: magedu-images
          mountPath: /usr/local/nginx/html/webapp/images
          readOnly: false
        - name: magedu-static
          mountPath: /usr/local/nginx/html/webapp/static
          readOnly: false
      volumes:
      - name: magedu-images
        nfs:
          server: 192.168.0.42
          path: /data/k8sdata/magedu/images
      - name: magedu-static
        nfs:
          server: 192.168.0.42
          path: /data/k8sdata/magedu/static
      #nodeSelector:
      #  group: magedu
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: magedu-nginx-service-label
  name: magedu-nginx-service
  namespace: magedu
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 80
    nodePort: 30090
  - name: https
    port: 443
    protocol: TCP
    targetPort: 443
    nodePort: 30091
  selector:
    app: magedu-nginx-selector

root@k8s-master01:~/k8s-data/yaml/magedu/nginx#

6.1 Deploy the nginx business application to Kubernetes

root@k8s-master01:~/k8s-data/yaml/magedu/nginx# kubectl apply -f .

deployment.apps/magedu-nginx-deployment created

service/magedu-nginx-service created

root@k8s-master01:~/k8s-data/yaml/magedu/nginx# kubectl get pod -n magedu

NAME READY STATUS RESTARTS AGE

magedu-nginx-deployment-5589bbf4bc-6gd2w 1/1 Running 0 14s

magedu-tomcat-app1-deployment-7754c8549c-c7rtb 1/1 Running 0 8m7s

magedu-tomcat-app1-deployment-7754c8549c-prglk 1/1 Running 0 19m

root@k8s-master01:~/k8s-data/yaml/magedu/nginx# kubectl get svc -n magedu

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

magedu-nginx-service NodePort 10.100.94.118 <none> 80:30090/TCP,443:30091/TCP 24s

magedu-tomcat-app1-service ClusterIP 10.100.129.23 <none> 80/TCP 19m

root@k8s-master01:~/k8s-data/yaml/magedu/nginx#

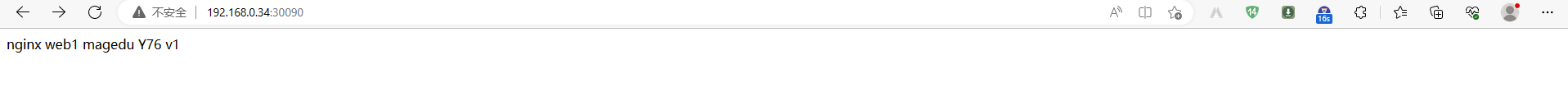

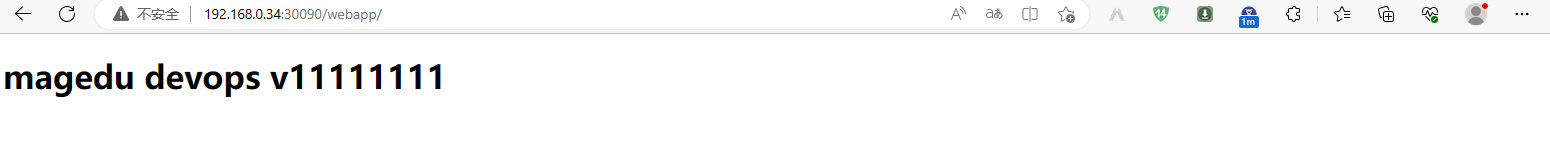

6.2 Verify that the nginx service is reachable

If nginx can be reached on port 30090 of a cluster node, the nginx service has been deployed to Kubernetes successfully.

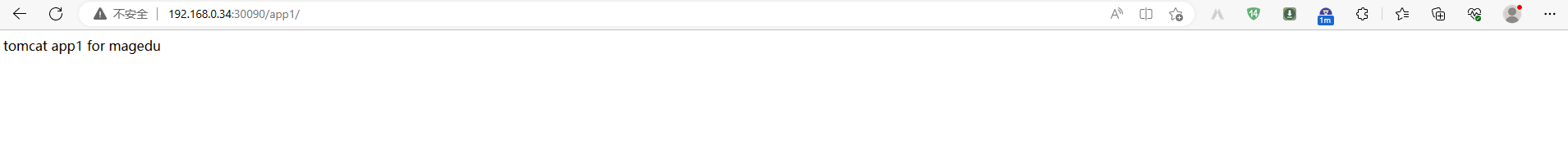

6.3 Access tomcat through nginx and check whether nginx can proxy it

If the backend tomcat service can be reached through nginx, nginx is proxying tomcat correctly.
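The split between static and dynamic requests comes from the three prefix `location` blocks in the nginx.conf shown in section 3.3. A rough sketch of that decision follows. It models only those three prefix locations (not the exact-match `/50x.html` block), and `route_for` is a hypothetical helper, not part of nginx.

```shell
#!/bin/sh
# Sketch: mimic which prefix location in the article's nginx.conf handles a path.
#   location /app1   -> proxy_pass to the tomcat_webserver upstream
#   location /webapp -> static files under html/webapp
#   location /       -> static files under html
route_for() {
  case "$1" in
    /app1*)   echo "tomcat" ;;
    /webapp*) echo "static" ;;
    *)        echo "static" ;;
  esac
}

route_for /app1/index.jsp
route_for /webapp/images/1.jpg
route_for /index.html
```

Only paths beginning with `/app1` leave the nginx pod. Everything else is answered from the local `html` directory, including the NFS-backed `webapp/images` and `webapp/static` mounts.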

7. Proxying the nginx service on the load balancer

root@k8s-ha01:~# cat /etc/haproxy/haproxy.cfg

global

log /dev/log local0

log /dev/log local1 notice

chroot /var/lib/haproxy

stats socket /run/haproxy/admin.sock mode 660 level admin expose-fd listeners

stats timeout 30s

user haproxy

group haproxy

daemon

# Default SSL material locations

ca-base /etc/ssl/certs

crt-base /etc/ssl/private

# See: https://ssl-config.mozilla.org/#server=haproxy&server-version=2.0.3&config=intermediate

ssl-default-bind-ciphers ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:DHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES256-GCM-SHA384

ssl-default-bind-ciphersuites TLS_AES_128_GCM_SHA256:TLS_AES_256_GCM_SHA384:TLS_CHACHA20_POLY1305_SHA256

ssl-default-bind-options ssl-min-ver TLSv1.2 no-tls-tickets

defaults

log global

mode http

option httplog

option dontlognull

timeout connect 5000

timeout client 50000

timeout server 50000

errorfile 400 /etc/haproxy/errors/400.http

errorfile 403 /etc/haproxy/errors/403.http

errorfile 408 /etc/haproxy/errors/408.http

errorfile 500 /etc/haproxy/errors/500.http

errorfile 502 /etc/haproxy/errors/502.http

errorfile 503 /etc/haproxy/errors/503.http

errorfile 504 /etc/haproxy/errors/504.http

listen k8s_apiserver_6443

bind 192.168.0.111:6443

mode tcp

#balance leastconn

server k8s-master01 192.168.0.31:6443 check inter 2000 fall 3 rise 5

server k8s-master02 192.168.0.32:6443 check inter 2000 fall 3 rise 5

server k8s-master03 192.168.0.33:6443 check inter 2000 fall 3 rise 5

listen nginx-svc-80

bind 192.168.0.111:80

mode tcp

server k8s-node01 192.168.0.34:30090 check inter 2000 fall 3 rise 5

server k8s-node02 192.168.0.35:30090 check inter 2000 fall 3 rise 5

server k8s-node03 192.168.0.36:30090 check inter 2000 fall 3 rise 5

root@k8s-ha01:~# systemctl restart haproxy

root@k8s-ha01:~#

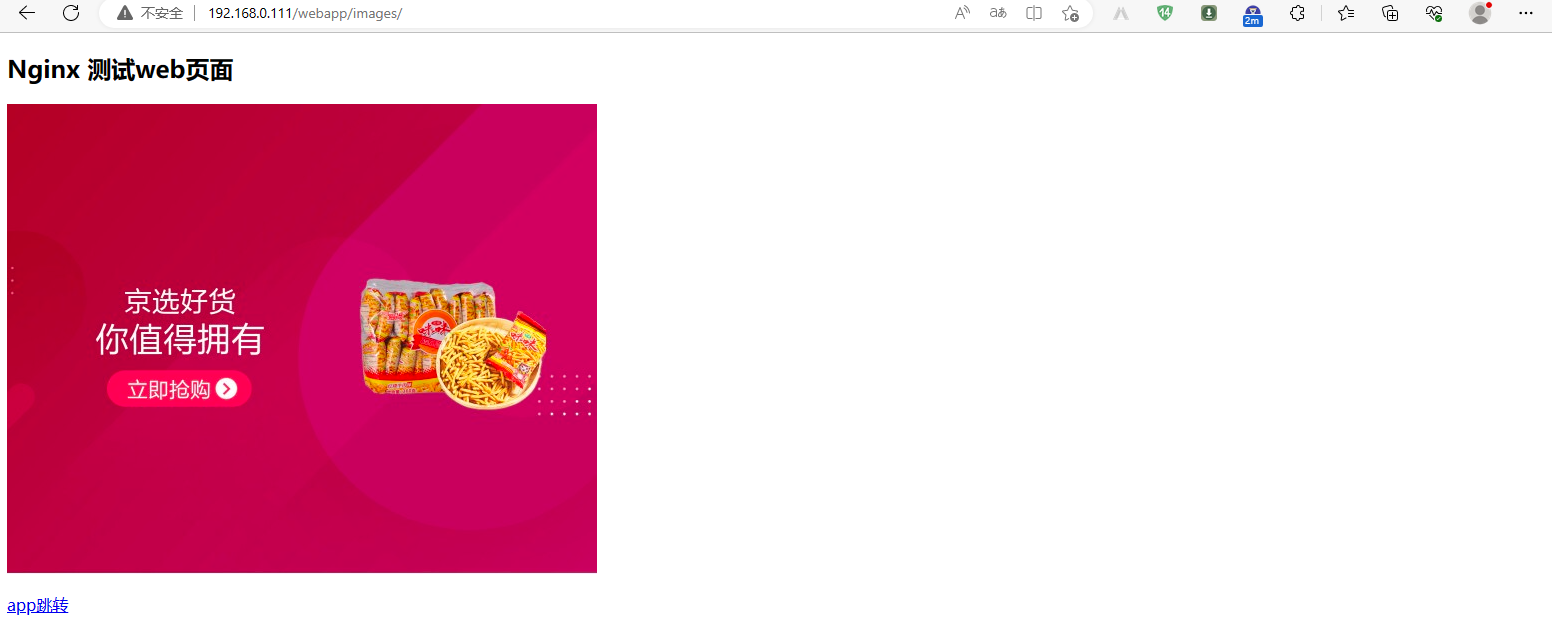

8. Verify that the nginx service is reachable through the VIP

9. Upload static resources to the storage server and check whether nginx can read them

root@k8s-master01:~/ubuntu/html# scp -rp * 192.168.0.42:/data/k8sdata/magedu/images/

root@192.168.0.42's password:

1.jpg 100% 40KB 8.6MB/s 00:00

index.html 100% 277 282.5KB/s 00:00

root@k8s-master01:~/ubuntu/html#

Check that the resources were uploaded to the corresponding directory on the storage server.

root@harbor:~# ll /data/k8sdata/magedu/images/

total 16

drwxr-xr-x 3 root root 4096 Jun 4 07:56 ./

drwxr-xr-x 4 root root 4096 Jun 4 06:32 ../

drwxr-xr-x 2 root root 4096 May 31 18:37 images/

-rw-r--r-- 1 root root 277 Aug 5 2022 index.html

root@harbor:~#

Access the nginx service and check whether the uploaded resources can be read.
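To know which URL to request, it helps to map a file path on the NFS server to the path nginx serves it under. The sketch below follows the `volumes` and `volumeMounts` in nginx.yaml together with the `location /webapp` block. `nfs_to_url` is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: translate a file path on the NFS server into the URL path nginx serves it under.
# Mapping taken from nginx.yaml:
#   /data/k8sdata/magedu/images -> /usr/local/nginx/html/webapp/images
#   /data/k8sdata/magedu/static -> /usr/local/nginx/html/webapp/static
NFS_ROOT="/data/k8sdata/magedu"
URL_PREFIX="/webapp"

nfs_to_url() {
  case "$1" in
    "$NFS_ROOT"/images/*|"$NFS_ROOT"/static/*)
      rel="${1#$NFS_ROOT}"          # strip the export root, keep /images/... or /static/...
      echo "${URL_PREFIX}${rel}" ;;
    *)
      echo "not under an exported directory: $1" >&2
      return 1 ;;
  esac
}

nfs_to_url /data/k8sdata/magedu/images/index.html
```

Given the directory listing above, where the uploaded picture sits in an `images/` subdirectory, `1.jpg` would map to `/webapp/images/images/1.jpg` under the same rule.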
