LLMs and plagiarism: a case study

source link: https://lcamtuf.substack.com/p/large-language-models-and-plagiarism

A while back on this blog, I expressed a somewhat unpopular sentiment about large language models (LLMs) such as ChatGPT or Google Bard:

“The technology feels magical and disruptive, but we felt the same way about the first chatbot — ELIZA — and about all the Prolog-based expert systems that came on its heels. This isn’t to say that ChatGPT is a dud; it’s just that the shortcomings of magical technologies take some time to snap into view.

It remains to be seen if their humanlike behavior is merely a parlor trick, a straightforward if unexpected consequence of the vastness of the internet; or if they exhibit some yet-unknown emergent property that sets us on a path toward true AGI.”

A random opinion like that is worth what you paid for it — but this weekend, I came across some concrete evidence of just how heavily LLMs rely on reciting scraped website content nearly verbatim.

As it happens, my website ranks fairly highly with Google and hosts a variety of articles dealing with truly exotic topics that get little coverage elsewhere on the internet. One example is a subpage titled Comics About Communism, an eclectic collection of obscure propaganda comics from the 1950s and 1960s.

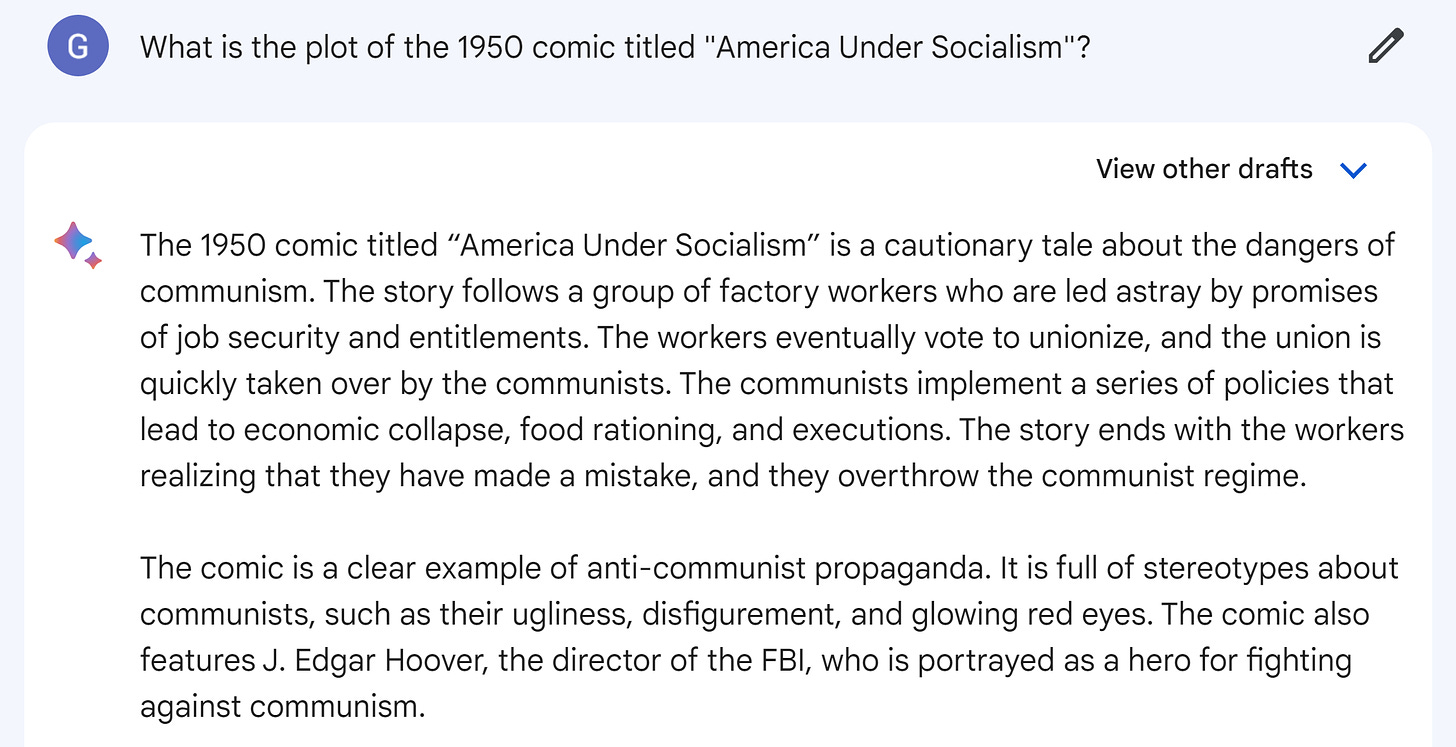

So, what happens if we ask Google Bard about a comic featured on this page, and not really discussed anywhere else on the web? Well, here’s the usual result:

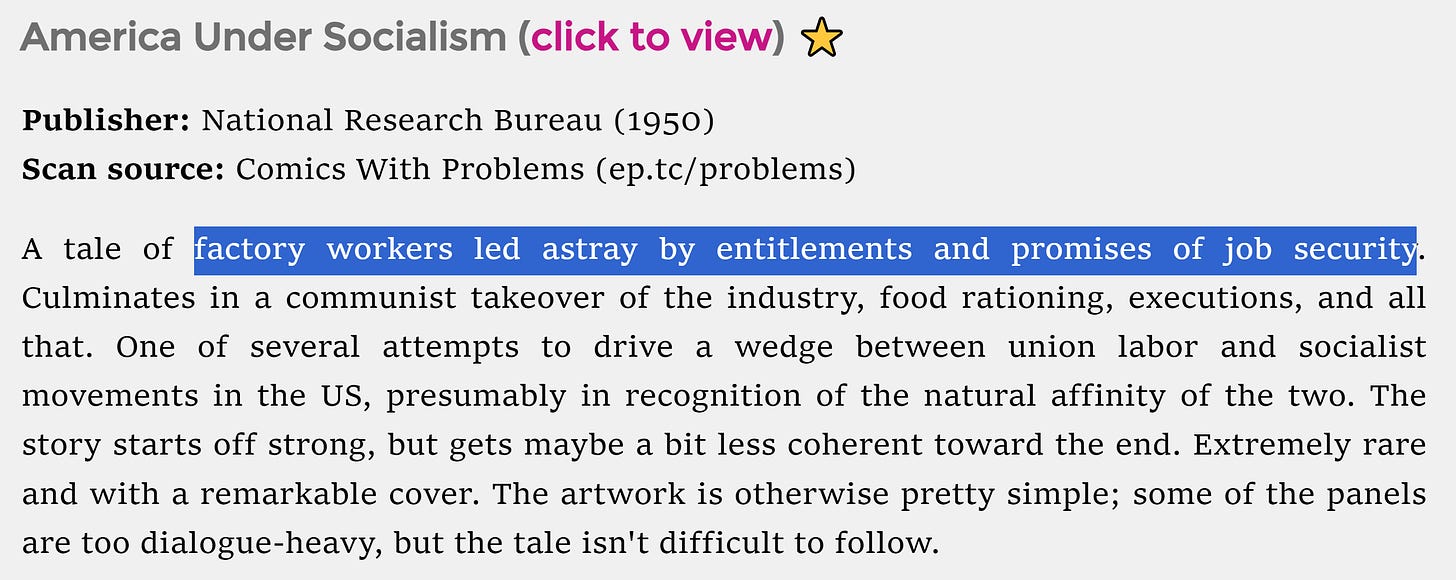

At a glance, this is an impressive summary. But it sounds weirdly familiar, too. Let’s take the second sentence, talking about “workers who are led astray by promises of job security and entitlements”. Compare this to the text on my webpage:

What about the fourth sentence, talking about food rationing and executions? This phrasing is weirdly similar to my writing too:

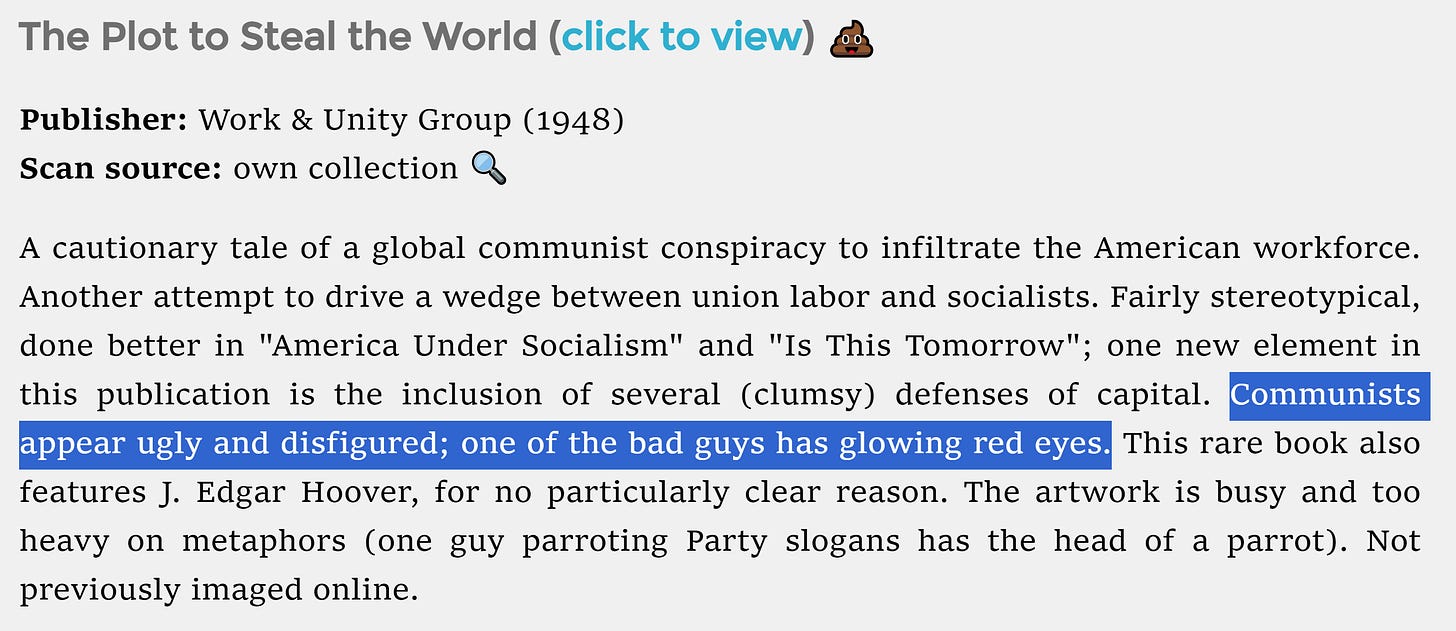

But wait, there’s more! Let’s have a look at the second paragraph, talking about ugly, disfigured characters with glowing red eyes. I remember the comic, and I know the description is not accurate. It is lifted from my website too, but it belongs to the description of an unrelated book:

The same goes for the mention of J. Edgar Hoover in Bard’s response, although the bot invents some new meaning for it and doesn’t copy as much of the verbiage:

Partly plagiarizing the phrasing of four sentences might not be a big deal if the rest offers novel insights. And if you remove the plagiarized sentences, Bard still seems to be doing some analytical work:

“The workers eventually vote to unionize, and the union is quickly taken over by communists. […] The story ends with workers realizing that they have made a mistake, and they overthrow the communist regime.”

Except… the entirety of this new text is made up and factually incorrect. Don’t take my word for it — you can read the comic here. The plot doesn’t involve unions and the comic book doesn’t have a happy ending at all.

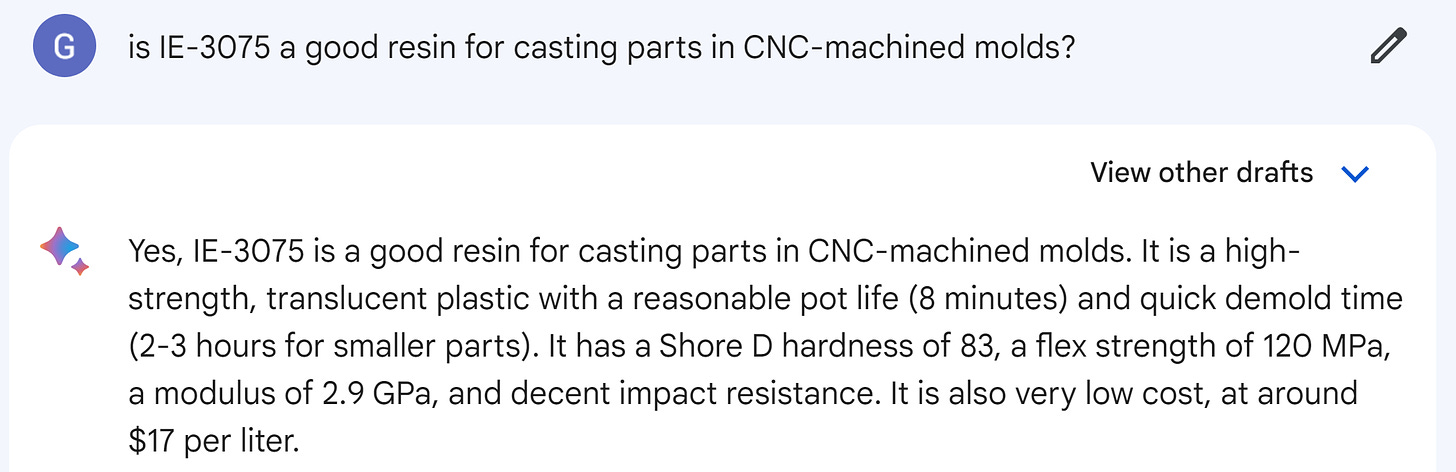

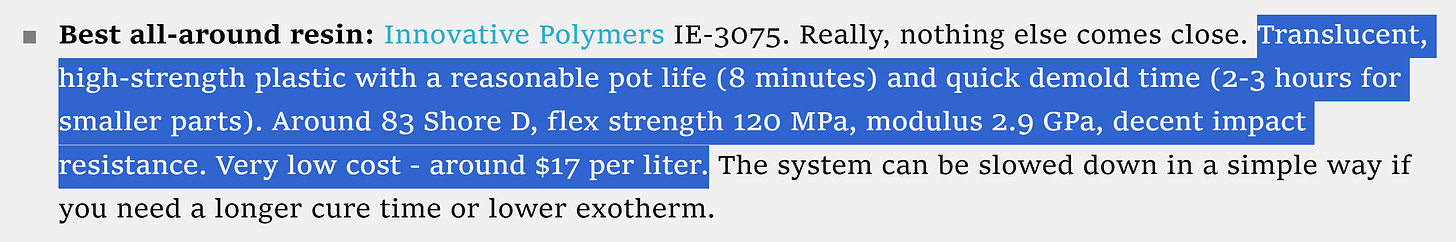

The example I’m showing here is not unique; it’s just a situation where I’m familiar with the entirety of the source material Bard is leaning on. I also have a webpage titled Guerrilla Guide to CNC and Resin Casting. Here’s what happens if you ask Bard about some of the topics covered there:

Now, why does this sound familiar? Oh right, let’s consult my website:

Bard didn’t merely copy facts when composing its answer; it lifted a good chunk of the text wholesale — wording, parentheses, non-US units, and all.
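
For readers who want to put a rough number on this kind of reuse, one simple approach is to count how many word n-grams of a response also appear in a candidate source. The snippet below is a minimal sketch of that idea, not the method used for the comparisons in this post; the function names and the choice of 4-word windows are assumptions made for illustration.

```python
import re


def word_ngrams(text, n):
    """Return the set of n-word sequences in text, ignoring case and punctuation."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def overlap_ratio(candidate, source, n=4):
    """Fraction of the candidate's n-grams that also occur in the source.

    Hypothetical helper: n=4 is an arbitrary window size, not a calibrated threshold.
    """
    candidate_grams = word_ngrams(candidate, n)
    if not candidate_grams:
        # Candidate is shorter than n words, so there is nothing to compare.
        return 0.0
    shared = candidate_grams & word_ngrams(source, n)
    return len(shared) / len(candidate_grams)
```

A ratio close to 1.0 suggests the candidate mostly repeats the source word for word, while a light paraphrase usually scores much lower. It says nothing about copied facts or ideas, only about shared phrasing.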

My goal here isn’t to downplay the utility of LLMs; I think they are powerful tools that will reshape the way we interact with computers and perform a variety of tasks. But I think we don’t grasp the vastness of the internet and don’t realize how often LLMs can rely on simply copying other people’s work, with some made-up padding and style transfer tricks thrown in here and there.

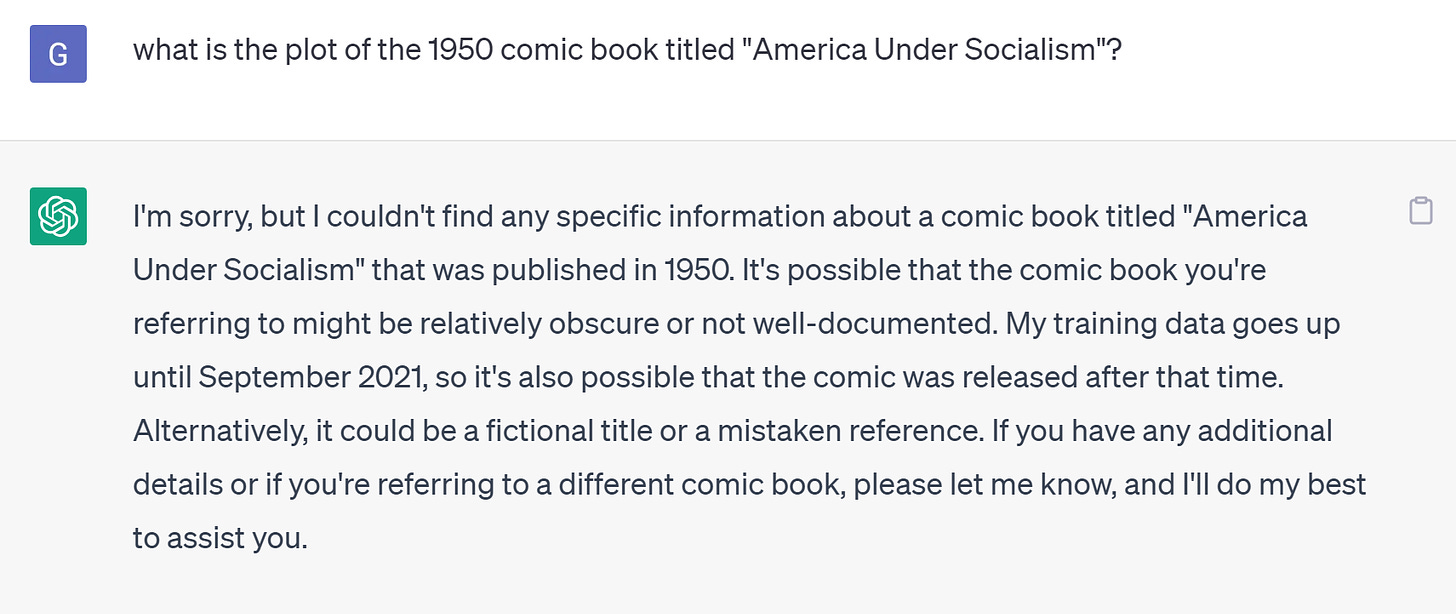

As a content creator, I’m not excited about this. I opted my website out of Common Crawl, which is probably why ChatGPT can’t tell you much about vintage propaganda comics or about casting polyurethane resins into CNC-machined molds:

That said, Google doesn’t extend the same courtesy to me: if I want to stay on the open internet, I gotta “consent” to Bard.
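
For context, one common way to opt a site out of Common Crawl is a `robots.txt` rule aimed at its crawler, which identifies itself as `CCBot`. The post doesn't say which mechanism was used on my site, so treat the following as a sketch under that assumption: the rules in `ROBOTS_TXT`, the `is_allowed` helper, and the `example.com` URL are all illustrative. It uses Python's standard `urllib.robotparser` to check what such a file permits.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block Common Crawl's CCBot, allow everyone else.
ROBOTS_TXT = """\
User-agent: CCBot
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(robots_txt, user_agent, url):
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    page = "https://example.com/some-article"  # placeholder URL
    print("CCBot allowed:", is_allowed(ROBOTS_TXT, "CCBot", page))
    print("Other crawler allowed:", is_allowed(ROBOTS_TXT, "SomeOtherBot", page))
```

Keep in mind that `robots.txt` is only a request: it works when a crawler chooses to honor it.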
