Decisions: Multi-Tenancy, Purpose-Built Operating System

source link: https://devm.io/kubernetes/kubernetes-multi-tenancy-os

Kubernetes is a container orchestrator that has rapidly gained popularity among developers and IT operations teams alike. While it started out as a tool to manage containerized applications, it has evolved into much more than that — Kubernetes is a microcosmos and it is “eating the world.”

A few months ago, I was honored to receive an invitation to speak at DevOpsCon, Munich. I delivered a session on architectural decisions for companies building a Kubernetes platform. Having spent time with strategic companies adopting Kubernetes, I've been able to spot trends, and I am keen to share these learnings with anyone building a Kubernetes platform. I had many interesting ‘corridor’ discussions after my session (it seems I touched a raw nerve), and this led me to write this article series.

Opinions expressed in this article are solely my own and do not express the views or opinions of my employer.

The challenge(s)

So, you decided to run your containerized applications on Kubernetes, because everyone seems to be doing it these days. Day 0 looks fantastic. You deploy, scale, and observe. Then Day 1 and Day 2 arrive. Day 3 is around the corner. You suddenly notice that Kubernetes is a microcosmos. You are challenged to make decisions. How many clusters should I create? Which tenancy model should I use? How should I encrypt service-to-service communication?

Decision #1, multi-tenancy

Security should always be job zero: if you do not have a secure system, you might not have a system.

Whether you are a Software-as-a-Service (SaaS) provider or simply looking to host multiple in-house tenants, i.e., multiple developer teams in a single cluster, you will have to make a multi-tenancy decision. How you should approach multi-tenancy differs a great deal between these use cases.

No matter how you look at it, Kubernetes (to date) was designed as a single-tenant orchestrator from the ground up. It is tempting to ‘enforce’ a multi-tenant setup using, for example, namespace isolation, but that is only a logical separation and is far from a secure approach.

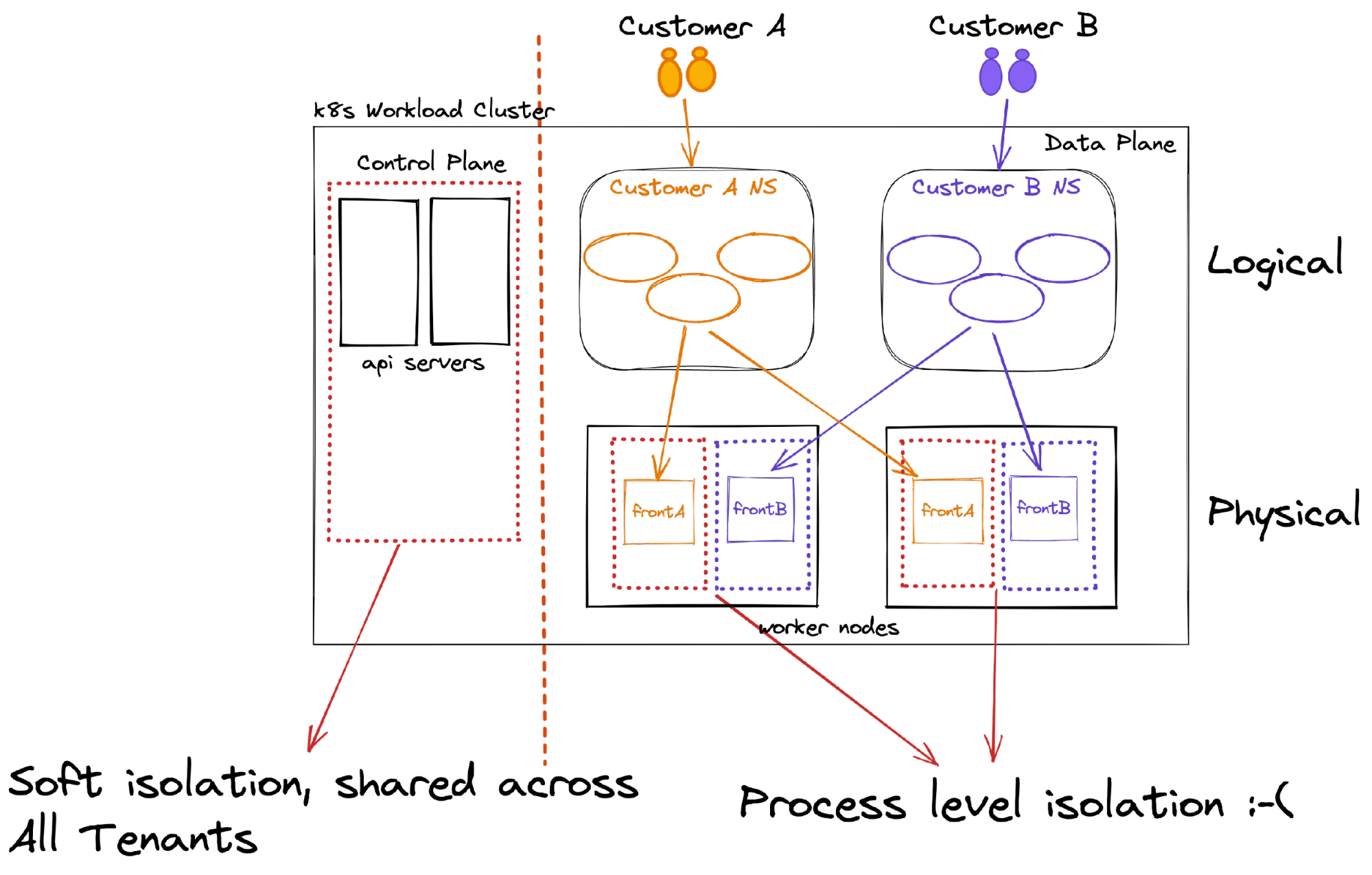

Let’s look at the following diagram to understand the challenge.

The control plane

The k8s control plane is a cluster-wide shared entity. You control access to the API and its objects through RBAC permissions, but that’s (almost) all you’ve got, my friend. In addition, RBAC rules are far from easy to write and audit, which increases the likelihood of operator mistakes.

You may ask yourself what the ‘big deal’ is. Imagine a scenario where a developer writes an app that needs to query the k8s API, and the app unintentionally runs with a k8s identity that allows it to list all the k8s namespaces. If your namespaces are named after your customers, you have just potentially exposed the names of every customer running on your cluster.
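The namespace-listing scenario can be illustrated with a deliberately simplified model of RBAC evaluation. This is a toy sketch, not the real Kubernetes authorizer: the `Rule`, `Binding`, and `allowed` names and the `log-enricher` service account are hypothetical, and only the distinction between a namespaced binding and a cluster-wide binding is modeled.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional, Tuple


@dataclass(frozen=True)
class Rule:
    verbs: FrozenSet[str]
    resources: FrozenSet[str]


@dataclass(frozen=True)
class Binding:
    subject: str                # identity the rules are granted to
    rules: Tuple[Rule, ...]     # rules of the bound role
    namespace: Optional[str]    # None models a cluster-wide binding


def allowed(bindings, subject, verb, resource, namespace=None):
    """Toy check. namespace=None models a cluster-scoped request,
    such as listing namespaces."""
    for b in bindings:
        if b.subject != subject:
            continue
        # A namespaced binding only grants access inside its own namespace.
        if b.namespace is not None and b.namespace != namespace:
            continue
        if any(verb in r.verbs and resource in r.resources for r in b.rules):
            return True
    return False


# Hypothetical identity used by a tenant's application.
SA = "system:serviceaccount:tenant-a:log-enricher"
read_pods = Rule(frozenset({"get", "list"}), frozenset({"pods"}))
list_namespaces = Rule(frozenset({"list"}), frozenset({"namespaces"}))

# Intended setup: the app can only read pods in its own namespace.
scoped = [Binding(SA, (read_pods,), "tenant-a")]
# Mistake: an extra cluster-wide binding that lets it list namespaces.
too_broad = scoped + [Binding(SA, (list_namespaces,), None)]

print(allowed(scoped, SA, "list", "namespaces"))     # False
print(allowed(too_broad, SA, "list", "namespaces"))  # True
```

One extra cluster-wide binding is enough to turn a tenant-scoped identity into one that can enumerate every tenant, which is the kind of mistake that is easy to make and hard to spot in an audit.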

Another example is an exposed app configuration option that lets a tenant enrich log entries with fields from k8s constructs (for example, pod fields). Because the enrichment queries the k8s API, it has the potential to saturate the API and render it inoperable. Capabilities such as API Priority and Fairness (enabled by default) can help mitigate this, but they are still runtime preventative protection rather than strong isolation.

The data plane

Containers provide process-level isolation: they run on the same host and share a single kernel, and that’s all. So, with only k8s namespace isolation, tenants share the same compute environment, pretty much like multiple processes running on the same virtual machine (well, almost). Even when you extend k8s namespace isolation with tenant affinity, resulting in tenant = namespace = dedicated compute, your tenants still share the same networking space.
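As a rough sketch of the tenant = namespace = dedicated compute idea, the snippet below builds a Pod manifest that is pinned to a tenant's nodes with a node selector and a matching toleration. The `tenant` label and taint key, the `dedicated_pod` helper, and the image name are assumptions for illustration; the manifest fields themselves (`nodeSelector`, `tolerations`) are standard Pod spec fields.

```python
import json


def dedicated_pod(tenant: str, name: str, image: str) -> dict:
    """Build a Pod manifest that can only land on nodes labeled for `tenant`.

    Assumes (hypothetically) that the tenant's nodes carry the label
    tenant=<tenant> and the taint tenant=<tenant>:NoSchedule.
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        # One namespace per tenant, named after the tenant.
        "metadata": {"name": name, "namespace": tenant},
        "spec": {
            # Only schedule onto this tenant's nodes.
            "nodeSelector": {"tenant": tenant},
            # Tolerate the taint that keeps other tenants' pods off them.
            "tolerations": [
                {
                    "key": "tenant",
                    "operator": "Equal",
                    "value": tenant,
                    "effect": "NoSchedule",
                }
            ],
            "containers": [{"name": name, "image": image}],
        },
    }


pod = dedicated_pod("tenant-a", "web", "nginx:1.27")
print(json.dumps(pod, indent=2))
```

The node selector keeps this tenant's pods on its own nodes, while the taint and toleration pair keeps everyone else's pods off them. Neither setting changes which pods this pod can reach over the network.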

Kubernetes offers a flat networking model out of the box: a pod can communicate with any pod or node regardless of its data-plane location. Very often, network policies are used to limit this access to specific approved flows, e.g., all pods in namespace-a can access all pods in namespace-b but not those in namespace-c. This practice is still considered soft-isolation tenancy, as the tenants share the same network. However, combined with tenant affinity, it mitigates the noisy neighbor effect, because each tenant is placed on its own dedicated compute resources.
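A network policy for this kind of approved flow might look like the sketch below, which generates a `NetworkPolicy` manifest admitting ingress into one namespace only from another. The `allow_ingress_from` helper is hypothetical; the namespace names are taken from the example in the text, and the selector relies on the `kubernetes.io/metadata.name` label that recent Kubernetes versions set on every namespace.

```python
import json


def allow_ingress_from(target_ns: str, source_ns: str) -> dict:
    """Build a NetworkPolicy for `target_ns` that admits ingress traffic
    only from pods in `source_ns`."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"allow-from-{source_ns}", "namespace": target_ns},
        "spec": {
            # An empty podSelector selects every pod in target_ns.
            "podSelector": {},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    "from": [
                        {
                            "namespaceSelector": {
                                "matchLabels": {
                                    "kubernetes.io/metadata.name": source_ns
                                }
                            }
                        }
                    ]
                }
            ],
        },
    }


policy = allow_ingress_from("namespace-b", "namespace-a")
print(json.dumps(policy, indent=2))
```

Since JSON is accepted wherever YAML manifests are, the printed output can be applied directly. Two caveats: the policy only takes effect if the cluster's network plugin enforces network policies, and as written it also blocks traffic between pods inside namespace-b, so a real setup would likely need an additional rule for same-namespace traffic.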

So how do we make a decision? There seem to be so many vectors to consider. The answer, like anything in architecture, stems from business requirements, risk analysis, constraints, and the selection of tools and technologies that mitigate the gaps.

In the next section, we start by first defining tenant profiles and then...
