Service Mesh之Istio部署bookinfo - Linux-1874

source link: https://www.cnblogs.com/qiuhom-1874/p/17284069.html

Go to the source link to view the article. You can view the picture content, updated content and better typesetting reading experience. If the link is broken, please click the button below to view the snapshot at that time.

Service Mesh之Istio部署bookinfo

前文我们了解了service mesh、分布式服务治理和istio部署相关话题,回顾请参考https://www.cnblogs.com/qiuhom-1874/p/17281541.html;今天我们在istio环境中部署官方示例项目bookinfo;通过部署bookinfo项目来了解istio;

给istio部署插件

root@k8s-master01:/usr/local# cd istioroot@k8s-master01:/usr/local/istio# ls samples/addons/extras grafana.yaml jaeger.yaml kiali.yaml prometheus.yaml README.mdroot@k8s-master01:/usr/local/istio# kubectl apply -f samples/addons/serviceaccount/grafana createdconfigmap/grafana createdservice/grafana createddeployment.apps/grafana createdconfigmap/istio-grafana-dashboards createdconfigmap/istio-services-grafana-dashboards createddeployment.apps/jaeger createdservice/tracing createdservice/zipkin createdservice/jaeger-collector createdserviceaccount/kiali createdconfigmap/kiali createdclusterrole.rbac.authorization.k8s.io/kiali-viewer createdclusterrole.rbac.authorization.k8s.io/kiali createdclusterrolebinding.rbac.authorization.k8s.io/kiali createdrole.rbac.authorization.k8s.io/kiali-controlplane createdrolebinding.rbac.authorization.k8s.io/kiali-controlplane createdservice/kiali createddeployment.apps/kiali createdserviceaccount/prometheus createdconfigmap/prometheus createdclusterrole.rbac.authorization.k8s.io/prometheus createdclusterrolebinding.rbac.authorization.k8s.io/prometheus createdservice/prometheus createddeployment.apps/prometheus createdroot@k8s-master01:/usr/local/istio# |

提示:istio插件的部署清单在istio/samples/addons/目录下,该目录下有grafana、jaeger、kiali、prometheus的部署清单;其中jaeger是负责链路追踪,kiali是istio的一个web客户端工具,我们可以在web页面来管控istio,prometheus是负责指标数据采集,grafana负责指标数据的展示工具;应用该目录下的所有部署清单后,对应istio-system名称空间下会跑相应的pod和相应的svc资源;

验证:在istio-system名称空间下,查看对应pod是否正常跑起来了?对应svc资源是否创建?

root@k8s-master01:/usr/local/istio# kubectl get pods -n istio-systemNAME READY STATUS RESTARTS AGEgrafana-69f9b6bfdc-cm966 1/1 Running 0 12mistio-egressgateway-774d6846df-fv97t 1/1 Running 3 (144m ago) 22histio-ingressgateway-69499dc-pdgld 1/1 Running 3 (144m ago) 22histiod-65dcb8497-9skn9 1/1 Running 3 (145m ago) 22hjaeger-cc4688b98-wzfph 1/1 Running 0 12mkiali-594965b98c-kbllg 1/1 Running 0 64sprometheus-5f84bbfcfd-62nwc 2/2 Running 0 12mroot@k8s-master01:/usr/local/istio# kubectl get svc -n istio-systemNAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGEgrafana ClusterIP 10.107.10.186 <none> 3000/TCP 12mistio-egressgateway ClusterIP 10.106.179.126 <none> 80/TCP,443/TCP 22histio-ingressgateway LoadBalancer 10.102.211.120 192.168.0.252 15021:32639/TCP,80:31338/TCP,443:30597/TCP,31400:31714/TCP,15443:32154/TCP 22histiod ClusterIP 10.96.6.69 <none> 15010/TCP,15012/TCP,443/TCP,15014/TCP 22hjaeger-collector ClusterIP 10.100.138.187 <none> 14268/TCP,14250/TCP,9411/TCP 12mkiali ClusterIP 10.99.88.50 <none> 20001/TCP,9090/TCP 12mprometheus ClusterIP 10.108.131.84 <none> 9090/TCP 12mtracing ClusterIP 10.100.53.36 <none> 80/TCP,16685/TCP 12mzipkin ClusterIP 10.110.231.233 <none> 9411/TCP 12mroot@k8s-master01:/usr/local/istio# |

提示:可以看到对应pod都在正常runing并处于ready状态;对应svc资源也都正常创建;我们要想访问对应服务,可以在集群内部访问对应的clusterIP来访问;也可以修改svc对应资源类型为nodeport或者loadbalancer类型;当然除了上述修改svc资源类型的方式实现集群外部访问之外,我们也可以通过istio的入口网关来访问;不过这种方式需要我们先通过配置文件告诉给istiod,让其把对应的服务通过ingressgate的外部IP地址暴露出来;

这里说一下通过ingressgateway暴露服务的原理;我们在安装istio以后,对应会在k8s上创建一些crd资源,这些crd资源就是用来定义如何管控流量的;即我们通过定义这些crd类型的资源来告诉istiod,对应服务该如何暴露;只要我们在k8s集群上创建这些crd类型的资源以后,对应istiod就会将其收集起来,把对应资源转换为envoy的配置文件格式,再统一下发给通过istio注入的sidecar,以实现配置envoy的目的(envoy就是istio注入到应用pod中的sidecar);

查看crd

root@k8s-master01:~# kubectl get crdsNAME CREATED ATauthorizationpolicies.security.istio.io 2023-04-02T16:28:24Zbgpconfigurations.crd.projectcalico.org 2023-04-02T02:26:34Zbgppeers.crd.projectcalico.org 2023-04-02T02:26:34Zblockaffinities.crd.projectcalico.org 2023-04-02T02:26:34Zcaliconodestatuses.crd.projectcalico.org 2023-04-02T02:26:34Zclusterinformations.crd.projectcalico.org 2023-04-02T02:26:34Zdestinationrules.networking.istio.io 2023-04-02T16:28:24Zenvoyfilters.networking.istio.io 2023-04-02T16:28:24Zfelixconfigurations.crd.projectcalico.org 2023-04-02T02:26:34Zgateways.networking.istio.io 2023-04-02T16:28:24Zglobalnetworkpolicies.crd.projectcalico.org 2023-04-02T02:26:34Zglobalnetworksets.crd.projectcalico.org 2023-04-02T02:26:34Zhostendpoints.crd.projectcalico.org 2023-04-02T02:26:34Zipamblocks.crd.projectcalico.org 2023-04-02T02:26:34Zipamconfigs.crd.projectcalico.org 2023-04-02T02:26:34Zipamhandles.crd.projectcalico.org 2023-04-02T02:26:34Zippools.crd.projectcalico.org 2023-04-02T02:26:34Zipreservations.crd.projectcalico.org 2023-04-02T02:26:34Zistiooperators.install.istio.io 2023-04-02T16:28:24Zkubecontrollersconfigurations.crd.projectcalico.org 2023-04-02T02:26:34Znetworkpolicies.crd.projectcalico.org 2023-04-02T02:26:34Znetworksets.crd.projectcalico.org 2023-04-02T02:26:34Zpeerauthentications.security.istio.io 2023-04-02T16:28:24Zproxyconfigs.networking.istio.io 2023-04-02T16:28:24Zrequestauthentications.security.istio.io 2023-04-02T16:28:24Zserviceentries.networking.istio.io 2023-04-02T16:28:24Zsidecars.networking.istio.io 2023-04-02T16:28:24Ztelemetries.telemetry.istio.io 2023-04-02T16:28:24Zvirtualservices.networking.istio.io 2023-04-02T16:28:24Zwasmplugins.extensions.istio.io 2023-04-02T16:28:24Zworkloadentries.networking.istio.io 2023-04-02T16:28:24Zworkloadgroups.networking.istio.io 2023-04-02T16:28:24Zroot@k8s-master01:~# kubectl api-resources --api-group=networking.istio.ioNAME SHORTNAMES APIVERSION NAMESPACED KINDdestinationrules dr networking.istio.io/v1beta1 true DestinationRuleenvoyfilters networking.istio.io/v1alpha3 true EnvoyFiltergateways gw networking.istio.io/v1beta1 true Gatewayproxyconfigs networking.istio.io/v1beta1 true ProxyConfigserviceentries se networking.istio.io/v1beta1 true ServiceEntrysidecars networking.istio.io/v1beta1 true Sidecarvirtualservices vs networking.istio.io/v1beta1 true VirtualServiceworkloadentries we networking.istio.io/v1beta1 true WorkloadEntryworkloadgroups wg networking.istio.io/v1beta1 true WorkloadGrouproot@k8s-master01:~# |

提示:可以看到在networking.istio.io这个群组里面有很多crd资源类型;其中gateway就是来定义如何接入外部流量的;virtualservice就是来定义虚拟主机的(类似apache中的虚拟主机),destinationrules用于定义外部流量通过gateway进来以后,结合virtualservice路由,对应目标该如何承接对应流量的;我们在k8s集群上创建这些类型的crd资源以后,都会被istiod收集并由它负责将其转换为envoy识别的格式配置统一下发给整个网格内所有的envoy sidecar或istio-system名称空间下所有envoy pod;

通过istio ingressgateway暴露kiali服务

定义 kiali-gateway资源实现流量匹配

# cat kiali-gateway.yaml apiVersion: networking.istio.io/v1beta1kind: Gatewaymetadata:name: kiali-gatewaynamespace: istio-systemspec:selector:app: istio-ingressgatewayservers:- port:number: 80name: http-kialiprotocol: HTTPhosts:- "kiali.ik8s.cc" |

提示:该资源定义了通过istio-ingresstateway进来的流量,匹配主机头为kiali.ik8s.cc,协议为http,端口为80的流量;

定义virtualservice资源实现路由

# cat kiali-virtualservice.yaml apiVersion: networking.istio.io/v1beta1kind: VirtualServicemetadata:name: kiali-virtualservicenamespace: istio-systemspec:hosts:- "kiali.ik8s.cc"gateways:- kiali-gatewayhttp:- match:- uri:prefix: /route:- destination:host: kialiport:number: 20001 |

提示:该资源定义了gateway进来的流量匹配主机头为kiali.ik8s.cc,uri匹配“/”;就把对应流量路由至对应服务名为kiali的服务的20001端口进行响应;

定义destinationrule实现如何承接对应流量

# cat kiali-destinationrule.yaml apiVersion: networking.istio.io/v1beta1kind: DestinationRulemetadata:name: kialinamespace: istio-systemspec:host: kialitrafficPolicy:tls:mode: DISABLE |

提示:该资源定义了对应承接非tls的流量;即关闭kiali服务的tls功能;

应用上述配置清单

root@k8s-master01:~/istio-in-practise/Traffic-Management-Basics/kiali-port-80# kubectl apply -f .destinationrule.networking.istio.io/kiali createdgateway.networking.istio.io/kiali-gateway createdvirtualservice.networking.istio.io/kiali-virtualservice createdroot@k8s-master01:~/istio-in-practise/Traffic-Management-Basics/kiali-port-80# kubectl get gw -n istio-systemNAME AGEkiali-gateway 27sroot@k8s-master01:~/istio-in-practise/Traffic-Management-Basics/kiali-port-80# kubectl get vs -n istio-system NAME GATEWAYS HOSTS AGEkiali-virtualservice ["kiali-gateway"] ["kiali.ik8s.cc"] 33sroot@k8s-master01:~/istio-in-practise/Traffic-Management-Basics/kiali-port-80# kubectl get dr -n istio-system NAME HOST AGEkiali kiali 38sroot@k8s-master01:~/istio-in-practise/Traffic-Management-Basics/kiali-port-80# |

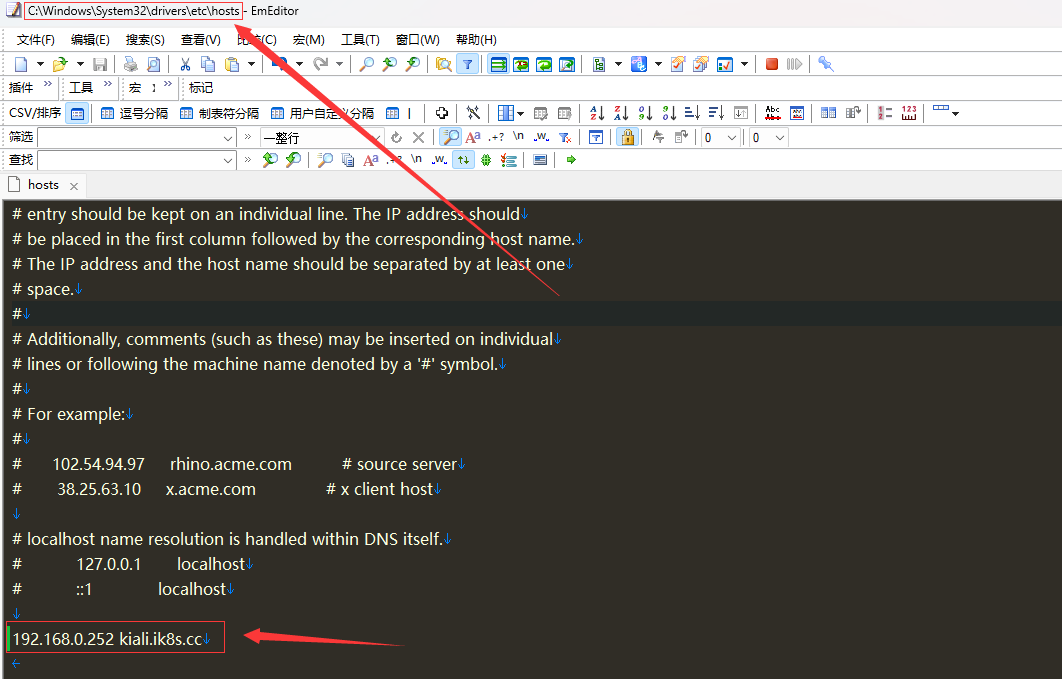

通过集群外部客户端的hosts文件将kiali.ik8s.cc解析至istio-ingressgateway外部地址

提示:我这里是一台win11的客户端,修改C:\Windows\System32\drivers\etc\hosts文件来实现解析;

测试,用浏览器访问kiali.ik8s.cc看看对应是否能够访问到kiali服务呢?

提示:可以看到我们现在就把集群内部kiali服务通过gateway、virtualservice、destinationrule这三种资源的创建将其暴露给ingresgateway的外部地址上;对于其他服务我们也可以采用类似的逻辑将其暴露出来;这里建议kiali不要直接暴露给集群外部客户端访问,因为kiali没有认证,但它又具有管理istio的功能;

部署bookinfo

root@k8s-master01:/usr/local/istio# kubectl apply -f samples/bookinfo/platform/kube/bookinfo.yaml service/details createdserviceaccount/bookinfo-details createddeployment.apps/details-v1 createdservice/ratings createdserviceaccount/bookinfo-ratings createddeployment.apps/ratings-v1 createdservice/reviews createdserviceaccount/bookinfo-reviews createddeployment.apps/reviews-v1 createddeployment.apps/reviews-v2 createddeployment.apps/reviews-v3 createdservice/productpage createdserviceaccount/bookinfo-productpage createddeployment.apps/productpage-v1 createdroot@k8s-master01:/usr/local/istio# |

提示:我们安装istio以后,bookinfo的部署清单就在istio/samples/bookinfo/platform/kube/目录下;该部署清单会在default名称空间将bookinfo需要的pod运行起来,并创建相应的svc资源;

验证:查看default名称空间下的pod和svc资源,看看对应pod和svc是否正常创建?

root@k8s-master01:/usr/local/istio# kubectl get podsNAME READY STATUS RESTARTS AGEdetails-v1-6997d94bb9-4jssp 2/2 Running 0 2m56sproductpage-v1-d4f8dfd97-z2pcz 2/2 Running 0 2m55sratings-v1-b8f8fcf49-j8l44 2/2 Running 0 2m56sreviews-v1-5896f547f5-v2h92 2/2 Running 0 2m56sreviews-v2-5d99885bc9-dhjdk 2/2 Running 0 2m55sreviews-v3-589cb4d56c-rw6rw 2/2 Running 0 2m55sroot@k8s-master01:/usr/local/istio# kubectl get svcNAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGEdetails ClusterIP 10.109.96.34 <none> 9080/TCP 3m2skubernetes ClusterIP 10.96.0.1 <none> 443/TCP 38hproductpage ClusterIP 10.101.76.112 <none> 9080/TCP 3m1sratings ClusterIP 10.97.209.163 <none> 9080/TCP 3m2sreviews ClusterIP 10.108.1.117 <none> 9080/TCP 3m2sroot@k8s-master01:/usr/local/istio# |

提示:可以看到default名称空间下跑了几个pod,每个pod内部都有两个容器,其中一个是bookinfo程序的主容器,一个是istio注入的sidecar;bookinfo的访问入口是productpage;

验证:查看istiod是否将配置下发给我们刚才部署的bookinfo中注入的sidecar配置?

root@k8s-master01:/usr/local/istio# istioctl ps NAME CLUSTER CDS LDS EDS RDS ECDS ISTIOD VERSIONdetails-v1-6997d94bb9-4jssp.default Kubernetes SYNCED SYNCED SYNCED SYNCED NOT SENT istiod-65dcb8497-9skn9 1.17.1istio-egressgateway-774d6846df-fv97t.istio-system Kubernetes SYNCED SYNCED SYNCED NOT SENT NOT SENT istiod-65dcb8497-9skn9 1.17.1istio-ingressgateway-69499dc-pdgld.istio-system Kubernetes SYNCED SYNCED SYNCED SYNCED NOT SENT istiod-65dcb8497-9skn9 1.17.1productpage-v1-d4f8dfd97-z2pcz.default Kubernetes SYNCED SYNCED SYNCED SYNCED NOT SENT istiod-65dcb8497-9skn9 1.17.1ratings-v1-b8f8fcf49-j8l44.default Kubernetes SYNCED SYNCED SYNCED SYNCED NOT SENT istiod-65dcb8497-9skn9 1.17.1reviews-v1-5896f547f5-v2h92.default Kubernetes SYNCED SYNCED SYNCED SYNCED NOT SENT istiod-65dcb8497-9skn9 1.17.1reviews-v2-5d99885bc9-dhjdk.default Kubernetes SYNCED SYNCED SYNCED SYNCED NOT SENT istiod-65dcb8497-9skn9 1.17.1reviews-v3-589cb4d56c-rw6rw.default Kubernetes SYNCED SYNCED SYNCED SYNCED NOT SENT istiod-65dcb8497-9skn9 1.17.1root@k8s-master01:/usr/local/istio# |

提示:这里我们只需要关心cds、lds、eds、rds即可;显示synced表示对应配置已经下发;配置下发完成以后,对应服务就可以在集群内部访问了;

验证:在集群内部部署一个客户端pod,访问productpage:9080看看对应bookinfo是否被访问到?

root@k8s-master01:/usr/local/istio# kubectl apply -f samples/sleep/sleep.yaml serviceaccount/sleep createdservice/sleep createddeployment.apps/sleep createdroot@k8s-master01:/usr/local/istio# kubectl get pods NAME READY STATUS RESTARTS AGEdetails-v1-6997d94bb9-4jssp 2/2 Running 0 12mproductpage-v1-d4f8dfd97-z2pcz 2/2 Running 0 12mratings-v1-b8f8fcf49-j8l44 2/2 Running 0 12mreviews-v1-5896f547f5-v2h92 2/2 Running 0 12mreviews-v2-5d99885bc9-dhjdk 2/2 Running 0 12mreviews-v3-589cb4d56c-rw6rw 2/2 Running 0 12msleep-bc9998558-vjc48 2/2 Running 0 50sroot@k8s-master01:/usr/local/istio# |

进入sleep pod,访问productpage:9080看看是否能访问?

root@k8s-master01:/usr/local/istio# kubectl exec -it sleep-bc9998558-vjc48 -- /bin/sh/ $ cd~ $ curl productpage:9080<!DOCTYPE html><html><head><title>Simple Bookstore App</title><meta charset="utf-8"><meta http-equiv="X-UA-Compatible" content="IE=edge"><meta name="viewport" content="width=device-width, initial-scale=1"><!-- Latest compiled and minified CSS --><link rel="stylesheet" href="static/bootstrap/css/bootstrap.min.css"><!-- Optional theme --><link rel="stylesheet" href="static/bootstrap/css/bootstrap-theme.min.css"></head><body><p><h3>Hello! This is a simple bookstore application consisting of three services as shown below</h3></p><table class="table table-condensed table-bordered table-hover"><tr><th>name</th><td>http://details:9080</td></tr><tr><th>endpoint</th><td>details</td></tr><tr><th>children</th><td><table class="table table-condensed table-bordered table-hover"><tr><th>name</th><th>endpoint</th><th>children</th></tr><tr><td>http://details:9080</td><td>details</td><td></td></tr><tr><td>http://reviews:9080</td><td>reviews</td><td><table class="table table-condensed table-bordered table-hover"><tr><th>name</th><th>endpoint</th><th>children</th></tr><tr><td>http://ratings:9080</td><td>ratings</td><td></td></tr></table></td></tr></table></td></tr></table><p><h4>Click on one of the links below to auto generate a request to the backend as a real user or a tester</h4></p><p><a href="/productpage?u=normal">Normal user</a></p><p><a href="/productpage?u=test">Test user</a></p><!-- Latest compiled and minified JavaScript --><script src="static/jquery.min.js"></script><!-- Latest compiled and minified JavaScript --><script src="static/bootstrap/js/bootstrap.min.js"></script></body></html>~ $ |

提示:可以看到对应客户端pod能够正常访问productpage:9080;这里说一下我们在集群内部pod中用productpage来访问服务是可以正常被coredns解析到对应svc上进行响应的;

暴露bookinfo给集群外部客户端访问

root@k8s-master01:~# cat /usr/local/istio/samples/bookinfo/networking/bookinfo-gateway.yaml apiVersion: networking.istio.io/v1alpha3kind: Gatewaymetadata:name: bookinfo-gatewayspec:selector:istio: ingressgateway # use istio default controllerservers:- port:number: 80name: httpprotocol: HTTPhosts:- "*"---apiVersion: networking.istio.io/v1alpha3kind: VirtualServicemetadata:name: bookinfospec:hosts:- "*"gateways:- bookinfo-gatewayhttp:- match:- uri:exact: /productpage- uri:prefix: /static- uri:exact: /login- uri:exact: /logout- uri:prefix: /api/v1/productsroute:- destination:host: productpageport:number: 9080root@k8s-master01:~# |

提示:该清单将bookinfo通过关联ingressgateway的外部地址的80端口关联,所以我们访问ingressgateway的外部地址就可以访问到bookinfo;

root@k8s-master01:~# kubectl apply -f /usr/local/istio/samples/bookinfo/networking/bookinfo-gateway.yamlgateway.networking.istio.io/bookinfo-gateway createdvirtualservice.networking.istio.io/bookinfo createdroot@k8s-master01:~# |

验证:访问ingressgateway的外部地址,看看对应bookinfo是否能够被访问到?

提示:可以看到现在我们在集群外部通过访问ingressgateway的外部地址就能正常访问到bookinfo,通过多次访问,还可以实现不同的效果;

模拟客户端访问bookinfo

root@k8s-node03:~# while true ; do curl 192.168.0.252/productpage;sleep 0.$RANDOM;done |

在kiali上查看绘图

提示:我们在kiali上看到的图形,就是通过模拟客户端访问流量所形成的图形;该图形能够形象的展示对应服务流量的top,以及动态显示对应流量访问应用的比例;我们可以通过定义配置文件的方式,动态调整客户端能够访问到bookinfo那个版本;对应绘图也会通过采集到的指标数据动态将流量路径绘制出来;

通过bookinfo测试流量治理功能

创建destinationrule

root@k8s-master01:/usr/local/istio# cat samples/bookinfo/networking/destination-rule-all.yamlapiVersion: networking.istio.io/v1alpha3kind: DestinationRulemetadata:name: productpagespec:host: productpagesubsets:- name: v1labels:version: v1---apiVersion: networking.istio.io/v1alpha3kind: DestinationRulemetadata:name: reviewsspec:host: reviewssubsets:- name: v1labels:version: v1- name: v2labels:version: v2- name: v3labels:version: v3---apiVersion: networking.istio.io/v1alpha3kind: DestinationRulemetadata:name: ratingsspec:host: ratingssubsets:- name: v1labels:version: v1- name: v2labels:version: v2- name: v2-mysqllabels:version: v2-mysql- name: v2-mysql-vmlabels:version: v2-mysql-vm---apiVersion: networking.istio.io/v1alpha3kind: DestinationRulemetadata:name: detailsspec:host: detailssubsets:- name: v1labels:version: v1- name: v2labels:version: v2---root@k8s-master01:/usr/local/istio# |

提示:上述清单主要定义了不同版本对应的服务的版本;

root@k8s-master01:/usr/local/istio# kubectl apply -f samples/bookinfo/networking/destination-rule-all.yamldestinationrule.networking.istio.io/productpage createddestinationrule.networking.istio.io/reviews createddestinationrule.networking.istio.io/ratings createddestinationrule.networking.istio.io/details createdroot@k8s-master01:/usr/local/istio# |

将所有流量路由至v1版本

root@k8s-master01:/usr/local/istio# kubectl apply -f samples/bookinfo/networking/virtual-service-all-v1.yamlvirtualservice.networking.istio.io/productpage createdvirtualservice.networking.istio.io/reviews createdvirtualservice.networking.istio.io/ratings createdvirtualservice.networking.istio.io/details createdroot@k8s-master01:/usr/local/istio# |

验证:在kiali上查看对应流量是否只有v1版本了?

提示:可以看到现在kiali绘制的图里面就只有v1版本的流量,其他v2,v3版本流量就没有了;

通过客户端登陆身份标识来路由

root@k8s-master01:/usr/local/istio# cat samples/bookinfo/networking/virtual-service-reviews-test-v2.yamlapiVersion: networking.istio.io/v1alpha3kind: VirtualServicemetadata:name: reviewsspec:hosts:- reviewshttp:- match:- headers:end-user:exact: jasonroute:- destination:host: reviewssubset: v2- route:- destination:host: reviewssubset: v1root@k8s-master01:/usr/local/istio# |

提示:上述清单定义了,登录用户名为jason,就响应v2版本;其他未登录的客户端还是以v1版本响应;

验证:应用配置清单,登录jason,看看是否是以v2版本响应?

提示:可以看到我们应用了配置清单以后,对应模拟客户端访问的还是一直以v1的版本响应;我们登录jason用户以后,对应响应给我们的页面就是v2版本,退出jason用户又是以v1版本响应;

以上就是bookinfo在istio服务网格中,通过定义不同的配置,实现高级流量治理的效果;

Recommend

About Joyk

Aggregate valuable and interesting links.

Joyk means Joy of geeK