What happens when anyone can talk to a machine?

source link: https://khoipond.substack.com/p/what-happens-when-anyone-can-talk?


If you’ve ever used a website to reschedule your DMV appointment, cancel a membership, or get flight details and thrown your hands up in exasperation thinking “JUST LET ME TALK TO A PERSON” – you are not alone. In the era of “user friendly” design, interacting with machines still requires too much translation. The operator and the machine are speaking past each other.

With the rise of conversational AI, I think there will be less translation required for humans to speak to machines, making technology accessible to more people.

AI enables a new input and output for machines: natural language

Although less translation is required in the way we physically interact with machines today compared to the days of punchcards, there is still a semantic gap between what a machine outputs (e.g. a red light on your car dashboard) and how we interpret it (I need to get gas). That semantic gap closed as humans learned to map the machine’s output to a meaning. They learned the language of the machine. “404” means I typed a URL wrong, “A1 MAINTENANCE” means I need a tire check-up AND an oil change, “flashing LED” means I need to charge my [insert trendy wearable name here]. Over time, designers have helped bridge this gap by making symbols and icons more intuitive, making tooltip labels and error messages more understandable, and generally aligning machine outputs with the mental models their users already have.

But we are still in a paradigm of having to “learn” new graphical user interfaces (GUIs) that designers make. With the rise of large language models (LLMs) such as GPT, there will be a new paradigm: machines taking natural language as input so that we don’t have to “learn” as much.

Designer Agatha Yu recently tweeted:

“We are finally at a point where language can be a HCI [human-computer interaction] input, instead of traversing through a narrative tree (that’s basically operating hamburger menus with voice).”

The way I interpret Agatha’s tweet is: although we have been able to physically speak to machines for some time, their inputs aren’t actually natural language. Their inputs are a set of learned commands conveyed via voice: “Siri, set a timer for 2 minutes. Play Sara Bareilles’ album. Call Mom.” This leads to a branching set of new commands: “Would you like to call Mom cell? or Home?” The current paradigm enforces the need to learn Siri’s language, specifically which commands Siri understands (e.g. “Yo Siri, drop my mom a line” does not work). The difference between speaking commands down a tree and interacting via natural language sounds subtle, but it is a massive leap in capabilities. While voice assistants have already aided people with disabilities, their limited, predefined trees of commands hold their users back from doing more. A true conversational AI could unlock the ability to do complex tasks. Imagine making a doctor’s appointment or refilling medication for someone who can’t navigate a complex interface for any reason. Additionally, by reducing the need to “learn Siri’s language,” conversational AI can lower the barrier to general usage. This is because if AI understands human language, it can serve as a translator between human and machine.
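To make the "learned commands" idea concrete, here is a minimal sketch of a command-style assistant. It is purely illustrative: the command table and the `handle` function are hypothetical and have nothing to do with how Siri is actually built. The point is only that anything outside the predefined phrasings falls through.

```python
import re

# Hypothetical command table: the assistant only understands
# utterances that match one of these fixed patterns.
COMMANDS = [
    (re.compile(r"^set a timer for (\d+) minutes?$", re.I), "timer"),
    (re.compile(r"^play (.+)$", re.I), "play"),
    (re.compile(r"^call (.+)$", re.I), "call"),
]


def handle(utterance: str) -> str:
    """Return the matched action, or a fallback if no pattern fits."""
    text = utterance.strip().rstrip(".!?")
    for pattern, action in COMMANDS:
        match = pattern.match(text)
        if match:
            return f"{action}: {match.group(1)}"
    return "Sorry, I didn't get that."


print(handle("Call Mom"))                # matches the "call" pattern
print(handle("Yo, drop my mom a line"))  # same intent, but no pattern fits
```

Both utterances carry the same intent, but only the one phrased in the machine's vocabulary gets through. A natural-language interface would need to handle the second one too.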

AI as Translator

I’ve been a “translator,” bridging the gap between human intent and pressing the right buttons to make a machine do the desired task. My three-college-degree-wielding Mom would often give me the 80-button TV remote and ask me to make our “smart” TV load up her favorite K-drama. Now on her new smart TV, she just talks into the microphone on the remote. I have also needed a translator. The other day, I was struggling to write a SQL query, so I had to ask the data scientist on my team to help me. Because the input of the machine is SQL, he serves as my translator, speaking to our data systems through SQL queries on my behalf. What if we could have built-in translators to talk to machines for us?

AI can act as this translator, aligning the inputs of machines (SQL) with the outputs of people (natural language). It can enable people like me to speak plain English to a computer without the need to rely on a technical specialist, learn a coding language, or watch tutorials on how to navigate a complicated GUI.
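As a rough illustration of what that translation step looks like, here is a small sketch using Python's built-in `sqlite3` module. The `orders` table, its rows, and the question are all made up for the example, and the SQL is simply what a translator (a data scientist or an AI) might plausibly hand back for that question.

```python
import sqlite3

# Hypothetical data: a tiny "orders" table standing in for a real data system.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (customer TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("Ana", 20.0), ("Ben", 15.0), ("Ana", 5.0)],
)

# What the person actually wants to know, in plain English.
question = "How much has each customer spent, biggest spender first?"

# What the machine needs to hear: the same request, translated into SQL.
translated_sql = """
    SELECT customer, SUM(amount) AS total
    FROM orders
    GROUP BY customer
    ORDER BY total DESC
"""

rows = conn.execute(translated_sql).fetchall()
print(question)
print(rows)  # [('Ana', 25.0), ('Ben', 15.0)]
```

The person only ever needs to write the `question` line. Everything between it and the answer is the part a built-in translator would take over.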

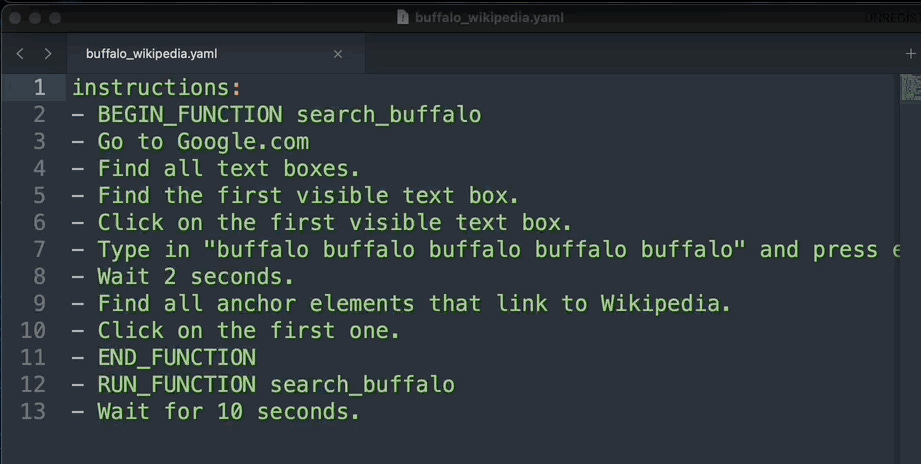

A fantastic example of leapfrogging learning a new coding language is Browser Pilot, which takes natural language as input and “translates it” into Selenium code to perform automated actions in the browser. The example shown in the image below is the instruction set for “search something on Google and click the first Wikipedia link.” This presents a new world where humans can easily tell machines a set of tasks to do without needing to learn code.
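For readers who have never seen Selenium, here is a hand-written sketch of roughly what a script for that instruction could look like. It is not Browser Pilot's actual output. The function name, the search-box selector, and the "first link whose text mentions Wikipedia" heuristic are my own assumptions, and Google's page structure (or a consent screen) may require different steps in practice. It assumes Selenium 4 and a local Chrome installation.

```python
def search_and_click_wikipedia(query: str) -> str:
    """Search Google for `query`, click the first Wikipedia link, return its URL."""
    # Imported inside the function so the sketch can be loaded and read
    # even on a machine where Selenium is not installed.
    from selenium import webdriver
    from selenium.webdriver.common.by import By
    from selenium.webdriver.common.keys import Keys
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait

    driver = webdriver.Chrome()
    try:
        driver.get("https://www.google.com")

        # Assumption: the search box is the element named "q".
        search_box = driver.find_element(By.NAME, "q")
        search_box.send_keys(query + Keys.RETURN)

        # Wait up to 10 seconds for a result link whose text mentions Wikipedia.
        link = WebDriverWait(driver, 10).until(
            EC.element_to_be_clickable((By.PARTIAL_LINK_TEXT, "Wikipedia"))
        )
        link.click()
        return driver.current_url
    finally:
        driver.quit()


# Example call (opens a real browser window):
# search_and_click_wikipedia("Sara Bareilles")
```

Even for a two-step task, the script needs imports, selectors, waits, and cleanup. That is the translation work a plain-English instruction hides.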

What’s exciting about Browser Pilot being an “English -> code translator” is that it simultaneously makes tech more accessible and eliminates “grunt work” for programmers. Now, folks who don’t know how to code, or even programmers who don’t know Selenium, can easily write automation scripts to run in browsers. This means more people can experiment with and build automation scripts to do myriad tasks that we don’t know about today. For example, a baker who wants to get in touch with unhappy customers could tell her browser to go to her Yelp page, skim all the reviews, find semantically negative ones and automatically reply with a nice message and a “please contact us so we can resolve your issues.” Folks who are struggling to navigate a tricky website could ask a browser to “find the customer support number on this website for me.” An artist could tell their browser to repeatedly search Reddit for key terms related to their work and see if anyone is distributing their art without crediting them. An activist could increase their leverage by automating finding the emails of congresspeople and sending them a message. Anyone can tell a machine to do something in the browser for them.

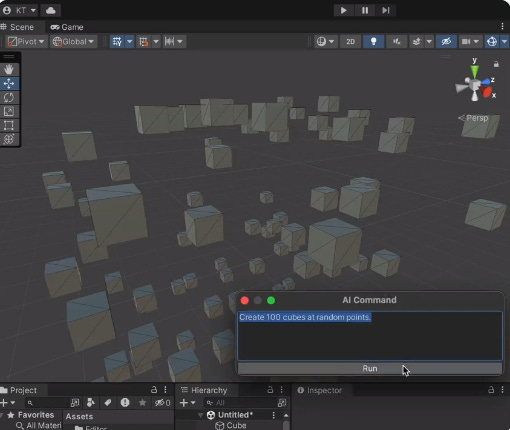

So now we have translators for physical interfaces (smart TV + remote), coding languages (SQL + Selenium), and even for complex software GUIs (the game making tool Unity, see below).

By acting as a built-in translator to help people speak to machines, AI will help more people do complex things with technology without having to learn the complex interactions, languages, and dense GUIs that doing so has traditionally required.

Freeing Up Human Productivity

What does that mean for designers and engineers? Designers know the “language” of humans and programmers know the “language” of machines, and they work together to help humans and machines speak to each other. Does this knowledge become obsolete if AI can help everyone translate natural language into machine code?

I don’t think so. I believe that the value of technical knowledge will actually increase. The best AIs will be “taught” by the best. The best designers and engineers will work on designing and building AI models to be the smoothest, most delightful, and most useful to interact with through natural language. At the same time, designers who no longer have to build UIs (because AI will do it, and some software will be designed around a chat box) and engineers who no longer have to write scripts in syntactically dense languages (because AI will translate human language to scripts) will be freed up to focus on decision-making. Instead of programmers spending time writing scripts to scrape data, they can decide how the data should be used. Designers can spend less time wireframing UIs and more time talking to users and making strategic decisions around user needs. AI is unlocking more leverage for humans.

Collaborating with Machines

AI will help a huge number of people do more complex tasks with tech while allowing workers to focus on higher-level work. I’m personally excited about enabling a huge number of humans to unlock unprecedented levels of technology “leverage” (being able to do more with tech with less technical knowledge). The same way children growing up today can poke around an iPad and kind of naturally figure out how to physically manipulate it to do things for them, children in 2-3 years will be able to talk to a machine and naturally figure out how to verbally manipulate it to not only do things for them but also tell them things they want to know (to a much greater degree than they could with Google today). The iPad becomes less of a tool or toy and more of a collaborator or playmate: today kids “play ON their iPads,” but I think that in 2-3 years they’ll be “playing WITH their iPads” (or Surface Tablets, since Microsoft seems to be winning this AI thing). Even children will be capable of accomplishing more with technology thanks to the paradigm shift that AI is unlocking today, putting them in control of shaping the way they interact with machines rather than being forced into navigational patterns by designers in some Silicon Valley HQ.

Lowering the need for translation changes the dynamic between human and machine: it allows them to speak the same language. The ability to talk to your computer as if it were a collaborator is a key pillar for the future of computing that AI unlocks. “I’m making a song in GarageBand” becomes “GarageBand is helping me write a song.” We’ll live in a world where more people have time for creative pursuits and can have technology assist them in those complex pursuits. We’ll be doing a lot with our machines in the future rather than on our machines. What would you want to do with your machine?

A common metaphor from “User Friendly” by Cliff Kuang and Robert Fabricant.

Do check out the repo and give it a star. My friend Andrew is always excited to talk to folks interested in AI x scraping.
