OpenHarmony 3.2 Beta多媒体系列—音视频播放框架

source link: https://www.51cto.com/article/740483.html

Go to the source link to view the article. You can view the picture content, updated content and better typesetting reading experience. If the link is broken, please click the button below to view the snapshot at that time.

OpenHarmony 3.2 Beta多媒体系列—音视频播放框架

媒体子系统为开发者提供一套接口,方便开发者使用系统的媒体资源,主要包含音视频开发、相机开发、流媒体开发等模块。每个模块都提供给上层应用对应的接口,本文会对音视频开发中的音视频播放框架做一个详细的介绍。

foundation/multimedia/media_standard

├── frameworks #框架代码

│ ├── js

│ │ ├── player

│ ├── native

│ │ ├── player #native实现

│ └── videodisplaymanager #显示管理

│ ├── include

│ └── src

├── interfaces

│ ├── inner_api #内部接口

│ │ └── native

│ └── kits #外部JS接口

├── sa_profile #服务配置文件

└── services

├── engine #engine代码

│ └── gstreamer

├── etc #服务配置文件

├── include #头文件

└── services

├── sa_media #media服务

│ ├── client #media客户端

│ ├── ipc #media ipc调用

│ └── server #media服务端

├── factory #engine工厂

└── player #player服务

├── client #player客户端

├── ipc #player ipc调用

└── server #player服务端三、播放的总体流程

四、Native接口使用

OpenHarmony系统中,音视频播放通过N-API接口提供给上层JS调用,N-API相当于是JS和Native之间的桥梁,在OpenHarmony源码中,提供了C++直接调用的音视频播放例子,在foundation/multimedia/player_framework/test/nativedemo/player目录中。

void PlayerDemo::RunCase(const string &path)

{

player_ = OHOS::Media::PlayerFactory::CreatePlayer();

if (player_ == nullptr) {

cout << "player_ is null" << endl;

return;

}

RegisterTable();

std::shared_ptr<PlayerCallbackDemo> cb = std::make_shared<PlayerCallbackDemo>();

cb->SetBufferingOut(SelectBufferingOut());

int32_t ret = player_->SetPlayerCallback(cb);

if (ret != 0) {

cout << "SetPlayerCallback fail" << endl;

}

if (SelectSource(path) != 0) {

cout << "SetSource fail" << endl;

return;

}

sptr<Surface> producerSurface = nullptr;

producerSurface = GetVideoSurface();

if (producerSurface != nullptr) {

ret = player_->SetVideoSurface(producerSurface);

if (ret != 0) {

cout << "SetVideoSurface fail" << endl;

}

}

SetVideoScaleType();

if (SelectRendererMode() != 0) {

cout << "set renderer info fail" << endl;

}

ret = player_->PrepareAsync();

if (ret != 0) {

cout << "PrepareAsync fail" << endl;

return;

}

cout << "Enter your step:" << endl;

DoNext();

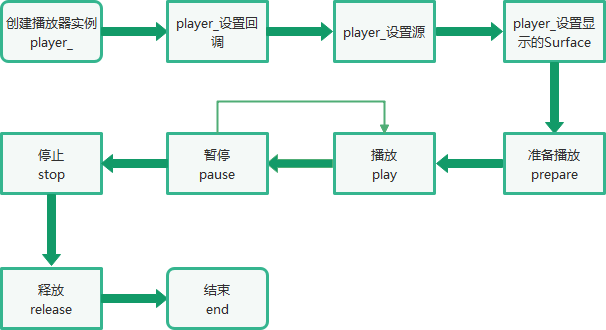

}首先根据RunCase可以大致了解一下播放音视频的主要流程,创建播放器,设置播放源,设置回调方法(包含播放过程中的多种状态的回调),设置播放显示的Surface,这些准备工作做好之后,需要调用播放器的PrepareASync方法,这个方法完成后,播放状态会变成Prepared状态,这时就可以调用播放器的play接口,进行音视频的播放了。

RegisterTable()方法中,将字符串和对应的方法映射到Map中,这样后续的DoNext会根据输入的命令,来决定播放器具体的操作。

void PlayerDemo::DoNext()

{

std::string cmd;

while (std::getline(std::cin, cmd)) {

auto iter = playerTable_.find(cmd);

if (iter != playerTable_.end()) {

auto func = iter->second;

if (func() != 0) {

cout << "Operation error" << endl;

}

if (cmd.find("stop") != std::string::npos && dataSrc_ != nullptr) {

dataSrc_->Reset();

}

continue;

} else if (cmd.find("quit") != std::string::npos || cmd == "q") {

break;

} else {

DoCmd(cmd);

continue;

}

}

}

void PlayerDemo::RegisterTable()

{

(void)playerTable_.emplace("prepare", std::bind(&Player::Prepare, player_));

(void)playerTable_.emplace("prepareasync", std::bind(&Player::PrepareAsync, player_));

(void)playerTable_.emplace("", std::bind(&Player::Play, player_)); // ENTER -> play

(void)playerTable_.emplace("play", std::bind(&Player::Play, player_));

(void)playerTable_.emplace("pause", std::bind(&Player::Pause, player_));

(void)playerTable_.emplace("stop", std::bind(&Player::Stop, player_));

(void)playerTable_.emplace("reset", std::bind(&Player::Reset, player_));

(void)playerTable_.emplace("release", std::bind(&Player::Release, player_));

(void)playerTable_.emplace("isplaying", std::bind(&PlayerDemo::GetPlaying, this));

(void)playerTable_.emplace("isloop", std::bind(&PlayerDemo::GetLooping, this));

(void)playerTable_.emplace("speed", std::bind(&PlayerDemo::GetPlaybackSpeed, this));

}以上的DoNext方法中核心的代码是func()的调用,这个func就是之前注册进Map中字符串对应的方法,在RegisterTable方法中将空字符串""和"play"对绑定为Player::Play方法,默认不输入命令参数时,是播放操作。

五、调用流程

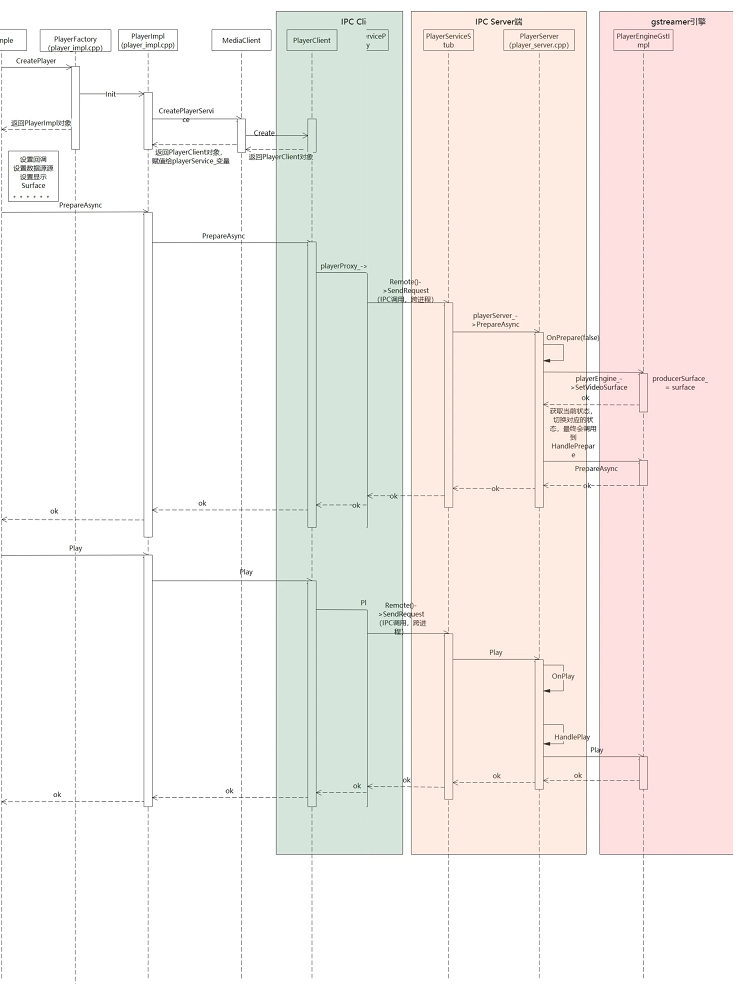

本段落主要针对媒体播放的框架层代码进行分析,所以在流程中涉及到了IPC调用相关的客户端和服务端,代码暂且分析到调用gstreamer引擎。

首先Sample通过PlayerFactory创建了一个播放器实例(PlayerImpl对象),创建过程中调用Init函数。

int32_t PlayerImpl::Init()

{

playerService_ = MediaServiceFactory::GetInstance().CreatePlayerService();

CHECK_AND_RETURN_RET_LOG(playerService_ != nullptr, MSERR_UNKNOWN, "failed to create player service");

return MSERR_OK;

}MediaServiceFactory::GetInstance()返回的是MediaClient对象,所以CreateplayerService函数实际上是调用了MediaClient对应的方法。

std::shared_ptr<IPlayerService> MediaClient::CreatePlayerService()

{

std::lock_guard<std::mutex> lock(mutex_);

if (!IsAlived()) {

MEDIA_LOGE("media service does not exist.");

return nullptr;

}

sptr<IRemoteObject> object = mediaProxy_->GetSubSystemAbility(

IStandardMediaService::MediaSystemAbility::MEDIA_PLAYER, listenerStub_->AsObject());

CHECK_AND_RETURN_RET_LOG(object != nullptr, nullptr, "player proxy object is nullptr.");

sptr<IStandardPlayerService> playerProxy = iface_cast<IStandardPlayerService>(object);

CHECK_AND_RETURN_RET_LOG(playerProxy != nullptr, nullptr, "player proxy is nullptr.");

std::shared_ptr<PlayerClient> player = PlayerClient::Create(playerProxy);

CHECK_AND_RETURN_RET_LOG(player != nullptr, nullptr, "failed to create player client.");

playerClientList_.push_back(player);

return player;

}这个方法中主要通过PlayerClient::Create(playerProxy)方法创建了PlayerClient实例,并且将该实例一层层向上传,最终传给了PlayerImpl的playerService_变量,后续对于播放器的操作,PlayerImpl都是通过调用PlayerClient实例实现的。

int32_t PlayerImpl::Play()

{

CHECK_AND_RETURN_RET_LOG(playerService_ != nullptr, MSERR_INVALID_OPERATION, "player service does not exist..");

MEDIA_LOGW("KPI-TRACE: PlayerImpl Play in");

return playerService_->Play();

}

int32_t PlayerImpl::Prepare()

{

CHECK_AND_RETURN_RET_LOG(playerService_ != nullptr, MSERR_INVALID_OPERATION, "player service does not exist..");

MEDIA_LOGW("KPI-TRACE: PlayerImpl Prepare in");

return playerService_->Prepare();

}

int32_t PlayerImpl::PrepareAsync()

{

CHECK_AND_RETURN_RET_LOG(playerService_ != nullptr, MSERR_INVALID_OPERATION, "player service does not exist..");

MEDIA_LOGW("KPI-TRACE: PlayerImpl PrepareAsync in");

return playerService_->PrepareAsync();

}对于PlayerImpl来说,playerService_指向的PlayerClient就是具体的实现,PlayerClient的实现是通过IPC的远程调用来实现的,具体地是通过IPC中的proxy端向远端服务发起远程调用请求。

我们以播放Play为例:

int32_t PlayerClient::Play()

{

std::lock_guard<std::mutex> lock(mutex_);

CHECK_AND_RETURN_RET_LOG(playerProxy_ != nullptr, MSERR_NO_MEMORY, "player service does not exist..");

return playerProxy_->Play();

}int32_t PlayerServiceProxy::Play()

{

MessageParcel data;

MessageParcel reply;

MessageOption option;

if (!data.WriteInterfaceToken(PlayerServiceProxy::GetDescriptor())) {

MEDIA_LOGE("Failed to write descriptor");

return MSERR_UNKNOWN;

}

int error = Remote()->SendRequest(PLAY, data, reply, option);

if (error != MSERR_OK) {

MEDIA_LOGE("Play failed, error: %{public}d", error);

return error;

}

return reply.ReadInt32();

}proxy端发送调用请求后,对应的Stub端会在PlayerServiceStub::OnRemoteRequest接收到请求,根据请求的参数进行对应的函数调用。播放操作对应的调用Stub的Play方法。

int32_t PlayerServiceStub::Play()

{

MediaTrace Trace("binder::Play");

CHECK_AND_RETURN_RET_LOG(playerServer_ != nullptr, MSERR_NO_MEMORY, "player server is nullptr");

return playerServer_->Play();

}这里最终是通过playerServer_调用Play函数。playerServer_在Stub初始化的时候通过PlayerServer::Create()方式来获取得到。也就是PlayerServer。

std::shared_ptr<IPlayerService> PlayerServer::Create()

{

std::shared_ptr<PlayerServer> server = std::make_shared<PlayerServer>();

CHECK_AND_RETURN_RET_LOG(server != nullptr, nullptr, "failed to new PlayerServer");

(void)server->Init();

return server;

}最终我们的Play调用到了PlayerServer的Play()。在媒体播放的整个过程中会涉及到很多的状态,所以在Play中进行一些状态的判读后调用OnPlay方法。这个方法中发起了一个播放的任务。

int32_t PlayerServer::Play()

{

std::lock_guard<std::mutex> lock(mutex_);

if (lastOpStatus_ == PLAYER_PREPARED || lastOpStatus_ == PLAYER_PLAYBACK_COMPLETE ||

lastOpStatus_ == PLAYER_PAUSED) {

return OnPlay();

} else {

MEDIA_LOGE("Can not Play, currentState is %{public}s", GetStatusDescription(lastOpStatus_).c_str());

return MSERR_INVALID_OPERATION;

}

}

int32_t PlayerServer::OnPlay()

{

auto playingTask = std::make_shared<TaskHandler<void>>([this]() {

MediaTrace::TraceBegin("PlayerServer::Play", FAKE_POINTER(this));

auto currState = std::static_pointer_cast<BaseState>(GetCurrState());

(void)currState->Play();

});

int ret = taskMgr_.LaunchTask(playingTask, PlayerServerTaskType::STATE_CHANGE);

CHECK_AND_RETURN_RET_LOG(ret == MSERR_OK, ret, "Play failed");

lastOpStatus_ = PLAYER_STARTED;

return MSERR_OK;

}在播放任务中调用了PlayerServer::PreparedState::Play()

int32_t PlayerServer::PreparedState::Play()

{

return server_.HandlePlay();

}在Play里面直接调用PlayerServer的HandlePlay方法,HandlePlay方法通过playerEngine_调用到了gstreamer引擎,gstreamer是最终播放的实现。

int32_t PlayerServer::HandlePlay()

{

int32_t ret = playerEngine_->Play();

CHECK_AND_RETURN_RET_LOG(ret == MSERR_OK, MSERR_INVALID_OPERATION, "Engine Play Failed!");

return MSERR_OK;

}本文主要对OpenHarmony 3.2 Beta多媒体子系统的媒体播放进行介绍,首先梳理了整体的播放流程,然后对播放的主要步骤进行了详细地分析。

媒体播放主要分为以下几个层次:

(1) 提供给应用调用的Native接口,这个实际上通过OHOS::Media::PlayerFactory::CreatePlayer()调用返回PlayerImpl实例。

(2) PlayerClient,这部分通过IPC的proxy调用,向远程服务发起调用请求。

(3) PlayerServer,这部分是播放服务的实现端,提供给Client端调用。

(4) Gstreamer,这部分是提供给PlayerServer调用,真正实现媒体播放的功能。

Recommend

About Joyk

Aggregate valuable and interesting links.

Joyk means Joy of geeK