What designers can learn from Apple’s new accessibility features

source link: https://uxdesign.cc/what-designers-can-learn-from-apples-new-accessibility-features-514b8f5bbd33



Connecting the physical and digital world to provide equal experiences and access for all

Although Apple stock is down roughly 23% year to date at the time of writing, the $2 trillion company is now valued at more than Amazon, Alphabet, and Meta combined. Could the reason be connected to their continuous release of exciting new features?

Apple recently announced their iPhone 14 Pro with their newest innovation, the Dynamic Island. But this isn’t the most exciting feature they’ve announced this year.

Apple is investing in accessibility features for a range of their products. With over one billion active iPhone users worldwide, these features have the power to impact numerous individuals who face everyday challenges.

Door Detection for Users Who Are Blind or Low Vision for iPhone and iPad

What challenges does this feature aim to address?

Globally, over 2 billion people have some form of near or distance vision impairment. People with visual impairments can experience difficulty with seemingly simple tasks, such as opening and closing a door.

Door Detection aims to improve the experiences and safety of people who have vision impairment or blindness.

How does this feature improve accessibility?

Door Detection is Apple’s newest accessibility feature, available for iPhone and iPad. This navigation feature helps people detect and locate doors around them, removing barriers that people face in finding the right destination. The device uses sound feedback that increases in pitch as the person gets closer to the door.

The feature also communicates how to open the door (for example, by pushing it, pulling a handle, or turning a knob) and describes any signs or symbols on the door, such as numbers or accessible entrance symbols.
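For designers prototyping similar audio feedback, the "higher pitch as you get closer" behavior can be sketched as a simple mapping from distance to tone frequency. This is only an illustration and not Apple's implementation: the function name `pitch_for_distance`, the 5-meter range, and the 220 to 880 Hz tone range are all assumptions chosen for the example.

```python
def pitch_for_distance(distance_m, max_distance_m=5.0, min_hz=220.0, max_hz=880.0):
    """Map a distance to a tone frequency: the closer the target, the higher the pitch.

    All default values are hypothetical and chosen only for illustration.
    """
    # Clamp the distance so readings outside the range still give a valid tone.
    clamped = min(max(distance_m, 0.0), max_distance_m)

    # 0.0 means "at the edge of the range", 1.0 means "at the target".
    closeness = 1.0 - clamped / max_distance_m

    # Interpolate exponentially, because pitch is perceived roughly
    # logarithmically: equal steps in distance give equal musical intervals.
    return min_hz * (max_hz / min_hz) ** closeness


if __name__ == "__main__":
    for meters in (5.0, 2.5, 1.0, 0.0):
        print(f"{meters:.1f} m -> {pitch_for_distance(meters):.0f} Hz")
```

A mapping like this is worth testing with real users, since the comfortable pitch range and how quickly the tone should change are design decisions rather than fixed rules.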

To learn more about how Door Detection works, visit ‘Detect doors around you using Magnifier on iPhone’.

What can designers learn from this feature?

Accessibility isn’t just about improving the digital experience of an app or website. People face challenges in real life all the time, whether they have a permanent, temporary, or situational impairment.

The experiences that most people take for granted, such as being able to see and open doors, are not experienced in the same way by everyone.

With technology and design, we have endless opportunities to create an accessible real-world experience for people.

Advancing Physical and Motor Accessibility for Apple Watch

What challenges does this feature aim to address?

Did you know that 40% of people with a motor impairment have difficulty using their hands? Conditions that can cause motor impairments include:

- Spinal cord injury

- Cerebral palsy

- Muscular dystrophy

- Multiple sclerosis

- Spina bifida

- Lou Gehrig’s disease

- Arthritis

- Parkinson’s disease

- Essential tremor

Physical and motor disabilities can also be temporary or situational. Perhaps you fell and broke your arm, or you’re carrying a heavy item, resulting in difficulties tapping your smartwatch or using your phone.

Apple’s advancements for the Apple Watch aim to improve ease of access for all users who experience these challenges.

How does this feature improve accessibility?

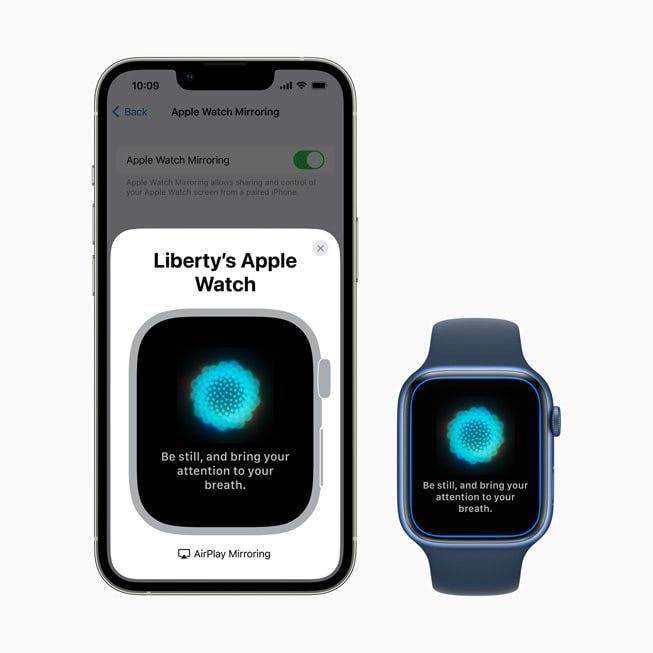

With Apple Watch Mirroring, users can leverage technology in their iPhone as an alternative to tapping their Apple Watch display.

With their paired iPhone’s assistive feature, Voice Control, users can use voice commands, sound actions, or head tracking as a way to interact with their Apple Watch display.

In addition, iPhone’s Switch Control allows users to set up an external switch with Bluetooth. The iPhone’s front-facing camera can also be used as a switch by turning your head either left or right. Switches provide an alternative for people who experience difficulties performing a tap gesture.

To learn more about how Apple Watch Mirroring works, visit ‘Control Apple Watch with your iPhone’.

Apple also introduced Quick Actions, which leverage their AssistiveTouch technology. With AssistiveTouch, users can perform gestures, such as pinch (to move to the next item) and clench (to tap an item), to navigate and select items on their Apple Watch.

If a user receives an incoming phone call or wants to take a photo, the Apple Watch will communicate which gesture they can perform in order to complete the action.

To learn more about how Quick Actions work, visit ‘Use AssistiveTouch on Apple Watch’.

What can designers learn from this feature?

Common gestures, such as tapping a display, can be difficult for some people to perform. We can design and build devices that leverage technology to allow people to interact with them in their preferred way, whether through voice, touch, or other gestures.

Equal access for all users means providing alternative ways to interact with an interface. Designers should recognize that the experience doesn’t have to be limited to just the screen. Offering multiple ways for users to complete the same task can improve the accessibility of the experience.

Live Captions Come to iPhone, iPad, and Mac for Deaf and Hard of Hearing Users

What challenges does this feature aim to address?

Approximately 15% of American adults (37.5 million) aged 18 and over report some trouble hearing.

In fact, one in eight people in the United States (13 percent, or 30 million) aged 12 years or older has hearing loss in both ears, based on standard hearing examinations.

Apple’s new Live Captions for iPhone, iPad, and Mac aims to assist deaf and hard of hearing users when consuming audio content. But this feature can also be used by anyone experiencing situational hearing impairment, such as in loud environments.

How does this feature improve accessibility?

People who are deaf or hard of hearing face challenges with consuming audio, such as phone calls, social media, or streaming media content.

Live Captions transcribes audio into written text in real time, so users can consume the same content as it plays. The font size is also adjustable to suit the user’s preference. This feature improves the universal experience of having a phone call or group call.

When using Live Captions on Mac, users can type a response instead of speaking, as some people may have difficulty speaking clearly because they cannot hear their own voice. The response is then spoken aloud to the other participants in the conversation.

What can designers learn from this feature?

By empathizing and conducting research with people with disabilities, we can start to understand the challenges that they face with everyday activities. Being able to have a proper conversation with someone or consume content shouldn’t come with barriers.

When designing audio experiences, designers should remember to include transcripts and captions for spoken information. Aside from verbal information, describe sounds that provide background or context, such as ‘calming music playing’ or ‘door slams shut’.
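As a rough sketch of what this looks like in practice, the snippet below builds a small caption file in the WebVTT format, with speech written as plain text and non-speech sounds wrapped in square brackets. The helper names (`format_timestamp`, `build_webvtt`), the cue timings, and the sample dialogue are all hypothetical and exist only for this example.

```python
def format_timestamp(seconds):
    """Format a time in seconds as a WebVTT timestamp (HH:MM:SS.mmm)."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{ms:03d}"


def build_webvtt(cues):
    """Build WebVTT text from (start, end, text, is_sound) tuples.

    Non-speech sounds are wrapped in square brackets so readers can tell
    them apart from spoken words.
    """
    lines = ["WEBVTT", ""]
    for start, end, text, is_sound in cues:
        caption = f"[{text}]" if is_sound else text
        lines.append(f"{format_timestamp(start)} --> {format_timestamp(end)}")
        lines.append(caption)
        lines.append("")  # A blank line separates cues.
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical cues: two sounds and one line of speech.
    sample_cues = [
        (0.0, 3.0, "calming music playing", True),
        (3.0, 6.5, "Welcome back. Let's get started.", False),
        (6.5, 8.0, "door slams shut", True),
    ]
    print(build_webvtt(sample_cues))
```

Keeping sound descriptions in the same file as the dialogue means they stay in sync with the audio and are available wherever the captions are shown.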

For more information regarding captions and transcripts, visit W3.org — Captions/Subtitles and Transcripts.

Accessibility is for everyone

Digital accessibility isn’t perfect. Apple has released their fourteenth version of the iPhone, and they are still improving their accessibility features across multiple devices.

Everyone has their own range of abilities, which makes accessibility a spectrum. If we can improve products by even 1%, why not try to design a slightly better experience as we strive towards equal access for all?

Don’t give up on making the world more accessible. Without a doubt, someone out there will appreciate your efforts.
