分布式存储系统之Ceph集群访问接口启用 - Linux-1874

source link: https://www.cnblogs.com/qiuhom-1874/p/16727620.html

Go to the source link to view the article. You can view the picture content, updated content and better typesetting reading experience. If the link is broken, please click the button below to view the snapshot at that time.

分布式存储系统之Ceph集群访问接口启用

前文我们使用ceph-deploy工具简单拉起了ceph底层存储集群RADOS,回顾请参考https://www.cnblogs.com/qiuhom-1874/p/16724473.html;今天我们来聊一聊ceph集群访问接口相关话题;

我们知道RADOS集群是ceph底层存储集群,部署好RADOS集群以后,默认只有RBD(Rados Block Device)接口;但是该接口并不能使用;这是因为在使用rados存储集群存取对象数据时,都是通过存储池找到对应pg,然后pg找到对应的osd,由osd通过librados api接口将数据存储到对应的osd所对应的磁盘设备上;

启用Ceph块设备接口(RBD)

对于RBD接口来说,客户端基于librbd即可将RADOS存储集群用作块设备,不过,用于rbd的存储池需要事先启用rbd功能并进行初始化;

1、创建RBD存储池

[root@ceph-admin ~]# ceph osd pool create rbdpool 64 64pool 'rbdpool' created[root@ceph-admin ~]# ceph osd pool lstestpoolrbdpool[root@ceph-admin ~]# |

2、启用RBD存储池RBD功能

查看ceph osd pool 帮助

Monitor commands: =================osd pool application disable <poolname> <app> {--yes- disables use of an application <app> on pool i-really-mean-it} <poolname>osd pool application enable <poolname> <app> {--yes-i- enable use of an application <app> [cephfs,rbd,rgw] really-mean-it} on pool <poolname> |

提示:ceph osd pool application disable 表示禁用对应存储池上的对应接口功能,enbale 表示启用对应功能;后面的APP值只接受cephfs、rbd和rgw;

[root@ceph-admin ~]# ceph osd pool application enable rbdpool rbdenabled application 'rbd' on pool 'rbdpool'[root@ceph-admin ~]# |

3、初始化RBD存储池

[root@ceph-admin ~]# rbd pool init -p rbdpool[root@ceph-admin ~]# |

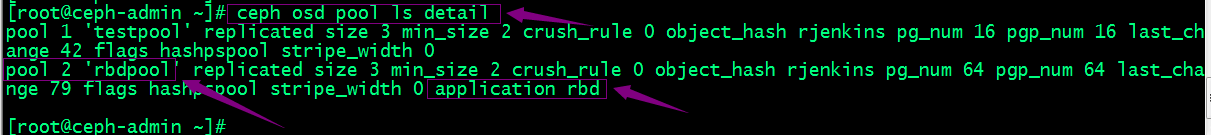

验证rbdpool是否成功初始化,对应rbd应用是否启用?

提示:使用ceph osd pool ls detail命令就能查看存储池详细信息;到此rbd存储池就初始化完成;但是,rbd存储池不能直接用于块设备,而是需要事先在其中按需创建映像(image),并把映像文件作为块设备使用;

4、在rbd存储池里创建image

[root@ceph-admin ~]# rbd create --size 5G rbdpool/rbd-img01[root@ceph-admin ~]# rbd ls rbdpoolrbd-img01[root@ceph-admin ~]# |

查看创建image的信息

[root@ceph-admin ~]# rbd info rbdpool/rbd-img01rbd image 'rbd-img01':size 5 GiB in 1280 objectsorder 22 (4 MiB objects)id: d4466b8b4567block_name_prefix: rbd_data.d4466b8b4567format: 2features: layering, exclusive-lock, object-map, fast-diff, deep-flattenop_features: flags: create_timestamp: Sun Sep 25 11:25:01 2022[root@ceph-admin ~]# |

提示:可以看到对应image支持分层克隆,排他锁,对象映射等等特性;到此一个5G大小的磁盘image就创建好了;客户端可以基于内核发现机制将对应image识别成一块磁盘设备进行使用;

启用radosgw接口

RGW并非必须的接口,仅在需要用到与S3和Swift兼容的RESTful接口时才需要部署RGW实例;radosgw接口依赖ceph-rgw进程对外提供服务;所以我们要启用radosgw接口,就需要在rados集群上运行ceph-rgw进程;

1、部署ceph-radosgw

[root@ceph-admin ~]# ceph-deploy rgw --helpusage: ceph-deploy rgw [-h] {create} ...Ceph RGW daemon managementpositional arguments:{create}create Create an RGW instanceoptional arguments:-h, --help show this help message and exit[root@ceph-admin ~]# |

提示:ceph-deploy rgw命令就只有一个create子命令用于创建RGW实例;

[root@ceph-admin ~]# su - cephadm Last login: Sat Sep 24 23:16:00 CST 2022 on pts/0[cephadm@ceph-admin ~]$ cd ceph-cluster/[cephadm@ceph-admin ceph-cluster]$ ceph-deploy rgw create ceph-mon01[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadm/.cephdeploy.conf[ceph_deploy.cli][INFO ] Invoked (2.0.1): /bin/ceph-deploy rgw create ceph-mon01[ceph_deploy.cli][INFO ] ceph-deploy options:[ceph_deploy.cli][INFO ] username : None[ceph_deploy.cli][INFO ] verbose : False[ceph_deploy.cli][INFO ] rgw : [('ceph-mon01', 'rgw.ceph-mon01')][ceph_deploy.cli][INFO ] overwrite_conf : False[ceph_deploy.cli][INFO ] subcommand : create[ceph_deploy.cli][INFO ] quiet : False[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7fa658caff80>[ceph_deploy.cli][INFO ] cluster : ceph[ceph_deploy.cli][INFO ] func : <function rgw at 0x7fa6592f5140>[ceph_deploy.cli][INFO ] ceph_conf : None[ceph_deploy.cli][INFO ] default_release : False[ceph_deploy.rgw][DEBUG ] Deploying rgw, cluster ceph hosts ceph-mon01:rgw.ceph-mon01[ceph-mon01][DEBUG ] connection detected need for sudo[ceph-mon01][DEBUG ] connected to host: ceph-mon01 [ceph-mon01][DEBUG ] detect platform information from remote host[ceph-mon01][DEBUG ] detect machine type[ceph_deploy.rgw][INFO ] Distro info: CentOS Linux 7.9.2009 Core[ceph_deploy.rgw][DEBUG ] remote host will use systemd[ceph_deploy.rgw][DEBUG ] deploying rgw bootstrap to ceph-mon01[ceph-mon01][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf[ceph-mon01][WARNIN] rgw keyring does not exist yet, creating one[ceph-mon01][DEBUG ] create a keyring file[ceph-mon01][DEBUG ] create path recursively if it doesn't exist[ceph-mon01][INFO ] Running command: sudo ceph --cluster ceph --name client.bootstrap-rgw --keyring /var/lib/ceph/bootstrap-rgw/ceph.keyring auth get-or-create client.rgw.ceph-mon01 osd allow rwx mon allow rw -o /var/lib/ceph/radosgw/ceph-rgw.ceph-mon01/keyring[ceph-mon01][WARNIN] Created symlink from /etc/systemd/system/ceph-radosgw.target.wants/ceph-radosgw@rgw.ceph-mon01.service to /usr/lib/systemd/system/ceph-radosgw@.service.[ceph-mon01][INFO ] Running command: sudo systemctl enable ceph.target[ceph_deploy.rgw][INFO ] The Ceph Object Gateway (RGW) is now running on host ceph-mon01 and default port 7480[cephadm@ceph-admin ceph-cluster]$ |

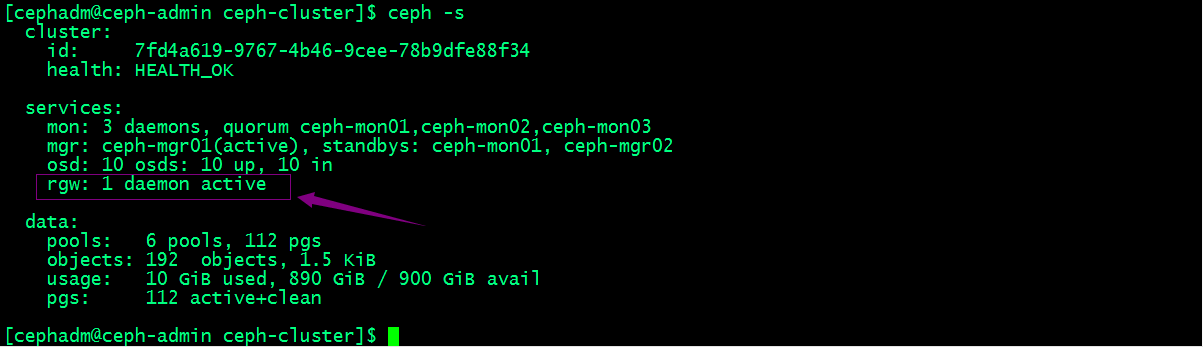

查看集群状态

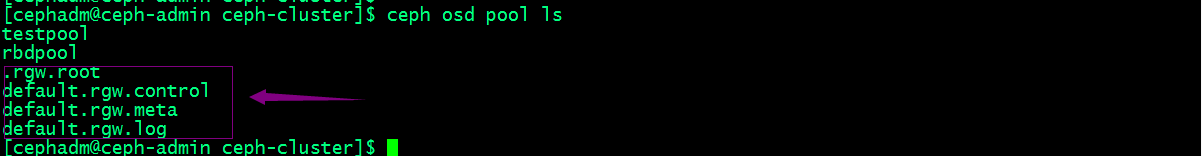

提示:可以看到现在集群有一个rgw进程处于活跃状态;这里需要说明一点,radosgw部署好以后,对应它会自动创建自己所需要的存储池,如下所示;

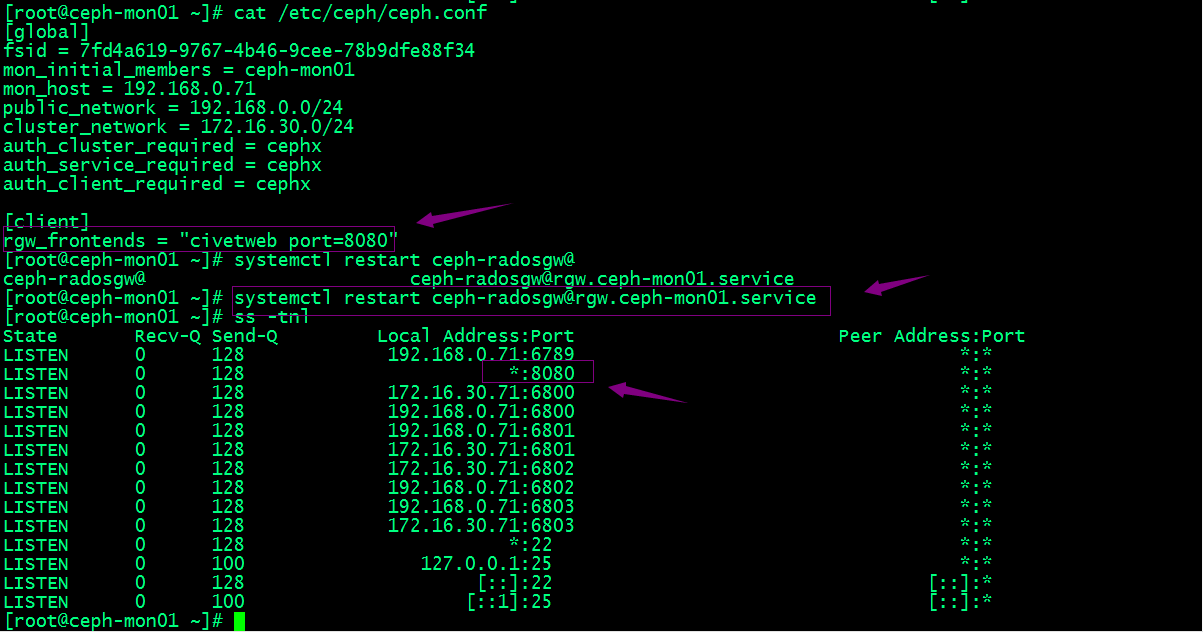

提示:默认情况下,它会创建以上4个存储池;这里说一下默认情况下radosgw会监听tcp协议的7480端口,以web服务形式提供服务;如果我们需要更改对应监听端口,可以在配置文件/etc/ceph/ceph.conf中配置

[client]rgw_frontends = "civetweb port=8080" |

提示:该配置操作需要在对应运行rgw的主机上修改配置,然后重启对应进程即可;

提示:可以看到现在mon01上没有监听7480的端口,而是8080的端口;

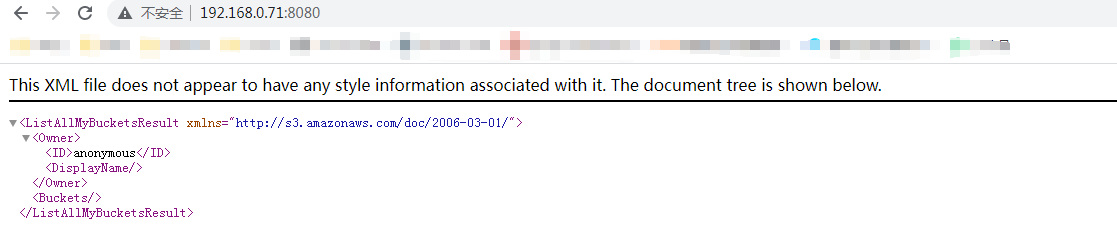

用浏览器访问8080端口

提示:访问对应主机的8080端口这里给我们返回一个xml的文档的界面;RGW提供的是RESTful接口,客户端通过http与其进行交互,完成数据的增删改查等管理操作。有上述界面说明我们rgw服务部署并运行成功;到此radosgw接口就启用Ok了;

启用文件系统接口(cephfs)

在说文件系统时,我们首先会想到元数据和数据的存放问题;CephFS和rgw一样它也需要依赖一个进程,cephfs依赖mds进程来帮助它来维护文件的元数据信息;MDS是MetaData Server的缩写,主要作用就是处理元数据信息;它和别的元数据服务器不一样,MDS只负责路由元数据信息,它自己并不存储元数据信息;文件的元数据信息是存放在RADOS集群之上;所以我们要使用cephfs需要创建两个存储池,一个用于存放cephfs的元数据一个用于存放文件的数据;那么对于mds来说,它为了能够让用户读取数据更快更高效,通常它会把一些热区数据缓存在自己本地,以便更快的告诉客户端,对应数据存放问位置;由于MDS将元数据信息存放在rados集群之上,使得MDS就变成了无状态,无状态意味着高可用是以冗余的方式;至于怎么高可用,后续要再聊;

1、部署ceph-mds

[cephadm@ceph-admin ceph-cluster]$ ceph-deploy mds create ceph-mon02[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadm/.cephdeploy.conf[ceph_deploy.cli][INFO ] Invoked (2.0.1): /bin/ceph-deploy mds create ceph-mon02[ceph_deploy.cli][INFO ] ceph-deploy options:[ceph_deploy.cli][INFO ] username : None[ceph_deploy.cli][INFO ] verbose : False[ceph_deploy.cli][INFO ] overwrite_conf : False[ceph_deploy.cli][INFO ] subcommand : create[ceph_deploy.cli][INFO ] quiet : False[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7ff12df9e758>[ceph_deploy.cli][INFO ] cluster : ceph[ceph_deploy.cli][INFO ] func : <function mds at 0x7ff12e1f7050>[ceph_deploy.cli][INFO ] ceph_conf : None[ceph_deploy.cli][INFO ] mds : [('ceph-mon02', 'ceph-mon02')][ceph_deploy.cli][INFO ] default_release : False[ceph_deploy.mds][DEBUG ] Deploying mds, cluster ceph hosts ceph-mon02:ceph-mon02[ceph-mon02][DEBUG ] connection detected need for sudo[ceph-mon02][DEBUG ] connected to host: ceph-mon02 [ceph-mon02][DEBUG ] detect platform information from remote host[ceph-mon02][DEBUG ] detect machine type[ceph_deploy.mds][INFO ] Distro info: CentOS Linux 7.9.2009 Core[ceph_deploy.mds][DEBUG ] remote host will use systemd[ceph_deploy.mds][DEBUG ] deploying mds bootstrap to ceph-mon02[ceph-mon02][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf[ceph-mon02][WARNIN] mds keyring does not exist yet, creating one[ceph-mon02][DEBUG ] create a keyring file[ceph-mon02][DEBUG ] create path if it doesn't exist[ceph-mon02][INFO ] Running command: sudo ceph --cluster ceph --name client.bootstrap-mds --keyring /var/lib/ceph/bootstrap-mds/ceph.keyring auth get-or-create mds.ceph-mon02 osd allow rwx mds allow mon allow profile mds -o /var/lib/ceph/mds/ceph-ceph-mon02/keyring[ceph-mon02][INFO ] Running command: sudo systemctl enable ceph-mds@ceph-mon02[ceph-mon02][WARNIN] Created symlink from /etc/systemd/system/ceph-mds.target.wants/ceph-mds@ceph-mon02.service to /usr/lib/systemd/system/ceph-mds@.service.[ceph-mon02][INFO ] Running command: sudo systemctl start ceph-mds@ceph-mon02[ceph-mon02][INFO ] Running command: sudo systemctl enable ceph.target[cephadm@ceph-admin ceph-cluster]$ |

查看mds状态

[cephadm@ceph-admin ceph-cluster]$ ceph mds stat, 1 up:standby[cephadm@ceph-admin ceph-cluster]$ |

提示:可以看到现在集群有一个mds启动着,并以standby的方式运行;这是因为我们只是部署了ceph-mds进程,对应在rados集群上并没有绑定存储池;所以现在mds还不能正常提供服务;

2、创建存储池

[cephadm@ceph-admin ceph-cluster]$ ceph osd pool create cephfs-metadatpool 64 64pool 'cephfs-metadatpool' created[cephadm@ceph-admin ceph-cluster]$ceph osd pool create cephfs-datapool 128 128pool 'cephfs-datapool' created[cephadm@ceph-admin ceph-cluster]$ ceph osd pool lstestpoolrbdpool.rgw.rootdefault.rgw.controldefault.rgw.metadefault.rgw.logcephfs-metadatpoolcephfs-datapool[cephadm@ceph-admin ceph-cluster]$ |

3、初始化存储池

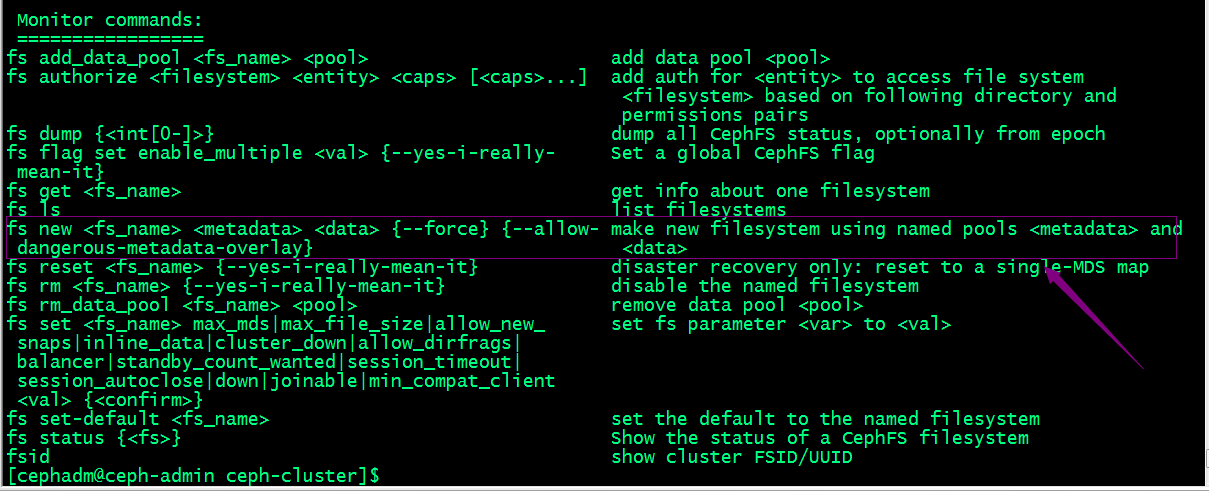

查看ceph fs帮助

提示:我们可以使用ceph fs new命令来指定cephfs的元数据池和数据池;

[cephadm@ceph-admin ceph-cluster]$ ceph fs new cephfs cephfs-metadatpool cephfs-datapoolnew fs with metadata pool 7 and data pool 8[cephadm@ceph-admin ceph-cluster]$ |

再次查看mds状态

[cephadm@ceph-admin ceph-cluster]$ ceph mds statcephfs-1/1/1 up {0=ceph-mon02=up:active}[cephadm@ceph-admin ceph-cluster]$ |

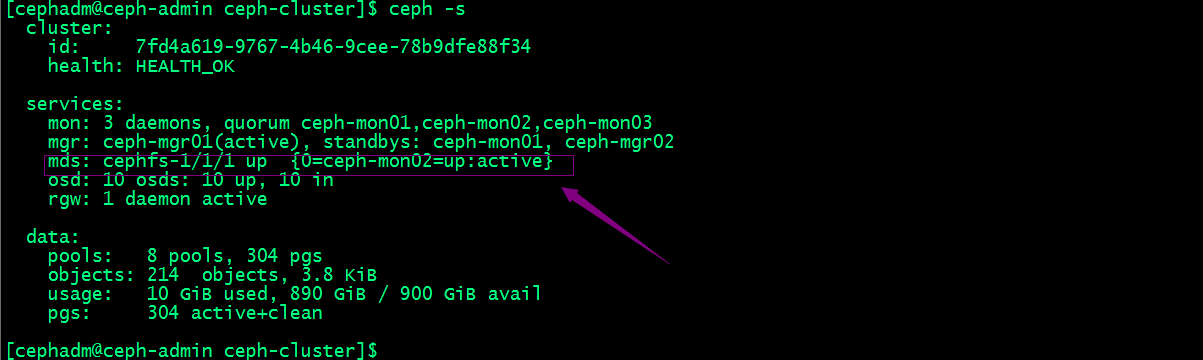

查看集群状态

提示:可以看到现在mds已经运行起来,并处于active状态;

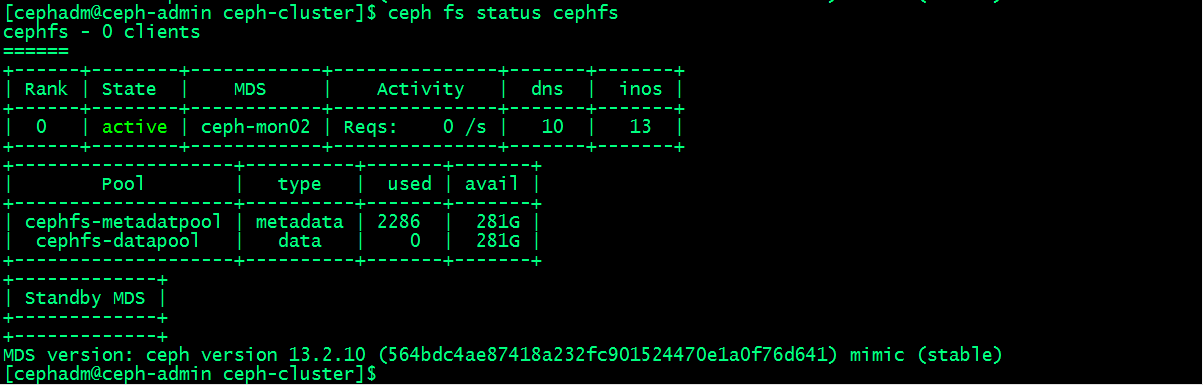

查看ceph 文件系统状态

提示:可以看到对应文件系统是活跃状态;随后,客户端通过内核中的cephfs文件系统接口即可挂载使用cephfs文件系统,或者通过FUSE接口与文件系统进行交互使用;

Recommend

About Joyk

Aggregate valuable and interesting links.

Joyk means Joy of geeK