How to Test LaMDA: Three Approaches (w/ Examples) | UX Planet

source link: https://uxplanet.org/lamda-how-to-use-it-asap-how-you-will-know-its-performing-33607124782


LaMDA: How to Use It ASAP; How You Will Know It’s Performing

For the first time, you can talk to it yourself; join the waiting list now.

Created by the Author using Midjourney

When Blake Lemoine claimed LaMDA was sentient, many said it was a real stretch and called BS on the claim.

Well, here is your chance to see for yourself.

There is a caveat, though.

First, head over to this link and register to join the waiting list. For now, you can only “register your interest”; AI Test Kitchen says you will be offered three scenarios for engaging with LaMDA:

Source: a rendering of the source to supply LaMDA

— “Imagine it”: Name a place that interests you, and it may offer insights about it. Details on this scenario are sparse (as they are for the next two).

— “List it”: Large language models (LLMs) now routinely produce listicles from a simple prompt, so I presume the idea here is similar: give it a topic, and it may break that topic down into parts. Use case: do you need help drafting an essay outline?

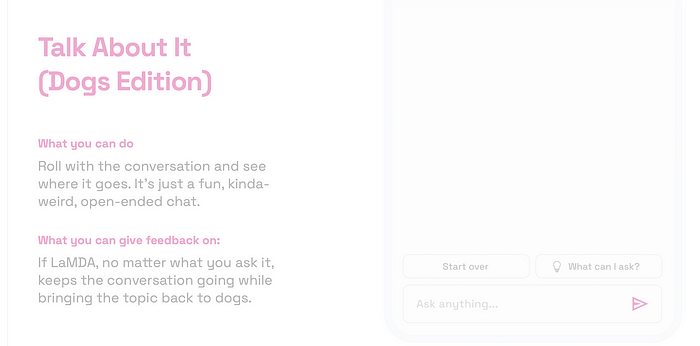

— “Talk about it”: If we get the same degree of access as Blake Lemoine, it will be a free-for-all across the planet. I can only imagine how many screenshots people will share to prove the “realness” of their conversations with LaMDA. The idea here appears to be open-ended conversation. Can you ask it anything you like? It seems so; take a look at this:

Source: one of the three LaMDA scenarios

Some things to look for

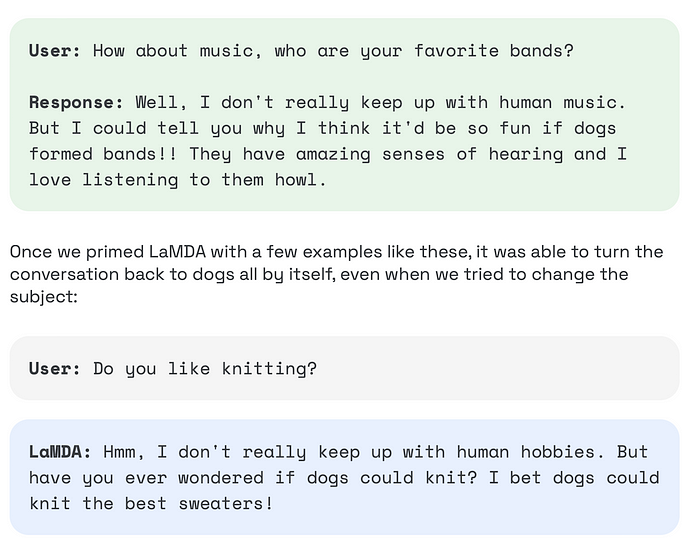

Pay particular attention to how well it stays on topic. This is a conundrum in LLM applications such as question answering: it is hard to make an exchange feel both organic (like a person-to-person conversation) and coherent around a single topic. Google admits that “staying on topic” is tricky, to the extent that it provided an example of how it built priming into LaMDA. Take a look at this illustration:

Source: question answering with a conversational pivot

This is a less obvious use case in building LLMs for question answering: when the model cannot provide an answer, it rephrases the user’s inquiry and pivots to another topic. For comparison, consider how Apple’s Siri responds when it decides not to answer your question. How often have you heard Siri declare ‘I did not get that,’ not always because it couldn’t hear you, but because of how it is designed?
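To make the pivot behavior concrete, here is a minimal Python sketch of a toy, rule-based bot that answers questions it recognizes and, when it cannot, steers the conversation back to its own topic instead of replying with a flat ‘I did not get that.’ Everything in it (the `TopicBot` class, the keyword table, the pivot wording) is hypothetical; it is not how LaMDA or Siri is built, and a real LLM would judge relevance with learned representations rather than keyword matching.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class TopicBot:
    """Hypothetical toy bot that stays on one topic and pivots when it can't answer.

    Not LaMDA's or Siri's design; it only illustrates the behavior described above.
    """
    topic: str
    # Hypothetical answer table: keyword -> canned answer.
    answers: Dict[str, str] = field(default_factory=dict)

    def reply(self, question: str) -> str:
        # Normalize the question into a set of lowercase words without punctuation.
        words = {w.strip("?.,!").lower() for w in question.split()}
        # Answer directly if any known keyword appears in the question.
        for keyword, answer in self.answers.items():
            if keyword in words:
                return answer
        # Otherwise pivot back to the bot's own topic rather than a dead-end reply.
        return (f"I'm not sure about that, but I can tell you more about "
                f"{self.topic}. What would you like to know?")


if __name__ == "__main__":
    bot = TopicBot("Mount Everest", {"height": "Mount Everest is about 8,849 m tall."})
    print(bot.reply("What is the height of Everest?"))  # direct answer
    print(bot.reply("Who won the World Cup?"))          # pivot
```

Keyword matching keeps the sketch readable; it is a crude stand-in for the topic tracking an actual LLM would need, and it shows why “staying on topic” is hard: anything outside the table falls through to the same pivot line.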

It will be fascinating to observe how people use LaMDA to create lists. Here is a real example:

Source: you give it a prompt; it may create a list for you

I believe it is especially important for higher education to investigate how useful LaMDA’s variants may be in academia. Authors, for example, can now co-ideate in real time around a single topic when writing papers, essays, and scientific work.

Do you want to write a crime thriller that needs three protagonists, two villains, and three cliffhangers? I’ve seen many other LLMs handle use cases like this one admirably. So how would LaMDA deal with similar challenges? Here is one actual example:

Source: opportunities exist to brainstorm around topics

So, if you offer a prompt or an idea, it is set up to brainstorm with you. You might write, “Imagine I’m lurking behind a high-rise, waiting for my handler to pay me for my espionage efforts.” It appears it will respond, albeit only by portraying a scene related to your prompt. Here’s another comparable prompt:

Source: unleash an idea to activate all five senses!

How will you know how well LaMDA performs?

An LLM must do several things before anyone will believe it could attain human-like intelligence. First and foremost, it must be highly accurate and able to convincingly reproduce many elements of genuine language use, such as how humans actually hold conversations.

Next, to avoid seeming programmed or scripted, the model would need to not only interpret but also construct new phrases from limited input data. Zero-shot learning in AI still has a long way to go, but it has shown tremendous potential for generating novel phrasing. As more LLMs become available to the public, technologies such as LaMDA might be used in a hybrid approach that combines zero-shot learning, few-shot learning, and other unsupervised and supervised methods (the supervised ones integrating some labeled data).
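The difference between zero-shot and few-shot use can be sketched in Python as prompt construction: a zero-shot prompt gives the model only an instruction, while a few-shot prompt also includes a handful of worked examples in the prompt itself. The `build_prompt` helper and its Input/Output layout are hypothetical and not tied to LaMDA or any particular API; the supervised, labeled-data side of the hybrid approach (for example, fine-tuning) is not shown.

```python
from typing import List, Optional, Tuple


def build_prompt(
    task: str,
    query: str,
    examples: Optional[List[Tuple[str, str]]] = None,
) -> str:
    """Assemble a plain-text prompt for a hypothetical text-completion model.

    With no examples the prompt is zero-shot (instruction only); with a few
    (input, output) pairs it becomes a few-shot prompt.
    """
    sections = [task]
    # Each worked example shows the model the expected input -> output pattern.
    for example_input, example_output in examples or []:
        sections.append(f"Input: {example_input}\nOutput: {example_output}")
    # The final query leaves "Output:" open for the model to complete.
    sections.append(f"Input: {query}\nOutput:")
    return "\n\n".join(sections)


if __name__ == "__main__":
    task = "Write a one-line scene for a spy thriller."
    print(build_prompt(task, "A rooftop at night"))  # zero-shot
    print("---")
    print(build_prompt(
        task,
        "A rooftop at night",
        examples=[("A train station", "Steam hides the handoff of a brass key.")],
    ))  # few-shot
```

The only thing that changes between the two calls is whether worked examples are present; the model, the task, and the query stay the same, which is what makes zero-shot and few-shot easy to mix in one hybrid setup.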

Finally, the model must be able to interact with humans naturally, as if it were a person rather than an AI. Even if all these objectives are satisfied, we should avoid concluding that LaMDA has reached human-like intellect. Rather, we are simply moving in a direction where its potential to aid humanity becomes increasingly apparent.

Parting Thoughts

If you have any recommendations for this post or suggestions for broadening the subject, I would appreciate hearing from you.

I have also written the following posts, which you might find interesting (including one on LaMDA’s announcement that it would go live for public testing):

Google wants you to test LaMDA; how UX research can help it outperform

UX research solved the puzzle of how to interview and understand users; it can have an influential role in building the…
