XR With Oracle, Ep 4: Health, Digital Twins, More - DZone IoT

source link: https://dzone.com/articles/develop-xr-with-oracle-ep-4-health-digital-twins-o


Develop XR With Oracle, Ep 4: Health, Digital Twins, Observability, and Metaverse

In this fourth article of the series, we focus on XR applications of health, digital twins, and IoT observability, and their related use in the metaverse.

This is the fourth piece in a series on developing XR applications and experiences using Oracle. Whereas the previous article focused on computer vision AI and ML, this one focuses on health, digital twins, and observability, and their related use in the metaverse. The first three articles in the series are summarized in the next section.

As with the previous posts, I will again specifically show applications developed with Oracle Database and cloud technologies, HoloLens 2, the Mixed Reality Toolkit, and the Unity platform.

Throughout this blog, I will reference the corresponding demo video.

Extended Reality (XR), Metaverse, and HoloLens

For an overview of XR and HoloLens, I refer the reader to the first article in this series. That post was based on a data-driven microservices workshop and demonstrated a number of aspects that will be present in the metaverse, such as online shopping by interacting with 3D models of food and other products, and 3D/spatial real-world maps, as well as backend DevOps (Kubernetes and OpenTelemetry tracing).

The second article of the series was based on a number of graph workshops and demonstrated visualization, creation, and manipulation of models, notebooks, layouts, and highlights for property graph analysis used in social graphs, neural networks, and the financial sector (e.g., money laundering detection).

The third article in the series used computer vision AI to detect and tag objects in images of a room and speak their labels aloud, and to extract text from the same room, in order to provide contextual information based on the environment.

In all of these articles, as well as in this one, the subject matter can be shared and actively collaborated on remotely, even in real time. These types of abilities are key to the metaverse concept and will be expanded upon in future pieces.

This blog will not go into digital twins in depth, but will instead focus on the XR enablement of these topics and the use of Oracle technology to this end.

Digital Twins

There are a number of nuanced definitions available, but in general, "A digital twin is a virtual representation of an object or system that spans its lifecycle, is updated from real-time data, and uses simulation, machine learning, and reasoning to help decision-making." A digital double refers specifically to a representation of a human being. Real-time data is often collected from sensors, so IoT architectures are often used in the process, though numerous other types of sources and techniques may be used.

Quite a bit of material exists on digital twins, so I will be brief on this topic, but their potential for innovation, collaboration, and immersion in XR and the metaverse is vast and very exciting, with examples in nearly every sector. Here, I will only touch on health (as a representative of the broader health, sports, and healthcare space) and the home (as a representative of the broader architecture, engineering, and construction (AEC) space).

Health and Healthcare

Health and healthcare are among the areas where XR innovation has made, and continues to make, some of its greatest advances. Telemedicine and telehealth providers such as Amwell already offer comprehensive digital healthcare solutions for health systems, health plans, employers, and physicians, and have made huge strides, particularly out of the necessity brought about by the pandemic. XR will certainly continue to enhance these services, providing access in locations, conditions, and areas of specialization that were previously out of reach. In addition, XR has been used for training and education in the health sector for some time, and the HoloLens in particular is now used in live surgeries. Combined with computer vision AI, predictive analysis, and similar techniques, XR should bring significant improvements in the efficiency and quality of treatment in the near future. I will show an example of this in an upcoming blog in this series, and with Oracle's acquisition of Cerner, you can expect to see further synergy and advancements in this area.

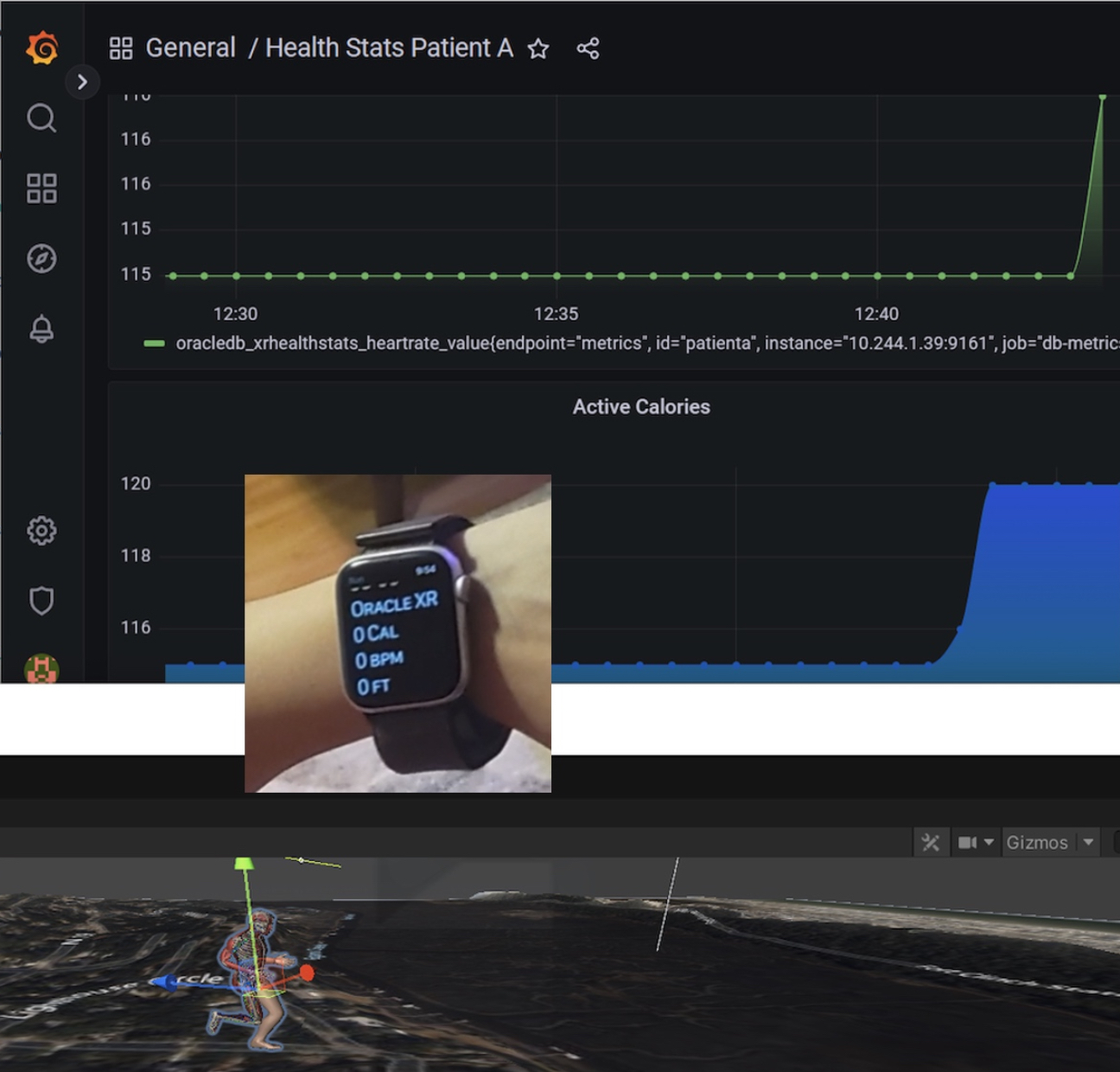

Though closely related, the example in this blog focuses on individual health itself, using a digital twin/double of a person exercising. Numerous kinds of technology and wearables, for fitness and otherwise, are well suited to this purpose. I have focused on the Apple Watch and its HealthKit API for my development, as it provides an astonishing amount of information, including activity (e.g., activeEnergyBurned, swimmingStrokeCount, vo2Max), body measurements (e.g., bodyFatPercentage), reproductive health (e.g., basalBodyTemperature), hearing (e.g., environmentalAudioExposure), vital signs (e.g., heartRate, bloodPressure), lab and test results (e.g., bloodGlucose), nutrition (e.g., dietaryCholesterol), alcohol consumption, mobility, and UV exposure. This is in addition to other information the watch and its apps collect and provide, such as weather and GPS data.

The demonstration shown in the video involves a runner wearing a watch with an application that continuously sends selected metrics to the Oracle database. The metrics to send can be configured either on the watch or in the database itself, making the application very dynamic. In this case, the GeoJSON/GPS location, heart rate, cadence, calories burned, elevation, and temperature are sent. This can be done in various ways: generally using MQTT (e.g., a bridge/event mesh from Mosquitto to the Oracle AQ/TEQ messaging system can be used to take advantage of its functionality) or, as in this case, REST.
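The article does not show the watch-side code, which would be Swift/HealthKit on the watch. As a language-neutral illustration only, the minimal Python sketch below assumes a hypothetical REST endpoint (for example, an Oracle REST Data Services handler) that accepts one JSON document per sample. The URL, the payload shape, and the `build_payload`/`build_request`/`send` helpers are all assumptions. It filters a raw sample down to the configured metric selection and wraps the GPS fix as a GeoJSON `Point`.

```python
import json
import urllib.request
from typing import Any, Dict, Iterable, Mapping

# Hypothetical REST endpoint (e.g., an ORDS handler); the article does not publish one.
ENDPOINT = "https://example.com/ords/fitness/run/samples/"

# Hypothetical metric selection; in the article this is configurable on the watch or in the database.
DEFAULT_SELECTION = ("heartRate", "cadence", "activeEnergyBurned", "elevation", "temperature")


def build_payload(sample: Mapping[str, Any],
                  selection: Iterable[str] = DEFAULT_SELECTION) -> Dict[str, Any]:
    """Keep only the selected metrics and attach the GPS fix as a GeoJSON Point."""
    return {
        "timestamp": sample["timestamp"],
        # GeoJSON orders coordinates as [longitude, latitude].
        "location": {"type": "Point", "coordinates": [sample["lon"], sample["lat"]]},
        "metrics": {name: sample[name] for name in selection if name in sample},
    }


def build_request(payload: Mapping[str, Any], endpoint: str = ENDPOINT) -> urllib.request.Request:
    """Build (without sending) a JSON POST request for one sample."""
    return urllib.request.Request(
        endpoint,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(payload: Mapping[str, Any], endpoint: str = ENDPOINT, timeout: float = 5.0) -> int:
    """POST one sample and return the HTTP status code (urlopen raises on 4xx/5xx)."""
    with urllib.request.urlopen(build_request(payload, endpoint), timeout=timeout) as resp:
        return resp.status
```

Keeping the selection as data rather than hard-coding it is what lets the metric set change without redeploying the app; the selection could just as well be fetched from the database at startup.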

The HoloLens in turn receives this information from the database and plots a human figure standing, walking, or running (depending on the change in location over time) at the corresponding GPS location on a map. The direction and rotation of the human animation are determined by the history of GeoJSON coordinates received, the lighting by the weather data received, and so on. The human model is divided along the mid-sagittal plane, with the skeletal, muscular, and other systems exposed for labeling and tracking in case specific biometrics in these areas need to be labeled, measured, and analyzed.
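The article does not specify how the HoloLens app chooses the standing/walking/running animation or its heading. One plausible approach, sketched below in Python for illustration (the Unity app itself would be C#), is to compute the great-circle (haversine) distance and initial bearing between the two most recent GPS fixes, then bucket the resulting speed. The speed thresholds are hypothetical values, not the demo's.

```python
import math
from typing import Sequence, Tuple

EARTH_RADIUS_M = 6_371_000.0

# Hypothetical speed thresholds in m/s; the article does not give its cut-offs.
WALK_MIN_MPS = 0.3
RUN_MIN_MPS = 2.5

Fix = Tuple[float, float, float]  # (unix_seconds, latitude_deg, longitude_deg)


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two lat/lon points."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def bearing_deg(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Initial bearing from point 1 to point 2, clockwise from north, in [0, 360)."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dlmb = math.radians(lon2 - lon1)
    x = math.sin(dlmb) * math.cos(p2)
    y = math.cos(p1) * math.sin(p2) - math.sin(p1) * math.cos(p2) * math.cos(dlmb)
    return math.degrees(math.atan2(x, y)) % 360.0


def classify(fixes: Sequence[Fix]) -> Tuple[str, float]:
    """Return (animation state, heading in degrees) from the two most recent fixes."""
    (t0, lat0, lon0), (t1, lat1, lon1) = fixes[-2], fixes[-1]
    dt = t1 - t0
    if dt <= 0:
        raise ValueError("fixes must be in increasing time order")
    speed = haversine_m(lat0, lon0, lat1, lon1) / dt
    heading = bearing_deg(lat0, lon0, lat1, lon1)
    if speed < WALK_MIN_MPS:
        return "standing", heading
    if speed < RUN_MIN_MPS:
        return "walking", heading
    return "running", heading
```

In practice a renderer would probably smooth over several fixes and keep the previous heading while the runner is standing, since the bearing between two (nearly) identical points is not meaningful.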

Simultaneously, a unified observability exporter retrieves this information from the database and exposes the metrics, for example, in Prometheus format for display and monitoring in a Grafana console. You can learn more about Oracle's unified observability framework.
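Oracle's exporter generates this output itself; the Python sketch below only illustrates what the Prometheus text exposition format looks like for the run metrics, so it is clear what Grafana ends up querying. The metric names, the `runner_` prefix, the `runner` label, and the `to_prometheus` helper are hypothetical.

```python
import re
from typing import Mapping, Union

# Valid Prometheus metric and label names, per the exposition format rules.
_METRIC_NAME = re.compile(r"[a-zA-Z_:][a-zA-Z0-9_:]*")
_LABEL_NAME = re.compile(r"[a-zA-Z_][a-zA-Z0-9_]*")


def escape_label_value(value: str) -> str:
    """Escape backslash, double quote, and newline, as the text format requires."""
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def to_prometheus(metrics: Mapping[str, Union[int, float]],
                  labels: Mapping[str, str],
                  prefix: str = "runner_") -> str:
    """Render the latest readings as gauges in Prometheus text exposition format."""
    for label in labels:
        if not _LABEL_NAME.fullmatch(label):
            raise ValueError(f"invalid label name: {label!r}")
    label_part = ",".join(
        f'{k}="{escape_label_value(v)}"' for k, v in sorted(labels.items())
    )
    # Omit the braces entirely when there are no labels.
    selector = f"{{{label_part}}}" if label_part else ""

    lines = []
    for name, value in sorted(metrics.items()):
        full_name = prefix + name
        if not _METRIC_NAME.fullmatch(full_name):
            raise ValueError(f"invalid metric name: {full_name!r}")
        lines.append(f"# HELP {full_name} Latest {name} reading from the watch.")
        lines.append(f"# TYPE {full_name} gauge")
        lines.append(f"{full_name}{selector} {value}")
    # The format expects every line, including the last, to end with a newline.
    return "\n".join(lines) + "\n"
```

Gauges fit here because heart rate, cadence, and similar readings go up and down; a cumulative value such as total calories burned during the run could instead be exposed as a counter.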

Oracle is referred to as a converged database because it supports all data types and formats (as well as workloads, messaging, etc.) in a single database. This is exemplified here by the fact that various stats can be stored in relational format, the GPS locations in (Geo)JSON format, and MapMyRun (TCX) or Strava (GPX) data in XML format. This allows compatibility, cross-data-type queries, operations, and so on. In this way, the activity/run can be played back and analyzed at a later time. There are a number of breakthroughs occurring in fitness and sports (health and entertainment) made possible by XR that are beyond the scope of this article.
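In the converged database the XML and JSON can be queried in place, but the mapping between the two formats is easy to see outside the database as well. The Python sketch below, an illustration rather than the article's implementation, converts GPX 1.1 track points into a GeoJSON `LineString` Feature suitable for replaying a run. It handles GPX only (TCX uses a different schema), and the `times`/`elevations` property names and the `gpx_to_geojson` helper are my own choices.

```python
import xml.etree.ElementTree as ET
from typing import Any, Dict

# GPX 1.1 namespace; GPX 1.0 files use a different one and are not handled here.
GPX_NS = {"gpx": "http://www.topografix.com/GPX/1/1"}


def gpx_to_geojson(gpx_xml: str) -> Dict[str, Any]:
    """Convert all GPX 1.1 track points (segments concatenated) into one LineString Feature."""
    root = ET.fromstring(gpx_xml)
    coordinates, times, elevations = [], [], []
    for pt in root.iterfind(".//gpx:trkpt", GPX_NS):
        # GeoJSON positions are [longitude, latitude].
        coordinates.append([float(pt.get("lon")), float(pt.get("lat"))])
        ele = pt.find("gpx:ele", GPX_NS)
        elevations.append(float(ele.text) if ele is not None and ele.text else None)
        time = pt.find("gpx:time", GPX_NS)
        times.append(time.text if time is not None else None)
    return {
        "type": "Feature",
        "geometry": {"type": "LineString", "coordinates": coordinates},
        # Per-point timestamps and elevations, index-aligned with the coordinates.
        "properties": {"times": times, "elevations": elevations},
    }
```

Keeping timestamps index-aligned with the coordinates is what makes playback possible: a viewer can step through the positions at the recorded pace.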

Home, Architectural, Engineering, and Construction

Likely the first and most prominent industry to adopt XR is architecture, engineering, and construction (AEC) and, to a related extent, the home. Uses range from arranging virtual furniture in a house to orchestrating extremely complex construction sites and processes, such as those supported by Oracle's Aconex.

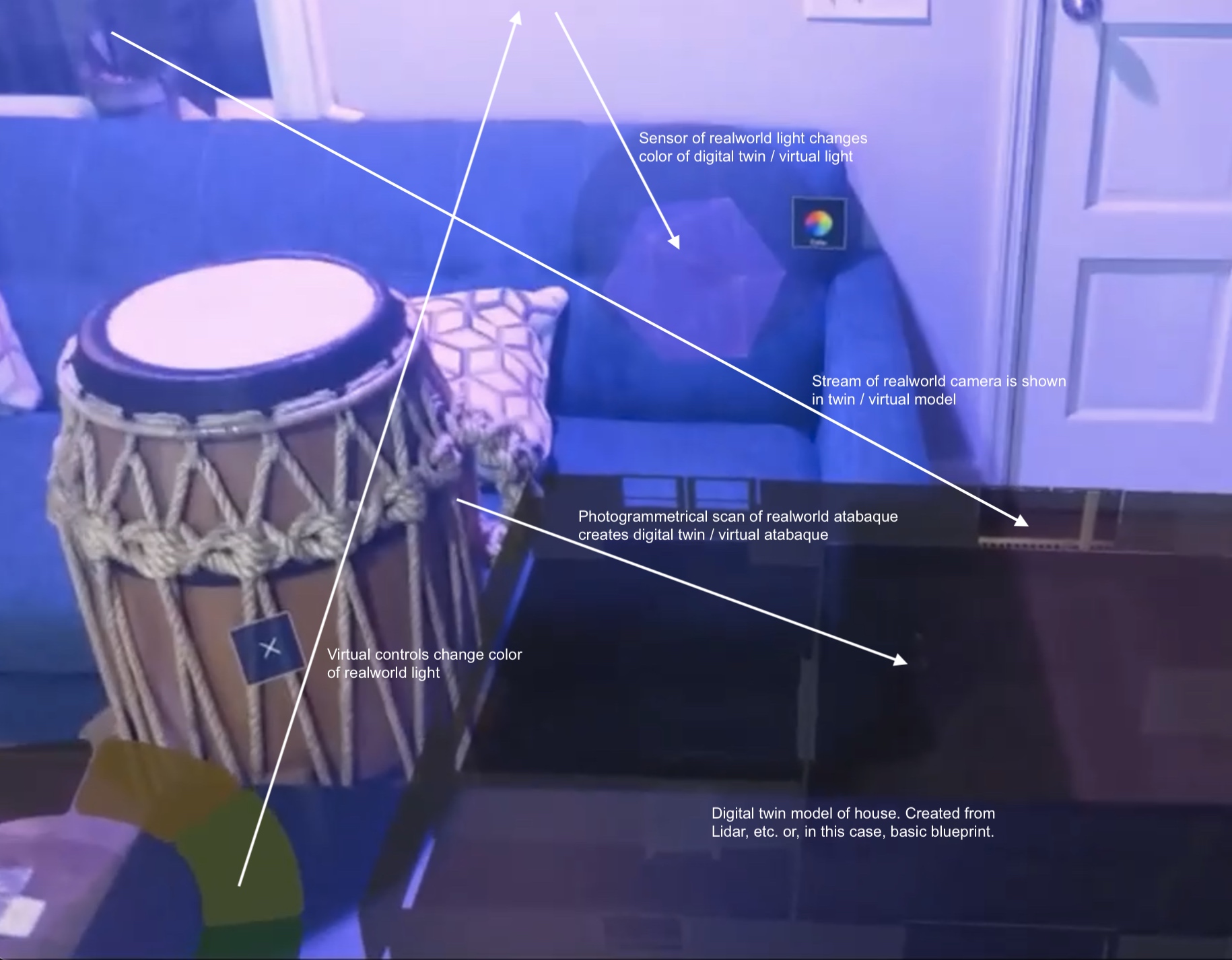

The demonstration shown in the video is a simple representation of some key concepts. The HoloLens presents a 3D visualization of the house. Such a visualization can be generated quickly with a number of existing technologies, such as the LiDAR support on newer phones. In this case, however, I simply use a model of the house, though I do include a photogrammetric scan of a drum from the house in the model. (This scan was done statically; however, dynamic, real-time photogrammetric, LiDAR, and similar scans are becoming more feasible for digital twin generation, and a future blog will demonstrate this.)

The real-world light in the house continuously sends its color status (over MQTT), which the HoloLens receives and uses to set the color of its virtual/digital twin light. Conversely, when a color button on the virtual/digital twin in the HoloLens app is pressed, the color command is sent (this time over REST, though it could be MQTT as well) and the real-world light changes to the selected color. Because these changes are stored in the database, the digital twin of the house can be monitored and controlled from anywhere with an Internet connection.
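The article does not show the synchronization code. A minimal, transport-agnostic Python sketch of the twin side follows; the JSON payload shape (`{"color": ...}`), the command format, and the `LightTwin` class are hypothetical. `on_status_message` could be called, for example, from a paho-mqtt `on_message` callback with `msg.payload`, and `send_command` could wrap an HTTP POST.

```python
import json
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class LightTwin:
    """Virtual counterpart of the real-world light (hypothetical schema)."""
    color: Optional[str] = None
    history: List[str] = field(default_factory=list)

    def on_status_message(self, payload: bytes) -> None:
        """Apply a status report from the physical light (e.g., an MQTT payload)."""
        status = json.loads(payload)
        color = status["color"]
        # Record only actual changes so repeated status reports do not flood the history.
        if color != self.color:
            self.color = color
            self.history.append(color)

    def press_color_button(self, color: str,
                           send_command: Callable[[Dict[str, str]], None]) -> None:
        """Send a color command to the physical light (e.g., via REST)."""
        # The twin's own color is NOT changed here; it updates only when the
        # physical light reports its new status.
        send_command({"command": "set_color", "color": color})
```

The twin deliberately does not change color when the button is pressed; it waits for the physical light to report its new status, so the virtual light always reflects the device's actual state rather than the requested one.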

In addition, a security camera facing the outside of the house streams the video it captures. One possible way to do this is to stream a Raspberry Pi camera to Oracle Cloud, or to use video streaming with Oracle's new OCI Digital Media Services. The HoloLens receives the stream and displays it in the digital twin model, so the user can view the security cameras as part of a realistic, live representation of the house from any location.

The HoloLens application can also overlay the camera feed on the real-world interior wall corresponding to the camera's location on the exterior wall, creating a see-through effect.

Finally, digital twins can represent actual objects and locations, processes or concepts, or both. In this example, we also replace a window with a virtual one that looks out, via live stream, on a Venice canal, a watering hole near Nairobi, and so on: yet another classic example of mixed reality.

Other Sectors

Other sectors developing digital twins and digital doubles in the XR space include fintech, along with examples such as the following:

- Advanced digital assistants

- Sensors in cars for insurance

- Geographic information systems for mining and other industries

- Holograms and photogrammetry for various meetings and conferences

- Advanced sensors and analytics, such as Oracle's F1 collaboration with Red Bull Racing

Additional Thoughts

I have given some ideas and examples of how digital twins/doubles and XR can be used together and facilitated by Oracle. I look forward to putting out more blogs on this topic and other areas of XR with Oracle Cloud and Database soon.

Please see my other publications for more information on XR and Oracle cloud and converged database, as well as various topics around microservices, observability, transaction processing, etc. Also, please feel free to contact me with any questions or suggestions for new blogs and videos as I am very open to suggestions. Thanks for reading and watching.
