Machine Learning Foundations Homework 3

source link: https://lasttillend.github.io/machine/learning/2020/04/15/MLF-HW3.html

Go to the source link to view the article. You can view the picture content, updated content and better typesetting reading experience. If the link is broken, please click the button below to view the snapshot at that time.

Machine Learning Foundations Homework 3

Apr 15, 2020 • 唐涵

Website of Machine Learning Foundations by Hsuan-Tien Lin: https://www.csie.ntu.edu.tw/~htlin/mooc/.

Question 1

Question 1-2 are about linear regression.

Consider a noisy target y=w⊤fx+ϵ, where x∈Rd (with the added coordinate x0=1, y∈R, wf is an unknown vector, and ϵ is a noise term with zero mean and σ2 variance. Assume ϵ is independent of x and all other ϵ’s. If linear regression is carried out using a training data set D={(x1,y1),…,(xN,yN)}, and outputs the parameter vector wlin, it can be shown that the expected in-sample error Ein with respect to D is given by:

ED[Ein (wlin )]=σ2(1−d+1N)

For σ=0.1 and d=8, which among the following choices is the smallest number of example N that will result in an expected Ein greater than 0.008?

Sol:

sigma = 0.1

d = 8

expected_E_in = lambda N: sigma**2 * (1 - (d + 1) / N)

for N in [500, 25, 100, 1000, 10]:

print(f"{N:>4}: {expected_E_in(N)}")

Output:

500: 0.009820000000000002

25: 0.006400000000000001

100: 0.009100000000000002

1000: 0.009910000000000002

10: 0.001

So the answer is N=100.

Question 2

Recall that we have introduced the hat matrix H=X(X⊤X)−1X⊤ in class, where X∈RN×(d+1) for N examples and d features. Assume X⊤X is invertible, which statements of H are true?

- (a) H is always positive semi-definite.

- (b) H is always invertible.

- (c) some eigenvalues of H are possibly bigger than 1

- (d) d+1 eigenvalues of H are exactly 1.

- (e) H1126=H

Sol:

- (a): true, since the eigenvalues of H are all non-negative.

- (b): false, since the rank of H is d+1, which may be less than N.

- (c): false.

- (d): true.

- (e): true, since H is an orthogonal projection matrix, project twice does not have much more effect.

Question 3

Question 3-5 are about error and SGD

Which of the following is an upper bound of [sign(wTx)≠y] for y∈{−1,+1}?

- err(w)=(−ywTx)

- err(w)=(max(0,1−ywTx))2

- err(w)=max(0,−ywTx)

- err(w)=θ(−ywTx)

Sol:

If we draw the picture, then we can find that only the second error function err(w)=(max(0,1−ywTx))2 is an upper bound for the 0-1 error function.

Question 4

Which of the following is not a everywhere-differentiable function of w?

- err(w)=(max(0,1−ywTx))2

- err(w)=max(0,−ywTx)

- err(w)=θ(−ywTx)

- err(w)=(−ywTx)

Sol:

The error function err(w)=max(0,−ywTx) is not differentiable at w where wTx=0.

Question 5

When using SGD on the following error functions and ‘ignoring’ some singular points that are not differentiable, which of the following error function results in PLA?

- err(w)=(max(0,1−ywTx))2

- err(w)=max(0,−ywTx)

- err(w)=θ(−ywTx)

- err(w)=(−ywTx)

Sol: The answer is err(w)=max(0,−ywTx).

At wTx≠0, this error function is differentiable.

If y=−1, then

∇err(w)={0,wTx<0x,wTx>0.

If y=1, then

∇err(w)={0,wTx>0−x,wTx<0.

We can combine the two cases together as

∇err(w)={0,ywTx>0−yx,ywTx<0.

While in PLA, we update w only at a misclassified point, i.e., ywTx<0, and the update value is exactly yx, which is the opposite direction of its gradient.

Question 6

For Question 6-10, you will play with gradient descent algorithm and variants. Consider a function

E(u,v)=eu+e2v+euv+u2−2uv+2v2−3u−2v.

What is the gradient ∇E(u,v) around (u,v)=(0,0)?

Sol:

The gradient of E(u,v) is

∇E(u,v)=[∂E∂u∂E∂v]=[eu+veuv+2u−2v−32e2v+ueuv−2u+4v−2].

So ∇E(0,0)=[−20].

Question 7

In class, we have taught that the update rule of the gradient descent algorithm is

(ut+1,vt+1)=(ut,vt)−η∇E(ut,vt).

Please start from (u0,v0)=(0,0), and fix η=0.01, what is E(u5,v5) after five updates?

Sol:

import numpy as np

E = lambda u, v: np.exp(u) + np.exp(2 * v) + np.exp(u * v) + \

u ** 2 - 2 * u * v + 2 * v ** 2 - 3 * u - 2 * v

grad_E = lambda u, v: np.array([np.exp(u) + v * np.exp(u * v) + 2 * u - 2 * v - 3,

2 * np.exp(2 * v) + u * np.exp(u * v) - 2 * u + 4 * v - 2])

eta = 0.01

ut, vt = 0, 0

for i in range(5):

ut, vt = (ut, vt) - eta * grad_E(ut, vt)

# After 5 updates

E(ut, vt)

Output:

2.8250003566832635

So E(u5,v5) is about 2.825.

Question 8

Continue from Question 7, if we approximate the E(u+Δu,v+Δv) by ˆE2(Δu,Δv), where ˆE2 is the second-order Taylor’s expansion of E around (u,v). Suppose

ˆE2(Δu,Δv)=buu(Δu)2+bvv(Δv)2+buv(Δu)(Δv)+buΔu+bvΔv+b

What are the values of (buu,bvv,buv,bu,bv,b) around (u,v)=(0,0)?

Sol: The Hessian matrix of E is

HE(u,v)=[∂2E∂u2∂2E∂u∂v∂2E∂v∂u∂2E∂v2]=[eu+v2euv+2euv+uveuv−2euv+uveuv−24e2v+u2euv+4]

HE(0,0)=[3−1−18].

If we denote h=(Δu,Δv)T, then the second-order Taylor’s expansion of E around (0,0) can also be written as

ˆE2(Δu,Δv)=ˆE2(h)=E(0,0)+∇E(0,0)⋅h+12hTHE(0,0)h.

So buu=3/2,bvv=4,buv=−1. By Question 6, bu=−2,bv=0, and b=E(0,0)=3.

Question 9

Continue from Question 8 and denote the Hessian matrix be ∇2E(u,v), and assume that the Hessian matrix is positive definite. What is the optimal (Δu,Δv) to minimize ˆE2(Δu,Δv)? The direction is called the Newton Direction.

Sol:

The second-order Taylor’s expansion of E around (u,v) can be written as

ˆE2(Δu,Δv)=ˆE2(h)=E(u,v)+∇E(u,v)⋅h+12hTHE(u,v)h.

Take derivative with respect to Δu and Δv to get

∂^E2∂Δu=∂E∂u+∂2E∂u2Δu+∂2E∂u∂vΔv∂^E2∂Δv=∂E∂v+∂2E∂v2Δv+∂2E∂u∂vΔu.

Set them to equal 0, we obtain

h=(Δu,Δv)T=−(∇2E(u,v))−1∇E(u,v).

Question 10

Using the Newton direction (without η) to update, please start from (u0,v0)=(0,0), what is E(u5,v5) after five updates?

from numpy.linalg import inv

# Define Hessian matrix

H_E = lambda u, v: np.array([[np.exp(u) + v ** 2 * np.exp(u * v) + 2, np.exp(u * v) + u * v * np.exp(u * v) - 2],

[np.exp(u * v) + u * v * np.exp(u * v) - 2, 4 * np.exp(2 * v) + u ** 2 * np.exp(u * v) + 4]])

ut, vt = 0, 0

for i in range(5):

newton_dir = -inv(H_E(ut, vt)) @ grad_E(ut, vt)

ut, vt = (ut, vt) + newton_dir

# After 5 updates

E(ut, vt)

Output:

2.360823345643139

As we can see, the value of E drops more quickly using Newton’s method than gradient descent in this example. But this gain is at the cost of computing Hessian matrix.

Question 11

For Question 11-12, you will play with feature transforms

Consider six inputs x1=(1,1),x2=(1,−1),x3=(−1,−1),x4=(−1,1),x5=(0,0),x6=(1,0). What is the biggest subset of those input vectors that can be shattered by the union of quadratic, linear, or constant hypothesis of x?

Sol:

Consider the general quadratic transform of x=(x1,x2) by

f(x)=(1,x1,x2,x21,x22,x1x2).

In our case, f takes inputs x1,⋯,x6 to z1=f(x1),⋯,z2=f(x6), which is shown as the following matrix:

Z=[11111111−111−11−1−11111−1111−1100000110100]

This matrix Z has full rank, which means for any y=[y1⋮y5+1], the equation

Z˜w=y

has a solution ˜w=Z−1y.

Thus, these six points z1⋯z6 can be shattered by lines in the transformed space. However, lines in the transformed space correspond to exactly quadratic curves in the original space, and hence, x1,⋯,x6 can be shattered by the union of quadratic, linear, or constant hypothesis of x.

Question 12

Assume that a transformer peeks the data and decides the following transform Φ “intelligently” from the data of size N. The transform maps x∈Rd to z∈RN , where

(Φ(x))n=zn=[x=xn].

Consider a learning algorithm that performs linear classification after the feature transform. That is, the algorithm effectively works on an HΦ that includes all possible Φ. What is dvc(HΦ) (i.e., the maximum number of points that can be shattered by the process above)?

Sol:

If we apply Φ to the training data x1,⋯,xN, then

Φ(xn)=en∀n=1,⋯N,

where en is the n-th standard basis vector in RN.

If we put these N vectors in the matrix Z row by row, then we can find that Z is exactly the identity matrix IN×N. So by the same argument as Question 11, the training data x1,⋯,xN can be shattered by HΦ. This is true for all N, so the VC dimension of this hypothesis set is ∞.

Question 13

For Question 13-15, you will play with linear regression and feature transforms. Consider the target function:

f(x1,x2)=sign(x21+x22−0.6)

Generate a training set of N=1000 points on X=[−1,1]×[−1,1] with uniform probablity of picking each x∈X. Generate simulated noise by flipping the sign of the output in a random 10% subset of the generated training set.

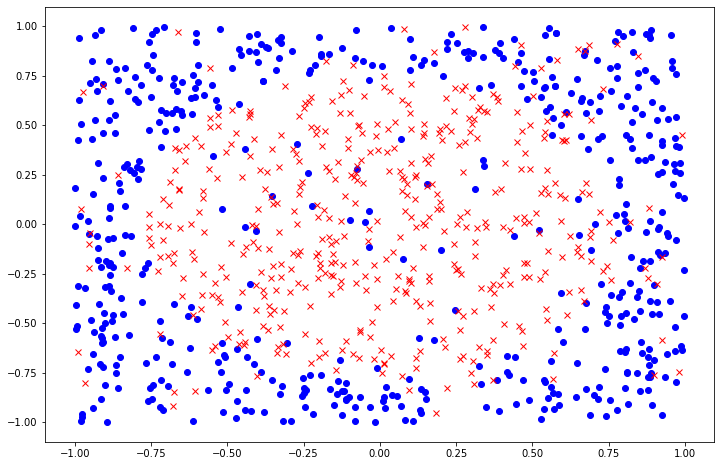

Carry out linear regression without transformation, i.e., with feature vector: (1,x1,x2), to find the weight w, and use wlin directly for classification. What is the closest value to the classification (0/1) in-sample error (Ein)? Run the experiment 1000 times and take the average Ein in order to reduce variation in your results.

import random

from matplotlib import pyplot as plt

from numpy.linalg import pinv

# Target function

# def f(x1, x2):

# def sign(x):

# return 1 if x > 0 else -1

# return sign(x1 ** 2 + x2 ** 2 - 0.6)

def generate_data(N):

"""

Args:

N: int

Returns:

X: ndarray of shape = [N, d]

y: ndarray of shape = [N, ]

"""

X = np.random.uniform(low=-1.0, high=1.0, size=(N, 2))

y = np.zeros(N)

y = np.sign(X[:, 0] ** 2 + X[:, 1] ** 2 - 0.6)

# # Below does not take advantage of the numpy vectorized operation and is slow

# i = 0

# for x in X:

# y[i] = f(*x)

# i += 1

# Add noise to y

p = 0.1

offset = int(N * p)

random_index = np.random.permutation(N)

noisy_y_index = random_index[:offset]

y[noisy_y_index] *= -1

return X, y

N = 1000

X, y = generate_data(N)

fig, ax = plt.subplots(1, 1, figsize=(12, 8))

ax.plot(X[y==1][:, 0], X[y==1][:, 1], color='blue', marker='o', linestyle='None', label='+1')

ax.plot(X[y==-1][:, 0], X[y==-1][:, 1], color='red', marker='x', linestyle='None', label='-1');

def lin(X, y):

"""

Args:

X: ndarray of shape = [N, d]

y: ndarray of shape = [N, ]

Returns:

w: ndarray of shape = [d, ]

"""

N = X.shape[0]

X = np.c_[np.ones((N, 1)), X]

w = pinv(X.T @ X) @ X.T @ y

return w

def calc_err(X, y, w):

"""

Args:

X: ndarray of shape = [N, d]

y: ndarray of shape = [N, ]

w: ndarray of shape = [d, ]

Returns:

err: float

"""

N = X.shape[0]

X = np.c_[np.ones((N, 1)), X]

y_hat = np.sign(X @ w)

err = np.mean(y_hat != y)

return err

w_lin = lin(X, y)

calc_err(X, y, w_lin)

Output:

0.467

def simulation_lin_no_trans(N):

err_list = []

for _ in range(1000):

X, y = generate_data(N)

w_lin = lin(X, y)

err = calc_err(X, y, w_lin)

err_list.append(err)

avg_err = np.mean(err_list)

return avg_err

simulation_lin_no_trans(N=1000)

Output:

0.508207

Question 14 Now, transform the training data into the following nonlinear feature vector:

(1,x1,x2,x1x2,x21,x22)

Find the vector ˜w that corresponds to the solution of linear regression, and take it for classification.

Which of the following hypotheses is closest to the one you find using linear regression on the transformed input? Closest here means agrees the most with your hypothesis (has the most probability of agreeing on a randomly selected point).

g(x1,x2)=sign(−1−1.5x1+0.08x2+0.13x1x2+0.05x21+1.5x22)g(x1,x2)=sign(−1−1.5x1+0.08x2+0.13x1x2+0.05x21+0.05x22)g(x1,x2)=sign(−1−0.05x1+0.08x2+0.13x1x2+1.5x21+1.5x22)g(x1,x2)=sign(−1−0.05x1+0.08x2+0.13x1x2+1.5x21+15x22)g(x1,x2)=sign(−1−0.05x1+0.08x2+0.13x1x2+15x21+1.5x22)

# def quadratic(x1, x2):

# return np.array([x1, x2, x1 * x2, x1 ** 2, x2 ** 2])

def transform_X(X):

"""

Args:

X: ndarray of shape = [N, d]

Returns:

Z: ndarray of shape = [N, d]

"""

N = X.shape[0]

d = 5

Z = np.zeros((N, d))

Z[:, :2] = X[:]

Z[:, 2] = X[:, 0] * X[:, 1]

Z[:, 3] = X[:, 0] ** 2

Z[:, 4] = X[:, 1] ** 2

# Below does not take advantage of the numpy vectorized operation and is slow

# i = 0

# for x in X:

# Z[i] = quadratic(*x)

# i += 1

return Z

X, y = generate_data(N)

Z = transform_X(X)

w_lin = lin(Z, y)

w_lin

Output:

array([-0.9914943 , -0.07906799, 0.1171203 , -0.02982396, 1.53896951, 1.62686342])

So the closest hypothesis in the given hypotheses is

g(x1,x2)=sign(−1−0.05x1+0.08x2+0.13x1x2+1.5x21+1.5x22).

We can also run the simulation with the transformed data to see the classification error drops dramatically:

def simulation_lin_with_trans(N):

err_list = []

for _ in range(1000):

X, y = generate_data(N)

Z = transform_X(X)

w_lin = lin(Z, y)

err = calc_err(Z, y, w_lin)

err_list.append(err)

avg_err = np.mean(err_list)

return avg_err

simulation_lin_with_trans(N=1000)

Output:

0.124313

Question 15

What is the closest value to the classification out-of-sample error Eout of your hypothesis? Estimate it by generating a new set of 1000 points and adding noise as before. Average over 1000 runs to reduce the variation in your results.

def test_lin(N):

err_list = []

for _ in range(1000):

X, y = generate_data(N)

Z = transform_X(X)

w_lin = lin(Z, y)

X_test, y_test = generate_data(N)

Z_test = transform_X(X_test)

# Calculate E_out

err = calc_err(Z_test, y_test, w_lin)

err_list.append(err)

avg_err = np.mean(err_list)

return avg_err

test_lin(N=1000)

Output:

0.126341

Question 16

For Question 16-17, you will derive an algorithm for multinomial (multiclass) logistic regression. For a K-class classification problem, we will denote the output space Y={1,2,⋯,K}. The hypotheses considered by MLR are indexed by a list of weight vectors (w1,⋯,wK), each weight vector of length d+1. Each list represents a hypothesis

hy(x)=exp(wTyx)∑Ki=1exp(wTix)

that can be used to approximate the target distribution P(y∣x). MLR then seeks for the maximum likelihood solution over all such hypotheses.

For general K, derive an Ein(w1,⋯,wK) like page 11 of Lecture 10 slides by minimizing the negative log likelihood.

Sol:

The likelihood function of multinomial logistic hypothesis is

L(w1,⋯,wK)=N∏n=1f(xn,yn)=N∏n=1P(yn|xn)P(xn)=N∏n=1hyn(xn)P(xn).

So the log likelihood is

logL(w1,⋯,wK)=N∑n=1[loghyn(xn)+logP(xn)],

and maximizing log likelihood is equivalent to minimize its negative, i.e.,

maxw1,⋯,wKlogL⟺minw1,⋯,wK−N∑n=1[loghyn(xn)+logP(xn)].

Since the second term logP(xn) does not depend on w1,⋯,wK, we can simply ignore it. Then

maxw1,⋯,wKlogL⟺minw1,⋯,wK−N∑n=1loghyn(xn)⟺minw1,⋯,wKN∑n=1[log(K∑i=1exp(wTixn))−wTynxn]⟺minw1,⋯,wK1NN∑n=1[log(K∑i=1exp(wTixn))−wTynxn]

Therefore, the error function we want to minimize is

Ein(w1,⋯,wK)=1NN∑n=1[log(K∑i=1exp(wTixn))−wTynxn]

Question 17

For the Ein derived above, its gradient ∇Ein can be represented by (∂Ein ∂w1,∂Ein ∂w2,⋯,∂Ein ∂wK), write down ∂Ein ∂wk.

Sol:

Take the derivative with respect to wk, we can obtain

∂Ein ∂wk=1NN∑n=1[exp(wTkxn)xn∑Ki=1exp(wTixn)−[yn=k]xn]=1NN∑n=1[(hk(xn)−[yn=k])xn].

Question 18

For Question 18-20, you will play with logistic regression.

Please use the following set hw3_train.dat for training and the following set hw3_test.dat for testing.

Implement the fixed learning rate gradient descent algorithm for logistic regression. Run the algorithm with η=0.001 and T=2000. What is Eout(g) from your algorithm, evaluated using the 0/1 error on the test set?

def sigmoid(s):

return 1 / (1 + np.exp(-s))

def calc_grad_err(X, y, w):

"""

Args:

X: ndarray of shape = [N, d + 1]

y: ndarray of shape = [N, 1]

w: ndarray of shape = [d + 1, 1]

returns:

grad: ndarray of shape = [d + 1, 1]

"""

N = X.shape[0]

grad = (X * (-y)).T @ sigmoid(X @ w * (-y)) / N

return grad

def lr(X, y, eta):

"""

Args:

X: ndarray of shape = [N, d]

y: ndarray of shape = [N, ]

eta: float

Returns:

wt: ndarray of shape = [d + 1, ]

"""

N, d = X.shape

X = np.c_[np.ones((N, 1)), X]

# wt = np.random.normal(size=(d+1, 1)) # initialize using normal distribution requires more steps to converge in this example

wt = np.zeros((d + 1, 1)) # initialize all parameters to zero converges more quickly

y = y.reshape(-1, 1) # reshape y to a two dimensional ndarray to make `calc_grad_err` function easy to implement

T = 2000

for t in range(T):

grad_t = calc_grad_err(X, y, wt)

wt -= eta * grad_t

wt = wt.flatten()

return wt

def calc_zero_one_err(X, y, w):

"""

Args:

X: ndarray of shape = [N, d]

y: ndarray of shape = [N, ]

w: ndarray of shape = [d + 1, ]

Returns:

avg_err: float

"""

N = X.shape[0]

X = np.c_[np.ones((N, 1)), X]

y_hat = np.sign(X @ w) # h(x) > 1/2 <==> w^T x > 0

avg_err = np.mean(y_hat != y)

return avg_err

import pandas as pd

# Read in training and test data

df_train = pd.read_csv('hw3_train.dat', header=None, sep='\s+')

X_train_df, y_train_df = df_train.iloc[:, :-1], df_train.iloc[:, -1]

X_train, y_train = X_train_df.to_numpy(), y_train_df.to_numpy()

df_test = pd.read_csv('hw3_test.dat', header=None, sep='\s+')

X_test_df, y_test_df = df_test.iloc[:, :-1], df_test.iloc[:, -1]

X_test, y_test = X_test_df.to_numpy(), y_test_df.to_numpy()

eta = 0.001

w_lr = lr(X_train, y_train, eta)

# E_in

calc_zero_one_err(X_train, y_train, w_lr)

Output:

0.466

# E_out

calc_zero_one_err(X_test, y_test, w_lr)

Output:

0.475

Question 19

Implement the fixed learning rate gradient descent algorithm for logistic regression. Run the algorithm with η=0.01 and T=2000, what is Eout(g) from your algorithm, evaluated using the 0/1 error on the test set?

eta = 0.01

w_lr = lr(X_train, y_train, eta)

# E_in

calc_zero_one_err(X_train, y_train, w_lr)

Output:

0.197

# E_out

calc_zero_one_err(X_test, y_test, w_lr)

Output:

0.22

Question 20

Implement the fixed learning rate stochastic gradient descent algorithm for logistic regression. Instead of randomly choosing n in each iteration, please simply pick the example with the cyclic order n=1,2,⋯,N,1,2,⋯

Run the algorithm with η=0.001 and T=2000, what is Eout(g) from your algorithm, evaluated using the 0/1 error on the test set?

def lr_sgd(X, y, eta):

"""

Args:

X: ndarray of shape = [N, d]

y: ndarray of shape = [N, ]

eta: float

Returns:

wt: ndarray of shape = [d + 1, ]

"""

N, d = X.shape

X = np.c_[np.ones((N, 1)), X]

wt = np.zeros((d + 1, 1))

y = y.reshape(-1, 1)

T = 2000

n = 0

for t in range(T):

xt, yt = X[n].reshape(-1, 1).T, y[n]

grad_t = calc_grad_err(xt, yt, wt)

wt -= eta * grad_t

n = n + 1 if n != N - 1 else 0

wt = wt.flatten()

return wt

eta = 0.001

w_lr = lr_sgd(X_train, y_train, eta)

# E_in

calc_zero_one_err(X_train, y_train, w_lr)

Output:

0.464

# E_out

calc_zero_one_err(X_test, y_test, w_lr)

Output:

0.473

So for this data set, we need more iterations for SGD to converge.

Reference

Recommend

About Joyk

Aggregate valuable and interesting links.

Joyk means Joy of geeK