Reduce data privacy issues with machine learning models

source link: https://developer.ibm.com/blogs/data-minimization-for-machine-learning/

IBM Developer Blog

Use the IBM AI Privacy toolkit to minimize collected data

As the use of AI becomes increasingly pervasive in business, industries are discovering that they can use machine learning models to make the most of existing data to improve business outcomes. However, machine learning models have a distinct drawback: traditionally, they need huge amounts of data to make accurate forecasts. That data often includes extensive personal and private information, the use of which is governed by modern data privacy regulations such as the EU’s General Data Protection Regulation (GDPR). GDPR sets a specific requirement called data minimization: organizations may collect only the personal data that is necessary for the purpose at hand.

Data privacy regulations are not the only consideration when using AI in business: collecting personal data for machine learning analysis also represents a big security and privacy risk. According to IBM’s Cost of a Data Breach Report 2021, the average data breach costs an enterprise more than $4 million in total, with an average cost of $180 per compromised record.

Minimizing the data required

So how can you continue to benefit from the huge advantages of machine learning while reducing privacy and security risks and adhering to regulations? Reducing the collected data holds the key, and you can use the minimization technology in IBM’s open source AI Privacy toolkit to apply this approach to machine learning models.

Perhaps the main problem you face when applying data minimization is determining exactly what data you need to carry out your task properly. It seems almost impossible to know that in advance, so data scientists are often left making educated guesses about what data they require.

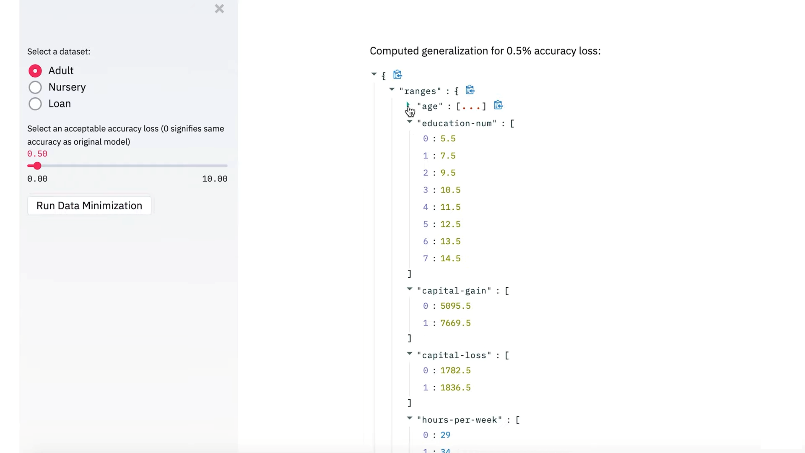

Given a trained machine learning model, IBM’s toolkit can determine the specific set of features and the level of detail for each feature that is needed for the model to make accurate predictions on runtime data.
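As a rough illustration of what such a result could look like, here is a minimal Python sketch. It assumes the minimization output can be expressed, per feature, as either a list of value ranges or a marker that the feature is not needed at all; the feature names, the `SPEC` dictionary, and `minimize_record` are hypothetical and are not the toolkit's API.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical minimization result. For each feature the model needs,
# a list of half-open ranges [low, high) that replace the exact value;
# None marks a feature the model does not need at all.
Range = Tuple[float, float]
Spec = Dict[str, Optional[List[Range]]]

SPEC: Spec = {
    "age": [(0, 30), (30, 50), (50, 120)],
    "sun_exposure_hours": [(0, 10), (10, 1000)],
    "postal_code": None,
}


def minimize_record(record: Dict[str, float], spec: Spec) -> Dict[str, Range]:
    """Keep only the generalized information the spec says the model needs.

    Features marked None, and any field not listed in the spec, are dropped.
    """
    out: Dict[str, Range] = {}
    for feature, ranges in spec.items():
        if ranges is None:
            continue  # not needed: never collect or store this field
        value = record[feature]
        for low, high in ranges:
            if low <= value < high:
                out[feature] = (low, high)  # store the range, not the exact value
                break
        else:
            raise ValueError(f"{feature}={value!r} is outside every range")
    return out
```

For example, `minimize_record({"age": 42, "sun_exposure_hours": 3, "postal_code": 12345}, SPEC)` returns `{'age': (30, 50), 'sun_exposure_hours': (0, 10)}`: the exact age and exposure are never stored, and the postal code is not collected at all.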

How it works

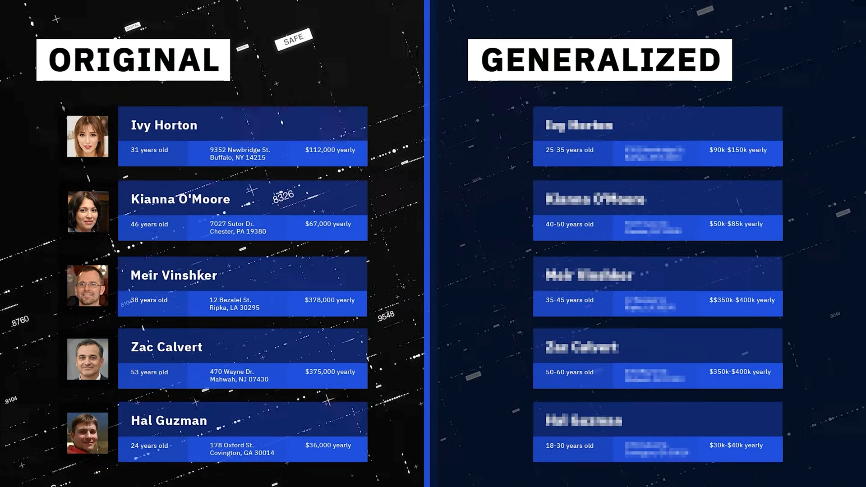

It can be difficult to determine the minimal amount of data you need, especially in complex machine learning models such as deep neural networks. We developed a first-of-its-kind method that reduces the amount of personal data needed to perform predictions with a machine learning model by removing or generalizing some of the input features of the runtime data. Our method uses the knowledge encoded within the model to produce a generalization that has little to no impact on its accuracy. We showed that, in some cases, you can collect less data while preserving exactly the same level of model accuracy as before. Even when that is not possible, the method remains useful: to adhere to the data minimization requirement, companies must still demonstrate that all the data they collect is needed by the model for accurate analysis.
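The intuition that a generalization costs little accuracy when it follows the model's own decision boundaries can be sketched in plain Python. This is a toy under stated assumptions, not the toolkit's method: `toy_model` is a hypothetical stand-in for a trained model, each bin's values are replaced by the bin median, and accuracy is measured as agreement with the model's predictions on the original (ungeneralized) data.

```python
from statistics import median
from typing import Callable, Dict, List, Sequence

Row = List[float]


def toy_model(row: Row) -> int:
    """Hypothetical trained classifier: high risk if age >= 50 and sun >= 10."""
    age, sun = row
    return int(age >= 50 and sun >= 10)


def generalize_column(rows: Sequence[Row], col: int, edges: Sequence[float]) -> List[Row]:
    """Replace column `col` by the median of the bin each value falls into.

    `edges` are sorted bin boundaries: (-inf, e0), [e0, e1), ..., [ek, inf).
    """
    def bin_of(value: float) -> int:
        return sum(1 for e in edges if value >= e)

    members: Dict[int, List[float]] = {}
    for row in rows:
        members.setdefault(bin_of(row[col]), []).append(row[col])
    representative = {b: median(vals) for b, vals in members.items()}

    out = []
    for row in rows:
        new_row = list(row)  # copy so the original data is untouched
        new_row[col] = representative[bin_of(row[col])]
        out.append(new_row)
    return out


def relative_accuracy(model: Callable[[Row], int], original: Sequence[Row],
                      generalized: Sequence[Row]) -> float:
    """Fraction of rows whose prediction is unchanged by the generalization."""
    same = sum(model(a) == model(b) for a, b in zip(original, generalized))
    return same / len(original)


# Toy runtime data: (age, sun-exposure hours), 60 rows.
rows = [[a, s] for a in range(20, 80, 5) for s in range(0, 20, 4)]

aligned = generalize_column(rows, 0, [50])     # boundary matches the model
misaligned = generalize_column(rows, 0, [40])  # boundary ignores the model
print(relative_accuracy(toy_model, rows, aligned))     # 1.0
print(relative_accuracy(toy_model, rows, misaligned))  # 56 of 60 rows unchanged
```

Both versions store only two possible age values, yet the split at 50 loses no accuracy while the split at 40 changes four predictions, which is why the choice of generalization should be driven by the model rather than picked in advance.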

Real-world application

This technology can be applied in a wide variety of industries that use personal data for forecasts, but perhaps the most obvious domain is healthcare. For example, research scientists might develop a model that predicts whether a given patient is likely to develop melanoma, so that preventive measures and early treatment can be administered in advance.

To begin this process, the hospital system would generally initiate a study and enlist a cohort of patients who agree to have their medical data used for this research. Because the hospital is seeking to create the most accurate model possible, it would traditionally use all of the available data to train the model that serves as a decision support system for its doctors. But it doesn’t want to collect and store more sensitive medical, genetic, or demographic information than it really needs.

Using the minimization technology, the hospital can decide how much loss in accuracy it can tolerate, which could be very small or even zero. The toolkit can then automatically determine how coarsely each feature can be generalized (for example, which range of values to record), or even show that some features aren’t needed at all, while still maintaining the model’s desired accuracy.
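One simplified way to turn a tolerated accuracy loss into per-feature decisions is a greedy search, sketched below in Python. This is a hypothetical stand-in rather than the toolkit's algorithm: for each feature it tries candidate binnings from coarsest to finest (an empty list meaning "one bin", so the feature need not be collected) and keeps the first one whose agreement with the original predictions stays at or above `1 - max_loss`. The model, data, feature order, and candidate binnings are all invented for illustration.

```python
from statistics import median
from typing import Callable, Dict, List, Optional

Row = List[float]
Edges = List[float]


def toy_model(row: Row) -> int:
    """Hypothetical classifier over (age, sun_hours, postal_code); ignores postal_code."""
    age, sun, _postal = row
    return int(age >= 50 and sun >= 10)


def generalize_column(rows: List[Row], col: int, edges: Edges) -> List[Row]:
    """Replace column `col` by the median of its bin; edges=[] means a single bin."""
    def bin_of(value: float) -> int:
        return sum(1 for e in edges if value >= e)

    members: Dict[int, List[float]] = {}
    for r in rows:
        members.setdefault(bin_of(r[col]), []).append(r[col])
    rep = {b: median(vs) for b, vs in members.items()}
    # Build new rows so the input rows are never modified.
    return [r[:col] + [rep[bin_of(r[col])]] + r[col + 1:] for r in rows]


def relative_accuracy(model: Callable[[Row], int], original: List[Row],
                      generalized: List[Row]) -> float:
    """Fraction of rows whose prediction is unchanged by the generalization."""
    same = sum(model(a) == model(b) for a, b in zip(original, generalized))
    return same / len(original)


def minimize(model: Callable[[Row], int], rows: List[Row],
             candidates: Dict[int, List[Edges]], max_loss: float) -> Dict[int, Optional[Edges]]:
    """Greedy per-feature search over candidate binnings (coarsest first).

    A result of None means no candidate met the target, so exact values are kept.
    """
    target = 1.0 - max_loss
    current = [list(r) for r in rows]
    chosen: Dict[int, Optional[Edges]] = {}
    for col, options in candidates.items():
        chosen[col] = None
        for edges in options:
            trial = generalize_column(current, col, edges)
            # Always compare against the model's predictions on the original rows.
            if relative_accuracy(model, rows, trial) >= target:
                current, chosen[col] = trial, edges
                break
    return chosen


rows = [[a, s, p] for a in range(20, 80, 5) for s in range(0, 20, 4)
        for p in (1000, 2000, 3000)]
CANDIDATES = {0: [[], [50], [40, 60]],      # age
              1: [[], [10], [5, 10, 15]],   # sun_hours
              2: [[], [2000]]}              # postal_code

print(minimize(toy_model, rows, CANDIDATES, max_loss=0.0))   # {0: [50], 1: [10], 2: []}
print(minimize(toy_model, rows, CANDIDATES, max_loss=0.25))  # {0: [], 1: [], 2: []}
```

With no loss tolerated, the search keeps only the split points the toy model actually uses (age 50, sun 10) and drops `postal_code`. With 25% tolerated, it generalizes every feature away, because a constant "low risk" prediction already matches 80% of the toy rows; this is one reason the tolerated loss is worth choosing carefully.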

Researching data minimization

You can experiment with the initial proof-of-concept implementation of the data minimization principle for machine learning models that we recently published. We also published the paper “Data minimization for GDPR compliance in machine learning models,” in which we presented some promising results on a few publicly available datasets. There are several possible directions for extensions and improvements.

Our initial evaluation focused on classification models, but as we deepen our study of this area, we plan to extend it to additional model types, such as regression. In addition, we plan to examine ways to combine this work with other techniques from the domains of model testing, explainable AI (XAI), and interpretability.

When less is more

Data minimization helps researchers adhere to data protection regulations, but it also helps prevent unfair data collection practices, such as excessive collection or retention of data, and it reduces the personal risk to data subjects in case of a data breach. Generalizing the input data to models also has the potential to help prevent prediction bias and other forms of discrimination, leading to more fairness-aware or discrimination-aware data mining practices.

Download the toolkit and try it for yourself.
