Building a Homelab VM Server (2020 Edition)

source link: https://mtlynch.io/building-a-vm-homelab/


For the past five years, I’ve done all of my software development in virtual machines (VMs). Each of my projects gets a dedicated VM, sparing me the headache of dependency conflicts and TCP port collisions.

Three years ago, I took things to the next level by building my own homelab server to host all of my VMs. It’s been a fantastic investment, as it sped up numerous dev tasks and improved reliability.

In the past few months, I began hitting the limits of my VM server. My projects have become more resource-hungry, and mistakes I’d made in my first build were coming back to bite me. I decided to build a brand new homelab VM server for 2020.

Components of my new VM server build (most of them, anyway)

I don’t care about the backstory; show me your build!

If you’re not interested in the “why” of this project, you can jump directly to the build.

Why build a whole VM server?

Originally, I used VirtualBox to run VMs from my Windows desktop. That was fine for a while, but reboots became a huge hassle.

Between forced reboots from Windows Update, voluntary restarts to complete software installs, and the occasional OS crash, I had to restart my entire suite of development VMs three to five times per month.

A dedicated VM server spares me most reboots. The VM host runs a minimal set of software, so crashes and mandatory reboots are rare.

What’s a “homelab?"

Homelab is just a colloquial term that’s grown in popularity in the last few years. Homelab servers are no different from any other servers, except that you build them at home rather than in an office or data center. Many people use them as a low-stakes practice environment before using the same tools in a real-world business context.

Why not use cloud computing?

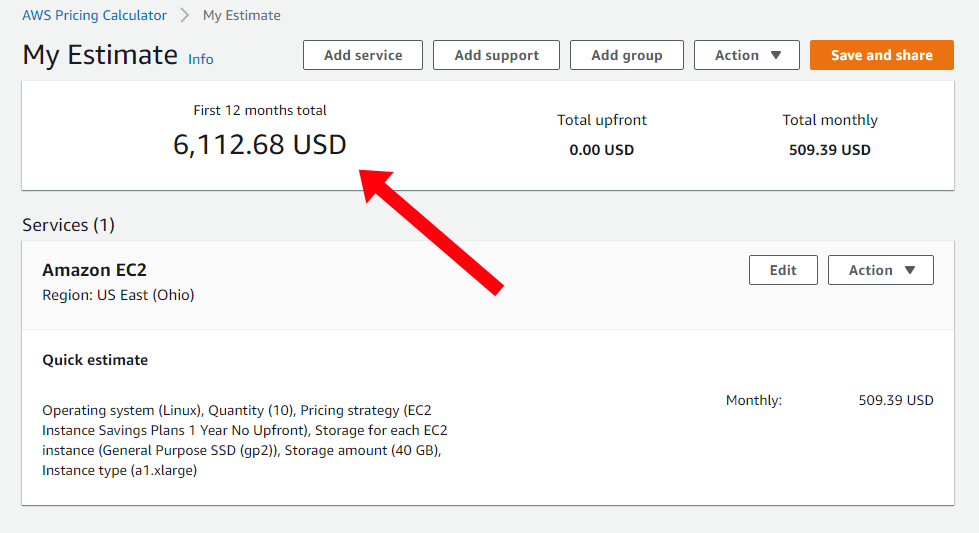

Cloud servers could serve the same function and save me the trouble (fun!) of maintaining my own hardware, but they’re prohibitively expensive. For VM resources similar to my homelab server, AWS EC2 instances would cost over $6k per year:

Using AWS instead of my homelab server would cost me over $6k per year.

I could substantially reduce costs by turning cloud instances on and off as needed, but that would introduce friction into my workflows. With a local VM server, I can keep 10-20 VMs available and ready at all times without worrying about micromanaging my costs.

Learning from past mistakes

My 2017 build served me well, but in three years of using it, I’ve come to recognize a few key areas begging for improvement.

1. Keep storage local

My Synology NAS has 10.9 TB of storage capacity. With all that network storage space, I thought, “why put more disk space on the server than the bare minimum to boot the host OS?”

On my first build, I relied on my 10.9 TB of network storage.

That turned out to be a dumb idea.

First, running VMs on network storage creates a strict dependency on the disk server. Synology publishes OS upgrades every couple of months, and their patches always require reboots. With my VMs running on top of Synology’s storage, I had to shut down my entire VM fleet before applying any update from Synology. It was the same reboot problem I had when I ran VMs on my Windows desktop.

The OS on my storage server requires frequent upgrades.

Second, random disk access over the network is slow. At the time of my first build, most of my development work was on backend Python and Go applications, and they didn’t perform significant disk I/O. Since then, I’ve expanded into frontend web development. Modern web frameworks all use Node.js, so every project has anywhere from 10k-200k random JavaScript files in its dependency tree. Node.js builds involve tons of random disk access, a worst-case scenario for network storage.
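To see how much a given storage location suffers under this kind of load, a rough micro-benchmark can help. The following is a minimal Python sketch, not the method used in this article: the function name is hypothetical, and it writes many small files under a directory you choose, then times reading them back in shuffled order. Running it once against a NAS mount and once against a local SSD path (both paths are up to you) gives a crude comparison.

```python
import os
import random
import shutil
import tempfile
import time
from pathlib import Path


def random_small_file_reads(root: str, count: int = 1000, size: int = 4096, seed: int = 0) -> float:
    """Create `count` small files under `root`, then time reading them back in random order.

    Returns the seconds spent on the read pass. The OS page cache may still hold
    the freshly written files, so this understates true cold-read latency.
    """
    rng = random.Random(seed)
    workdir = Path(tempfile.mkdtemp(dir=root))
    payload = os.urandom(size)
    paths = []
    # Spread files over 50 subdirectories, loosely mimicking a node_modules tree.
    for i in range(count):
        subdir = workdir / f"pkg{i % 50}"
        subdir.mkdir(exist_ok=True)
        path = subdir / f"file{i}.js"
        path.write_bytes(payload)
        paths.append(path)

    # Read the files back in shuffled order to force scattered access.
    rng.shuffle(paths)
    start = time.perf_counter()
    for path in paths:
        path.read_bytes()
    elapsed = time.perf_counter() - start

    # Remove the temporary tree.
    shutil.rmtree(workdir)
    return elapsed


if __name__ == "__main__":
    # Point this at a NAS mount and at a local SSD path to compare them.
    print(f"{random_small_file_reads(tempfile.gettempdir()):.3f} s")
```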

2. Pick better VM management software

For my first server, I evaluated two options for VM management: Kimchi and VMware ESXi. VMware was far more polished and mature, but Kimchi charmed me with its scrappy spirit and open-source nature.

Early listing of my VMs through Kimchi’s web UI

Almost immediately after I installed it, development on Kimchi stopped.

Code commits to Kimchi, which stopped almost immediately after I started using it

Over time, Kimchi’s shortcomings became more and more apparent. I often had to click a VM’s “clone” or “shutdown” button multiple times before it cooperated. And there were infuriating UI bugs where buttons disappeared or shifted position right before I clicked on them.

3. Plan for remote administration

My VM server is tucked away in the corner, which is convenient except for the occasional instance where I need physical access.

If you read the above and thought, “Kimchi is just software. Why did Michael have to build a whole new server just to install a different VM manager?”, it’s because I failed to anticipate the importance of remote administration.

My VM server is just a PC that sits in the corner of my office with no monitor or keyboard attached. That’s fine 99% of the time when I can SSH in or use the web interface. But for the 1% of the time when the server fails to boot or I want to install a new host OS, it’s a huge pain. I have to drag the server over to my desk, disconnect my desktop keyboard and monitor, fix whatever needs fixing, then restore everything in my office to its original configuration.

For my next build, I wanted a virtual console with physical-level access to the machine as soon as it powered on. I was thinking something like Dell’s iDRAC or HP’s iLO.

Dell iDRAC was one option I considered for remote server management.

Choosing components

CPU

My first VM server’s CPU was a Ryzen 7 1700. At eight cores and 16 threads, it was the hot new CPU at the time. But when I showed off my build on /r/homelab, Reddit’s homelab community, they mocked me as a filthy casual because I used consumer parts. The cool kids used enterprise gear.

/r/homelab was unimpressed with my first build.

Resolved never to let /r/homelab make fun of me again, I ventured into the world of enterprise server hardware. I even got fancy and chose to build a system with two physical CPUs.

To get the best performance for my dollar, I restricted my search to used CPUs, released four to eight years ago. For each candidate, I looked up benchmark scores on PassMark and then checked eBay for recent sales of that CPU model in used condition.

The most cost-efficient performance seemed to be in the Intel Xeon E5 v3 family, especially the E5-2600 series. I settled on the E5-2680 v3. It had an average benchmark score of 15,618 and cost ~$130 used on eBay.

The Intel Xeon E5-2680 v3 scores 15,618 on cpubenchmark.net.
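The selection method above boils down to ranking candidates by benchmark points per dollar. Here is a minimal Python sketch, assuming you've already collected PassMark scores and used prices by hand; the `CpuCandidate` type and `rank_by_value` function are hypothetical helpers, not part of any tool, and only the E5-2680 v3 figures come from this article.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class CpuCandidate:
    model: str
    passmark: int      # average PassMark CPU Mark score
    used_price: float  # typical recent used price in USD


def rank_by_value(candidates: list[CpuCandidate]) -> list[tuple[str, float]]:
    """Return (model, PassMark points per dollar) pairs, best value first."""
    scored = [(c.model, c.passmark / c.used_price) for c in candidates]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # The E5-2680 v3 figures come from the article; append your own candidates.
    candidates = [CpuCandidate("Xeon E5-2680 v3", 15_618, 130.0)]
    for model, value in rank_by_value(candidates):
        print(f"{model}: {value:.1f} points per dollar")  # Xeon E5-2680 v3: 120.1 points per dollar
```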

For context, my previous build’s Ryzen 7 had a benchmark of 14,611. So with dual-E5-2680s, I’d more than double the processing power from my old server.
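As a quick check on “more than double,” assuming the two CPUs’ scores simply add (real-world dual-socket scaling is usually somewhat lower):

```latex
\frac{2 \times 15{,}618}{14{,}611} = \frac{31{,}236}{14{,}611} \approx 2.14
```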

Motherboard

The downside of a dual-CPU system was that it limited my options for motherboards. Only a handful of motherboards support dual Intel 2011-v3 CPUs. Their prices ranged from $300 to $850, which was far more than I expected to spend on a motherboard.

I chose the Supermicro MBD-X10DAL-I-O, which, at $320, was cheaper than similar motherboards, but it still cost five times what I paid for my last one.

Memory

There seems to be far less information available for choosing server memory. With consumer hardware, plenty of websites publish reviews and benchmarks of different RAM sticks, but I didn’t see anything like that for server RAM.

I went with Crucial CT4K16G4RFD4213 64 GB (4 x 16 GB) because I trusted the brand. I chose 64 GB because my previous build had 32 GB, and some of my workflows were approaching that limit, so I figured doubling RAM would cover me for the next few years.

Storage

I love M.2 SSDs, as they’re small, perform outstandingly, and neatly tuck away in the motherboard without any cabling. Sadly, the MBD-X10DAL doesn’t support the M.2 interface.

Instead, I stuck with traditional old SATA. I bought a 1 TB Samsung 860 EVO. I typically allocate 40 GB of space to each VM, so 1 TB would give me plenty of room. If I need to upgrade later, I can always buy more disks.
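The “plenty of room” estimate is easy to check: at 40 GB per VM, a 1 TB drive (about 1,000 GB as marketed) holds 25 fully allocated VM disks. A small sketch, where the helper name and the `reserved_gb` allowance for the host OS or ISO images are assumptions:

```python
def vms_that_fit(disk_gb: float, per_vm_gb: float = 40.0, reserved_gb: float = 0.0) -> int:
    """How many fully allocated VM disks of `per_vm_gb` fit on a `disk_gb` drive."""
    usable = disk_gb - reserved_gb
    if usable <= 0:
        return 0
    # Floor division: a partially fitting VM disk doesn't count.
    return int(usable // per_vm_gb)


if __name__ == "__main__":
    print(vms_that_fit(1000))                   # 25
    print(vms_that_fit(1000, reserved_gb=100))  # 22
```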

Power

Choosing a power supply unit (PSU) isn’t that interesting, so I again chose mainly by trusted brand: the Corsair CX550M 550W 80 Plus Bronze.

The wattage on all of my components added up to 400 W, so 450 W would have been sufficient. But the 550 W version was only $10 more, which seemed like a fair price for an extra 100 W of breathing room.
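Here is that trade-off as arithmetic, in a sketch that treats the 400 W total as the worst-case load (the function name is hypothetical): a 450 W unit would leave about 11% of its rated capacity unused, while the 550 W unit leaves about 27%.

```python
def psu_headroom(load_w: float, capacity_w: float) -> float:
    """Fraction of the PSU's rated capacity left unused at the given load."""
    if capacity_w <= 0:
        raise ValueError("capacity must be positive")
    return (capacity_w - load_w) / capacity_w


if __name__ == "__main__":
    print(f"{psu_headroom(400, 450):.0%}")  # 11%
    print(f"{psu_headroom(400, 550):.0%}")  # 27%
```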

The only other important feature to me was semi-modular cabling. In my last build, I made the mistake of using a non-modular PSU, which meant that all of its cables stayed attached permanently. My server barely has any internal components, so the extraneous power cables created clutter. With semi-modular cabling, I can keep things tidy by removing unused cables from the PSU.

Fans

The dual-CPU build made cooling an unexpected challenge. The MBD-X10DAL doesn’t leave much space between the two CPU sockets, so I looked carefully for CPU coolers slim enough to sit side by side. A pair of Cooler Master Hyper 212s fit the bill.

Case

My server sits inconspicuously in the corner of my office, so I didn’t want a case with clear panels or flashy lights.

The Fractal Design Meshify C Black had positive reviews and seemed like a simple, quiet case.

Graphics

For a headless server, the graphics card doesn’t matter much. It’s still necessary so I can see the screen during the initial install and the occasional debugging session, so I went with the MSI GeForce GT 710 as a cheap, easy option.

Remote administration

I looked into remote administration solutions and was blown away by how expensive they were. At first, I thought I’d use a Dell iDRAC, but the remote console requires a $300 enterprise license and constrains my build to Dell components. I looked at KVM over IP solutions, but those were even more expensive, ranging from $600 to $1,000.

Commercial KVM over IP devices cost between $500 and $1,000.

To achieve remote administration, I took the unusual approach of building my own KVM over IP device out of a Raspberry Pi. I call it TinyPilot.

Using TinyPilot to install an OS on my server

TinyPilot captures HDMI output and forwards keyboard and mouse input from the browser. It provides the same access you’d have if you physically connected a real keyboard, mouse, and monitor. The software is open-source, and I offer pre-made versions for purchase.
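On a Raspberry Pi, one common way to forward keyboard input is for the Pi to present itself to the server as a USB keyboard and send standard 8-byte HID boot-keyboard reports. The sketch below only builds those reports; it is an assumption about how such a device can work, not a description of TinyPilot’s code, and it handles only letters and Enter. The function names are hypothetical.

```python
from __future__ import annotations

# Usage IDs from the USB HID Usage Tables, Keyboard/Keypad page.
KEY_A = 0x04           # 'a' through 'z' are consecutive: 0x04 to 0x1D
KEY_ENTER = 0x28
MOD_LEFT_SHIFT = 0x02  # bit 1 of the modifier byte

RELEASE = bytes(8)  # all keys up


def key_report(char: str) -> bytes:
    """Build an 8-byte boot-protocol keyboard report that presses one letter or Enter."""
    modifiers = 0
    if char == "\n":
        code = KEY_ENTER
    elif len(char) == 1 and char.isascii() and char.isalpha():
        if char.isupper():
            modifiers |= MOD_LEFT_SHIFT
        code = KEY_A + (ord(char.lower()) - ord("a"))
    else:
        raise ValueError(f"unsupported character: {char!r}")
    # Byte 0: modifier bitmask, byte 1: reserved, bytes 2-7: up to six pressed keys.
    return bytes([modifiers, 0, code, 0, 0, 0, 0, 0])


def type_text(text: str) -> list[bytes]:
    """Press and release each character in turn."""
    reports = []
    for char in text:
        reports.append(key_report(char))
        reports.append(RELEASE)
    return reports
```

Writing these reports in order to a Linux USB gadget device (often /dev/hidg0) would type the text on the server; that device path and the gadget configuration behind it are assumptions here, not TinyPilot documentation.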

Install a new server OS right from your browser

TinyPilot is an affordable, open-source solution that provides a remote console for your headless server.

My 2020 server build

The Meshify C has been my all-time favorite case for cable management. Its built-in Velcro straps organize the cables, and little rubber dividers hide them on the far side of the case.

Installing the motherboard, CPU, RAM, and fans

My completed build in its new home

VM Management: Proxmox

To manage my VMs, I’m using Proxmox VE.

Proxmox’s dashboard of all my VMs

After Kimchi burned me on my last build, I was reluctant to try another free solution. Proxmox has been around for 12 years, so I felt like it was a safe enough bet. Visually, it’s a huge step up from Kimchi, but it lags behind ESXi in slickness.

The part of Proxmox that I most appreciate is its scriptability. One of my frequent tasks is creating a new VM from a template and then using Ansible to install additional software. With ESXi, I couldn’t find a way to do this without manually clicking buttons in the web UI every time. Proxmox’s CLI is powerful enough that I’ve scripted the whole process down to a single command: running ./create-vm whatgotdone-dev creates a fresh What Got Done development VM.
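A script like create-vm could start by driving Proxmox’s qm command-line tool. The sketch below is hypothetical, not the author’s script: the template ID (9000) and the new VM ID are placeholders you’d replace, and by default it only prints the commands rather than running them.

```python
from __future__ import annotations

import shlex
import subprocess

TEMPLATE_ID = 9000  # hypothetical VM ID of the Ubuntu template


def clone_commands(name: str, new_id: int, template_id: int = TEMPLATE_ID) -> list[list[str]]:
    """qm commands that full-clone the template under a new name and boot it."""
    return [
        ["qm", "clone", str(template_id), str(new_id), "--name", name, "--full", "1"],
        ["qm", "start", str(new_id)],
    ]


def create_vm(name: str, new_id: int, dry_run: bool = True) -> None:
    """Print each command and, unless dry_run is set, run it on the Proxmox host."""
    for cmd in clone_commands(name, new_id):
        print(shlex.join(cmd))
        if not dry_run:
            # check=True stops at the first command that fails.
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    create_vm("whatgotdone-dev", 123)  # dry run: prints the commands only
```

Taking the VM ID as a parameter keeps the sketch simple; a fuller script would also hand the new VM off to Ansible once it boots.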

My biggest complaint is that Proxmox is unintuitive. I couldn’t even figure out how to install it until I found Craft Computing’s installation tutorial. But once you learn your way around, it’s easy to use.

Benchmarks

Before I decommissioned my old VM server, I collected simple benchmarks of my common workflows to measure performance improvements.

Most of my old VMs ran on network storage because the old server’s local SSD only had room for a couple of VMs. In the benchmarks below, I compare performance in three different scenarios:

- 2017 Server (NAS): The typical VM I kept on network storage

- 2017 Server (SSD): For the few VMs I kept on local storage

- 2020 Server: All VMs run on local SSD, so there’s no NAS vs. SSD
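Simple benchmarks like these can be collected with a small timing wrapper. This is a sketch under assumptions, not how the numbers below were produced: the helper name is hypothetical, and it runs a command a few times and reports the median wall-clock duration to smooth over one-off noise.

```python
import statistics
import subprocess
import time


def time_command(cmd: list, runs: int = 3) -> float:
    """Run `cmd` `runs` times and return the median wall-clock time in seconds."""
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        # check=True raises if the command fails, so failed runs aren't timed as successes.
        subprocess.run(cmd, check=True, stdout=subprocess.DEVNULL)
        durations.append(time.perf_counter() - start)
    return statistics.median(durations)
```

For example, time_command(["npm", "run", "build"]) inside a project VM. Because repeated runs warm caches, cold-start and warm-start cases are better measured separately, with runs=1 for the cold case.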

Provision a new VM

The first benchmark I took was provisioning a new VM. I have a standard Ubuntu 18.04 VM template I use for almost all of my VMs. Every time I need a new VM, I run a shell script that performs the following steps:

- Clone the VM from the base template.

- Boot the VM.

- Change the hostname from ubuntu to whatever the VM’s name is.

- Reboot the VM to pick up the new hostname.

- Pick up the latest software with apt update && apt upgrade.
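Once the clone is running, the remaining steps happen inside the guest. A minimal sketch, assuming the VM is reachable over SSH at an address you supply and that the template’s user has passwordless sudo; the function name is hypothetical, and it builds the command sequence so you can review it before running anything. It adds -y to apt upgrade for non-interactive use.

```python
from __future__ import annotations

import re

# One DNS label: lowercase letters, digits, and inner hyphens, 1 to 63 characters.
_HOSTNAME = re.compile(r"[a-z0-9]([a-z0-9-]{0,61}[a-z0-9])?")


def guest_setup_commands(host: str, new_hostname: str) -> list[list[str]]:
    """SSH commands for the in-guest steps, in the order they should run."""
    if not _HOSTNAME.fullmatch(new_hostname):
        raise ValueError(f"invalid hostname: {new_hostname!r}")
    return [
        # Rename the guest from the template's default "ubuntu" hostname.
        # (/etc/hosts may still map 127.0.1.1 to the old name.)
        ["ssh", host, "sudo", "hostnamectl", "set-hostname", new_hostname],
        # Reboot so services pick up the new name; ssh may exit nonzero
        # when the connection drops, so don't treat that as a failure.
        ["ssh", host, "sudo", "reboot"],
        # Once the VM is reachable again, pull in the latest packages.
        ["ssh", host, "sudo apt update && sudo apt upgrade -y"],
    ]
```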

My new server brought a huge speedup to this workflow. Provisioning a VM went from 15 minutes on my old server to less than four minutes on the new one.

If I skip the package upgrade step, the speedup is a little less impressive. The new server still blows away my old NAS-backed VMs, dropping from eight minutes to just under two and a half. SSD to SSD, though, it underperforms my previous server. Cloning a VM is likely disk-bound, and my old M.2 SSD was faster than my new SATA SSD.

Boot a VM

From the moment I power on a VM, how long does it take for me to see the login prompt?

My old VMs booted in 48 seconds. The few SSD VMs on my old system did a little better, showing the login prompt in 32 seconds. My new server blows both away, booting up a VM in only 18 seconds.
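Expressed as speedups, using the boot times above:

```latex
\text{speedup} = \frac{t_{\text{old}}}{t_{\text{new}}}, \qquad
\frac{48\,\text{s}}{18\,\text{s}} \approx 2.7\times \ (\text{vs. NAS}), \qquad
\frac{32\,\text{s}}{18\,\text{s}} \approx 1.8\times \ (\text{vs. old SSD})
```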

Run What Got Done end-to-end tests

My weekly journaling app, What Got Done, has automated tests that exercise its functionality end-to-end. This is one of my most diverse workflows — it involves compiling a Go backend, compiling a Vue frontend, building a series of Docker containers, and automating Chrome to exercise my app. This was one of the workflows that exhausted resources on my old VM, so I expected substantial gains here.


Surprisingly, there was no significant performance difference between the two servers. For a cold start (downloading all of the Docker base images), the new server is 2% slower than the old one. When the base Docker images are available locally, my new server beats my old, but only by 6%. It looks like the bottleneck is mainly the disk and browser interaction, so the new server doesn’t make much of a difference.

Build Is It Keto

One frequent workflow I have is building Is It Keto, my resource for keto dieters. I generate the site using Gridsome, a static site generator for Vue.

I expected a significant speedup here, so I was surprised when my build got slower. The build seemed to be mostly CPU-bound on my old server, but doubling CPU resources on my new server did nothing. My next guess was that it was disk-bound, so I tried moving the files to a RAM disk, but build speeds remained the same.

My hypothesis is that the workflow is CPU-bound but parallelizes poorly. My old server has fewer CPU cores, but each core is faster. If the build is limited to five or six threads, it can’t take advantage of my new server’s 48 threads.
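One way to formalize this hypothesis is a textbook simplification rather than anything measured here. If a build can keep at most t threads busy on a machine with n logical CPUs, its speed scales with per-thread speed s times min(t, n). Amdahl’s law expresses the same ceiling for a workload whose parallel fraction is p:

```latex
\text{build speed} \propto s_{\text{thread}} \cdot \min(t,\; n),
\qquad
S(n) = \frac{1}{(1 - p) + p / n}
```

With t around five or six, both the old Ryzen (16 threads) and the new Xeons (48 threads) satisfy n ≥ t, so min(t, n) = t on both machines and only per-thread speed differs. That would be consistent with the slower build on the new server.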

Train a new Zestful model

Zestful is my machine-learning-based API for parsing recipe ingredients. Every few months, I train it on new data. This is my most CPU-intensive workflow, so I was interested to see how the new system would handle it.

Finally, a case where my 48 CPU threads shine! The new server blows the old one away, training the model in less than half the time. Unfortunately, it’s a workflow I only run a few times per year.

Reflections

There’s no shame in consumer hardware

Even though /r/homelab may never respect me, on my next build, I’m planning to return to consumer hardware.

The biggest advantage I see with server components is that they have better compatibility with server software. Back in 2017, I couldn’t install ESXi until I disabled multithreading on my CPU, degrading performance substantially. But that was a limitation in the Linux kernel, and later updates fixed it.

Server hardware commands a premium because of its greater reliability. For user-facing services, this characteristic is meaningful, but it matters much less on a development server. An occasional crash or bit flip on a dev server shouldn’t ruin your day.

Consider the full cost of dual-CPU

This was the first time I’d ever built a dual-CPU computer. It was an interesting experience, but I don’t think it was worth the trouble.

Based on my benchmarks, the CPU was rarely the limiting factor in my workflows. The most damning evidence is Proxmox’s graph of my CPU usage over time. In the past few months, I’ve never pushed CPU load above 11%, so I’m crazy overprovisioned.

My max CPU usage in the last few months never went above 11% of my server’s capacity.
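For scale: two E5-2680 v3 chips expose 48 logical CPUs (12 cores and 24 threads each). Assuming Proxmox reports usage relative to all 48, an 11% peak works out to roughly five logical CPUs’ worth of work:

```latex
0.11 \times 48 \approx 5.3 \ \text{logical CPUs}
```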

The requirement for dual CPUs drove up the cost of a motherboard substantially and limited my options. Only a scant few mobos support dual Intel 2011-v3 CPUs, so I didn’t have many choices in terms of other motherboard features.

Remote administration provides flexibility

Before I used TinyPilot to manage my server, I didn’t realize how change-averse I was. Changing any BIOS or network settings brought a risk of losing the next few hours of my life physically moving around machines and reconnecting peripherals to debug and fix the problem. Knowing that, I never wanted to modify any of those settings.

Having a virtual console gives me the freedom to fail and makes me more open to experimenting with different operating systems. It’s always going to be a substantial effort to install and learn a new OS, but knowing that I don’t have to drag machines back and forth makes me much more open to it. Had I not built TinyPilot, I might have stuck with ESXi as “good enough” rather than taking a chance on Proxmox.

A Year Later

A reader asked me if there’s anything I’d change about this build in retrospect, so I thought I’d share an update as it’s been a little over a year with this server.

CPU - Too much

I definitely went overboard on the dual E5-2680 v3 CPUs.

In a year of usage, I’ve rarely exceeded 50% CPU usage, meaning one CPU would have been sufficient.

In a year of usage, I’ve never reached 100% CPU usage, and I’ve only ever exceeded 50% capacity a handful of times, so I would have been fine with just a single CPU.

SSD - Not enough

My 1 TB Samsung SSD is just about full, so I recently purchased a 2 TB Samsung 870 EVO for a total of 3 TB of SSD storage. There’s plenty of space in the case for more SSDs.

My server has only 15% of its disk space still free.

By default, I provision each VM with 40 GB of disk, which is sometimes limiting. When I’m doing work with Docker, container images can eat up disk quickly. Every few weeks, I find that I’ve filled up my VM’s disk and have to run docker system prune --all, so the extra host storage will let me give VMs more room and spare me those interruptions.
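To catch a filling disk before a Docker build fails, a VM could run a small check like this, perhaps from a cron job. It is only a sketch: the function names, the 10% threshold, and the checked path are arbitrary assumptions, and it merely prints a suggestion rather than pruning anything automatically.

```python
import shutil


def free_fraction(path: str = "/") -> float:
    """Fraction of the filesystem containing `path` that is still free."""
    usage = shutil.disk_usage(path)
    return usage.free / usage.total


def check_disk(path: str = "/", threshold: float = 0.10) -> bool:
    """Return True if free space is at or above `threshold`; print a warning otherwise."""
    fraction = free_fraction(path)
    if fraction < threshold:
        print(f"{path}: only {fraction:.0%} free; consider `docker system prune --all`")
        return False
    return True
```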

RAM - Slightly too little

The 64 GB of RAM has mostly been sufficient, but there have been a few instances where I have to turn off VMs to give myself more memory. I prefer not to interrupt my workflow managing resources, so I just ordered another 64 GB of the same RAM sticks.

I’m reaching the limits of 64 GB of RAM.

Proxmox - Still great

I still love Proxmox as a VM manager. I purchased a license, which I’m not sure adds any new features that I use, but I’m happy to support the project.

Annoyingly, the licenses are priced per CPU socket, so in addition to the shame of buying too much CPU, I have to pay double for Proxmox.

Parts list (as of 2021-12-05)

* Purchased a year after the original build.
