Comparing AMD EPYC Performance with Intel Xeon in GCP

source link: https://www.percona.com/blog/comparing-amd-epyc-performance-with-intel-xeon-in-gcp/


Recently we were asked to check the performance of the new family of AMD EPYC processors when running MySQL on Google Cloud virtual machines. The request came from a user running MySQL on the N1 machine family who was considering an upgrade to the N2D generation because of the potential cost savings of the new AMD family.

The idea behind the analysis is a side-by-side performance comparison, taking the following factors into account:

- According to published benchmarks, EPYC processors perform better in purely CPU-bound operations.

- The EPYC platform costs less than the Intel Xeon platform.

The goal of this analysis is to check whether the cost reduction from upgrading from N1 to N2D is worth the change without running into performance problems, and whether the machine size could eventually be reduced from the current 64-core machine (n1-highmem-64, Intel Haswell) to either a 64-core N2D (n2d-highmem-64, AMD Rome) or even a 48-core N2D (n2d-highmem-48, AMD Rome). To provide some extra context, we also included N2 (the new generation of Intel machines) in the analysis.

To get a purely CPU-focused performance comparison, we created four VMs:

| Name | Machine type | CPU |
|---|---|---|
| n1-64* | n1-highmem-64 | Intel Haswell, Xeon 2.30GHz |
| n2-64 | n2-highmem-64 | Intel Cascade Lake, Xeon 2.80GHz |
| n2d-48 | n2d-highmem-48 | AMD EPYC Rome, 2.25GHz |
| n2d-64 | n2d-highmem-64 | AMD EPYC Rome, 2.25GHz |

*This VM is the same type we use in production.

For the analysis, we used MySQL Community Server 5.7.35-log and this is the basic configuration:

In all cases, we attached a 1TB balanced persistent disk so the tests would get enough IO performance. We wanted to normalize all the specs so we could focus on CPU performance, so don’t pay too much attention to possible IO optimizations.
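For reproducibility, the four test VMs could be created with `gcloud compute instances create`. The sketch below only builds and prints the command lines. The zone is a hypothetical value, since the article does not say where the VMs ran, and the `--create-disk` properties (`size`, `type=pd-balanced`) are assumed to match the 1TB balanced persistent disk described above. Check them against `gcloud compute instances create --help` before running anything.

```python
import shlex
from typing import List

# Instance names and machine types from the test matrix above.
INSTANCES = {
    "n1-64": "n1-highmem-64",
    "n2-64": "n2-highmem-64",
    "n2d-48": "n2d-highmem-48",
    "n2d-64": "n2d-highmem-64",
}

# Hypothetical zone: the article does not say where the VMs ran.
ZONE = "us-central1-a"


def create_vm_command(name: str, machine_type: str, zone: str = ZONE) -> List[str]:
    """Build the argv for a gcloud call that creates one test VM.

    Besides the default boot disk, a blank 1TB balanced persistent disk
    (pd-balanced) is attached for the MySQL data directory.
    """
    return [
        "gcloud", "compute", "instances", "create", name,
        f"--zone={zone}",
        f"--machine-type={machine_type}",
        "--create-disk=size=1TB,type=pd-balanced",
    ]


if __name__ == "__main__":
    # Print the commands rather than running them, so they can be reviewed first.
    for vm_name, vm_type in INSTANCES.items():
        print(shlex.join(create_vm_command(vm_name, vm_type)))
```

Keeping everything except `--machine-type` identical across the four commands mirrors the goal of normalizing specs so only the CPU differs.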

The analysis is based on the sysbench OLTP read-only workload with an in-memory dataset, because we want to generate traffic that saturates the CPU without being limited by IO or memory.

The benchmark approach was also simple: we ran the read-only OLTP workload with 16, 32, 64, 128, and 256 threads, waiting one minute between runs. Scripts and results from the tests can be found here.
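A thread sweep like this could be driven by a small script. The sketch below is not the authors’ script (that is linked above). It assumes sysbench 1.0’s bundled `oltp_read_only` test and hypothetical connection settings, and it assumes a 300-second run length because the article does not state how long each run lasted. The runner and sleep functions are injectable, so the sweep logic can be checked without a database.

```python
import subprocess
import time
from typing import List

THREAD_COUNTS = [16, 32, 64, 128, 256]  # thread counts used in the article
PAUSE_SECONDS = 60                       # one-minute wait between runs
RUN_SECONDS = 300                        # hypothetical: run length is not stated


def sysbench_command(threads: int, run_seconds: int = RUN_SECONDS) -> List[str]:
    """Build a sysbench oltp_read_only command line for one run."""
    return [
        "sysbench", "oltp_read_only",
        "--mysql-host=127.0.0.1",  # hypothetical connection settings
        "--mysql-user=sbtest",
        "--mysql-db=sbtest",
        f"--threads={threads}",
        f"--time={run_seconds}",
        "--report-interval=10",
        "run",
    ]


def run_sweep(runner=subprocess.run, sleep=time.sleep, pause=PAUSE_SECONDS) -> List[str]:
    """Run one benchmark per thread count, pausing between runs; return each run's stdout."""
    outputs = []
    for i, threads in enumerate(THREAD_COUNTS):
        if i > 0:
            sleep(pause)  # let the server settle before the next run
        result = runner(sysbench_command(threads),
                        capture_output=True, text=True, check=True)
        outputs.append(result.stdout)
    return outputs
```

The tables would need to be created once beforehand with sysbench’s `prepare` command, sized so the dataset fits in the buffer pool and the workload stays in memory.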

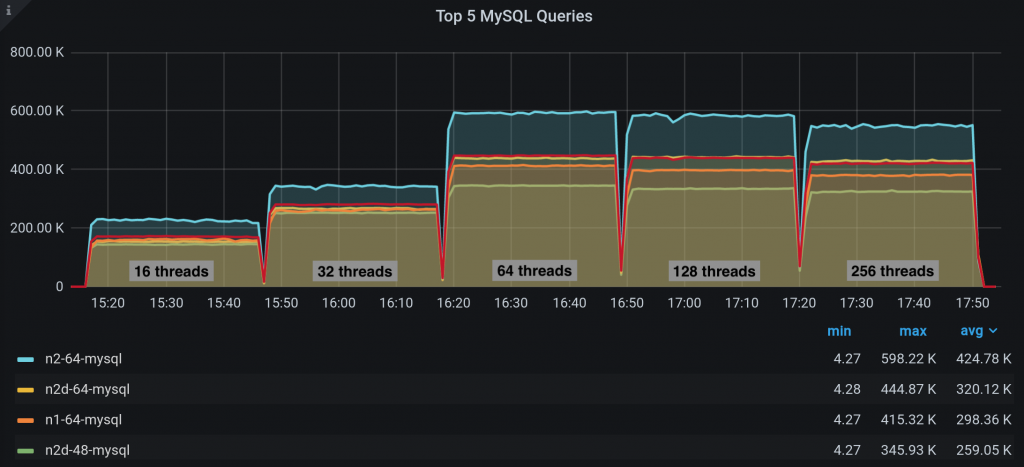

Let’s jump into the analysis. This is the number of queries each instance is able to run:

Maximum TPS by instance type and number of threads:

| Threads | N1-64 | N2-64 | N2D-48 | N2D-64 |
|---|---|---|---|---|
| 16 | 164k | 230k | 144k | |
| 32 | 265k | 347k | 252k | 268k |
| 64 | 415k | 598k | 345k | 439k |
| 128 | 398k | 591k | 335k | |
| 256 | 381k | 554k | 328k | |

Some observations:

- In all cases we reached the maximum TPS at 64 threads. This is somewhat expected given the number of CPU threads that can run in parallel: up to that point we are not forcing additional CPU context switches.

- We get a maximum of roughly 598k TPS on n2-highmem-64 and 444k TPS on n2d-highmem-64, the two largest instance types. As expected, the Intel-based architecture outperforms AMD, by about 35%.

- n1-highmem-64 (Intel Xeon) and n2d-highmem-48 (AMD EPYC) start to lose throughput once the number of threads exceeds the number of cores, while the larger 64-core instances sustain their throughput a bit better and only start to be impacted when we reach 4x the number of CPU cores.
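The peak-thread and percentage figures above can be double-checked directly from the throughput table. The sketch below transcribes the table values, marking missing cells as `None` and assuming, as the 64-thread row suggests, that the missing cells belong to the N2D-64 column. Since the text quotes 444k for N2D-64 while the table lists 439k, it computes the Intel advantage both ways.

```python
# Throughput in thousands of TPS, transcribed from the table above.
# None marks missing cells (assumed to belong to the N2D-64 column).
TPS_K = {
    "N1-64":  {16: 164, 32: 265, 64: 415, 128: 398, 256: 381},
    "N2-64":  {16: 230, 32: 347, 64: 598, 128: 591, 256: 554},
    "N2D-48": {16: 144, 32: 252, 64: 345, 128: 335, 256: 328},
    "N2D-64": {16: None, 32: 268, 64: 439, 128: None, 256: None},
}


def peak(instance):
    """Return (threads, tps_k) for the highest measured throughput, skipping missing cells."""
    measured = {t: v for t, v in TPS_K[instance].items() if v is not None}
    threads = max(measured, key=measured.get)
    return threads, measured[threads]


def advantage_pct(faster, slower):
    """Percentage by which `faster` exceeds `slower`."""
    return (faster / slower - 1) * 100


for name in TPS_K:
    threads, tps_k = peak(name)
    print(f"{name}: peak {tps_k}k TPS at {threads} threads")

# 444k (quoted in the text) vs 439k (listed in the table) for N2D-64.
print(f"N2-64 vs N2D-64 (444k): {advantage_pct(598, 444):.1f}%")
print(f"N2-64 vs N2D-64 (439k): {advantage_pct(598, 439):.1f}%")
```

Every instance peaks at 64 threads. The N2-64 advantage comes out at about 35% using the 444k figure and about 36% using the table’s 439k.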

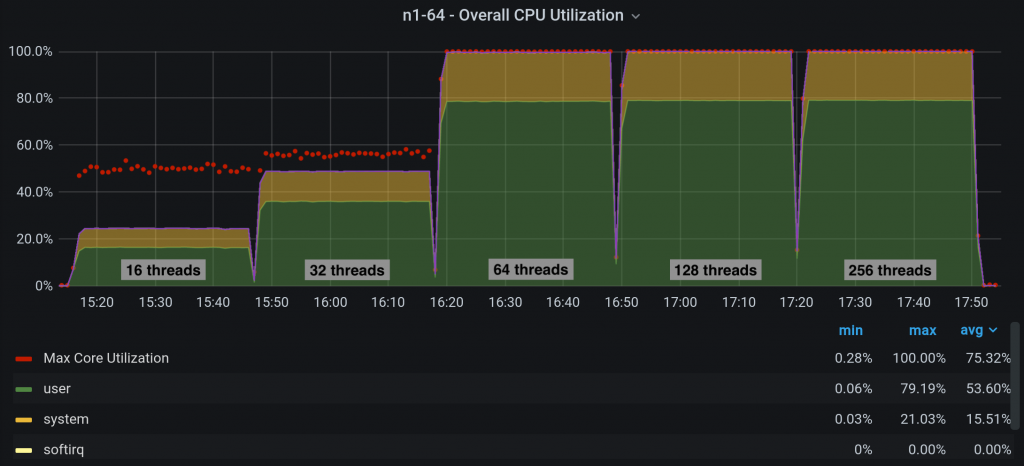

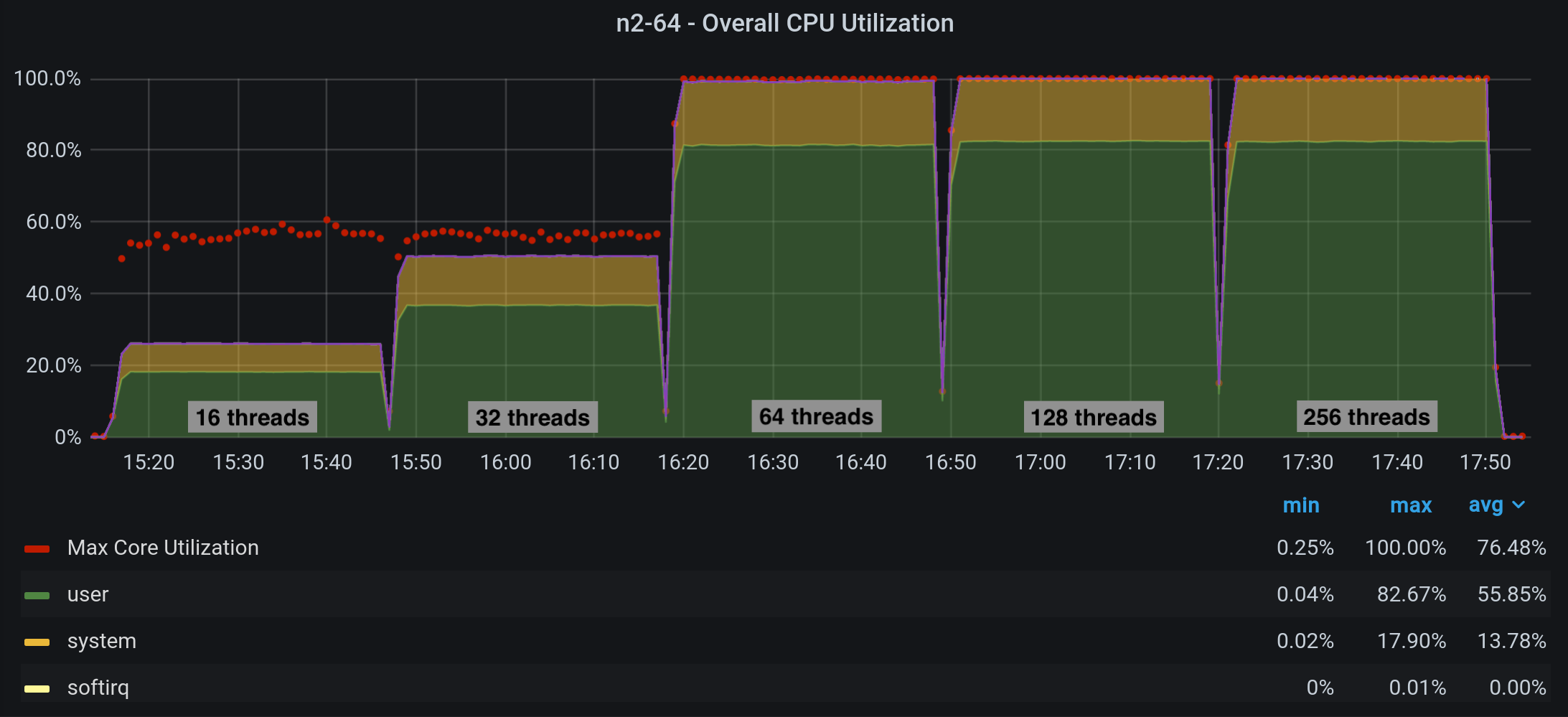

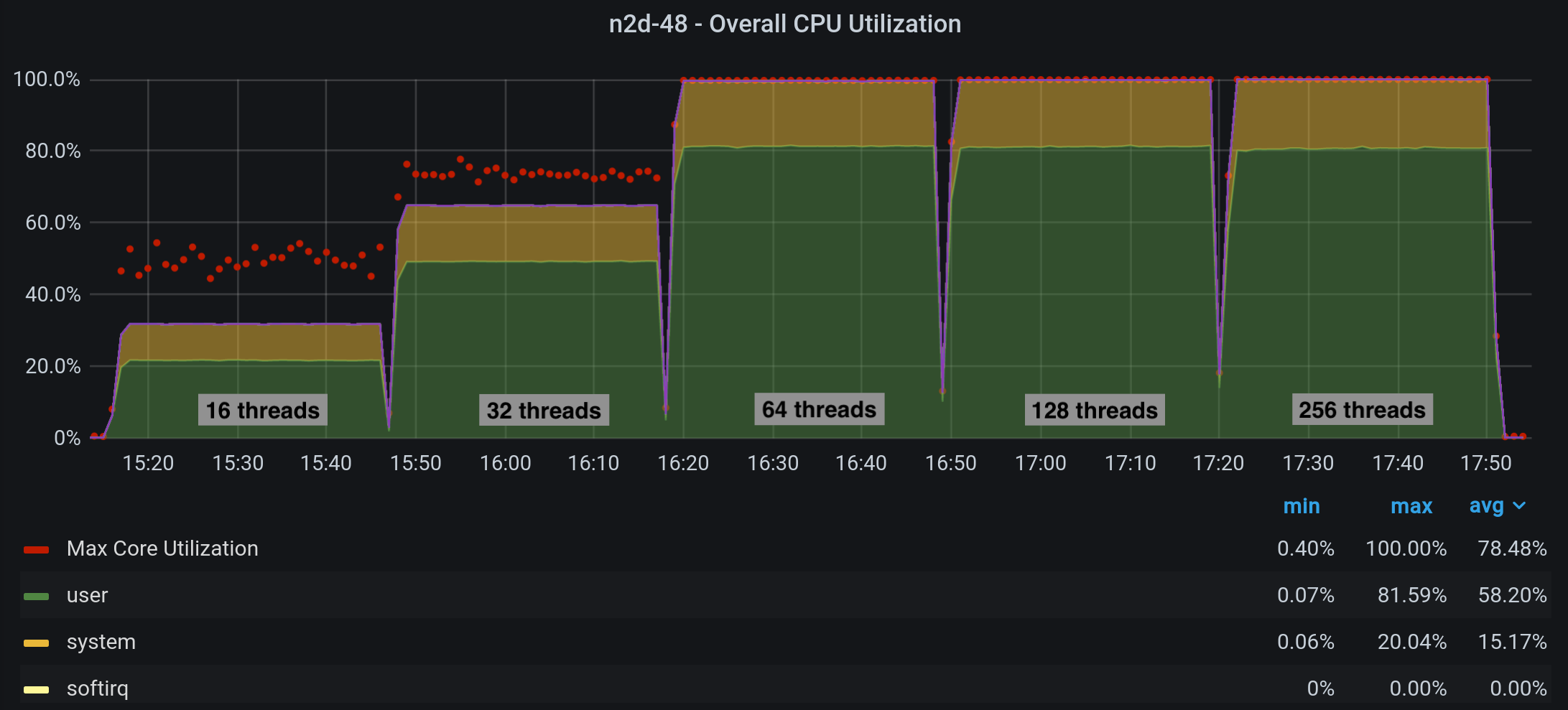

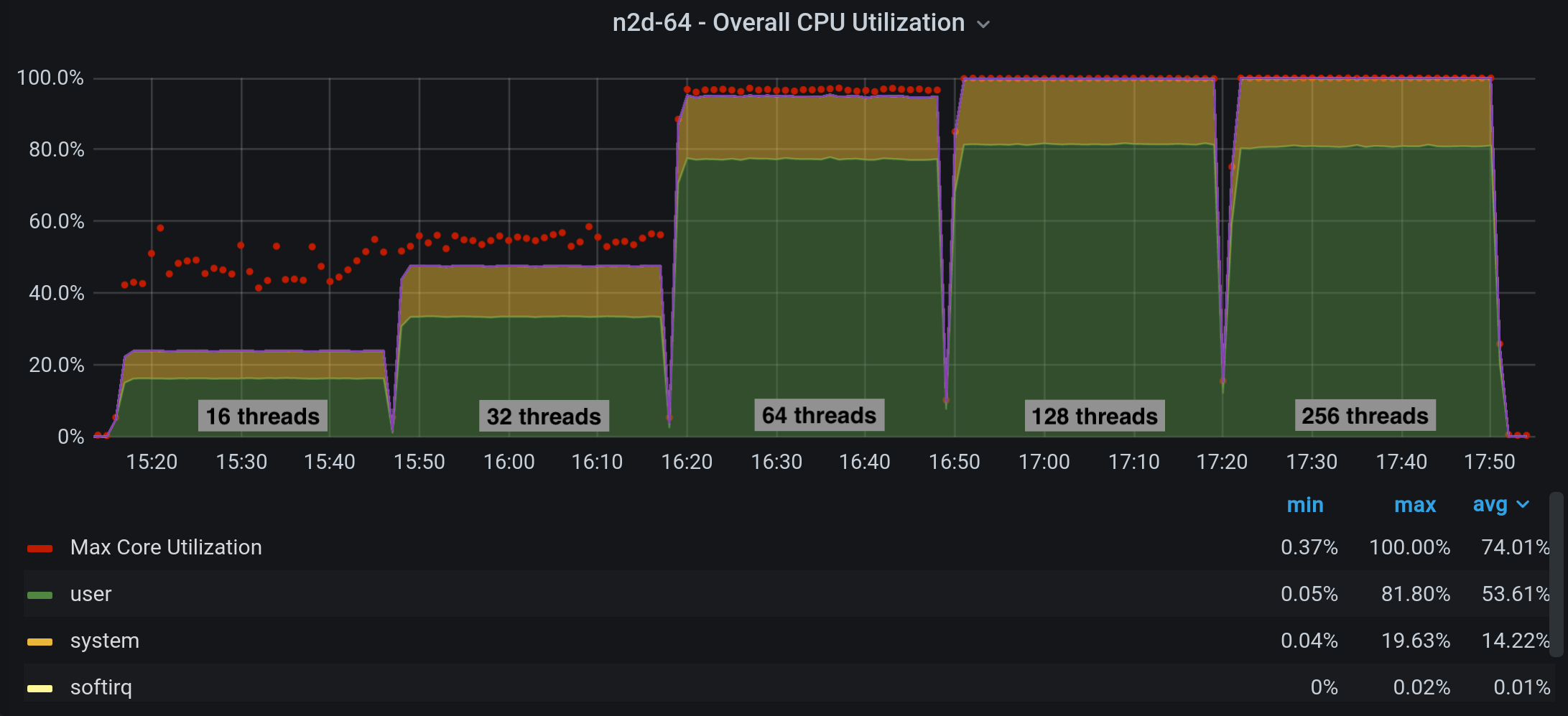

Let’s have a look at the CPU utilization on each node:

Additional observations:

- n1-highmem-64 and n2d-highmem-48 reach 100% utilization with 64 threads running.

- With 64 threads running, n2-highmem-64 reaches 100% utilization while n2d-highmem-64 is still below that. Even so, Intel provides better overall throughput, probably thanks to its faster CPU clock (2.8GHz vs 2.25GHz).

- For 128 and 256 threads all CPUs show similar utilization.

For the sake of the analysis, these are the estimated costs of each machine used (at the time of writing):

n1-highmem-64 $2,035.49/month = $0.000785297/second

n2-highmem-64 $2,549.39/month = $0.000983561/second

n2d-highmem-48 $1,698.54/month = $0.000655301/second

n2d-highmem-64 $2,231.06/month = $0.000860748/second
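The per-second figures follow from dividing the monthly price by the number of seconds in a month. A 30-day month (2,592,000 seconds) reproduces the numbers above exactly. The sketch below assumes that month length, which is an inference from the figures rather than something the article states.

```python
# Assumed 30-day month: 30 * 24 * 60 * 60 = 2,592,000 seconds.
SECONDS_PER_MONTH = 30 * 24 * 60 * 60

# Monthly prices listed above (USD, at the time of writing).
MONTHLY_USD = {
    "n1-highmem-64": 2035.49,
    "n2-highmem-64": 2549.39,
    "n2d-highmem-48": 1698.54,
    "n2d-highmem-64": 2231.06,
}


def per_second(monthly_usd: float) -> float:
    """Convert a monthly price into a per-second price."""
    return monthly_usd / SECONDS_PER_MONTH


for machine, monthly in MONTHLY_USD.items():
    print(f"{machine}: ${per_second(monthly):.9f}/second")
```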

At peak TPS, these costs work out roughly to:

n1-highmem-64 costs are $0.0000000019/trx

n2-highmem-64 costs are $0.0000000016/trx

n2d-highmem-48 costs are $0.0000000019/trx

n2d-highmem-64 costs are $0.0000000019/trx
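The cost per transaction is the per-second price divided by the peak TPS. The sketch below recomputes it from the monthly prices, assuming the same 30-day month and using the peak values quoted in the observations (444k for n2d-highmem-64). It also prints the cost per billion transactions, which is easier to compare than nine-decimal dollar amounts.

```python
SECONDS_PER_MONTH = 30 * 24 * 60 * 60  # assumed 30-day month

# (monthly cost in USD, peak TPS) per machine, from the figures above.
MACHINES = {
    "n1-highmem-64": (2035.49, 415_000),
    "n2-highmem-64": (2549.39, 598_000),
    "n2d-highmem-48": (1698.54, 345_000),
    "n2d-highmem-64": (2231.06, 444_000),
}


def cost_per_trx(monthly_usd: float, peak_tps: int) -> float:
    """USD per transaction when the machine runs flat out at its peak TPS."""
    return monthly_usd / SECONDS_PER_MONTH / peak_tps


for machine, (monthly, tps) in MACHINES.items():
    usd = cost_per_trx(monthly, tps)
    print(f"{machine}: ${usd:.1e}/trx (${usd * 1e9:.2f} per billion trx)")
```

Rounded to one significant figure, this matches the list above: about $1.6 per billion transactions for n2-highmem-64 and about $1.9 for the other three.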

Conclusions

While this is not an exhaustive analysis of all the implications of CPU performance for MySQL workloads, it gives us a very good understanding of cost vs. performance.

- The n1 family, currently used in production, shows very similar performance to the n2d family (AMD) with the same number of cores. This changes a lot when we move to the n2 family (Intel), which outperforms all the other instances.

- While moving to n2d-highmem-48 would cut costs by ~$4k/year, the performance penalty is close to 20%.

- Comparing the cost per transaction at peak load, n2-64 and n2d-64 are fairly close ($0.0000000016 vs $0.0000000019 per trx), but n2-64 gives us 35% more throughput. This is definitely worth considering if we plan to squeeze the most out of the CPU.

- If the plan is to move to the newer generation, n2d-highmem-64 is a very good choice for balancing performance and cost, but n2-highmem-64 gives much better performance per dollar spent.
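The n2d-highmem-48 trade-off above can be quantified from the monthly prices and the 64-thread peak TPS figures. The sketch below simply restates the article’s numbers as constants; the choice of the 64-thread peaks as the basis for the penalty is an assumption.

```python
# Monthly prices (USD) and 64-thread peak TPS for the two machines being compared.
N1_MONTHLY_USD, N2D48_MONTHLY_USD = 2035.49, 1698.54
N1_PEAK_TPS, N2D48_PEAK_TPS = 415_000, 345_000

# Yearly savings from switching n1-highmem-64 -> n2d-highmem-48.
annual_savings_usd = (N1_MONTHLY_USD - N2D48_MONTHLY_USD) * 12
# Relative loss of peak throughput from the same switch.
throughput_penalty_pct = (1 - N2D48_PEAK_TPS / N1_PEAK_TPS) * 100

print(f"Annual savings: ${annual_savings_usd:,.0f}")
print(f"Peak throughput penalty: {throughput_penalty_pct:.1f}%")
```

With these inputs the savings come to about $4,043 per year, and the drop in peak throughput is about 17%.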
