Deep Image Prior

source link: https://dmitryulyanov.github.io/deep_image_prior


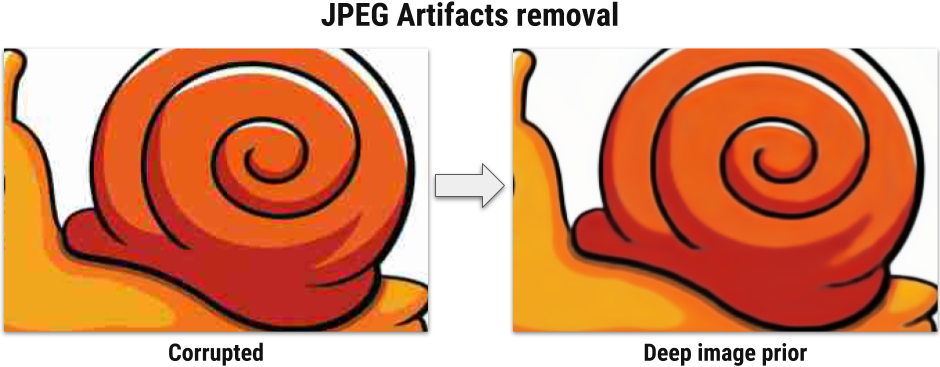

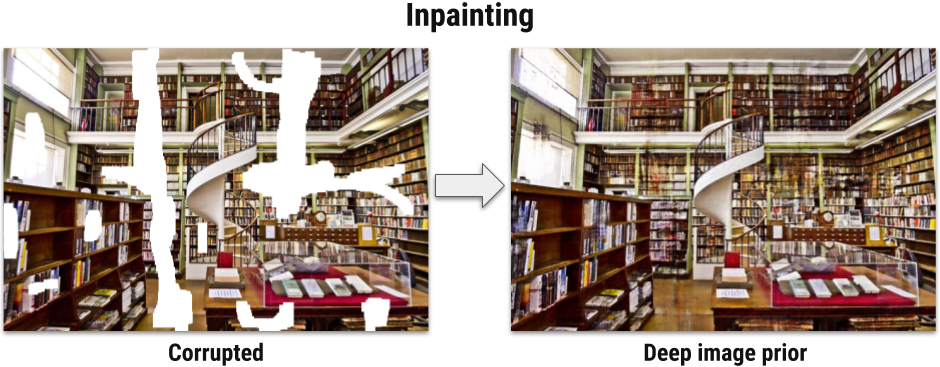

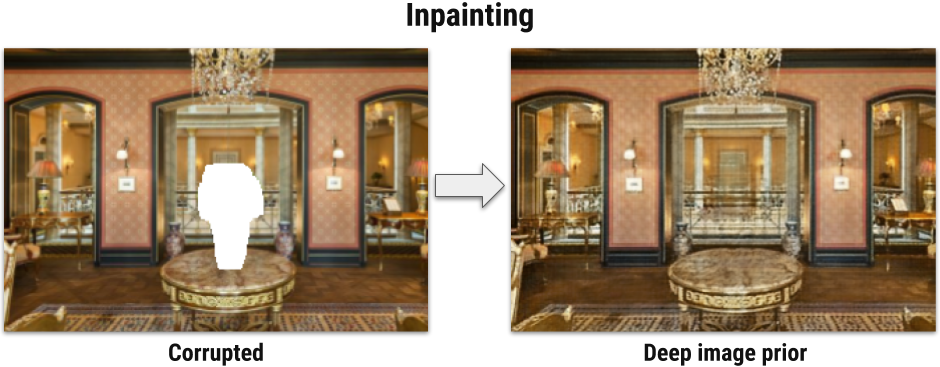

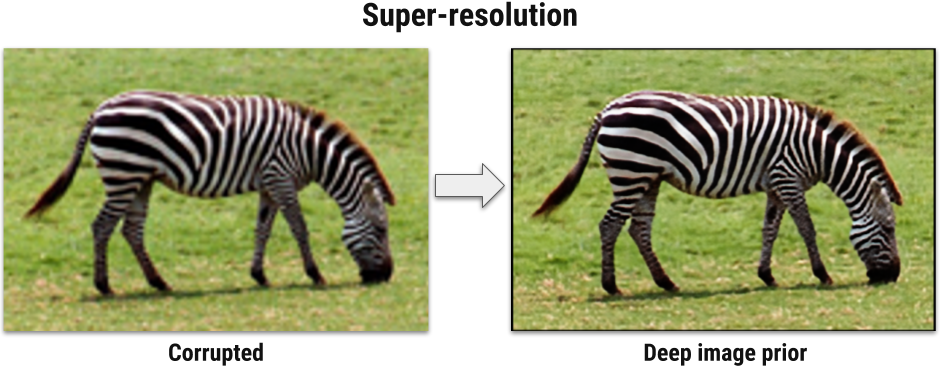

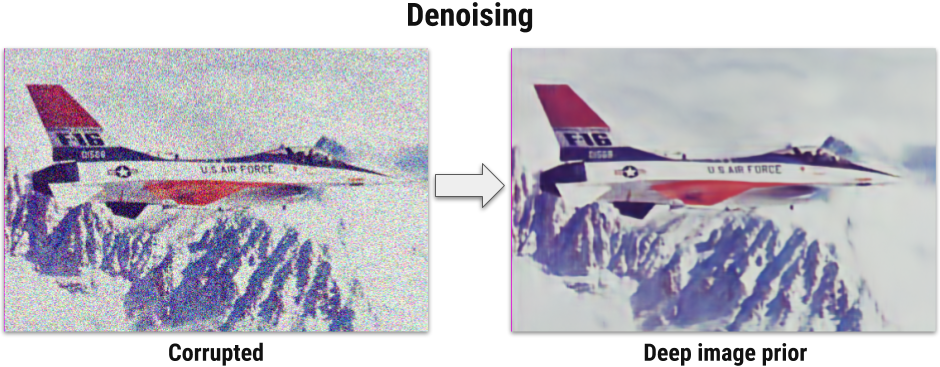

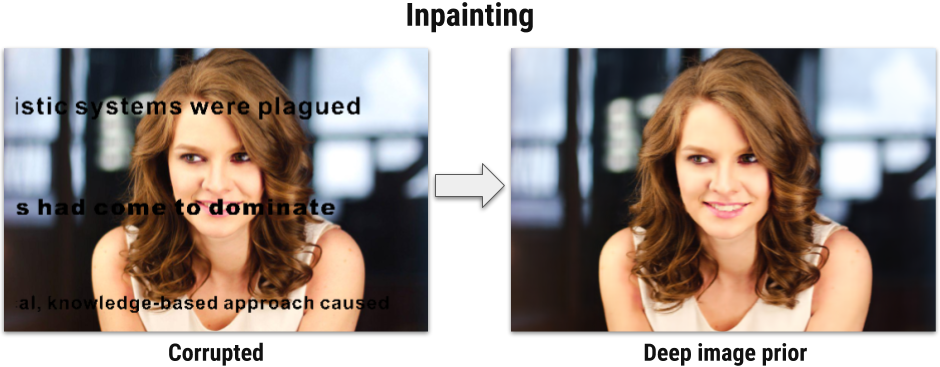

Deep convolutional networks have become a popular tool for image generation and restoration. Generally, their excellent performance is attributed to their ability to learn realistic image priors from a large number of example images. In this paper, we show that, on the contrary, the structure of a generator network is sufficient to capture a great deal of low-level image statistics prior to any learning. To show this, we use a randomly initialized neural network as a handcrafted prior, with excellent results in standard inverse problems such as denoising, super-resolution, and inpainting. Furthermore, the same prior can be used to invert deep neural representations in order to diagnose them, and to restore images from flash/no-flash input pairs.

Apart from its diverse applications, our approach highlights the inductive bias captured by standard generator network architectures. It also bridges the gap between two very popular families of image restoration methods: learning-based methods using deep convolutional networks and learning-free methods based on handcrafted image priors such as self-similarity.

In image restoration problems, the goal is to recover the original image $x$ given a corrupted image $x_0$. Such problems are often formulated as an optimization task:

$$\min_x E(x; x_0) + R(x), \tag{1}$$

where $E(x; x_0)$ is a data term and $R(x)$ is an image prior. The data term $E(x; x_0)$ is usually easy to design for a wide range of problems, such as super-resolution, denoising, and inpainting, while the image prior $R(x)$ is much harder to specify. The current trend is to capture the prior $R(x)$ with a ConvNet trained on a large number of examples.
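To make the two terms in (1) concrete, here is a minimal NumPy sketch on 1-D signals. The squared-error data terms for denoising, inpainting (with a binary `mask` of known pixels), and super-resolution are common choices assumed here for illustration; `downsample` (average pooling) and the smoothness prior $R(x) = \lambda \sum_i (x_{i+1} - x_i)^2$ are hypothetical stand-ins for a real degradation model and a real hand-crafted prior. `restore_denoise` then minimizes (1) for denoising by plain gradient descent over $x$.

```python
import numpy as np


def e_denoise(x, x0):
    """Denoising data term: the estimate should stay close to the noisy input."""
    return float(np.sum((x - x0) ** 2))


def e_inpaint(x, x0, mask):
    """Inpainting data term: only known pixels (mask == 1) are compared."""
    return float(np.sum(((x - x0) * mask) ** 2))


def downsample(x, factor):
    """Hypothetical degradation: average-pool a 1-D signal by `factor`."""
    return x.reshape(-1, factor).mean(axis=1)


def e_superres(x, x0, factor):
    """Super-resolution data term: the downsampled estimate should match x0."""
    return float(np.sum((downsample(x, factor) - x0) ** 2))


def restore_denoise(x0, lam=1.0, lr=0.05, steps=2000):
    """Minimize e_denoise(x, x0) + R(x) by gradient descent, where the
    hand-crafted prior R(x) = lam * sum((x[i+1] - x[i])**2) is an assumption."""
    x = x0.copy()
    for _ in range(steps):
        diff = np.diff(x)                  # x[i+1] - x[i]
        grad_r = np.zeros_like(x)
        grad_r[:-1] -= 2 * lam * diff      # derivative of each squared difference w.r.t. x[i]
        grad_r[1:] += 2 * lam * diff       # ... and w.r.t. x[i+1]
        grad_e = 2 * (x - x0)              # derivative of the data term
        x -= lr * (grad_e + grad_r)
    return x


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    clean = np.sin(np.linspace(0, 2 * np.pi, 64))
    noisy = clean + 0.3 * rng.standard_normal(64)
    restored = restore_denoise(noisy)
    print("MSE noisy:   ", np.mean((noisy - clean) ** 2))
    print("MSE restored:", np.mean((restored - clean) ** 2))
```

The data terms stay this short for each task; all of the modeling burden sits in the choice of $R$, which here is a deliberately crude smoothness penalty.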

We first note that, for a surjective mapping $g: \theta \mapsto x$, the following procedure is in theory equivalent to (1):

$$\min_\theta E(g(\theta); x_0) + R(g(\theta)).$$
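A toy numerical check of this equivalence, under illustrative assumptions: $g(\theta) = A\theta$ is linear with a wide random matrix $A$ (full row rank almost surely, hence surjective), and the objective is the quadratic $J(x) = \lVert x - x_0 \rVert^2 + \lambda \lVert D x \rVert^2$ with a finite-difference matrix $D$. Gradient descent over $\theta$ should reach the same minimizer as a direct solve over $x$. All names below are hypothetical.

```python
import numpy as np

n, lam = 16, 1.0
D = np.diff(np.eye(n), axis=0)    # (n-1, n): (D @ x)[i] = x[i+1] - x[i]
H = np.eye(n) + lam * D.T @ D     # J(x) has gradient 2 * (H @ x - x0)


def objective(x, x0):
    """J(x) = E(x; x0) + R(x), with E = squared error and R = lam * ||D x||^2."""
    return float(np.sum((x - x0) ** 2) + lam * np.sum((D @ x) ** 2))


def minimize_over_x(x0):
    """Direct minimizer in image space: setting the gradient to zero gives H x = x0."""
    return np.linalg.solve(H, x0)


def minimize_over_theta(x0, A, lr=0.02, steps=5000):
    """Minimize J(g(theta)) for the linear, surjective g(theta) = A @ theta."""
    theta = np.zeros(A.shape[1])
    for _ in range(steps):
        grad_x = 2 * (H @ (A @ theta) - x0)   # dJ/dx evaluated at x = g(theta)
        theta -= lr * (A.T @ grad_x)          # chain rule: dJ/dtheta = A^T dJ/dx
    return A @ theta


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x0 = rng.standard_normal(n)
    A = rng.standard_normal((n, 64)) / np.sqrt(64)   # 16 x 64, full row rank
    x_img = minimize_over_x(x0)
    x_par = minimize_over_theta(x0, A)
    print(objective(x_img, x0), objective(x_par, x0))
```

Only the optimum coincides: the sequence of images visited on the way depends on $A$, which is why the choice of $g$ matters in practice.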

In practice, $g$ dramatically changes how the image space is searched by an optimization method. Furthermore, by selecting a "good" (possibly injective) mapping $g$, we could get rid of the prior term. We define $g(\theta)$ as $f_\theta(z)$, where $f$ is a deep ConvNet with parameters $\theta$ and $z$ is a fixed input, leading to the formulation

$$\min_\theta E(f_\theta(z); x_0).$$

Here, the network $f_\theta$ is initialized randomly, and the input $z$ is filled with noise and kept fixed.

In other words, instead of searching for the answer in the image space, we now search for it in the space of the neural network's parameters. We emphasize that we never use a pretrained network or an image database: only the corrupted image $x_0$ is used in the restoration process.
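The mechanics of this formulation can be sketched on a 1-D signal in NumPy, under strong simplifying assumptions: $f_\theta$ is a hypothetical two-layer 1-D ConvNet (convolution, ReLU, convolution) with hand-written backpropagation, $E$ is the mean squared error used for denoising, and the optimizer is plain gradient descent. This is not the architecture or training setup from the paper; it only illustrates that $z$ is drawn once and kept fixed, $\theta$ is randomly initialized and is the only thing updated, and the only data used is $x_0$.

```python
import numpy as np


def im2col(x, k):
    """Rows are the length-k windows of x (shape C x n, zero 'same' padding),
    flattened per position: result has shape (n, C * k)."""
    C, n = x.shape
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad)))
    return np.stack([xp[:, i:i + k].ravel() for i in range(n)])


def col2im(cols, C, n, k):
    """Adjoint of im2col: scatter-add each row back onto a (C, n) array."""
    pad = k // 2
    xp = np.zeros((C, n + 2 * pad))
    for i in range(n):
        xp[:, i:i + k] += cols[i].reshape(C, k)
    return xp[:, pad:pad + n]


def deep_prior_fit(x0, channels=16, z_channels=8, k=3, lr=0.01, steps=1000, seed=0):
    """Fit a randomly initialized conv -> ReLU -> conv network f_theta(z) to x0
    by minimizing E = mean((f_theta(z) - x0)**2) over theta only."""
    rng = np.random.default_rng(seed)
    n = x0.shape[0]
    z = rng.standard_normal((z_channels, n))   # fixed noise input, never updated
    p1 = im2col(z, k)                          # constant because z is fixed
    w1 = rng.standard_normal((channels, z_channels * k)) / np.sqrt(z_channels * k)
    b1 = np.zeros(channels)
    w2 = rng.standard_normal((1, channels * k)) / np.sqrt(channels * k)
    b2 = np.zeros(1)
    losses = []
    for _ in range(steps):
        # Forward pass.
        a1 = p1 @ w1.T + b1                    # (n, channels)
        h = np.maximum(a1, 0.0)                # ReLU
        p2 = im2col(h.T, k)                    # (n, channels * k)
        y = (p2 @ w2.T + b2)[:, 0]             # (n,) current restored signal
        losses.append(float(np.mean((y - x0) ** 2)))
        # Backward pass through both convolutions.
        dy = (2.0 / n) * (y - x0)[:, None]     # (n, 1)
        dw2 = dy.T @ p2
        db2 = dy.sum(axis=0)
        dh = col2im(dy @ w2, channels, n, k).T # (n, channels)
        da1 = dh * (a1 > 0)
        dw1 = da1.T @ p1
        db1 = da1.sum(axis=0)
        # Gradient step on theta = (w1, b1, w2, b2); z and x0 stay untouched.
        w1 -= lr * dw1
        b1 -= lr * db1
        w2 -= lr * dw2
        b2 -= lr * db2
    return y, losses


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    clean = np.sin(np.linspace(0, 2 * np.pi, 64))
    noisy = clean + 0.3 * rng.standard_normal(64)
    restored, losses = deep_prior_fit(noisy)
    print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
```

The number of `steps` matters: with enough capacity and iterations, $f_\theta(z)$ can eventually reproduce the noise in $x_0$ as well, and this sketch makes no claim about when to stop.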

See the paper and supplementary material for details.

