AWS Audit and Security Strengthening

source link: https://dzone.com/articles/aws-audit-and-security-strengthening

How AWS's rich set of services helps users with auditing and securing their infrastructure to build a strong foundation for the enterprise security framework.

After my previous article on AWS DDoS resiliency, I decided to cover some of the AWS services that should be part of any enterprise architecture. These services help raise the overall security posture and meet core security and compliance requirements.

AWS names security as one of the pillars of its Well-Architected Framework and, to support that, provides a wide range of features and services. As consumers and business owners, we need to understand that this is a shared responsibility model: AWS contributes by developing new features based on its experience across multiple domains, and it is up to us to figure out how to reinforce our security framework using these capabilities.

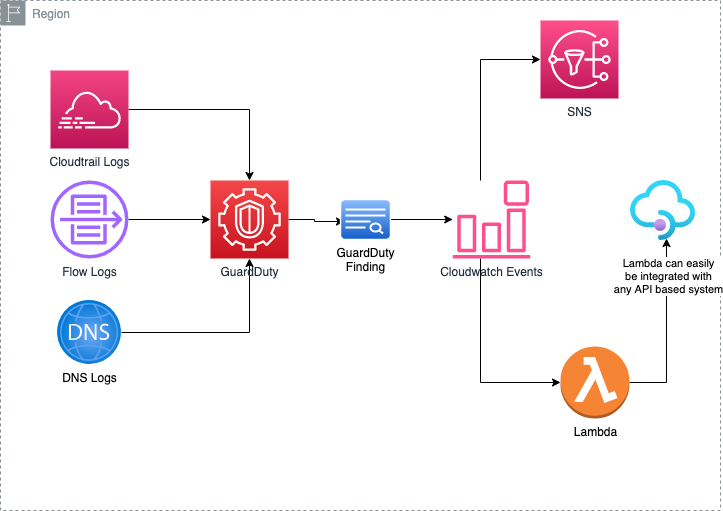

AWS GuardDuty

You may have noticed news of incidents involving resource hijacking, bitcoin mining, or unauthorized creation of new IAM entities in AWS accounts. Cybercrime has been on the rise for years and shows no sign of slowing down. The arrival of the COVID-19 pandemic in 2020 only accelerated the trend.

For enterprises, it becomes imperative to enhance their monitoring and remediation techniques. AWS GuardDuty is one such tool.

Think of it as a “guard always on duty.” It is a threat detection service that uses AI/ML and external threat intelligence feeds behind the scenes to identify suspicious behavior or abnormal activity around the supported services. It observes and learns the usual activity pattern and builds a model from it to identify deviations or anomalies. Depending on the workload, it needs some initial time to learn the pattern and create a baseline.

It can analyze data sources such as Amazon S3, CloudTrail, VPC Flow Logs, and DNS logs. Some of the items it can monitor include:

- Unusual API hits

- Instance or account compromise

- Bucket compromise

- Failed logins

- Suspicious traffic or traffic spikes (for example, coming from a VPN)

One very powerful feature of this service is that it can integrate with Amazon EventBridge (formerly CloudWatch Events). With this, you can turn a detection-only service into a reactive system that takes automatic actions on your behalf, such as blocking IP addresses using a network ACL (NACL).

Moreover, it can be integrated with third-party services like Splunk, QRadar, and CrowdStrike, and it provides centralized threat detection management across multiple AWS accounts.
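
To make the "reactive system" idea concrete, here is a minimal sketch of what a Lambda function behind an EventBridge rule might do with a GuardDuty finding. The event pattern and the finding field path follow the GuardDuty finding format as I understand it; the severity threshold, the `acl-EXAMPLE` ID, the rule number, and all function names are assumptions made for illustration. The EC2 client is passed in, so the sketch runs without AWS access.

```python
import ipaddress

# EventBridge event pattern matching GuardDuty findings with severity >= 7.
# The threshold of 7 is an assumption chosen for illustration.
GUARDDUTY_HIGH_SEVERITY_PATTERN = {
    "source": ["aws.guardduty"],
    "detail-type": ["GuardDuty Finding"],
    "detail": {"severity": [{"numeric": [">=", 7]}]},
}


def extract_remote_ip(event):
    """Return the remote IPv4 address of a network-connection finding, or None."""
    action = event.get("detail", {}).get("service", {}).get("action", {})
    details = action.get("networkConnectionAction", {}).get("remoteIpDetails", {})
    ip = details.get("ipAddressV4")
    if ip is None:
        return None
    try:
        ipaddress.IPv4Address(ip)  # reject malformed addresses
    except ValueError:
        return None
    return ip


def build_nacl_deny_entry(network_acl_id, ip, rule_number):
    """Build keyword arguments for the EC2 create_network_acl_entry call."""
    return {
        "NetworkAclId": network_acl_id,
        "RuleNumber": rule_number,
        "Protocol": "-1",       # all protocols
        "RuleAction": "deny",
        "Egress": False,        # inbound rule
        "CidrBlock": f"{ip}/32",
    }


def handle_finding(event, ec2_client=None, network_acl_id="acl-EXAMPLE", rule_number=90):
    """Deny the offending IP in the given NACL; returns the entry that was built."""
    ip = extract_remote_ip(event)
    if ip is None:
        return None  # finding type carries no remote IP; nothing to block
    entry = build_nacl_deny_entry(network_acl_id, ip, rule_number)
    if ec2_client is not None:
        # In a real Lambda this would be boto3.client("ec2").
        ec2_client.create_network_acl_entry(**entry)
    return entry
```

In a real deployment you would also need to manage rule numbers (each NACL entry needs a unique one) and expire old deny entries, which this sketch leaves out.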

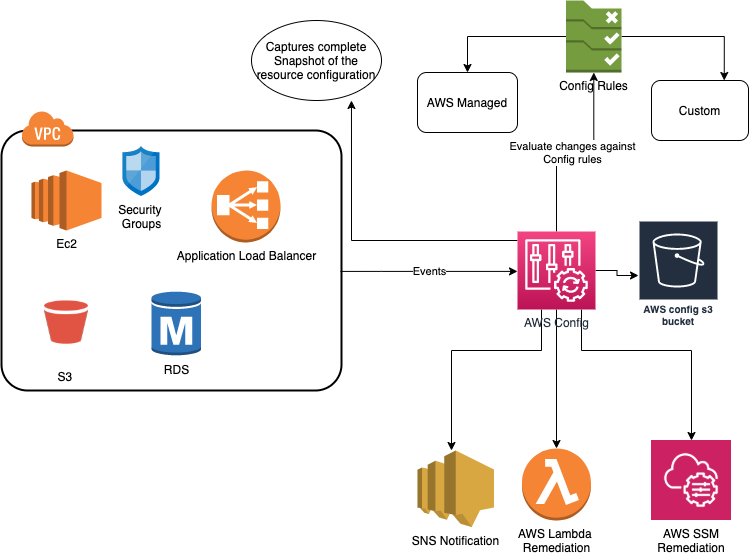

AWS Config

In addition to AWS GuardDuty, AWS Config is another tool in the same league, which helps audit and assess your resource configuration against its desired state. It can be thought of as a tracker that monitors the supported resources in your AWS account and records every change to their configuration. For example, if a security group is modified so that the SSH port is open to everyone (0.0.0.0/0), AWS Config can track the change and, if required, send notifications to the cloud team.

With every modification, it creates a configuration item at that point in time and stores it in an S3 bucket with the following attributes:

- Modification details

- Who made it

- Relationship with other AWS resources

- When it changed

It provides a snapshot of your overall AWS resource configuration, which makes it a tool of choice wherever you have audit and compliance requirements. Furthermore, it can be integrated with a CMDB tool using other AWS services, contributing to the overall picture of your infrastructure (which may be deployed across multiple clouds).

It becomes more powerful with Config rules, through which users define the desired state of the infrastructure. AWS Config monitors changes and evaluates them against these rules. There are two types of rules:

- AWS Managed Rules: Pre-built rules that AWS provides and maintains. Users can select from these rules to start with.

- Customer Managed Rules: Rules defined and configured by the customer as per their requirements. AWS Lambda can be invoked to run the logic required for these custom rules.
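
As an illustration of the logic a custom rule's Lambda function might run, the sketch below evaluates the "SSH open to everyone" case for a security group. The field names (`ipPermissions`, `ipv4Ranges`, `cidrIp`, and so on) are my assumption of how a security group appears inside a Config configuration item, and the function names are hypothetical; verify the shape against a real configuration item before relying on it.

```python
OPEN_CIDRS = {"0.0.0.0/0", "::/0"}
SSH_PORT = 22


def _covers_ssh(permission):
    """True if the ingress permission includes TCP port 22."""
    protocol = str(permission.get("ipProtocol", ""))
    if protocol == "-1":  # "-1" means all protocols and all ports
        return True
    if protocol != "tcp":
        return False
    from_port = permission.get("fromPort")
    to_port = permission.get("toPort")
    if from_port is None or to_port is None:
        return False
    return from_port <= SSH_PORT <= to_port


def _is_open_to_world(permission):
    """True if any IPv4 or IPv6 range on the permission allows every address."""
    cidrs = {r.get("cidrIp") for r in permission.get("ipv4Ranges", [])}
    cidrs |= {r.get("cidrIpv6") for r in permission.get("ipv6Ranges", [])}
    return bool(cidrs & OPEN_CIDRS)


def evaluate_security_group(configuration_item):
    """Return an AWS Config compliance type for one configuration item."""
    if configuration_item.get("resourceType") != "AWS::EC2::SecurityGroup":
        return "NOT_APPLICABLE"
    permissions = configuration_item.get("configuration", {}).get("ipPermissions", [])
    for permission in permissions:
        if _covers_ssh(permission) and _is_open_to_world(permission):
            return "NON_COMPLIANT"
    return "COMPLIANT"
```

A real rule would wrap this in a Lambda handler and report the result back to AWS Config with the `put_evaluations` API.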

Furthermore, it can be integrated with other AWS services to remediate unintended changes, send notifications, or feed external services like your CMDB (Configuration Management Database). The following AWS services can be integrated with AWS Config to provide a remediation framework:

- AWS Lambda

- AWS Systems Manager (SSM)

- Amazon EventBridge

The AWS Config dashboard can also be used to see non-compliant changes. It also comes with aggregators (which aggregate data from multiple accounts and regions) and an advanced query feature, making it a complete tool in itself.

AWS Inspector

This service focuses mainly on EC2 instances, the operating systems running on them, and their network configuration. Its main purpose is to run vulnerability assessments and security tests on these instances against CVE (Common Vulnerabilities and Exposures), CIS (Center for Internet Security) benchmarks, and Amazon security best practices. At the end of the assessment, it provides a detailed report ordered by priority. Reports can be accessed through the AWS console or the Inspector APIs.

Before running it, the user sets up the assessment by selecting its type: “Network Assessment” or “Host Assessment.” The user then creates templates, which mostly comprise the rules packages, the target, and the duration of the run. An assessment run can last anywhere from 3 minutes to a full day. Assessments can be agentless or agent-based, and an agent-based run provides a more detailed inspection. Network assessments can be done without installing any agent; host assessments and richer network assessments require one. AWS recommends running it weekly.
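
The template described above can be sketched as a small helper that assembles and validates the parameters before they are sent to the Inspector API. This assumes the classic Inspector `create_assessment_template` call and its parameter names; the helper name, the one-hour default, and the placeholder ARNs in the usage note are hypothetical.

```python
MIN_DURATION_SECONDS = 180      # 3 minutes
MAX_DURATION_SECONDS = 86_400   # 24 hours


def build_assessment_template(target_arn, name, rules_package_arns, duration_seconds=3600):
    """Return keyword arguments for an Inspector assessment template.

    The default of one hour is an assumption for illustration.
    """
    if not MIN_DURATION_SECONDS <= duration_seconds <= MAX_DURATION_SECONDS:
        raise ValueError(
            f"duration must be between {MIN_DURATION_SECONDS} "
            f"and {MAX_DURATION_SECONDS} seconds"
        )
    if not rules_package_arns:
        raise ValueError("at least one rules package is required")
    return {
        "assessmentTargetArn": target_arn,
        "assessmentTemplateName": name,
        "durationInSeconds": duration_seconds,
        "rulesPackageArns": list(rules_package_arns),
    }
```

With boto3 available, the result could be passed along as `inspector_client.create_assessment_template(**template)`, using the real target and rules package ARNs from your account and region.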

Trusted Advisor

Trusted Advisor's checks fall into five major categories:

- Cost optimizations

- Performance

- Security

- Fault tolerance

- Service limits

The Basic and Developer support plans include a free version that comes with seven core checks:

- S3 bucket permission

- Security groups — specific ports unrestricted

- IAM use

- MFA on root account

- EBS public snapshots

- RDS public snapshots

- Service limits checks (50)

The Business and Enterprise support plans come with more features and access to the AWS Support API. Users get all 115 checks (14 cost optimization, 17 security, 24 fault tolerance, 10 performance, and 50 service limits) and recommendations. For a complete list of checks and descriptions, explore Trusted Advisor Best Practices.

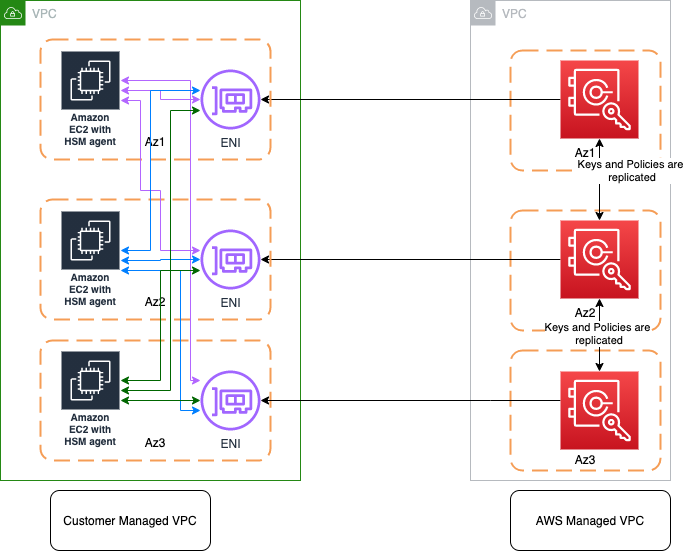

CloudHSM

Think of an HSM as a locker with a tamper detection and response mechanism, in which you keep your most precious jewels (in our case, cryptographic material). It allows customers to securely generate, store, and manage the cryptographic keys used for encrypting their data, and the keys are accessible only to them. In fact, even AWS has only limited access to the service, to perform specific tasks:

- Monitoring and maintaining the health and availability of HSM

- Taking encrypted backups

- Extracting and publishing audit logs to CloudWatch Logs

The flip side is that CloudHSM has very limited native integration with other AWS services. It is purpose-built hardware dedicated to managing cryptographic material, and it is validated against the Federal Information Processing Standard FIPS 140-2 Level 3.

Another important thing to remember is that a VPC is required in order to provision AWS CloudHSM. As standard practice, for isolation, the HSM is provisioned in a separate VPC (the HSM VPC) from the one that contains the rest of your services; that VPC is managed by AWS and you have no visibility into it. ENIs are provisioned in the customer VPC for network connectivity with CloudHSM. CloudHSM does not provide high availability by default: customers need to provision at least two HSMs in different AZs, and the HSM client then handles failover automatically.

CloudHSM is used where you have a specific requirement of having a single tenant or isolated appliance for keeping your keys. This is also one important factor that differentiates it from KMS, which is more of a shared service. Some of the use cases include offloading SSL/TLS processing, TDE for Oracle database, etc.

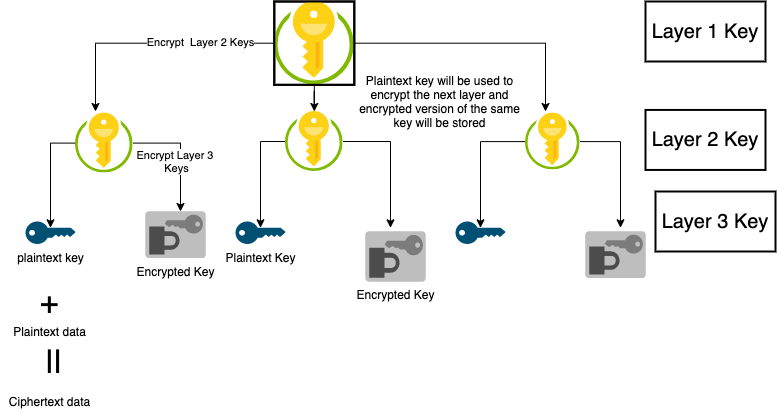

AWS KMS

Before I get into KMS, I want to introduce a term called “envelope encryption,” as it forms the basis of a KMS irrespective of the cloud provider. Envelope encryption is the process of using multiple layers of keys to encrypt data. It can be seen as a hierarchical structure of keys where the top-layer key encrypts the second-layer key, the second-layer key encrypts the third-layer key, and so on.

The bottom layer key will be used to encrypt the actual data.

AWS KMS provides functionality to create, manage, and delete the keys used for the encryption of data. It is FIPS 140-2 Level 2 compliant; some of its functionality supports Level 3 as well, but on the whole it can be treated as a Level 2 compliant service.

This is more of a shared service that is completely managed by AWS at the backend. This has the benefit that it supports integration with many of the AWS services like S3 natively. KMS provides both symmetric and asymmetric encryption. Users can create policies in order to have granular control on the access and usage of these keys.

It also supports multi-region keys where the data can be encrypted in one region and decrypted in another region.

In the case of AWS KMS, consider the top-layer key the “root key,” the Layer 2 key the CMK (customer master key), and the Layer 3 key the DEK (data encryption key). This is only for the purpose of understanding: internally, the hierarchy is much longer, but we are interested in the CMK and the DEK, as that is the part the customer handles.

CMK or AWS KMS Keys

A KMS key can be “AWS managed,” “AWS owned,” or “customer managed.” Consider it a logical representation of the physical cryptographic key. A customer managed key is created and managed entirely by the customer, which provides more flexibility. AWS managed keys have a compulsory rotation policy (every year; it was every three years before May 2022), whereas for customer managed keys rotation is optional. We do not cover AWS owned keys in this article.

The best part of a CMK is that it never leaves KMS unencrypted. To encrypt data with a CMK, you send the data and the ARN of the KMS key to KMS, and the response contains the ciphertext.

The only limitation of a CMK is that it can’t directly encrypt more than 4 KB of data. I am not sure whether to call it a limitation or part of the functionality. Along with encryption and decryption, CMKs are also responsible for generating DEKs, returning both an encrypted version and a plaintext version of each DEK. CMKs can be symmetric or asymmetric.

Note: AWS KMS is replacing the term customer master key (CMK) with AWS KMS key.

DEK (Data Encryption Keys)

These are the keys that are used to encrypt the actual data. KMS doesn’t store these keys. The general modus operandi is to encrypt the data with the plaintext DEK and then discard that plaintext key. Store the ciphertext of the key (the encrypted DEK) along with the encrypted data.

On a decryption request, the encrypted key is first converted back into a plaintext key by sending it to AWS KMS, and the plaintext key is then used to decrypt the data itself. The best practice is to have a separate DEK for each record of data that you want to encrypt.
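
The DEK workflow above can be sketched end to end in plain Python. Everything here is a stand-in: `FakeKms` is a hypothetical in-memory substitute for KMS (real `generate_data_key` calls also take a key ID and key spec), and `_keystream_xor` is a toy cipher used only so the example runs with the standard library. It is not secure and must not be used for real data; a real implementation would use an authenticated cipher such as AES-GCM.

```python
import hashlib
import secrets


def _keystream_xor(key: bytes, data: bytes) -> bytes:
    """Toy cipher: XOR data with a SHA-256 counter keystream. NOT secure."""
    out = bytearray()
    for offset in range(0, len(data), 32):
        counter = (offset // 32).to_bytes(8, "big")
        block = hashlib.sha256(key + counter).digest()
        chunk = data[offset:offset + 32]
        out.extend(b ^ k for b, k in zip(chunk, block))
    return bytes(out)


class FakeKms:
    """In-memory stand-in for KMS; the KMS key never leaves this object."""

    def __init__(self):
        self._kms_key = secrets.token_bytes(32)

    def generate_data_key(self):
        # Mirrors the response fields of the KMS GenerateDataKey operation.
        plaintext_dek = secrets.token_bytes(32)
        return {
            "Plaintext": plaintext_dek,
            "CiphertextBlob": _keystream_xor(self._kms_key, plaintext_dek),
        }

    def decrypt(self, ciphertext_blob: bytes):
        return {"Plaintext": _keystream_xor(self._kms_key, ciphertext_blob)}


def encrypt_record(kms, data: bytes) -> dict:
    """Encrypt one record with a fresh DEK; keep only the encrypted DEK."""
    data_key = kms.generate_data_key()
    ciphertext = _keystream_xor(data_key["Plaintext"], data)
    # The plaintext DEK is discarded when this function returns.
    return {"ciphertext": ciphertext, "encrypted_dek": data_key["CiphertextBlob"]}


def decrypt_record(kms, record: dict) -> bytes:
    """Ask KMS to unwrap the DEK, then decrypt the record locally."""
    dek = kms.decrypt(record["encrypted_dek"])["Plaintext"]
    return _keystream_xor(dek, record["ciphertext"])
```

Note that `encrypt_record` requests a new DEK on every call, which matches the one-DEK-per-record practice, and that the payload itself never travels to KMS, so the 4 KB limit on direct encryption does not apply.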

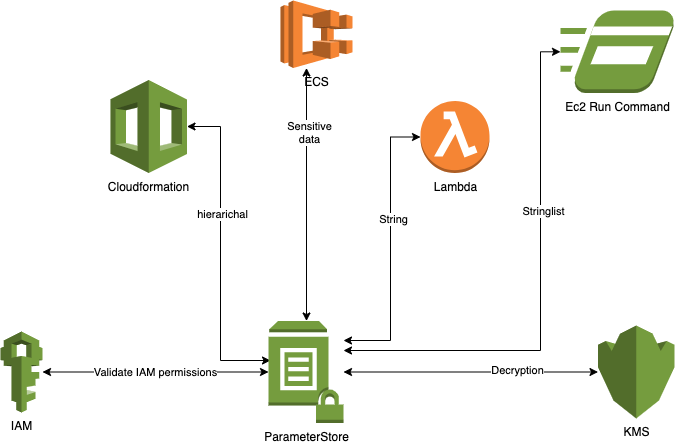

AWS Parameter Store

It is a part of AWS Systems Manager, and its functionality is quite simple compared to the other services mentioned above. As the name suggests, it is used to store parameters and configuration and, for that matter, confidential information, in a key-value schema where the value represents the data of interest. Parameter Store values can be referenced from services like EC2 (Run Command and State Manager), CloudFormation, and Lambda. You can also access them from your applications.

There are three parameter types: String, StringList, and SecureString. They are more or less self-explanatory:

- String is for any single string value (e.g., a database connection string)

- StringList is for multiple string values separated by commas (e.g., SFTP IPs: X.X.X.Y,X.X.X.Z)

- SecureString is for storing sensitive data; it encrypts the value with the default KMS key

It also supports features like versioning and hierarchy. Versioning can support multiple versions of the data getting stored. Hierarchies help organize and better manage the parameters. You can have hierarchies per environment (dev, test, prod) to store environment-specific data or per department, like HR, IT, and accounts, or whatever suits your use case.
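
A small sketch of how hierarchical names might be built and queried is shown below. The in-memory dictionary stands in for Parameter Store, and `parameters_under` loosely mimics what a recursive lookup by path returns; all helper names, paths, and values are made up for illustration.

```python
def parameter_name(*segments: str) -> str:
    """Join path segments into a hierarchical name such as /prod/hr/db-url."""
    cleaned = [s.strip("/") for s in segments if s.strip("/")]
    if not cleaned:
        raise ValueError("at least one non-empty segment is required")
    return "/" + "/".join(cleaned)


def parameters_under(store: dict, path: str) -> dict:
    """Return every parameter below the given path, at any depth."""
    prefix = path.rstrip("/") + "/"
    return {name: value for name, value in store.items() if name.startswith(prefix)}


def split_string_list(value: str) -> list:
    """Split a StringList value into its comma-separated items."""
    return [item.strip() for item in value.split(",") if item.strip()]


# Hypothetical parameters organized per environment and department.
store = {
    parameter_name("prod", "hr", "db-url"): "postgres://hr-prod.example.internal",
    parameter_name("prod", "it", "sftp-ips"): "10.0.0.1,10.0.0.2",
    parameter_name("dev", "hr", "db-url"): "postgres://hr-dev.example.internal",
}
```

With this layout, an application running in production only needs to know its environment prefix (`/prod`) to load all of its settings, and IAM policies can be scoped to the same prefix.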

AWS Secrets Manager

This is another service provided by AWS to manage and maintain secrets. It can be used via the AWS console, CLI, or SDKs. In the most common scenario, the application makes an API call to Secrets Manager to retrieve a secret. It uses KMS for encryption. One distinctive feature of AWS Secrets Manager is that it supports automatic rotation of secrets for some services, like RDS. The most common use case is storing RDS credentials or any other service or application passwords, SSH keys, API keys, etc.
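
The retrieval step can be sketched as follows. The `get_secret_value` response carries the secret in its `SecretString` field; the JSON keys used here (`username`, `password`, `host`, `port`) are an assumption based on the usual layout of database credential secrets, and `parse_db_secret` is a hypothetical helper.

```python
import json


def parse_db_secret(response: dict) -> dict:
    """Turn a get_secret_value response into database connection settings."""
    secret = json.loads(response["SecretString"])
    return {
        "user": secret["username"],
        "password": secret["password"],
        "host": secret["host"],
        "port": int(secret["port"]),
    }
```

With boto3, the response would come from `secrets_client.get_secret_value(SecretId=...)`. Fetching the secret at connection time rather than caching it forever means the application picks up rotated credentials without a redeploy.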

There is some overlap in use cases between Parameter Store and Secrets Manager, as both can encrypt data and keep it secret; on the other hand, Secrets Manager covers some specific use cases, like secret rotation.

Conclusion

AWS provides a rich set of services to help you with auditing and securing your infrastructure. These services can work together to form a strong foundation for the enterprise security framework. Gradually, you can automate the responses as well based on the events. This helps in significantly reducing the amount of time that a business devotes to managing and operating security practices.
