WWDC’21: Notable Documentations

source link: https://type.cyhsu.xyz/2021/06/wwdc21-notable-documentations/



Most users don’t follow WWDC beyond the Keynote session. The trove of recorded sessions is thought to be relevant only to coders, to say nothing of the developer documentation. However, many of the summary articles are easy to follow and full of interesting tidbits that even the rest of us can enjoy reading. If you have ever wondered how sites like MacStories and Ars Technica dig out so many details in their reviews of the annual OS releases, here lies the answer.

The following is a non-exhaustive list of such approachable documentation pages updated during WWDC’21 that I’ve discovered. I may (or may not, depending on my available time) update the list with further readings. For an official list of new technologies across the Apple platforms, see New Technologies WWDC 2021.

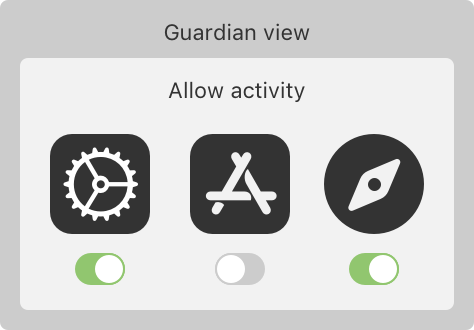

ManagedSettings

Managed Settings provides a privacy-preserving way for users to restrict access to certain settings and features on their devices. With the user’s permission, your app can limit media showings, restrict app purchases, lock passcode settings, and configure other device behavior.

Managed Settings works together with ManagedSettingsUI, DeviceActivity, and FamilyControls to allow your app to restrict, authorize, and monitor device usage.

Audio Graphs

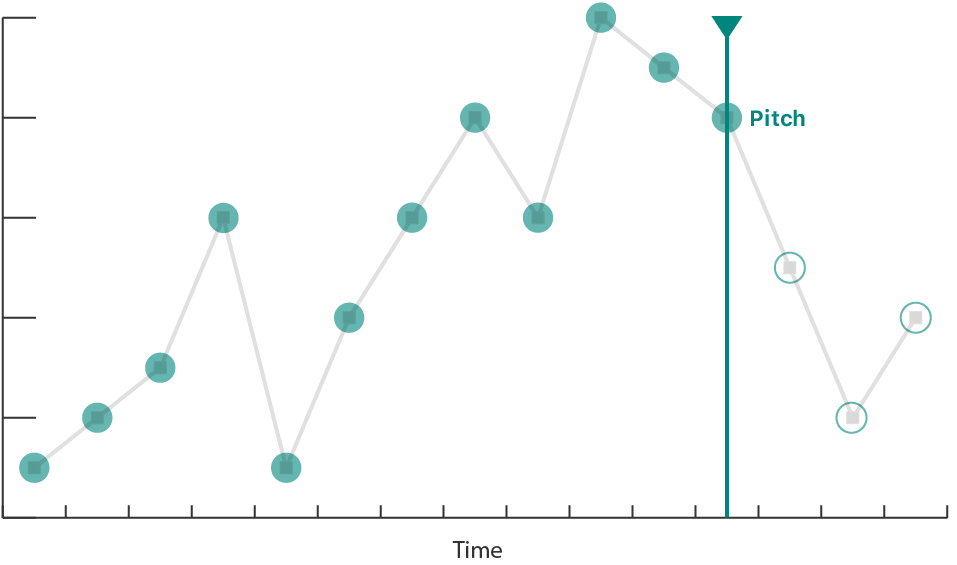

Use the Audio Graphs API to provide all the information that VoiceOver needs to construct an audible representation of the data in your charts and graphs, giving people who are blind or have low vision access to valuable data insights.

An audio graph turns the data in your chart into an audible representation by encoding the data on each axis as audio. Typically, the audio graph represents the X-axis as time, and the Y-axis as pitch. For example, an audible representation of a scatter plot that shows a linear downward trend might be a series of individual tones descending in pitch over time. An audible representation of a stock chart might be a single continuous tone with a pitch that modulates up or down with the stock price (Y-axis) as the audio plays over time (X-axis).
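The mapping described above can be illustrated with a small, framework-free sketch. This is not the Audio Graphs API and not how VoiceOver produces sound; the `Tone` type, the `rescale` and `sonify` functions, the two-second duration, and the 220 to 880 Hz pitch range are all assumptions chosen only to show the idea of encoding X as time and Y as pitch.

```swift
import Foundation

/// One tone in a hypothetical sonification of a chart.
struct Tone: Equatable {
    let startTime: Double  // seconds from the start of playback
    let frequency: Double  // pitch in hertz
}

/// Linearly maps `value` from the `source` range onto the `target` range.
func rescale(_ value: Double,
             from source: ClosedRange<Double>,
             to target: ClosedRange<Double>) -> Double {
    let span = source.upperBound - source.lowerBound
    guard span > 0 else { return target.lowerBound }
    let fraction = (value - source.lowerBound) / span
    return target.lowerBound + fraction * (target.upperBound - target.lowerBound)
}

/// Encodes X as time and Y as pitch, producing one tone per data point.
func sonify(_ points: [(x: Double, y: Double)],
            duration: Double = 2.0,
            pitchRange: ClosedRange<Double> = 220...880) -> [Tone] {
    let xs = points.map { $0.x }
    let ys = points.map { $0.y }
    guard let minX = xs.min(), let maxX = xs.max(),
          let minY = ys.min(), let maxY = ys.max() else { return [] }
    return points
        .sorted { $0.x < $1.x }
        .map { point in
            Tone(startTime: rescale(point.x, from: minX...maxX, to: 0...duration),
                 frequency: rescale(point.y, from: minY...maxY, to: pitchRange))
        }
}

// A scatter plot with a linear downward trend becomes tones that descend in pitch.
let tones = sonify([(x: 0, y: 10), (x: 1, y: 5), (x: 2, y: 0)])
for tone in tones {
    print("t = \(tone.startTime) s, f = \(tone.frequency) Hz")
}
```

In a real app you would describe the chart to the system rather than synthesize audio yourself; the sketch only makes the time and pitch encoding concrete.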

ShazamKit

- The process

- ShazamKit uses the unique acoustic signature of an audio recording to find a match.

- ShazamKit generates a reference signature for each searchable full audio recording.

- A catalog stores the reference signatures and their associated metadata, or media items.

- Searching for a match compares a query signature, which ShazamKit generates for captured audio, with the reference signatures in the catalog.

- You can create a custom catalog with your own reference signatures and their associated metadata.

- Example: a virtual learning app

- reference signatures = teaching videos

- associated metadata = the timecodes for questions

- the app offers answer choices for the matched question, and updates them as the video moves forward or backward

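The virtual learning example can be sketched without touching ShazamKit itself. The sketch below assumes the matching step has already produced an offset (in seconds) into the teaching video; the `Question` type, the `question(atOffset:in:)` function, and the sample data are hypothetical and stand in for the metadata a custom catalog would carry.

```swift
import Foundation

/// Hypothetical metadata for one question in a teaching video.
struct Question: Equatable {
    let prompt: String
    let choices: [String]
    let timeRange: ClosedRange<TimeInterval>  // seconds into the video
}

/// Returns the question whose timecode range contains the matched offset, if any.
func question(atOffset offset: TimeInterval, in questions: [Question]) -> Question? {
    questions.first { $0.timeRange.contains(offset) }
}

let lessonQuestions = [
    Question(prompt: "What is 2 + 3?", choices: ["4", "5", "6"], timeRange: 30...60),
    Question(prompt: "What is 4 × 2?", choices: ["6", "8", "10"], timeRange: 90...120),
]

// Moving forward or backward in the video changes the matched offset,
// so repeating the same lookup updates the question on screen.
let current = question(atOffset: 95, in: lessonQuestions)
print(current?.prompt ?? "No question at this point")
```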

Nearby Interaction

Interact with Apple Watch

Apple Watch models with a U1 chip running watchOS 8 support Nearby Interaction sessions. Apps share discovery tokens to begin an interaction session. The Multipeer Connectivity framework provides a way for apps to share discovery tokens over a local network on iOS; on watchOS, apps share discovery tokens using a custom server, Core Bluetooth, LAN (TCP/UDP), or Watch Connectivity.

The system backgrounds the foreground Watch app after two minutes; when the system backgrounds an app, Nearby Interaction sessions suspend.

Nearby Interaction on iOS provides a peer device’s distance and direction, whereas Nearby Interaction on watchOS provides only a peer device’s distance.

Interact with Third-Party Devices

In iOS 15, iPhones can interact with third-party accessories that you partner with or develop with a U1-compatible UWB chip.

Increasing the Visibility of Widgets in Smart Stacks

When users configure Smart Stacks, they have two options for surfacing widgets:

- Smart Rotate automatically rotates widgets to the top of the stack when they have timely, relevant information to show.

- Widget Suggestions automatically suggests widgets that the user doesn’t already have in their stack, perhaps exposing them to widgets they don’t even know exist.

- Your app donates intents and identifies relevant shortcuts to provide a signal about how the user uses your app, when they use it, and what is most relevant to them.

- While intent donations using INInteraction give Smart Stacks insight into past user behavior, INRelevantShortcut informs future suggestions.

- The app conveys this information by specifying a relevance provider as part of the INRelevantShortcut.

- Relevance providers determine when a shortcut is relevant, such as at a specific time of day: INDateRelevanceProvider.
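As a rough sketch of the flow above, the following code attaches a date-based relevance provider to a relevant shortcut. The activity type, the widget kind string, the 7 a.m. two-hour window, and the `relevanceWindow` and `suggestMorningShortcut` names are all placeholders invented for illustration; a real app would use its own shortcut and widget identifiers.

```swift
import Foundation

/// Hypothetical helper: a time window that starts at `hour` o'clock on `day`.
func relevanceWindow(startingAtHour hour: Int,
                     lasting length: TimeInterval,
                     on day: Date = Date(),
                     calendar: Calendar = .current) -> (start: Date, end: Date)? {
    guard let start = calendar.date(bySettingHour: hour, minute: 0, second: 0, of: day) else {
        return nil
    }
    return (start, start.addingTimeInterval(length))
}

#if canImport(Intents) && os(iOS)
import Intents

@available(iOS 15.0, *)
func suggestMorningShortcut() {
    // "com.example.app.view-agenda" and "AgendaWidget" are placeholder identifiers.
    let activity = NSUserActivity(activityType: "com.example.app.view-agenda")
    activity.title = "View today's agenda"

    let relevantShortcut = INRelevantShortcut(shortcut: INShortcut(userActivity: activity))
    relevantShortcut.widgetKind = "AgendaWidget"

    // Mark the shortcut as relevant between 7 and 9 in the morning.
    if let window = relevanceWindow(startingAtHour: 7, lasting: 2 * 60 * 60) {
        relevantShortcut.relevanceProviders = [
            INDateRelevanceProvider(start: window.start, end: window.end)
        ]
    }

    INRelevantShortcutStore.default.setRelevantShortcuts([relevantShortcut]) { error in
        if let error = error {
            print("Failed to set relevant shortcuts: \(error)")
        }
    }
}
#endif
```

The window calculation is kept separate from the Intents calls so that it can be checked on its own.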

Delivering an Enhanced Privacy Experience in Your Photos App

- In iOS 15.0 and later, you can present the limited-library picker with a callback.

- The callback provides a list of local identifiers that reflect the user’s new selections.

- This method only works when the user enables limited-library mode.

- To monitor changes to the user’s limited-library selection, use the standard change observer API as described in Observing Changes in the Photo Library.

- Register your app to receive notifications and update your user interface as the system notifies it of state changes.

About Continuous Integration and Delivery with Xcode Cloud

- Xcode Cloud is a CI/CD system that uses Git for source control and provides you with an integrated system that ensures the quality and stability of your codebase.

- It also helps you publish apps efficiently.

- Xcode supports source control with Git.

- By using source control, you improve your project’s quality by tracking and reviewing code changes.

- The Importance of Automated Building and Testing

- A typical development process starts with making changes to your code, building the project, and running your app in the Simulator or on a test device.

- These steps can take a significant amount of your time,

- especially for complex apps or frameworks.

- With Xcode Cloud, however, you can build, run, and test your project on multiple simulated devices in less time than you traditionally could.

- After verifying a change, Xcode Cloud automatically notifies you about the result via email.


- Continuous Delivery

- The flip side to continuous integration (CI) is continuous delivery (CD).

- When Xcode Cloud verifies a change to your code (CI), it can

- automatically deliver (CD) a new version of your app to testers with TestFlight,

- make a new version available for release in the App Store, or

- upload the exported app archive or a framework to your own server.

- Collaborative Software Development with Xcode Cloud

- support for pull requests (PRs): When you create a PR, you inform your team that a change is ready for review, and you and your team can take a look at each other’s code changes, and provide and address feedback before merging branches.

- In Xcode 13 and later, you can create, view, and comment on PRs, and merge changes into your codebase if you host your Git repository with Bitbucket Server, GitHub, or GitHub Enterprise.

- You can also configure Xcode Cloud to detect new PRs, or changes to an existing PR.

Safari Web Extensions

Safari web extensions are available in macOS 11 and later, macOS 10.14.6 or 10.15.6 with Safari 14 installed, and iOS 15 and later.

Designing Your App for the Always On State

- watchOS 8 expands Always On to include your apps.

- Even though your app is inactive or running in the background,

- the system continues to update and monitor many of the user interface elements.

- any controls in the user interface remain interactive.

- Apps compiled for watchOS 8 and later have Always On enabled by default.

- Always On isn’t available on Apple Watch SE or Apple Watch Series 4 and earlier; the screen turns off when the app transitions to the background or inactive states.

- Understand Frontmost App Behavior

- By default, when the user lowers their wrist or stops interacting with their watch, your app transitions to the inactive state.

- The system continues to display your app’s user interface as long as your app remains the frontmost app (usually two minutes) before transitioning to the background and becoming suspended.

- If the user dismisses the app (for example, by pressing the crown or by covering the screen), the app transitions immediately to the background and doesn’t become the frontmost app.

- Users can set the amount of time that an app remains the frontmost app by changing the settings at Settings > General > Wake Screen > Return to Clock.

- They can also specify a custom time for individual apps from the Wake Screen.

- In watchOS 8 and later, apps can remain in the frontmost app state for a maximum of 1 hour.

- When the app is inactive, the user interface doesn’t update by default. However, you can use a TimelineView to schedule periodic updates.

- Update the Display During Background Sessions

- Unlike frontmost apps, apps running a background session can continue to update their user interface;

- however, to save battery life, the system reduces the update frequency.

- For the best UX,

- pause any animation and show the final state (or a good static image), and

- remove any subsecond updates.


- Hide Sensitive Information

- Add the privacySensitive(_:) view modifier to blur out specific user interface elements during Always On.

- Or access the redactionReasons environment variable.

- If this variable contains the privacy value, eliminate or obscure any sensitive data.

- Always hide any highly sensitive information, such as financial information or health data.

- If the user has any concerns, they can disable Always On on a per-app basis.

- Customize the Reduced Luminance Appearance

- The system determines the screen’s overall luminance by comparing the ratio of lit pixels to dark pixels.

- It then reduces the overall brightness to an appropriate level.

- Many system controls also automatically update their appearance during Always On.

- You can customize the appearance during Always On to highlight glanceable, important information in your user interface.

- To customize your interface, use the isLuminanceReduced environment variable.
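The Always On notes in this section mention TimelineView, privacySensitive(_:), redactionReasons, and isLuminanceReduced. Here is a minimal SwiftUI sketch that uses all four in one view. The `StatusView` type, its `balance` and `note` properties, the `displayText` helper, the one-minute update interval, and the font-weight change are assumptions made for illustration, not a recommended design.

```swift
import Foundation

/// Hypothetical helper: the text to show for a sensitive value.
func displayText(for value: String, isRedacted: Bool) -> String {
    isRedacted ? "••••" : value
}

#if canImport(SwiftUI)
import SwiftUI

@available(iOS 15.0, macOS 12.0, tvOS 15.0, watchOS 8.0, *)
struct StatusView: View {
    // True while the display is dimmed, for example during Always On.
    @Environment(\.isLuminanceReduced) private var isLuminanceReduced
    // Contains .privacy when the system asks the app to hide sensitive content.
    @Environment(\.redactionReasons) private var redactionReasons

    let balance: String
    let note: String

    var body: some View {
        // Schedule an update once a minute instead of relying on live updates.
        TimelineView(.periodic(from: Date(), by: 60)) { context in
            VStack {
                Text(context.date, style: .time)
                    .fontWeight(isLuminanceReduced ? .light : .bold)
                // Obscure the value manually when redaction is requested.
                Text(displayText(for: balance,
                                 isRedacted: redactionReasons.contains(.privacy)))
                // Or let the system redact this element for you.
                Text(note).privacySensitive()
            }
        }
    }
}
#endif
```

The manual check and the view modifier are shown side by side only for comparison; an app would normally pick one approach per element.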

Accessing the Camera While Multitasking

- Starting with iOS 13.5, you can use the new Multitasking Camera Access Entitlement to let your app continue using the camera while multitasking.

- This scenario occurs in both Split View and Slide Over modes.

- In iOS 15.0 or later, this behavior extends to Picture in Picture mode using AVKit’s new API.

- Respond to System Pressure

- Multitasking introduces the possibility of performance degradation; increased device temperature and power usage can lead to frame drops or poor capture quality.

- Monitor the systemPressureState property on AVCaptureDevice.

- When the pressure reaches excessive levels, the capture system shuts down and emits an AVCaptureSessionWasInterrupted notification.

- Apps reduce their footprint on the system by lowering the frame rate or requesting lower-resolution, binned, or non-HDR formats.
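One way to respond to system pressure is to lower the capture frame rate as the level rises. The sketch below assumes a simple policy (30, 20, and 15 frames per second); the `PressureLevel` enum, the `targetFrameRate`, `pressureLevel(of:)`, and `observeSystemPressure(on:)` names, and the specific rates are hypothetical, and a real app would first check which rates the active format supports.

```swift
import Foundation

/// Platform-independent mirror of the capture system's pressure levels.
enum PressureLevel { case nominal, fair, serious, critical, shutdown }

/// Hypothetical policy: frames per second to request at each level,
/// or nil once the capture system has shut down.
func targetFrameRate(for level: PressureLevel) -> Int32? {
    switch level {
    case .nominal, .fair: return 30
    case .serious: return 20
    case .critical: return 15
    case .shutdown: return nil
    }
}

#if canImport(AVFoundation) && os(iOS)
import AVFoundation

/// Translates the device's current pressure level into the enum above.
func pressureLevel(of device: AVCaptureDevice) -> PressureLevel {
    let level = device.systemPressureState.level
    if level == .shutdown { return .shutdown }
    if level == .critical { return .critical }
    if level == .serious { return .serious }
    if level == .fair { return .fair }
    return .nominal
}

/// Keep the returned observation alive for as long as you want updates.
func observeSystemPressure(on device: AVCaptureDevice) -> NSKeyValueObservation {
    device.observe(\.systemPressureState, options: [.initial, .new]) { device, _ in
        guard let fps = targetFrameRate(for: pressureLevel(of: device)) else {
            print("Capture stopped because of system pressure")
            return
        }
        do {
            try device.lockForConfiguration()
            // The active format must support this rate; real code should check
            // device.activeFormat.videoSupportedFrameRateRanges first.
            device.activeVideoMinFrameDuration = CMTime(value: 1, timescale: fps)
            device.activeVideoMaxFrameDuration = CMTime(value: 1, timescale: fps)
            device.unlockForConfiguration()
        } catch {
            print("Could not lock device for configuration: \(error)")
        }
    }
}
#endif
```

Lowering the frame rate is only one of the options listed above; switching to a lower-resolution or non-HDR format would follow the same observe-then-reconfigure pattern.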

- Observe Capture Session Notifications

- The system only allows one app to use the device’s camera at a given time.

- Prepare your app to respond when another app starts using the camera.

- Observe two notifications: AVCaptureSessionWasInterrupted and AVCaptureSessionInterruptionEnded.

- The notification object’s user information dictionary contains the reason for an interruption, which lets you configure your user interface as your camera access changes.
