The Most Complete Python Web Crawler Course Online: Systematic, Advanced Study (Source Code Included), Job-Ready on Completion

source link: https://blog.csdn.net/qq_45803923/article/details/116357910


5.2(第二天)

第一章 爬虫介绍

1.认识爬虫

第二章:requests实战(基础爬虫)

1.豆瓣电影爬取

2.肯德基餐厅查询

3.破解百度翻译

4.搜狗首页

5.网页采集器

6.药监总局相关数据爬取

第三章:爬虫数据分析(bs4,xpath,正则表达式)

1.bs4解析基础

2.bs4案例

3.xpath解析基础

4.xpath解析案例-4k图片解析爬取

5.xpath解析案例-58二手房

6.xpath解析案例-爬取站长素材中免费简历模板

7.xpath解析案例-全国城市名称爬取

8.正则解析

9.正则解析-分页爬取

10.爬取图片

第四章:自动识别验证码

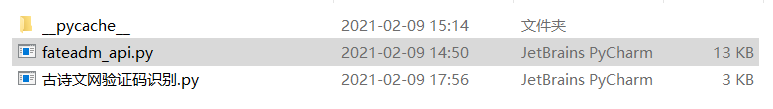

1.古诗文网验证码识别

fateadm_api.py(识别需要的配置,建议放在同一文件夹下)

调用api接口

第五章:request模块高级(模拟登录)

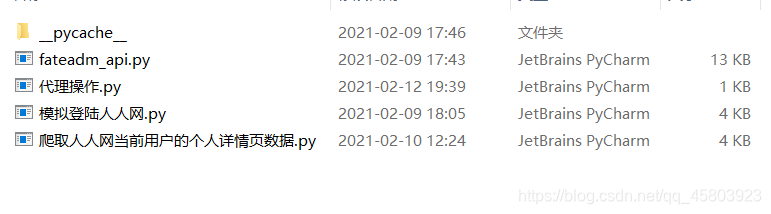

1.代理操作

2.模拟登陆人人网

3.模拟登陆人人网

第六章:高性能异步爬虫(线程池,协程)

1.aiohttp实现多任务异步爬虫

2.flask服务

3.多任务协程

4.多任务异步爬虫

5.示例

6.同步爬虫

7.线程池基本使用

8.线程池在爬虫案例中的应用

9.协程

第七章:动态加载数据处理(selenium模块应用,模拟登录12306)

1.selenium基础用法

2.selenium其他自动操作

3.12306登录示例代码

4.动作链与iframe的处理

5.谷歌无头浏览器+反检测

6.基于selenium实现1236模拟登录

7.模拟登录qq空间

第八章:scrapy框架

1.各种项目实战,scrapy各种配置修改

2.bossPro示例

3.bossPro示例

4.数据库示例

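Chapter 1: Introduction to Crawlers

Level 0: Getting to know crawlers

1. A first look at crawlers

At its core, a crawler is a program that fetches data from the web that is valuable to us.

2. Mapping out the path

2-1. How a browser works

(1) Parse the data: after the server sends its response to the browser, the browser does not hand the raw data straight to us. The data is written in a language meant for computers, so the browser first translates it into content we can read;

(2) Extract the data: from what we receive, we pick out the data that is useful to us;

(3) Store the data: we save the selected data to a file or a database.

2-2. How a crawler works

(1) Get the data: the crawler sends a request to the server at the URL we provide, and the server returns data;

(2) Parse the data: the crawler parses the data returned by the server into a format we can read;

(3) Extract the data: the crawler then extracts the data we need;

(4) Store the data: the crawler saves the useful data so that you can use and analyze it later.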
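The four crawler steps above can be sketched as a small offline pipeline. This is only an illustration under stated assumptions, not code from the article: the HTML string stands in for a server response, and `fetch`, `MovieParser`, `crawl`, and the output file name are hypothetical names chosen for the example.

```python
import json
import os
import tempfile
from html.parser import HTMLParser

# Assumed sample response; a real crawler would receive this from a server.
SAMPLE_HTML = (
    "<ul><li class='movie'>Movie A</li>"
    "<li class='movie'>Movie B</li><li>ad</li></ul>"
)
OUT_PATH = os.path.join(tempfile.gettempdir(), "movies.json")


def fetch(url):
    """Step 1 (get data): stand-in for an HTTP request so the sketch runs offline."""
    return SAMPLE_HTML


class MovieParser(HTMLParser):
    """Steps 2 and 3 (parse, extract): keep the text of <li class="movie"> items."""

    def __init__(self):
        super().__init__()
        self.in_movie = False
        self.titles = []

    def handle_starttag(self, tag, attrs):
        if tag == "li" and dict(attrs).get("class") == "movie":
            self.in_movie = True

    def handle_data(self, data):
        if self.in_movie:
            self.titles.append(data.strip())

    def handle_endtag(self, tag):
        if tag == "li":
            self.in_movie = False


def crawl(url, out_path):
    parser = MovieParser()
    parser.feed(fetch(url))
    # Step 4 (store data): save the extracted titles as JSON.
    with open(out_path, "w", encoding="utf-8") as fp:
        json.dump(parser.titles, fp, ensure_ascii=False)
    return parser.titles


titles = crawl("https://example.com/movies", OUT_PATH)
print(titles)
```

Replacing `fetch` with a real HTTP call turns the sketch into a working crawler; the other three steps stay the same.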

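————————————————

Copyright notice: this is an original article by CSDN blogger "yk 坤帝", published under the CC 4.0 BY-SA license. When reposting, please include the original source link and this notice.

Original link: https://blog.csdn.net/qq_45803923/article/details/116133325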

Chapter 2: requests in Practice (Basic Crawlers)

1. Scraping Douban movies

import json

import requests

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.193 Safari/537.36'
}
url = "https://movie.douban.com/j/chart/top_list"
params = {
    'type': '24',
    'interval_id': '100:90',
    'action': '',
    'start': '0',  # index of the first movie to fetch
    'limit': '20'  # number of movies to fetch in one request
}

response = requests.get(url, params=params, headers=headers)
list_data = response.json()

# The with block closes the file even if json.dump raises
with open('douban.json', 'w', encoding='utf-8') as fp:
    json.dump(list_data, fp=fp, ensure_ascii=False)
print('over!!!!')
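To page through the chart, only `start` needs to change between requests. The sketch below builds the query strings offline with the standard library so the encoding is visible; `page_url` is a hypothetical helper, and whether the endpoint still accepts these parameters is an assumption carried over from the listing above.

```python
from urllib.parse import urlencode

BASE_URL = "https://movie.douban.com/j/chart/top_list"


def page_url(start, limit=20):
    """Build the request URL for one page of results (hypothetical helper)."""
    params = {
        "type": "24",
        "interval_id": "100:90",
        "action": "",
        "start": str(start),
        "limit": str(limit),
    }
    # urlencode percent-encodes reserved characters such as ':'
    return BASE_URL + "?" + urlencode(params)


# Three consecutive pages of 20 movies each
urls = [page_url(start) for start in range(0, 60, 20)]
for u in urls:
    print(u)
```

Passing the same dictionary as `params=` to `requests.get` produces an equivalent query string, so the helper is useful mainly for logging or debugging the URLs being requested.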

2. KFC restaurant lookup

import requests

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.193 Safari/537.36'
}
url = 'http://www.kfc.com.cn/kfccda/ashx/GetStoreList.ashx?op=keyword'
word = input('Enter a location: ')
data = {
    'cname': '',
    'pid': '',
    'keyword': word,
    'pageIndex': '1',
    'pageSize': '10'
}

# A POST request carries its fields in the form body, so pass them with data= rather than params=
response = requests.post(url, data=data, headers=headers)
page_text = response.text

fileName = word + '.txt'
with open(fileName, 'w', encoding='utf-8') as f:
    f.write(page_text)

3. Cracking Baidu Translate

import json

import requests

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.193 Safari/537.36'
}
post_url = 'https://fanyi.baidu.com/sug'
word = input('enter a word:')
data = {
    'kw': word
}

response = requests.post(url=post_url, data=data, headers=headers)
dic_obj = response.json()

fileName = word + '.json'
# ensure_ascii=False: Chinese text cannot be represented in ASCII, so write it as-is
with open(fileName, 'w', encoding='utf-8') as fp:
    json.dump(dic_obj, fp=fp, ensure_ascii=False)
print('over!')
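The effect of `ensure_ascii` is easy to check offline. The dictionary below is made-up sample data, not a real response from the translation endpoint.

```python
import json

# Made-up sample data standing in for a translation result
sample = {"kw": "dog", "v": "狗"}

escaped = json.dumps(sample)                       # default: ensure_ascii=True
readable = json.dumps(sample, ensure_ascii=False)  # keeps non-ASCII characters

print(escaped)   # the Chinese character is written as a \uXXXX escape
print(readable)  # the Chinese character is written literally

# Both forms decode back to the same object
same_after_loading = json.loads(escaped) == json.loads(readable)
```

Either form is valid JSON; `ensure_ascii=False` only makes the saved file readable by a human, which is why the file is also opened with `encoding='utf-8'`.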

4. Sogou home page

import requests

url = 'https://www.sogou.com/?pid=sogou-site-d5da28d4865fb927'
response = requests.get(url)
page_text = response.text
print(page_text)

with open('./sougou.html', 'w', encoding='utf-8') as fp:
    fp.write(page_text)
print('Finished scraping!!!')

5. Web page collector

import requests

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.193 Safari/537.36'
}
url = 'https://www.sogou.com/sogou'
kw = input('enter a word:')
param = {
    'query': kw
}

response = requests.get(url, params=param, headers=headers)
page_text = response.text

fileName = kw + '.html'
with open(fileName, 'w', encoding='utf-8') as fp:
    fp.write(page_text)
print(fileName, 'saved successfully!!!')

6. Scraping data from the National Medical Products Administration (药监总局)

import json

import requests

url = "http://scxk.nmpa.gov.cn:81/xk/itownet/portalAction.do?method=getXkzsList"
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/89.0.4385.0 Safari/537.36'
}

# Step 1: collect the record IDs from list pages 1 to 5.
# id_list is created before the loop so that IDs from every page are kept.
id_list = []
for page in range(1, 6):
    data = {
        'on': 'true',
        'page': str(page),
        'pageSize': '15',
        'productName': '',
        'conditionType': '1',
        'applyname': '',
        'applysn': ''
    }
    json_ids = requests.post(url, data=data, headers=headers).json()
    for dic in json_ids['list']:
        id_list.append(dic['ID'])
#print(id_list)

# Step 2: request the detail record for each ID
post_url = 'http://scxk.nmpa.gov.cn:81/xk/itownet/portalAction.do?method=getXkzsById'
all_data_list = []
for record_id in id_list:
    data = {
        'id': record_id
    }
    detail_json = requests.post(url=post_url, data=data, headers=headers).json()
    #print(detail_json,'---------------------over')
    all_data_list.append(detail_json)

with open('allData.json', 'w', encoding='utf-8') as fp:
    json.dump(all_data_list, fp=fp, ensure_ascii=False)
print('over!!!')

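Chapter 3: Parsing Scraped Data (bs4, XPath, Regular Expressions)

1. bs4 parsing basics

from bs4 import BeautifulSoup

# Load a local HTML file into a BeautifulSoup object
with open('第三章 数据分析/text.html', 'r', encoding='utf-8') as fp:
    soup = BeautifulSoup(fp, 'lxml')

#print(soup)
#print(soup.a)                                       # first <a> tag
#print(soup.div)                                     # first <div> tag
#print(soup.find('div'))                             # same as soup.div
#print(soup.find('div',class_="song"))               # first <div class="song">
#print(soup.find_all('a'))                           # every <a> tag, as a list
#print(soup.select('.tang'))                         # CSS selector, returns a list
#print(soup.select('.tang > ul > li >a')[0].text)
#print(soup.find('div',class_="song").text)          # all text under the tag
#print(soup.find('div',class_="song").string)        # only the tag's direct text
print(soup.select('.tang > ul > li >a')[0]['href'])  # attribute value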

2. bs4 example

from bs4 import BeautifulSoup
import requests

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.193 Safari/537.36'
}
url = "http://sanguo.5000yan.com/"
page_text = requests.get(url, headers=headers).content
#print(page_text)

soup = BeautifulSoup(page_text, 'lxml')
li_list = soup.select('.list > ul > li')

with open('./sanguo.txt', 'w', encoding='utf-8') as fp:
    for li in li_list:
        title = li.a.string
        #print(title)
        detail_url = 'http://sanguo.5000yan.com/' + li.a['href']
        print(detail_url)
        # Fetch the chapter page and pull the text out of <div class="grap">
        detail_page_text = requests.get(detail_url, headers=headers).content
        detail_soup = BeautifulSoup(detail_page_text, 'lxml')
        div_tag = detail_soup.find('div', class_="grap")
        content = div_tag.text
        fp.write(title + ":" + content + '\n')
        print(title, 'scraped successfully!!!')
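Concatenating the base URL with `href` works only while every link is a bare relative path. A more forgiving alternative, shown here as a sketch and not as what the article does, is `urllib.parse.urljoin`, which also handles root-relative and absolute links. The `href` values below are invented for illustration.

```python
from urllib.parse import urljoin

BASE = "http://sanguo.5000yan.com/"

# Invented href values covering the three common link styles
hrefs = [
    "965.html",                            # relative path
    "/965.html",                           # root-relative path
    "http://sanguo.5000yan.com/965.html",  # already absolute
]

joined = [urljoin(BASE, href) for href in hrefs]
naive = [BASE + href for href in hrefs]

for j, n in zip(joined, naive):
    print(j, "|", n)
```

All three joined URLs come out identical, while plain concatenation produces a doubled slash for the second link and a malformed URL for the third.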

3. XPath parsing basics

from lxml import etree

tree = etree.parse('第三章 数据分析/text.html')

# Uncomment one pair at a time to try each expression.
# r = tree.xpath('/html/head/title')                       # absolute path from the root
# print(r)
# r = tree.xpath('/html/body/div')
# print(r)
# r = tree.xpath('/html//div')                             # // skips any number of levels
# print(r)
# r = tree.xpath('//div')
# print(r)
# r = tree.xpath('//div[@class="song"]')                   # filter by attribute
# print(r)
# r = tree.xpath('//div[@class="song"]/p[3]')              # indexes start at 1; tag names are case-sensitive
# print(r)
# r = tree.xpath('//div[@class="tang"]//li[5]/a/text()')   # direct text of the tag
# print(r)
# r = tree.xpath('//li[7]/i/text()')
# print(r)
# r = tree.xpath('//li[7]//text()')                        # all text below the tag
# print(r)
# r = tree.xpath('//div[@class="tang"]//text()')
# print(r)
# r = tree.xpath('//div[@class="song"]/img/@src')          # attribute value
# print(r)
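The article's `text.html` is not included, so the expressions above cannot be run as-is. The sketch below tries a few of them on an invented, well-formed snippet using the standard library's `xml.etree.ElementTree`, which supports only a subset of XPath: attribute filters, positions, and `//` work, but `text()` and `@src` do not, so `.text` and `.get()` are used instead. It is a stand-in for illustration, not a replacement for lxml.

```python
import xml.etree.ElementTree as ET

# Invented sample document; the real text.html is assumed to look similar.
SAMPLE = """<html><body>
<div class="song">
  <p>Li Qingzhao</p><p>Wang Anshi</p><p>Su Shi</p>
  <img src="http://example.com/meinv.jpg"/>
</div>
<div class="tang">
  <ul>
    <li><a href="http://example.com/1">Qingming</a></li>
    <li><a href="http://example.com/2">Autumn</a></li>
  </ul>
</div>
</body></html>"""

root = ET.fromstring(SAMPLE)

# Counterpart of //div[@class="song"]/p[3]
third_p = root.find('.//div[@class="song"]/p[3]').text

# Counterpart of //div[@class="tang"]//a/text()
links = [a.text for a in root.findall('.//div[@class="tang"]//a')]

# Counterpart of //div[@class="song"]/img/@src
img_src = root.find('.//div[@class="song"]/img').get("src")

print(third_p, links, img_src)
```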

4. XPath example: parsing and downloading 4K images

import os

import requests
from lxml import etree

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.193 Safari/537.36'
}
url = 'http://pic.netbian.com/4kmeinv/'
response = requests.get(url, headers=headers)
#response.encoding=response.apparent_encoding
#response.encoding = 'utf-8'
page_text = response.text

tree = etree.HTML(page_text)
li_list = tree.xpath('//div[@class="slist"]/ul/li')

# The images are written into picLibs/, so the folder must exist first
if not os.path.exists('./picLibs'):
    os.mkdir('./picLibs')

for li in li_list:
    img_src = 'http://pic.netbian.com/' + li.xpath('./a/img/@src')[0]
    img_name = li.xpath('./a/img/@alt')[0] + '.jpg'
    # The name arrives garbled; re-encode as ISO-8859-1 and decode as GBK to repair it
    img_name = img_name.encode('iso-8859-1').decode('gbk')
    # print(img_name,img_src)
    # print(type(img_name))
    img_data = requests.get(url=img_src, headers=headers).content
    img_path = 'picLibs/' + img_name
    #print(img_path)
    with open(img_path, 'wb') as fp:
        fp.write(img_data)
    print(img_name, "downloaded successfully")
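The `encode('iso-8859-1').decode('gbk')` step reverses a wrong decoding. The sketch below reproduces the problem offline with a made-up file name: GBK bytes read as ISO-8859-1 come out garbled, and the same round trip restores them.

```python
# Made-up file name standing in for an image's alt text
original = "美女图片.jpg"

# What happens when GBK bytes are wrongly decoded as ISO-8859-1
garbled = original.encode("gbk").decode("iso-8859-1")

# The repair used in the listing: undo the wrong decode, then decode correctly
repaired = garbled.encode("iso-8859-1").decode("gbk")

print(repr(garbled))
print(repaired)
```

The trick works because ISO-8859-1 maps every byte to exactly one character, so no information is lost by the wrong decode. Setting `response.encoding` before reading `response.text`, as in the commented-out lines of the listing, is the other common way to avoid the problem.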

5. XPath example: 58.com second-hand housing

import requests
from lxml import etree

url = 'https://bj.58.com/ershoufang/p2/'
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.193 Safari/537.36'
}
page_text = requests.get(url=url, headers=headers).text

tree = etree.HTML(page_text)
li_list = tree.xpath('//section[@class="list-left"]/section[2]/div')

with open('58.txt', 'w', encoding='utf-8') as fp:
    for li in li_list:
        # A path starting with ./ is evaluated relative to the current node
        title = li.xpath('./a/div[2]/div/div/h3/text()')[0]
        print(title)
        fp.write(title + '\n')

6. XPath example: free resume templates from 站长素材

import requests

from lxml import etree

import os

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.193 Safari/537.36'

}

url = 'https://www.aqistudy.cn/historydata/'

page_text = requests.get(url,headers = headers).text

7. XPath example: scraping the names of cities nationwide

import os

import requests
from lxml import etree

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.193 Safari/537.36'
}
url = 'https://www.aqistudy.cn/historydata/'
page_text = requests.get(url, headers=headers).text
tree = etree.HTML(page_text)

# Version 1: parse the hot cities and the full city list separately
# holt_li_list = tree.xpath('//div[@class="bottom"]/ul/li')
# all_city_name = []
# for li in holt_li_list:
#     host_city_name = li.xpath('./a/text()')[0]
#     all_city_name.append(host_city_name)
# city_name_list = tree.xpath('//div[@class="bottom"]/ul/div[2]/li')
# for li in city_name_list:
#     city_name = li.xpath('./a/text()')[0]
#     all_city_name.append(city_name)
# print(all_city_name,len(all_city_name))

# Version 2: combine both expressions with the | operator and parse in one pass
#holt_li_list = tree.xpath('//div[@class="bottom"]/ul//li')
holt_li_list = tree.xpath('//div[@class="bottom"]/ul/li | //div[@class="bottom"]/ul/div[2]/li')
all_city_name = []
for li in holt_li_list:
    host_city_name = li.xpath('./a/text()')[0]
    all_city_name.append(host_city_name)
print(all_city_name, len(all_city_name))

8. Regex parsing

import os
import re

import requests

if not os.path.exists('./qiutuLibs'):
    os.mkdir('./qiutuLibs')

url = 'https://www.qiushibaike.com/imgrank/'
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/89.0.4385.0 Safari/537.36'
}
page_text = requests.get(url, headers=headers).text

# Capture the src of the <img> inside each <div class="thumb">
ex = '<div class="thumb">.*?<img src="(.*?)" alt.*?</div>'
# re.S lets . match newlines, so one match can span several lines of HTML
img_src_list = re.findall(ex, page_text, re.S)
print(img_src_list)

for src in img_src_list:
    # The src values are protocol-relative (//host/path), so add the scheme
    src = 'https:' + src
    img_data = requests.get(url=src, headers=headers).content
    img_name = src.split('/')[-1]
    imgPath = './qiutuLibs/' + img_name
    with open(imgPath, 'wb') as fp:
        fp.write(img_data)
    print(img_name, "download complete!!!!!")
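The pattern and the `re.S` flag can be tried offline. The HTML below is an invented fragment shaped like the markup the pattern expects; the real page's structure is an assumption.

```python
import re

# Invented fragment: each thumbnail spans several lines
SAMPLE = '''
<div class="thumb">
<a href="/article/1" target="_blank">
<img src="//pic.example.com/a/one.jpg" alt="first">
</a>
</div>
<div class="thumb">
<a href="/article/2" target="_blank">
<img src="//pic.example.com/b/two.jpg" alt="second">
</a>
</div>
'''

ex = r'<div class="thumb">.*?<img src="(.*?)" alt.*?</div>'

with_s = re.findall(ex, SAMPLE, re.S)  # . also matches newlines
without_s = re.findall(ex, SAMPLE)     # . stops at each newline

# Same file-name rule as the listing: the last path segment of the URL
names = [("https:" + src).split("/")[-1] for src in with_s]

print(with_s)
print(without_s)
print(names)
```

Without `re.S` nothing matches here, because the `<div>` and its `<img>` sit on different lines. The non-greedy `.*?` is what keeps each match inside a single thumbnail block.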

9. Regex parsing: paginated scraping

import os
import re

import requests

if not os.path.exists('./qiutuLibs'):
    os.mkdir('./qiutuLibs')

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/89.0.4385.0 Safari/537.36'
}
# URL template; %d is replaced by the page number
url = 'https://www.qiushibaike.com/imgrank/page/%d/'

for pageNum in range(1, 3):
    new_url = url % pageNum
    page_text = requests.get(new_url, headers=headers).text

    ex = '<div class="thumb">.*?<img src="(.*?)" alt.*?</div>'
    img_src_list = re.findall(ex, page_text, re.S)
    print(img_src_list)

    for src in img_src_list:
        src = 'https:' + src
        img_data = requests.get(url=src, headers=headers).content
        img_name = src.split('/')[-1]
        imgPath = './qiutuLibs/' + img_name
        with open(imgPath, 'wb') as fp:
            fp.write(img_data)
        print(img_name, "download complete!!!!!")

10. Downloading an image

import requests

url = 'https://pic.qiushibaike.com/system/pictures/12404/124047919/medium/R7Y2UOCDRBXF2MIQ.jpg'
# .content returns the response body as bytes, which is what an image file needs
img_data = requests.get(url).content

with open('qiutu.jpg', 'wb') as fp:
    fp.write(img_data)

Chapter 4: Automatic CAPTCHA Recognition

1. CAPTCHA recognition on 古诗文网 (gushiwen.cn)

You can apply for a developer account ID and key on the recognition platform.

import requests
from lxml import etree
from fateadm_api import FateadmApi

def TestFunc(imgPath,codyType):
    pd_id = "xxxxxx"      # PD credentials are shown on the user center page
    pd_key = "xxxxxxxx"
    app_id = "xxxxxxx"    # developer revenue-share account, shown in the developer center
    app_key = "xxxxxxx"
    # Recognition type:
    # see the pricing page of the official site for the available types; contact support if unsure
    pred_type = codyType
    api = FateadmApi(app_id, app_key, pd_id, pd_key)
    # Query the balance
    balance = api.QueryBalcExtend()   # returns the balance directly
    # api.QueryBalc()

    # Recognize from a file:
    file_name = imgPath
    # For multi-site types an extra src_url parameter is needed; see the API docs: http://docs.fateadm.com/web/#/1?page_id=6
    result = api.PredictFromFileExtend(pred_type,file_name)   # returns the recognition result directly
    #rsp = api.PredictFromFile(pred_type, file_name)          # returns the detailed result

    # If you are not recognizing from a file, call the Predict interface instead:
    # result = api.PredictExtend(pred_type,data)   # returns the recognition result directly
    # rsp = api.Predict(pred_type,data)            # returns the detailed result

    # just_flag = False
    # if just_flag :
    #     if rsp.ret_code == 0:
    #         # If the result does not match what was expected, this call refunds the order.
    #         # Refunds are only for results that were recognized normally but rejected by the site;
    #         # do not abuse them, or the account may be banned.
    #         api.Justice( rsp.request_id)

    #card_id = "123"
    #card_key = "123"
    # Top up
    #api.Charge(card_id, card_key)
    #LOG("print in testfunc")
    return result

# if __name__ == "__main__":
#     TestFunc()

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.193 Safari/537.36'
}
url = 'https://so.gushiwen.cn/user/login.aspx?from=http://so.gushiwen.cn/user/collect.aspx'
page_text = requests.get(url,headers = headers).text

# Find the CAPTCHA image on the login page and save it locally
tree = etree.HTML(page_text)
code_img_src = 'https://so.gushiwen.cn' + tree.xpath('//*[@id="imgCode"]/@src')[0]
img_data = requests.get(code_img_src,headers = headers).content
with open('./code.jpg','wb') as fp:
    fp.write(img_data)

# Send the saved image to the recognition service (type 30400)
code_text = TestFunc('code.jpg',30400)
print('Recognition result: ' + code_text)

fateadm_api.py (support module required for recognition; keep it in the same folder as the script)

Calling the API

# coding=utf-8
import os,sys
import hashlib
import time
import json
import requests

FATEA_PRED_URL = "http://pred.fateadm.com"

def LOG(log):
    # Comment out the print when you no longer need log output
    print(log)

class TmpObj():
    def __init__(self):
        self.value = None

class Rsp():
    def __init__(self):
        self.ret_code = -1
        self.cust_val = 0.0
        self.err_msg = "succ"
        self.request_id = ""   # set here so logging never hits a missing attribute
        self.pred_rsp = TmpObj()

    def ParseJsonRsp(self, rsp_data):
        if rsp_data is None:
            self.err_msg = "http request failed, get rsp Nil data"
            return
        jrsp = json.loads( rsp_data)
        self.ret_code = int(jrsp["RetCode"])
        self.err_msg = jrsp["ErrMsg"]
        self.request_id = jrsp["RequestId"]
        if self.ret_code == 0:
            rslt_data = jrsp["RspData"]
            if rslt_data is not None and rslt_data != "":
                jrsp_ext = json.loads( rslt_data)
                if "cust_val" in jrsp_ext:
                    data = jrsp_ext["cust_val"]
                    self.cust_val = float(data)
                if "result" in jrsp_ext:
                    data = jrsp_ext["result"]
                    self.pred_rsp.value = data

def CalcSign(pd_id, passwd, timestamp):
    # Two rounds of MD5: first over timestamp + key, then over id + timestamp + first digest
    md5 = hashlib.md5()
    md5.update((timestamp + passwd).encode())
    csign = md5.hexdigest()

    md5 = hashlib.md5()
    md5.update((pd_id + timestamp + csign).encode())
    csign = md5.hexdigest()
    return csign

def CalcCardSign(cardid, cardkey, timestamp, passwd):
    md5 = hashlib.md5()
    # hashlib needs bytes in Python 3, so encode the string first
    md5.update((passwd + timestamp + cardid + cardkey).encode())
    return md5.hexdigest()

def HttpRequest(url, body_data, img_data=""):
    rsp = Rsp()
    post_data = body_data
    files = {
        'img_data':('img_data',img_data)
    }
    header = {
        'User-Agent': 'Mozilla/5.0',
    }
    rsp_data = requests.post(url, post_data,files=files ,headers=header)
    rsp.ParseJsonRsp( rsp_data.text)
    return rsp

class FateadmApi():
    # API client class
    # Parameters: (appID, appKey, pdID, pdKey)
    def __init__(self, app_id, app_key, pd_id, pd_key):
        self.app_id = app_id
        if app_id is None:
            self.app_id = ""
        self.app_key = app_key
        self.pd_id = pd_id
        self.pd_key = pd_key
        self.host = FATEA_PRED_URL

    def SetHost(self, url):
        self.host = url

    #
    # Query the balance
    # Parameters: none
    # Returns:
    #   rsp.ret_code: 0 on success
    #   rsp.cust_val: the user's balance
    #   rsp.err_msg: error details on failure
    #
    def QueryBalc(self):
        tm = str( int(time.time()))
        sign = CalcSign( self.pd_id, self.pd_key, tm)
        param = {
            "user_id": self.pd_id,
            "timestamp":tm,
            "sign":sign
        }
        url = self.host + "/api/custval"
        rsp = HttpRequest(url, param)
        if rsp.ret_code == 0:
            LOG("query succ ret: {} cust_val: {} rsp: {} pred: {}".format( rsp.ret_code, rsp.cust_val, rsp.err_msg, rsp.pred_rsp.value))
        else:
            LOG("query failed ret: {} err: {}".format( rsp.ret_code, rsp.err_msg))
        return rsp

    #
    # Query the network latency
    # Parameters: pred_type: recognition type
    # Returns:
    #   rsp.ret_code: 0 on success
    #   rsp.err_msg: error details on failure
    #
    def QueryTTS(self, pred_type):
        tm = str( int(time.time()))
        sign = CalcSign( self.pd_id, self.pd_key, tm)
        param = {
            "user_id": self.pd_id,
            "timestamp":tm,
            "sign":sign,
            "predict_type":pred_type,
        }
        if self.app_id != "":
            asign = CalcSign(self.app_id, self.app_key, tm)
            param["appid"] = self.app_id
            param["asign"] = asign
        url = self.host + "/api/qcrtt"
        rsp = HttpRequest(url, param)
        if rsp.ret_code == 0:
            LOG("query rtt succ ret: {} request_id: {} err: {}".format( rsp.ret_code, rsp.request_id, rsp.err_msg))
        else:
            LOG("predict failed ret: {} err: {}".format( rsp.ret_code, rsp.err_msg))
        return rsp

    #
    # Recognize a CAPTCHA
    # Parameters: pred_type: recognition type; img_data: the image bytes
    # Returns:
    #   rsp.ret_code: 0 on success
    #   rsp.request_id: unique order number
    #   rsp.pred_rsp.value: recognition result
    #   rsp.err_msg: error details on failure
    #
    def Predict(self, pred_type, img_data, head_info = ""):
        tm = str( int(time.time()))
        sign = CalcSign( self.pd_id, self.pd_key, tm)
        param = {
            "user_id": self.pd_id,
            "timestamp": tm,
            "sign": sign,
            "predict_type": pred_type,
            "up_type": "mt"
        }
        # Send head_info only when it is actually set (the original "or" test was always true)
        if head_info is not None and head_info != "":
            param["head_info"] = head_info
        if self.app_id != "":
            asign = CalcSign(self.app_id, self.app_key, tm)
            param["appid"] = self.app_id
            param["asign"] = asign
        url = self.host + "/api/capreg"
        files = img_data
        rsp = HttpRequest(url, param, files)
        if rsp.ret_code == 0:
            LOG("predict succ ret: {} request_id: {} pred: {} err: {}".format( rsp.ret_code, rsp.request_id, rsp.pred_rsp.value, rsp.err_msg))
        else:
            LOG("predict failed ret: {} err: {}".format( rsp.ret_code, rsp.err_msg))
            if rsp.ret_code == 4003:
                #lack of money
                LOG("cust_val <= 0 lack of money, please charge immediately")
        return rsp

    #
    # Recognize a CAPTCHA from a file
    # Parameters: pred_type: recognition type; file_name: file name
    # Returns:
    #   rsp.ret_code: 0 on success
    #   rsp.request_id: unique order number
    #   rsp.pred_rsp.value: recognition result
    #   rsp.err_msg: error details on failure
    #
    def PredictFromFile( self, pred_type, file_name, head_info = ""):
        with open(file_name, "rb") as f:
            data = f.read()
        return self.Predict(pred_type,data,head_info=head_info)

    #
    # Recognition failed: request a refund
    # Parameters: request_id: the order number to refund
    # Returns:
    #   rsp.ret_code: 0 on success
    #   rsp.err_msg: error details on failure
    #
    # Note:
    #   Predict only charges when ret_code == 0, so a refund request is needed only in that case
    # Note 2:
    #   Refunds are only for results that were recognized normally but rejected by the site;
    #   do not abuse them, or the account may be banned
    #
    def Justice(self, request_id):
        if request_id == "":
            return
        tm = str( int(time.time()))
        sign = CalcSign( self.pd_id, self.pd_key, tm)
        param = {
            "user_id": self.pd_id,
            "timestamp":tm,
            "sign":sign,
            "request_id":request_id
        }
        url = self.host + "/api/capjust"
        rsp = HttpRequest(url, param)
        if rsp.ret_code == 0:
            LOG("justice succ ret: {} request_id: {} pred: {} err: {}".format( rsp.ret_code, rsp.request_id, rsp.pred_rsp.value, rsp.err_msg))
        else:
            LOG("justice failed ret: {} err: {}".format( rsp.ret_code, rsp.err_msg))
        return rsp

    #
    # Top-up interface
    # Parameters: cardid: top-up card number; cardkey: top-up card signature string
    # Returns:
    #   rsp.ret_code: 0 on success
    #   rsp.err_msg: error details on failure
    #
    def Charge(self, cardid, cardkey):
        tm = str( int(time.time()))
        sign = CalcSign( self.pd_id, self.pd_key, tm)
        csign = CalcCardSign(cardid, cardkey, tm, self.pd_key)
        param = {
            "user_id": self.pd_id,
            "timestamp":tm,
            "sign":sign,
            'cardid':cardid,
            'csign':csign
        }
        url = self.host + "/api/charge"
        rsp = HttpRequest(url, param)
        if rsp.ret_code == 0:
            LOG("charge succ ret: {} request_id: {} pred: {} err: {}".format( rsp.ret_code, rsp.request_id, rsp.pred_rsp.value, rsp.err_msg))
        else:
            LOG("charge failed ret: {} err: {}".format( rsp.ret_code, rsp.err_msg))
        return rsp

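    ##
    # Top up; returns only whether it succeeded
    # Parameters: cardid: top-up card number; cardkey: top-up card signature string
    # Returns: 0 when the top-up succeeds
    ##
    def ExtendCharge(self, cardid, cardkey):
        return self.Charge(cardid,cardkey).ret_code

    ##
    # Request a refund; returns only whether it succeeded
    # Parameters: request_id: the order number to refund
    # Returns: 0 when the refund succeeds
    #
    # Note:
    #   Predict only charges when ret_code == 0, so a refund request is needed only in that case
    # Note 2:
    #   Refunds are only for results that were recognized normally but rejected by the site;
    #   do not abuse them, or the account may be banned
    ##
    def JusticeExtend(self, request_id):
        return self.Justice(request_id).ret_code

    ##
    # Query the balance; returns only the balance
    # Parameters: none
    # Returns: rsp.cust_val: the balance
    ##
    def QueryBalcExtend(self):
        rsp = self.QueryBalc()
        return rsp.cust_val

    ##
    # Recognize a CAPTCHA from a file; returns only the recognition result
    # Parameters: pred_type: recognition type; file_name: file name
    # Returns: rsp.pred_rsp.value: the recognition result
    ##
    def PredictFromFileExtend( self, pred_type, file_name, head_info = ""):
        rsp = self.PredictFromFile(pred_type,file_name,head_info)
        return rsp.pred_rsp.value

    ##
    # Recognition interface; returns only the recognition result
    # Parameters: pred_type: recognition type; img_data: the image bytes
    # Returns: rsp.pred_rsp.value: the recognition result
    ##
    def PredictExtend(self,pred_type, img_data, head_info = ""):
        rsp = self.Predict(pred_type,img_data,head_info)
        return rsp.pred_rsp.value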

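def TestFunc():
    pd_id = "128292"      # PD credentials are shown on the user center page
    pd_key = "bASHdc/12ISJOX7pV3qhPr2ntQ6QcEkV"
    app_id = "100001"     # developer revenue-share account, shown in the developer center
    app_key = "123456"
    # Recognition type:
    # see the pricing page of the official site for the available types; contact support if unsure
    pred_type = "30400"
    api = FateadmApi(app_id, app_key, pd_id, pd_key)
    # Query the balance
    balance = api.QueryBalcExtend()   # returns the balance directly
    # api.QueryBalc()

    # Recognize from a file:
    file_name = 'img.gif'
    # For multi-site types an extra src_url parameter is needed; see the API docs: http://docs.fateadm.com/web/#/1?page_id=6
    # result = api.PredictFromFileExtend(pred_type,file_name)   # returns the recognition result directly
    rsp = api.PredictFromFile(pred_type, file_name)             # returns the detailed result

    # If you are not recognizing from a file, call the Predict interface instead:
    # result = api.PredictExtend(pred_type,data)   # returns the recognition result directly
    # rsp = api.Predict(pred_type,data)            # returns the detailed result

    just_flag = False
    if just_flag :
        if rsp.ret_code == 0:
            # If the result does not match what was expected, this call refunds the order.
            # Refunds are only for results that were recognized normally but rejected by the site;
            # do not abuse them, or the account may be banned.
            api.Justice( rsp.request_id)

    #card_id = "123"
    #card_key = "123"
    # Top up
    #api.Charge(card_id, card_key)
    LOG("print in testfunc")

if __name__ == "__main__":
    TestFunc()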
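The request signature computed by `CalcSign` is two rounds of MD5. The sketch below restates that scheme with made-up credentials so it can be run without an account; `calc_sign` is a hypothetical name for this example, and whether the service checks anything beyond this digest is not known from the listing.

```python
import hashlib


def calc_sign(account_id, secret, timestamp):
    """Two-round MD5 as in CalcSign: md5(id + ts + md5(ts + secret))."""
    inner = hashlib.md5((timestamp + secret).encode()).hexdigest()
    return hashlib.md5((account_id + timestamp + inner).encode()).hexdigest()


# Made-up values for illustration only
sign = calc_sign("100000", "example-secret", "1620000000")
print(sign)

# hashlib works on bytes: passing a str raises TypeError in Python 3,
# which is why every update() call needs .encode()
try:
    hashlib.md5().update("text")
    str_accepted = True
except TypeError:
    str_accepted = False
print(str_accepted)
```

Because the timestamp is part of the input, the signature changes from one request to the next even though the credentials stay the same.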

Chapter 5: Advanced requests Module (Simulated Login)

1. Using a proxy

import requests

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.193 Safari/537.36'
}
url = 'https://www.sogou.com/sie?query=ip'

# proxies maps the URL scheme to the proxy that should carry the request
page_text = requests.get(url,headers = headers,proxies = {"https":"183.166.103.86:9999"}).text

with open('ip.html','w',encoding='utf-8') as fp:
    fp.write(page_text)
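Free proxies tend to stop working quickly, so crawlers often keep a pool and pick one per request. The sketch below only builds the `proxies` dictionary and sends nothing over the network. `build_proxies` and `pick_proxies` are hypothetical helpers, the second address is a documentation-range placeholder, and the first is the one from the listing above with no claim that it is still alive.

```python
import random

# Candidate proxy addresses (host:port); availability is not guaranteed
PROXY_POOL = [
    "183.166.103.86:9999",
    "203.0.113.10:8080",  # placeholder from the documentation address range
]


def build_proxies(address):
    """Return a scheme-to-proxy mapping in the shape requests expects."""
    proxy_url = "http://" + address
    return {"http": proxy_url, "https": proxy_url}


def pick_proxies(pool):
    """Choose one proxy at random so requests are spread across the pool."""
    return build_proxies(random.choice(pool))


proxies = pick_proxies(PROXY_POOL)
print(proxies)
```

The returned dictionary can be passed as `proxies=` to `requests.get`. Listing both schemes means the same proxy is used whether the target URL is http or https.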

2. Simulated login to Renren (人人网)

import requests
from lxml import etree
from fateadm_api import FateadmApi

def TestFunc(imgPath,codyType):
    pd_id = "xxxxx"       # PD credentials are shown on the user center page
    pd_key = "xxxxxxxxxxxxxxxxxx"
    app_id = "xxxxxxxx"   # developer revenue-share account, shown in the developer center
    app_key = "xxxxxx"
    # Recognition type:
    # see the pricing page of the official site for the available types; contact support if unsure
    pred_type = codyType
    api = FateadmApi(app_id, app_key, pd_id, pd_key)
    # Query the balance
    balance = api.QueryBalcExtend()   # returns the balance directly
    # api.QueryBalc()

    # Recognize from a file:
    file_name = imgPath
    # For multi-site types an extra src_url parameter is needed; see the API docs: http://docs.fateadm.com/web/#/1?page_id=6
    result = api.PredictFromFileExtend(pred_type,file_name)   # returns the recognition result directly
    #rsp = api.PredictFromFile(pred_type, file_name)          # returns the detailed result

    # If you are not recognizing from a file, call the Predict interface instead:
    # result = api.PredictExtend(pred_type,data)   # returns the recognition result directly
    # rsp = api.Predict(pred_type,data)            # returns the detailed result

    # just_flag = False
    # if just_flag :
    #     if rsp.ret_code == 0:
    #         # If the result does not match what was expected, this call refunds the order.
    #         # Refunds are only for results that were recognized normally but rejected by the site;
    #         # do not abuse them, or the account may be banned.
    #         api.Justice( rsp.request_id)

    #card_id = "123"
    #card_key = "123"
    # Top up
    #api.Charge(card_id, card_key)
    #LOG("print in testfunc")
    return result

# if __name__ == "__main__":
#     TestFunc()

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.193 Safari/537.36'
}
url = 'http://www.renren.com/'
page_text = requests.get(url,headers = headers).text

# Download the CAPTCHA image shown on the login page
tree = etree.HTML(page_text)
code_img_src = tree.xpath('//*[@id="verifyPic_login"]/@src')[0]
code_img_data = requests.get(code_img_src,headers = headers).content
with open('./code.jpg','wb') as fp:
    fp.write(code_img_data)

# Recognize the CAPTCHA (type 30600)
result = TestFunc('code.jpg',30600)
print('Recognition result: ' + result)

# Submit the login form; the field values were copied from a captured browser request
login_url = 'http://www.renren.com/ajaxLogin/login?1=1&uniqueTimestamp=2021121720536'
data = {
    'email':'xxxxxxxx',
    'icode': result,
    'origURL': 'http://www.renren.com/home',
    'domain': 'renren.com',
    'key_id': '1',
    'captcha_type': 'web_login',
    'password': '47e27dd5ef32b31041ebf56ec85a9b1e4233875e36396241c88245b188c56cdb',
    'rkey': 'c655ef0c57a72755f1240d6c0efac67d',
    'f': ''
}
response = requests.post(login_url,headers = headers, data = data)
# A 200 status code means the request itself went through
print(response.status_code)

with open('renren.html','w',encoding= 'utf-8') as fp:
    fp.write(response.text)

fateadm_api.py

# coding=utf-8

import os,sys

import hashlib

import time

import json

import requests

FATEA_PRED_URL = "http://pred.fateadm.com"

def LOG(log):

# 不需要测试时,注释掉日志就可以了

print(log)

log = None

class TmpObj():

def __init__(self):

self.value = None

class Rsp():

def __init__(self):

self.ret_code = -1

self.cust_val = 0.0

self.err_msg = "succ"

self.pred_rsp = TmpObj()

def ParseJsonRsp(self, rsp_data):

if rsp_data is None:

self.err_msg = "http request failed, get rsp Nil data"

return

jrsp = json.loads( rsp_data)

self.ret_code = int(jrsp["RetCode"])

self.err_msg = jrsp["ErrMsg"]

self.request_id = jrsp["RequestId"]

if self.ret_code == 0:

rslt_data = jrsp["RspData"]

if rslt_data is not None and rslt_data != "":

jrsp_ext = json.loads( rslt_data)

if "cust_val" in jrsp_ext:

data = jrsp_ext["cust_val"]

self.cust_val = float(data)

if "result" in jrsp_ext:

data = jrsp_ext["result"]

self.pred_rsp.value = data

def CalcSign(pd_id, passwd, timestamp):

md5 = hashlib.md5()

md5.update((timestamp + passwd).encode())

csign = md5.hexdigest()

md5 = hashlib.md5()

md5.update((pd_id + timestamp + csign).encode())

csign = md5.hexdigest()

return csign

def CalcCardSign(cardid, cardkey, timestamp, passwd):

md5 = hashlib.md5()

md5.update(passwd + timestamp + cardid + cardkey)

return md5.hexdigest()

def HttpRequest(url, body_data, img_data=""):

rsp = Rsp()

post_data = body_data

files = {

'img_data':('img_data',img_data)

}

header = {

'User-Agent': 'Mozilla/5.0',

}

rsp_data = requests.post(url, post_data,files=files ,headers=header)

rsp.ParseJsonRsp( rsp_data.text)

return rsp

class FateadmApi():

# API接口调用类

# 参数(appID,appKey,pdID,pdKey)

def __init__(self, app_id, app_key, pd_id, pd_key):

self.app_id = app_id

if app_id is None:

self.app_id = ""

self.app_key = app_key

self.pd_id = pd_id

self.pd_key = pd_key

self.host = FATEA_PRED_URL

def SetHost(self, url):

self.host = url

#

# 查询余额

# 参数:无

# 返回值:

# rsp.ret_code:正常返回0

# rsp.cust_val:用户余额

# rsp.err_msg:异常时返回异常详情

#

def QueryBalc(self):

tm = str( int(time.time()))

sign = CalcSign( self.pd_id, self.pd_key, tm)

param = {

"user_id": self.pd_id,

"timestamp":tm,

"sign":sign

}

url = self.host + "/api/custval"

rsp = HttpRequest(url, param)

if rsp.ret_code == 0:

LOG("query succ ret: {} cust_val: {} rsp: {} pred: {}".format( rsp.ret_code, rsp.cust_val, rsp.err_msg, rsp.pred_rsp.value))

else:

LOG("query failed ret: {} err: {}".format( rsp.ret_code, rsp.err_msg.encode('utf-8')))

return rsp

#

# 查询网络延迟

# 参数:pred_type:识别类型

# 返回值:

# rsp.ret_code:正常返回0

# rsp.err_msg: 异常时返回异常详情

#

def QueryTTS(self, pred_type):

tm = str( int(time.time()))

sign = CalcSign( self.pd_id, self.pd_key, tm)

param = {

"user_id": self.pd_id,

"timestamp":tm,

"sign":sign,

"predict_type":pred_type,

}

if self.app_id != "":

#

asign = CalcSign(self.app_id, self.app_key, tm)

param["appid"] = self.app_id

param["asign"] = asign

url = self.host + "/api/qcrtt"

rsp = HttpRequest(url, param)

if rsp.ret_code == 0:

LOG("query rtt succ ret: {} request_id: {} err: {}".format( rsp.ret_code, rsp.request_id, rsp.err_msg))

else:

LOG("predict failed ret: {} err: {}".format( rsp.ret_code, rsp.err_msg.encode('utf-8')))

return rsp

#

# 识别验证码

# 参数:pred_type:识别类型 img_data:图片的数据

# 返回值:

# rsp.ret_code:正常返回0

# rsp.request_id:唯一订单号

# rsp.pred_rsp.value:识别结果

# rsp.err_msg:异常时返回异常详情

#

def Predict(self, pred_type, img_data, head_info = ""):

tm = str( int(time.time()))

sign = CalcSign( self.pd_id, self.pd_key, tm)

param = {

"user_id": self.pd_id,

"timestamp": tm,

"sign": sign,

"predict_type": pred_type,

"up_type": "mt"

}

if head_info is not None or head_info != "":

param["head_info"] = head_info

if self.app_id != "":

#

asign = CalcSign(self.app_id, self.app_key, tm)

param["appid"] = self.app_id

param["asign"] = asign

url = self.host + "/api/capreg"

files = img_data

rsp = HttpRequest(url, param, files)

if rsp.ret_code == 0:

LOG("predict succ ret: {} request_id: {} pred: {} err: {}".format( rsp.ret_code, rsp.request_id, rsp.pred_rsp.value, rsp.err_msg))

else:

LOG("predict failed ret: {} err: {}".format( rsp.ret_code, rsp.err_msg))

if rsp.ret_code == 4003:

#lack of money

LOG("cust_val <= 0 lack of money, please charge immediately")

return rsp

#

# 从文件进行验证码识别

# 参数:pred_type;识别类型 file_name:文件名

# 返回值:

# rsp.ret_code:正常返回0

# rsp.request_id:唯一订单号

# rsp.pred_rsp.value:识别结果

# rsp.err_msg:异常时返回异常详情

#

def PredictFromFile( self, pred_type, file_name, head_info = ""):

with open(file_name, "rb") as f:

data = f.read()

return self.Predict(pred_type,data,head_info=head_info)

#

# 识别失败,进行退款请求

# 参数:request_id:需要退款的订单号

# 返回值:

# rsp.ret_code:正常返回0

# rsp.err_msg:异常时返回异常详情

#

# 注意:

# Predict识别接口,仅在ret_code == 0时才会进行扣款,才需要进行退款请求,否则无需进行退款操作

# 注意2:

# 退款仅在正常识别出结果后,无法通过网站验证的情况,请勿非法或者滥用,否则可能进行封号处理

#

def Justice(self, request_id):

if request_id == "":

#

return

tm = str( int(time.time()))

sign = CalcSign( self.pd_id, self.pd_key, tm)

param = {

"user_id": self.pd_id,

"timestamp":tm,

"sign":sign,

"request_id":request_id

}

url = self.host + "/api/capjust"

rsp = HttpRequest(url, param)

if rsp.ret_code == 0:

LOG("justice succ ret: {} request_id: {} pred: {} err: {}".format( rsp.ret_code, rsp.request_id, rsp.pred_rsp.value, rsp.err_msg))

else:

LOG("justice failed ret: {} err: {}".format( rsp.ret_code, rsp.err_msg.encode('utf-8')))

return rsp

#

# 充值接口

# 参数:cardid:充值卡号 cardkey:充值卡签名串

# 返回值:

# rsp.ret_code:正常返回0

# rsp.err_msg:异常时返回异常详情

#

def Charge(self, cardid, cardkey):

tm = str( int(time.time()))

sign = CalcSign( self.pd_id, self.pd_key, tm)

csign = CalcCardSign(cardid, cardkey, tm, self.pd_key)

param = {

"user_id": self.pd_id,

"timestamp":tm,

"sign":sign,

'cardid':cardid,

'csign':csign

}

url = self.host + "/api/charge"

rsp = HttpRequest(url, param)

if rsp.ret_code == 0:

LOG("charge succ ret: {} request_id: {} pred: {} err: {}".format( rsp.ret_code, rsp.request_id, rsp.pred_rsp.value, rsp.err_msg))

else:

LOG("charge failed ret: {} err: {}".format( rsp.ret_code, rsp.err_msg.encode('utf-8')))

return rsp

##

# 充值,只返回是否成功

# 参数:cardid:充值卡号 cardkey:充值卡签名串

# 返回值: 充值成功时返回0

##

def ExtendCharge(self, cardid, cardkey):

return self.Charge(cardid,cardkey).ret_code

##

# 调用退款,只返回是否成功

# 参数: request_id:需要退款的订单号

# 返回值: 退款成功时返回0

#

# 注意:

# Predict识别接口,仅在ret_code == 0时才会进行扣款,才需要进行退款请求,否则无需进行退款操作

# 注意2:

# 退款仅在正常识别出结果后,无法通过网站验证的情况,请勿非法或者滥用,否则可能进行封号处理

##

def JusticeExtend(self, request_id):

return self.Justice(request_id).ret_code

##

# 查询余额,只返回余额

# 参数:无

# 返回值:rsp.cust_val:余额

##

def QueryBalcExtend(self):

rsp = self.QueryBalc()

return rsp.cust_val

##

# 从文件识别验证码,只返回识别结果

# 参数:pred_type;识别类型 file_name:文件名

# 返回值: rsp.pred_rsp.value:识别的结果

##

def PredictFromFileExtend( self, pred_type, file_name, head_info = ""):

rsp = self.PredictFromFile(pred_type,file_name,head_info)

return rsp.pred_rsp.value

##

# 识别接口,只返回识别结果

# 参数:pred_type:识别类型 img_data:图片的数据

# 返回值: rsp.pred_rsp.value:识别的结果

##

def PredictExtend(self,pred_type, img_data, head_info = ""):

rsp = self.Predict(pred_type,img_data,head_info)

return rsp.pred_rsp.value

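def TestFunc():
    pd_id = "128292"  # PD info is shown on the user-center page
    pd_key = "bASHdc/12ISJOX7pV3qhPr2ntQ6QcEkV"
    app_id = "100001"  # developer revenue-share account, shown in the developer center
    app_key = "123456"
    # Recognition type:
    # see the pricing page on the official site for the available types; ask customer service if unsure
    pred_type = "30400"
    api = FateadmApi(app_id, app_key, pd_id, pd_key)
    # Query the balance
    balance = api.QueryBalcExtend()  # returns the balance directly
    # api.QueryBalc()

    # Recognize from a file:
    file_name = 'img.gif'
    # For multi-site types, add the src_url parameter; see the API docs: http://docs.fateadm.com/web/#/1?page_id=6
    # result = api.PredictFromFileExtend(pred_type, file_name)  # returns the recognition result directly
    rsp = api.PredictFromFile(pred_type, file_name)  # returns the detailed recognition result
    '''
    # If not recognizing from a file, call the Predict API instead:
    # result = api.PredictExtend(pred_type, data)  # returns the recognition result directly
    rsp = api.Predict(pred_type, data)  # returns the detailed recognition result
    '''
    just_flag = False
    if just_flag:
        if rsp.ret_code == 0:
            # If the result is not what was expected, this API refunds the order
            # Refunds are only for results that were recognized normally but rejected by the target website;
            # do not abuse them, or the account may be banned
            api.Justice(rsp.request_id)
    # card_id = "123"
    # card_key = "123"
    # Top up
    # api.Charge(card_id, card_key)
    LOG("print in testfunc")

if __name__ == "__main__":
    TestFunc()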
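The refund rule stated in the comments (a refund is only relevant when `ret_code == 0`, and only when the target website then rejects the recognized text) can be written down as a small decision helper. This is a minimal sketch: `should_request_refund` and the `accepted_by_site` flag are hypothetical names introduced for illustration and are not part of `fateadm_api.py`.

```python
def should_request_refund(ret_code, accepted_by_site):
    """Return True only when a charged recognition was rejected by the target site.

    ret_code: value of rsp.ret_code from a Predict call (0 means recognized and charged).
    accepted_by_site: hypothetical flag, True if the website accepted the captcha text.
    """
    was_charged = (ret_code == 0)
    return was_charged and not accepted_by_site


print(should_request_refund(0, False))   # charged, but the site rejected the answer
print(should_request_refund(0, True))    # charged, and the answer was accepted
print(should_request_refund(-1, False))  # recognition failed, so nothing was charged
```

In a real script the result of this check would decide whether to call `api.Justice(rsp.request_id)`.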

3. Scraping the current user's profile page on Renren

import requests
from lxml import etree
from fateadm_api import FateadmApi

def TestFunc(imgPath, codeType):
    pd_id = "xxxxxxx"  # PD info is shown on the user-center page
    pd_key = "xxxxxxxxxxxxxxxxxxxxxxxxxxxxxx"
    app_id = "xxxxxxxx"  # developer revenue-share account, shown in the developer center
    app_key = "xxxxxxxxx"
    # Recognition type:
    # see the pricing page on the official site for the available types; ask customer service if unsure
    pred_type = codeType
    api = FateadmApi(app_id, app_key, pd_id, pd_key)
    # Query the balance
    balance = api.QueryBalcExtend()  # returns the balance directly
    # api.QueryBalc()

    # Recognize from a file:
    file_name = imgPath
    # For multi-site types, add the src_url parameter; see the API docs: http://docs.fateadm.com/web/#/1?page_id=6
    result = api.PredictFromFileExtend(pred_type, file_name)  # returns the recognition result directly
    # rsp = api.PredictFromFile(pred_type, file_name)  # returns the detailed recognition result
    '''
    # If not recognizing from a file, call the Predict API instead:
    # result = api.PredictExtend(pred_type, data)  # returns the recognition result directly
    rsp = api.Predict(pred_type, data)  # returns the detailed recognition result
    '''
    # just_flag = False
    # if just_flag:
    #     if rsp.ret_code == 0:
    #         # If the result is not what was expected, this API refunds the order
    #         # Refunds are only for results that were recognized normally but rejected by the target website;
    #         # do not abuse them, or the account may be banned
    #         api.Justice(rsp.request_id)
    # card_id = "123"
    # card_key = "123"
    # Top up
    # api.Charge(card_id, card_key)
    # LOG("print in testfunc")
    return result

# if __name__ == "__main__":
#     TestFunc()

session = requests.Session()
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.193 Safari/537.36'
}
url = 'http://www.renren.com/'
# Send every request through the same session so that cookies set before and during login are kept
page_text = session.get(url, headers=headers).text
tree = etree.HTML(page_text)
code_img_src = tree.xpath('//*[@id="verifyPic_login"]/@src')[0]
code_img_data = session.get(code_img_src, headers=headers).content
with open('./code.jpg', 'wb') as fp:
    fp.write(code_img_data)

result = TestFunc('code.jpg', 30600)
print('Recognition result: ' + result)

login_url = 'http://www.renren.com/ajaxLogin/login?1=1&uniqueTimestamp=2021121720536'
data = {
    'email': '15893301681',
    'icode': result,
    'origURL': 'http://www.renren.com/home',
    'domain': 'renren.com',
    'key_id': '1',
    'captcha_type': 'web_login',
    'password': '47e27dd5ef32b31041ebf56ec85a9b1e4233875e36396241c88245b188c56cdb',
    'rkey': 'c655ef0c57a72755f1240d6c0efac67d',
    'f': '',
}
response = session.post(login_url, headers=headers, data=data)
print(response.status_code)
with open('renren.html', 'w', encoding='utf-8') as fp:
    fp.write(response.text)

# headers = {
#     'Cookies'
# }
# The session now carries the login cookies, so the profile page can be requested directly
detail_url = 'http://www.renren.com/975996803/profile'
detail_page_text = session.get(detail_url, headers=headers).text
with open('bobo.html', 'w', encoding='utf-8') as fp:
    fp.write(detail_page_text)

Chapter 6: High-performance asynchronous crawlers (thread pools, coroutines)

1. Multi-task asynchronous crawling with aiohttp

import asyncio
import time
import aiohttp

start = time.time()
urls = [
    'http://127.0.0.1:5000/bobo', 'http://127.0.0.1:5000/jay', 'http://127.0.0.1:5000/tom'
]

async def get_page(url):
    # print('Downloading', url)
    # response = requests.get(url)
    # print('Download finished', response.text)
    async with aiohttp.ClientSession() as session:
        # session.get() already returns an async context manager, so no extra await is needed
        async with session.get(url) as response:
            page_text = await response.text()
            print(page_text)

tasks = []
for url in urls:
    c = get_page(url)
    task = asyncio.ensure_future(c)
    tasks.append(task)

loop = asyncio.get_event_loop()
loop.run_until_complete(asyncio.wait(tasks))
end = time.time()
print('Total time', end - start)

2. Flask server

from flask import Flask
import time

app = Flask(__name__)

@app.route('/bobo')
def index_bobo():
    time.sleep(2)
    return 'Hello bobo'

@app.route('/jay')
def index_jay():
    time.sleep(2)
    return 'Hello jay'

@app.route('/tom')
def index_tom():
    time.sleep(2)
    return 'Hello tom'

if __name__ == '__main__':
    app.run(threaded=True)

3. Multi-task coroutines

import asyncio
import time

async def request(url):
    print('Downloading', url)
    # time.sleep(2) would block the whole event loop; asyncio.sleep yields control instead
    await asyncio.sleep(2)
    print('Download finished', url)

start = time.time()
urls = ['www.baidu.com',
        'www.sogou.com',
        'www.goubanjia.com'
        ]
tasks = []
for url in urls:
    c = request(url)
    task = asyncio.ensure_future(c)
    tasks.append(task)

loop = asyncio.get_event_loop()
loop.run_until_complete(asyncio.wait(tasks))
print(time.time()-start)
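On recent Python versions, calling `asyncio.get_event_loop()` without a running loop is deprecated, so the same fan-out is often written with `asyncio.run` and `asyncio.gather`. The following is a minimal sketch of that variant, not the author's code: `fake_download` and its `delay` parameter are illustrative names, and `asyncio.sleep` stands in for real network I/O.

```python
import asyncio
import time


async def fake_download(url, delay=0.2):
    # asyncio.sleep stands in for a non-blocking network request
    await asyncio.sleep(delay)
    return 'done:' + url


async def main(urls):
    # gather schedules all coroutines concurrently and keeps the input order
    return await asyncio.gather(*(fake_download(u) for u in urls))


urls = ['www.baidu.com', 'www.sogou.com', 'www.goubanjia.com']
start = time.perf_counter()
results = asyncio.run(main(urls))
elapsed = time.perf_counter() - start
print(results)
print(round(elapsed, 2))
```

Because the three waits overlap, the total time should be close to a single delay rather than three times that.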

4. Multi-task asynchronous crawler

import requests
import asyncio
import time
# import aiohttp

start = time.time()
urls = [
    'http://127.0.0.1:5000/bobo', 'http://127.0.0.1:5000/jay', 'http://127.0.0.1:5000/tom'
]
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.193 Safari/537.36'
}

async def get_page(url):
    print('Downloading', url)
    # requests.get is a blocking call, so these tasks still run one after another;
    # compare the total time with the aiohttp version in section 1
    response = requests.get(url, headers=headers)
    print('Download finished', response.text)

tasks = []
for url in urls:
    c = get_page(url)
    task = asyncio.ensure_future(c)
    tasks.append(task)

loop = asyncio.get_event_loop()
loop.run_until_complete(asyncio.wait(tasks))
end = time.time()
print('Total time', end - start)
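Since `requests.get` blocks, wrapping it in `async def` alone does not make the downloads overlap. One possible workaround, when a blocking library has to stay, is to hand the call to a worker thread with `loop.run_in_executor`. The sketch below assumes that approach; `blocking_fetch` is a hypothetical stand-in that sleeps instead of making a real HTTP request.

```python
import asyncio
import time


def blocking_fetch(url):
    # time.sleep stands in for a blocking call such as requests.get(url)
    time.sleep(0.2)
    return 'body of ' + url


async def fetch_all(urls):
    loop = asyncio.get_running_loop()
    # None selects the loop's default thread pool executor
    futures = [loop.run_in_executor(None, blocking_fetch, u) for u in urls]
    return await asyncio.gather(*futures)


urls = ['http://127.0.0.1:5000/bobo', 'http://127.0.0.1:5000/jay', 'http://127.0.0.1:5000/tom']
start = time.perf_counter()
pages = asyncio.run(fetch_all(urls))
elapsed = time.perf_counter() - start
print(pages)
print(round(elapsed, 2))
```

The blocking calls run in separate threads, so the event loop stays free and the waits overlap.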

5. Example

import requests

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.193 Safari/537.36'
}
url = 'https://www.pearvideo.com/videoStatus.jsp?contId=1719770&mrd=0.559512982919081'
response = requests.get(url, headers=headers)
print(response.text)
# Video URL noted by the author:
# https://video.pearvideo.com/mp4/short/20210209/1613307944808-15603370-hd.mp4

6. Synchronous crawler

import requests

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.193 Safari/537.36'
}
urls = [
    'https://www.cnblogs.com/shaozheng/p/12795953.html',
    'https://www.cnblogs.com/hanfe1/p/12661505.html',
    'https://www.cnblogs.com/tiger666/articles/11070427.html']

def get_content(url):
    print('Crawling:', url)
    response = requests.get(url, headers=headers)
    if response.status_code == 200:
        return response.content

def parse_content(content):
    print('Length of the response data:', len(content))

for url in urls:
    content = get_content(url)
    # get_content returns None for non-200 responses, so skip those
    if content is not None:
        parse_content(content)

7. Basic thread pool usage

# Sequential version, kept for comparison:
# import time
# def get_page(name):
#     print('Downloading:', name)
#     time.sleep(2)
#     print('Downloaded:', name)
# name_list = ['xiaozi', 'aa', 'bb', 'cc']
# start_time = time.time()
# for i in range(len(name_list)):
#     get_page(name_list[i])
# end_time = time.time()
# print('%d second' % (end_time-start_time))

import time
from multiprocessing.dummy import Pool

start_time = time.time()

def get_page(name):
    print('Downloading:', name)
    time.sleep(2)
    print('Downloaded:', name)

name_list = ['xiaozi', 'aa', 'bb', 'cc']
# multiprocessing.dummy.Pool is a thread pool; 4 workers handle the 4 names in parallel
pool = Pool(4)
pool.map(get_page, name_list)
end_time = time.time()
print(end_time-start_time)
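The standard library also offers `concurrent.futures.ThreadPoolExecutor`, which does the same job as `multiprocessing.dummy.Pool` here. The sketch below is an alternative under that assumption, not the approach used in the article; it shortens the sleep to 0.2 seconds and returns a value so the results can be collected.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def get_page(name):
    # time.sleep stands in for a blocking download
    time.sleep(0.2)
    return 'downloaded:' + name


name_list = ['xiaozi', 'aa', 'bb', 'cc']
start_time = time.perf_counter()
# The with-block waits for all workers and shuts the pool down on exit
with ThreadPoolExecutor(max_workers=4) as pool:
    results = list(pool.map(get_page, name_list))
elapsed = time.perf_counter() - start_time
print(results)
print(round(elapsed, 2))
```

`pool.map` returns results in the order of `name_list`, regardless of which thread finishes first.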

8. Using a thread pool in a crawler

import requests
from lxml import etree
import re
from multiprocessing.dummy import Pool

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.193 Safari/537.36'
}
url = 'https://www.pearvideo.com/'
page_text = requests.get(url, headers=headers).text
tree = etree.HTML(page_text)
li_list = tree.xpath('//div[@class="vervideo-tlist-bd recommend-btbg clearfix"]/ul/li')
# li_list = tree.xpath('//ul[@class="vervideo-tlist-small"]/li')
urls = []
for li in li_list:
    detail_url = 'https://www.pearvideo.com/' + li.xpath('./div/a/@href')[0]
    # name = li.xpath('./div/a/div[2]/text()')[0] + '.mp4'
    name = li.xpath('./div/a/div[2]/div[2]/text()')[0] + '.mp4'
    # print(detail_url, name)
    detail_page_text = requests.get(detail_url, headers=headers).text
    # ex = 'srcUrl="(.*?)",vdoUrl'
    # video_url = re.findall(ex, detail_page_text)[0]
    # video_url = tree.xpath('//img[@class="img"]/@src')[0]
    # https://video.pearvideo.com/mp4/short/20210209/{}-15603370-hd.mp4
    #xhrm码
    print(detail_page_text)

# Disabled in the original: video_url is never assigned above, so this part cannot run as-is
'''
    dic = {
        'name': name,
        'url': video_url
    }
    urls.append(dic)

def get_video_data(dic):
    url = dic['url']
    print(dic['name'], 'downloading......')
    data = requests.get(url, headers=headers).content
    # Video data is binary, so the file is opened in 'wb' mode
    with open(dic['name'], 'wb') as fp:
        fp.write(data)
    print(dic['name'], 'downloaded!')

pool = Pool(4)
pool.map(get_video_data, urls)
pool.close()
pool.join()
'''

9. Coroutines

import asyncio

async def request(url):
    print('Requesting url', url)
    print('Request succeeded,', url)
    return url

# Calling an async function returns a coroutine object; nothing runs yet
c = request('www.baidu.com')

# Option 1: run the coroutine object directly
# loop = asyncio.get_event_loop()
# loop.run_until_complete(c)

# Option 2: wrap it in a task created by the loop
# loop = asyncio.get_event_loop()
# task = loop.create_task(c)
# print(task)
# loop.run_until_complete(task)
# print(task)

# Option 3: wrap it with ensure_future
# loop = asyncio.get_event_loop()
# task = asyncio.ensure_future(c)
# print(task)
# loop.run_until_complete(task)
# print(task)

# Option 4: bind a callback that receives the finished task
def callback_func(task):
    print(task.result())

loop = asyncio.get_event_loop()
task = asyncio.ensure_future(c)
task.add_done_callback(callback_func)
loop.run_until_complete(task)

Chapter 7: Handling dynamically loaded data (selenium, simulated 12306 login)

1. selenium basics

from selenium import webdriver
from lxml import etree
from time import sleep

bro = webdriver.Chrome(executable_path='chromedriver.exe')
bro.get('http://scxk.nmpa.gov.cn:81/xk/')
# page_source holds the HTML after the browser has rendered the dynamic content
page_text = bro.page_source
tree = etree.HTML(page_text)
li_list = tree.xpath('//ul[@id="gzlist"]/li')
for li in li_list:
    name = li.xpath('./dl/@title')[0]
    print(name)
sleep(5)
bro.quit()

2. Other automated actions with selenium

from selenium import webdriver
from selenium.webdriver.common.by import By
from lxml import etree
from time import sleep

bro = webdriver.Chrome()
bro.get('https://www.taobao.com/')
sleep(2)
# find_element(By.XPATH, ...) replaces find_element_by_xpath, which was removed in Selenium 4.3
search_input = bro.find_element(By.XPATH, '//*[@id="q"]')
search_input.send_keys('Iphone')
sleep(2)
# bro.execute_script('window.scrollTo(0,document.body.scrollHeight)')
# sleep(5)
btn = bro.find_element(By.XPATH, '//*[@id="J_TSearchForm"]/div[1]/button')
print(type(btn))
btn.click()
bro.get('https://www.baidu.com')
sleep(2)
bro.back()
sleep(2)
bro.forward()
sleep(5)
bro.quit()

3. 12306 login example

# Sophomore year
# February 18, 2021
# Winter break ends March 7

from selenium import webdriver
import time
from PIL import Image
from selenium.webdriver.chrome.options import Options
from selenium.webdriver import ChromeOptions
from selenium.webdriver import ActionChains
from selenium.webdriver.common.by import By

# chrome_options = Options()
# chrome_options.add_argument('--headless')
# chrome_options.add_argument('--disable-gpu')
bro = webdriver.Chrome()
bro.maximize_window()
time.sleep(5)
# option = ChromeOptions()
# option.add_experimental_option('excludeSwitches', ['enable-automation'])
# bro = webdriver.Chrome(chrome_options=chrome_options)
# chrome_options.add_argument("--window-size=1920,1050")
# bro = webdriver.Chrome(chrome_options=chrome_options,options= option)
bro.get('https://kyfw.12306.cn/otn/resources/login.html')
time.sleep(3)
bro.find_element(By.XPATH, '/html/body/div[2]/div[2]/ul/li[2]/a').click()
# Screenshot the whole page, then crop out the captcha image
bro.save_screenshot('aa.png')
time.sleep(2)
code_img_ele = bro.find_element(By.XPATH, '//*[@id="J-loginImg"]')
time.sleep(2)
location = code_img_ele.location  # top-left corner of the captcha element
print('location:', location)
size = code_img_ele.size  # width and height of the captcha element
print('size', size)
# Crop box: (left, top, right, bottom)
rangle = (
    int(location['x']), int(location['y']), int(location['x'] + int(size['width'])), int(location['y'] + int(size['height']))
)
print(rangle)
i = Image.open('./aa.png')
code_img_name = './code.png'
frame = i.crop(rangle)
frame.save(code_img_name)
# bro.quit()
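The crop box is built from the element's `location` and `size`. If the screenshot is rendered at a different scale than the page coordinates (for example with display scaling enabled), the box can land in the wrong place, which may be one cause of offset coordinates. The helper below is a minimal sketch of the box calculation with an optional scale factor; `crop_box` and `scale` are hypothetical names, and the right scale value for a given machine is an assumption that has to be checked.

```python
def crop_box(location, size, scale=1.0):
    """Return (left, top, right, bottom) for PIL's Image.crop.

    location: dict with 'x' and 'y', as returned by WebElement.location
    size: dict with 'width' and 'height', as returned by WebElement.size
    scale: hypothetical ratio between screenshot pixels and page coordinates
    """
    left = int(location['x'] * scale)
    top = int(location['y'] * scale)
    right = int((location['x'] + size['width']) * scale)
    bottom = int((location['y'] + size['height']) * scale)
    return (left, top, right, bottom)


print(crop_box({'x': 10, 'y': 20}, {'width': 100, 'height': 50}))
print(crop_box({'x': 10, 'y': 20}, {'width': 100, 'height': 50}, scale=1.5))
```

With `scale=1.0` this produces the same tuple as the `rangle` calculation above.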

# Sophomore year
# February 19, 2021
# Winter break ends March 7
# The captcha coordinates could not be located accurately (they were offset); with a headless browser recognition works

'''
# chaojiying and im come from the Chaojiying client shown in section 6
result = chaojiying.PostPic(im, 9004)['pic_str']
print(result)
all_list = []
if '|' in result:
    # Several points: "x1,y1|x2,y2|..."
    list_1 = result.split('|')
    count_1 = len(list_1)
    for i in range(count_1):
        xy_list = []
        x = int(list_1[i].split(',')[0])
        y = int(list_1[i].split(',')[1])
        xy_list.append(x)
        xy_list.append(y)
        all_list.append(xy_list)
else:
    # A single point: "x,y"
    xy_list = []
    x = int(result.split(',')[0])
    y = int(result.split(',')[1])
    xy_list.append(x)
    xy_list.append(y)
    all_list.append(xy_list)
print(all_list)
for l in all_list:
    x = l[0]
    y = l[1]
    ActionChains(bro).move_to_element_with_offset(code_img_ele, x, y).click().perform()
    time.sleep(0.5)
bro.find_element_by_id('J-userName').send_keys('')
time.sleep(2)
bro.find_element_by_id('J-password').send_keys('')
time.sleep(2)
bro.find_element_by_id('J-login').click()
bro.quit()
'''
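The disabled block above turns the recognition string into a list of click coordinates. Assuming the format it handles (points separated by `|`, with `x,y` inside each point), the two branches can be folded into one small function. `parse_points` is a hypothetical helper name used only for this sketch.

```python
def parse_points(pic_str):
    """Convert 'x1,y1|x2,y2' (or a single 'x,y') into [[x1, y1], [x2, y2]]."""
    points = []
    # split('|') also works for a single point: it returns a one-element list
    for pair in pic_str.split('|'):
        x_text, y_text = pair.split(',')
        points.append([int(x_text), int(y_text)])
    return points


print(parse_points('253,83|135,70'))
print(parse_points('100,50'))
```

Each `[x, y]` pair can then be passed to `move_to_element_with_offset` as in the loop above.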

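4. Action chains and iframe handling

from selenium import webdriver
from selenium.webdriver import ActionChains
from selenium.webdriver.common.by import By

bro = webdriver.Chrome()
bro.get('https://www.runoob.com/try/try.php?filename=jqueryui-api-droppable')
# Elements inside an iframe are only reachable after switching into it;
# 'id' is a placeholder for the iframe's id
bro.switch_to.frame('id')
# '' is a placeholder for the id of the element to drag;
# click_and_hold needs a single element, so find_element is used rather than find_elements
div = bro.find_element(By.ID, '')
action = ActionChains(bro)
action.click_and_hold(div)
for i in range(5):
    action.move_by_offset(17, 0)
    action.pause(0.3)
action.release()
# Nothing happens until perform() runs the queued actions
action.perform()
print(div)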

5. Headless Chrome + anti-detection

from selenium import webdriver
from time import sleep
from selenium.webdriver.chrome.options import Options

# Options and webdriver.ChromeOptions are the same class, so one object can carry
# both the headless flags and the anti-detection switch
chrome_options = Options()
chrome_options.add_argument('--headless')
chrome_options.add_argument('--disable-gpu')
chrome_options.add_experimental_option('excludeSwitches', ['enable-automation'])
bro = webdriver.Chrome(options=chrome_options)
bro.get('https://www.baidu.com')
print(bro.page_source)
sleep(2)
bro.quit()

6. Simulated 12306 login with selenium

# February 18, 2021

import requests
from hashlib import md5

class Chaojiying_Client(object):

    def __init__(self, username, password, soft_id):
        self.username = username
        password = password.encode('utf8')
        # The service expects the MD5 hex digest of the password, not the plain text
        self.password = md5(password).hexdigest()
        self.soft_id = soft_id
        self.base_params = {
            'user': self.username,
            'pass2': self.password,
            'softid': self.soft_id,
        }
        self.headers = {
            'Connection': 'Keep-Alive',
            'User-Agent': 'Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 5.1; Trident/4.0)',
        }

    def PostPic(self, im, codetype):
        """
        im: image bytes
        codetype: captcha type, see http://www.chaojiying.com/price.html
        """
        params = {
            'codetype': codetype,
        }
        params.update(self.base_params)
        files = {'userfile': ('ccc.jpg', im)}
        r = requests.post('http://upload.chaojiying.net/Upload/Processing.php', data=params, files=files, headers=self.headers)
        return r.json()

    def ReportError(self, im_id):
        """
        im_id: image ID of the captcha being reported as wrong
        """
        params = {
            'id': im_id,
        }
        params.update(self.base_params)
        r = requests.post('http://upload.chaojiying.net/Upload/ReportError.php', data=params, headers=self.headers)
        return r.json()
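The constructor above sends the MD5 hex digest of the password rather than the password itself. The sketch below isolates that step so it can be checked without any network access; `build_base_params` is a hypothetical helper, and the user name and password are made-up demo values.

```python
from hashlib import md5


def build_base_params(username, password, soft_id):
    # Same fields as Chaojiying_Client.base_params: the password goes out as an MD5 hex digest
    return {
        'user': username,
        'pass2': md5(password.encode('utf8')).hexdigest(),
        'softid': soft_id,
    }


params = build_base_params('demo_user', 'abc', '96001')
print(params['pass2'])
print(len(params['pass2']))
```

An MD5 hex digest is always 32 characters long, whatever the length of the password.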

# if __name__ == '__main__':
#     chaojiying = Chaojiying_Client('Chaojiying username', 'Chaojiying password', '96001')
#     im = open('a.jpg', 'rb').read()
#     print(chaojiying.PostPic(im, 1902))

# chaojiying = Chaojiying_Client('xxxxxxxxxx', 'xxxxxxxxxx', 'xxxxxxx')
# im = open('第七章:动态加载数据处理/12306.jpg', 'rb').read()
# print(chaojiying.PostPic(im, 9004)['pic_str'])

from selenium import webdriver
from selenium.webdriver.common.by import By
import time

bro = webdriver.Chrome()
bro.get('https://kyfw.12306.cn/otn/resources/login.html')
time.sleep(3)
bro.find_element(By.XPATH, '/html/body/div[2]/div[2]/ul/li[2]/a').click()

7. Simulated login to QQ Zone

from selenium import webdriver
from selenium.webdriver import ActionChains
from selenium.webdriver.common.by import By
from time import sleep

bro = webdriver.Chrome()
bro.get('https://qzone.qq.com/')
# The login form lives inside an iframe, so switch into it first
bro.switch_to.frame('login_frame')
bro.find_element(By.ID, 'switcher_plogin').click()
# account = input('Enter the account: ')
bro.find_element(By.ID, 'u').send_keys('')
# password = input('Enter the password: ')
bro.find_element(By.ID, 'p').send_keys('')
bro.find_element(By.ID, 'login_button').click()

Chapter 8: The scrapy framework

1. Project exercises and scrapy configuration changes

2. bossPro example

# Sophomore year
# Tuesday, February 23, 2021
# Winter break ends March 7

import requests
from lxml import etree

# url = 'https://www.zhipin.com/c101010100/?query=python&ka=sel-city-101010100'
url = 'https://www.zhipin.com/c101120100/b_%E9%95%BF%E6%B8%85%E5%8C%BA/?ka=sel-business-5'
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/88.0.4324.150 Safari/537.36'
}
page_text = requests.get(url, headers=headers).text
tree = etree.HTML(page_text)
print(tree)
li_list = tree.xpath('//*[@id="main"]/div/div[2]/ul/li')
print(li_list)
for li in li_list:
    # The original expression lacked the "/" between the span predicate and "a"
    job_name = li.xpath('.//span[@class="job-name"]/a/text()')
    print(job_name)

3. qiubaiPro example

# -*- coding: utf-8 -*-
# Sophomore year
# Sunday, February 21, 2021
# Winter break ends March 7

import requests
from lxml import etree

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/88.0.4324.150 Safari/537.36'
}
url = 'https://www.qiushibaike.com/text/'
page_text = requests.get(url, headers=headers).text
tree = etree.HTML(page_text)
div_list = tree.xpath('//div[@id="content"]/div[1]/div[2]/div')
print(div_list)
# print(tree.xpath('//*[@id="qiushi_tag_124072337"]/a[1]/div/span//text()'))
for div in div_list:
    author = div.xpath('./div[1]/a[2]/h2/text()')[0]
    # print(author)
    # //text() returns every text node under the span; join them into one string
    content = div.xpath('./a[1]/div/span//text()')
    content = ''.join(content)
    # content = div.xpath('//*[@id="qiushi_tag_124072337"]/a[1]/div/span')
    # print(content)
    print(author, content)
# print(tree.xpath('//*[@id="qiushi_tag_124072337"]/div[1]/a[2]/h2/text()'))

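4. Database example

# Sophomore year
# Sunday, February 21, 2021
# Winter break ends March 7

import pymysql

# Connect to the database
# host: IP of the machine running the MySQL server
# user: user name
# password: password
# database: name of the database to connect to
# Keyword arguments are used because PyMySQL 1.0 and later no longer accept these positionally
# db = pymysql.connect(host="localhost", user="root", password="200829", database="wj")
db = pymysql.connect(host="192.168.31.19", user="root", password="200829", database="wj")
# Create a cursor object
cursor = db.cursor()
sql = "select version()"
# Execute the SQL statement
cursor.execute(sql)
# Fetch the returned row
data = cursor.fetchone()
print(data)
# Disconnect
cursor.close()
db.close()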
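The connect, cursor, execute, fetch, close sequence above is the generic Python DB-API flow, so it can be tried without a MySQL server. The sketch below swaps in the standard library's `sqlite3` with an in-memory database purely so that it runs anywhere; the `joke` table and its rows are made-up sample data. Note that `sqlite3` uses `?` placeholders, while PyMySQL uses `%s`.

```python
import sqlite3

# In-memory database: a stand-in for the MySQL server used in the article
db = sqlite3.connect(':memory:')
cursor = db.cursor()
cursor.execute('CREATE TABLE joke (author TEXT, content TEXT)')

# Placeholders keep scraped text from being interpreted as SQL
rows = [('alice', 'first joke'), ('bob', 'second joke')]
cursor.executemany('INSERT INTO joke (author, content) VALUES (?, ?)', rows)
db.commit()

cursor.execute('SELECT author, content FROM joke WHERE author = ?', ('bob',))
row = cursor.fetchone()
print(row)

cursor.close()
db.close()
```

Passing values as parameters instead of formatting them into the SQL string is the part that carries over directly to storing scraped data with PyMySQL.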

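Scrapy projects are not easy to upload here.

If you need the scrapy-related material, you can download it from my resources page.

It is also available through the WeChat official account (yk 坤帝, the same as the blog nickname).

Replies through the official account may be a little slow; it was only just registered and I am still figuring it out.

If you have questions or want to discuss, you can also leave a message on the official account.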
