Scraping the Mtime TV series TOP100 (title, director, cast and synopsis) with multiple coroutines and a queue

source link: https://blog.csdn.net/weixin_45735242/article/details/115402710


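First, inspect the structure of the TV series page. All the series are listed in the following section:

The page does not contain links to the individual series, so we open a few series and check whether their URLs follow a pattern.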

The data turns out to be loaded by the following request:

Open its Headers tab:

URL: http://front-gateway.mtime.com/library/movie/detail.api?tt=1617353592649&movieId=269369&locationId=290

Next, open another series and compare its URL:

URL: http://front-gateway.mtime.com/library/movie/detail.api?tt=1617351165871&movieId=111754&locationId=290

The two URLs differ only in the `tt` and `movieId` parameters.
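Instead of comparing the two URLs by eye, the check can be scripted with the standard library's `urllib.parse`. This is a small sketch; `query_params` and `differing_params` are hypothetical helper names, not part of the original crawler.

```python
from urllib.parse import urlsplit, parse_qs

# The two detail-API URLs copied from the browser's Network panel
url_a = 'http://front-gateway.mtime.com/library/movie/detail.api?tt=1617353592649&movieId=269369&locationId=290'
url_b = 'http://front-gateway.mtime.com/library/movie/detail.api?tt=1617351165871&movieId=111754&locationId=290'


def query_params(url):
    """Return the query string of `url` as a dict of single values."""
    return {k: v[0] for k, v in parse_qs(urlsplit(url).query).items()}


def differing_params(u1, u2):
    """Return the sorted names of query parameters whose values differ."""
    p1, p2 = query_params(u1), query_params(u2)
    return sorted(k for k in p1.keys() | p2.keys() if p1.get(k) != p2.get(k))


print(differing_params(url_a, url_b))  # ['movieId', 'tt']
```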

Now let's check whether the content is still returned after removing `tt`:

URL: http://front-gateway.mtime.com/library/movie/detail.api?movieId=111754&locationId=290

It is.
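The same module can drop `tt` from any URL copied out of DevTools. `tt` looks like a millisecond timestamp (presumably a cache-buster), but that is an assumption; the sketch below relies only on the observation above that the API answers without it. `strip_param` is a hypothetical helper.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode


def strip_param(url, name='tt'):
    """Return `url` with query parameter `name` removed, keeping the others in order."""
    parts = urlsplit(url)
    kept = [(k, v) for k, v in parse_qsl(parts.query) if k != name]
    return urlunsplit(parts._replace(query=urlencode(kept)))


full = 'http://front-gateway.mtime.com/library/movie/detail.api?tt=1617351165871&movieId=111754&locationId=290'
print(strip_param(full))
# http://front-gateway.mtime.com/library/movie/detail.api?movieId=111754&locationId=290
```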

So all we need is the `movieId` of each series to request the details of every series.

The movieIds can be found on the TV series list page:

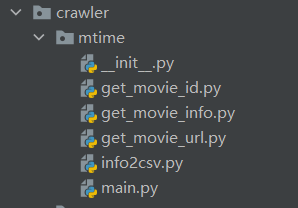

Organize the code into the following files:

get_movie_id.py

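```python
import requests


def get_movie_id(url):
    """Fetch the ranking API and return the movieId of every series in it."""
    movieId_list = []
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) '
                      'Chrome/89.0.4389.82 Safari/537.36'
    }
    res = requests.get(url=url, headers=headers, timeout=10)
    res.raise_for_status()  # fail loudly instead of parsing an error page
    res_json = res.json()
    # In this response the series list is the third block of data.items
    list_movieId = res_json['data']['items'][2]['items']
    for item in list_movieId:
        movieId_list.append(item['movieInfo']['movieId'])
    return movieId_list
```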
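`get_movie_id` hard-codes the series list at index 2 of `data.items`, so it breaks if the page adds or reorders a block. Below is a more tolerant sketch, assuming the response keeps the nesting `data.items[*].items[*].movieInfo.movieId` seen above; `find_movie_ids` and the sample response are hypothetical.

```python
def find_movie_ids(res_json):
    """Collect every movieInfo.movieId under data.items[*].items[*]."""
    ids = []
    blocks = (res_json.get('data') or {}).get('items') or []
    for block in blocks:
        # Blocks without a nested 'items' list (banners etc.) are skipped
        for item in block.get('items') or []:
            info = item.get('movieInfo') or {}
            if 'movieId' in info:
                ids.append(info['movieId'])
    return ids


# Hypothetical, heavily trimmed response used only for illustration
sample = {'data': {'items': [
    {'items': []},
    {'title': 'banner'},
    {'items': [{'movieInfo': {'movieId': 269369}},
               {'movieInfo': {'movieId': 111754}}]},
]}}
print(find_movie_ids(sample))  # [269369, 111754]
```

If other blocks on the page also carry `movieInfo` entries, they are collected too, so it is worth checking the count (or de-duplicating) against what the list page shows.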

get_movie_url.py

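```python
def get_movieid_url(movieId_list):
    """Build the detail-API URL (without the tt parameter) for each movieId."""
    movie_url_list = []
    for movie_id in movieId_list:  # avoid shadowing the built-in id()
        movie_url = 'http://front-gateway.mtime.com/library/movie/detail.api?movieId={}&locationId=290'.format(movie_id)
        movie_url_list.append(movie_url)
    return movie_url_list
```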

get_movie_info.py

```python
# Patch the standard library before importing requests so that its blocking
# socket calls yield to other greenlets
from gevent import monkey

monkey.patch_all()

import requests
import gevent
from gevent.queue import Queue

work = Queue()  # detail-API URLs still to be crawled

# Column name -> values: 剧名 = title, 导演 = directors, 主演 = cast, 简介 = synopsis
movie_info = {'剧名': [], '导演': [], '主演': [], '简介': []}

headers = {
    'Accept': 'application/json, text/plain, */*',
    'Accept-Encoding': 'gzip, deflate',
    'Accept-Language': 'zh-CN,zh;q=0.9',
    'Connection': 'keep-alive',
    'Content-Type': 'application/json',
    'Cookie': '_tt_=5F42EA056AFCC7EBAD146F143CEE70BC; __utma=196937584.1304778651.1617260335.1617260335.1617260335.1; __utmz=196937584.1617260335.1.1.utmcsr=(direct)|utmccn=(direct)|utmcmd=(none); Hm_lvt_07aa95427da600fc217b1133c1e84e5b=1617196207,1617259101,1617346023; Hm_lpvt_07aa95427da600fc217b1133c1e84e5b=1617346629',
    'Host': 'front-gateway.mtime.com',
    'Origin': 'http://movie.mtime.com',
    'Referer': 'http://movie.mtime.com/109956/',
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/84.0.4147.89 Safari/537.36 SLBrowser/7.0.0.2261 SLBChan/25',
    'X-Mtime-Wap-CheckValue': 'mtime'
}


def get_movie_info(movie_url_list, num_crawler=2):
    """Queue every URL, run num_crawler greenlets over the queue, return the results."""
    for url in movie_url_list:
        work.put_nowait(url)

    taskes_list = []
    for i in range(num_crawler):
        task = gevent.spawn(crawler)
        taskes_list.append(task)
    gevent.joinall(taskes_list)  # wait until every worker has drained the queue
    return movie_info


def crawler():
    """Worker: take URLs off the queue until it is empty and record each series."""
    # empty() and get_nowait() run back to back without yielding, so two
    # greenlets cannot both see the last URL and race for it
    while not work.empty():
        url = work.get_nowait()
        res = requests.get(url, headers=headers, timeout=10)
        if res.status_code != 200:
            # e.g. the server has started refusing requests; report and skip
            print(res.status_code, url)
            continue
        res = res.json()['data']['basic']

        actors = []
        directors = []
        for actor in res['actors']:
            if actor['name']:
                actors.append(actor['name'])
        for director in res['directors']:
            if director['name']:
                directors.append(director['name'])
        title = res['name']
        story = res['story']

        # Append all four fields together so the columns stay aligned row by row
        movie_info['剧名'].append(title)
        movie_info['导演'].append(directors)
        movie_info['主演'].append(actors)
        movie_info['简介'].append(story)
```
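The parsing inside `crawler` raises `KeyError` as soon as one series lacks a field. A hedged alternative is to pull it into a pure function that returns one record per series; `parse_basic` is a hypothetical name, and it assumes only the field names the crawler already reads (`name`, `story`, `actors[].name`, `directors[].name`).

```python
def parse_basic(basic):
    """Turn the data.basic object of detail.api into one record.

    Missing keys fall back to empty values instead of raising KeyError.
    """
    def names(people):
        # Keep only entries with a non-empty name, as the crawler does
        return [p['name'] for p in people or [] if p.get('name')]

    return {
        '剧名': basic.get('name', ''),
        '导演': names(basic.get('directors')),
        '主演': names(basic.get('actors')),
        '简介': basic.get('story', ''),
    }


basic = {'name': 'Example', 'story': 'Synopsis',
         'directors': [{'name': 'A'}, {'name': ''}],
         'actors': [{'name': 'B'}, {'name': None}]}
print(parse_basic(basic))
```

Collecting these records in a list (for example `records.append(parse_basic(res))`) keeps each row's fields together, and `pd.DataFrame(records)` accepts a list of dicts just as it accepts the dict of lists used above.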

info2csv.py

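```python
import os

import pandas as pd


def tocsv(movie_dict, path):
    """Write the scraped dict to <path>/mtime_movie_info.csv."""
    movie_info = pd.DataFrame(movie_dict)
    # os.path.join works on any OS; 'utf-8-sig' writes a BOM so Excel
    # displays the Chinese text correctly
    movie_info.to_csv(os.path.join(path, 'mtime_movie_info.csv'), encoding='utf-8-sig')
```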
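The director and cast columns hold Python lists, and pandas writes such cells using their string form, e.g. `['A', 'B']`. A small sketch that joins them into plain strings before calling `tocsv`; `join_list_columns` and the `' / '` separator are hypothetical choices.

```python
def join_list_columns(movie_dict, sep=' / '):
    """Return a copy of movie_dict in which every list cell is joined into one string."""
    return {
        col: [sep.join(v) if isinstance(v, list) else v for v in values]
        for col, values in movie_dict.items()
    }


demo = {'剧名': ['X'], '导演': [['A', 'B']], '主演': [[]], '简介': ['s']}
print(join_list_columns(demo))
```

Usage: `tocsv(join_list_columns(movie_dict), path)`. The input dict is left unchanged.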

main.py

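```python
# Patch as early as possible: get_movie_id imports requests before
# get_movie_info gets a chance to call patch_all()
from gevent import monkey

monkey.patch_all()

from crawler.mtime.get_movie_id import get_movie_id
from crawler.mtime.get_movie_info import get_movie_info
from crawler.mtime.get_movie_url import get_movieid_url
from crawler.mtime.info2csv import tocsv

if __name__ == '__main__':
    # Ranking-list API that returns the movieIds
    url = 'http://front-gateway.mtime.com/community/top_list/query.api?tt=1617345962465&type=2&pageIndex=1&pageSize=10'
    movie_id_list = get_movie_id(url)
    movie_url_list = get_movieid_url(movie_id_list)

    # Number of crawler greenlets. With too many the server blocks access,
    # so consider rotating several headers at random
    num_crawler = 1
    movie_dict = get_movie_info(movie_url_list, num_crawler)

    path = r'D:\Study\Python'  # directory where the CSV is saved
    tocsv(movie_dict, path)
    print('OK')
```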
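The comment in `main.py` suggests rotating several headers at random. One way to sketch that is to copy the shared `headers` dict and swap in a random User-Agent per request. The pool below reuses the two User-Agent strings already in this article; `USER_AGENTS` and `random_headers` are hypothetical names, and whether rotation alone avoids the block has not been verified against the server.

```python
import random

# Hypothetical pool; extend it with more real browser User-Agent strings
USER_AGENTS = [
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) '
    'Chrome/89.0.4389.82 Safari/537.36',
    'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) '
    'Chrome/84.0.4147.89 Safari/537.36',
]


def random_headers(base, pool=USER_AGENTS, rng=random):
    """Return a copy of `base` with a randomly chosen User-Agent; `base` is not modified."""
    headers = dict(base)
    headers['User-Agent'] = rng.choice(pool)
    return headers


print(random_headers({'Host': 'front-gateway.mtime.com'}, rng=random.Random(0)))
```

Inside `crawler` this would become `requests.get(url, headers=random_headers(headers), timeout=10)`. Adding a short `gevent.sleep(random.uniform(0.5, 1.5))` between requests is another option for slowing the crawl down; the delay range is an arbitrary assumption.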
