Golang Memory Allocation Optimization

source link: http://vearne.cc/archives/671


Copyright notice: this is an original article from this site, published by 萌叔.

When reposting, please credit 萌叔 | http://vearne.cc

If you are using imroc/req, io.Copy, or ioutil.ReadAll, or you are trying to optimize a workload that allocates and frees a large number of objects, this article may help.

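In one of our programs, we use the imroc/req library to call a backend HTTP service and read the result from the HTTP response.

The call chain is as follows: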

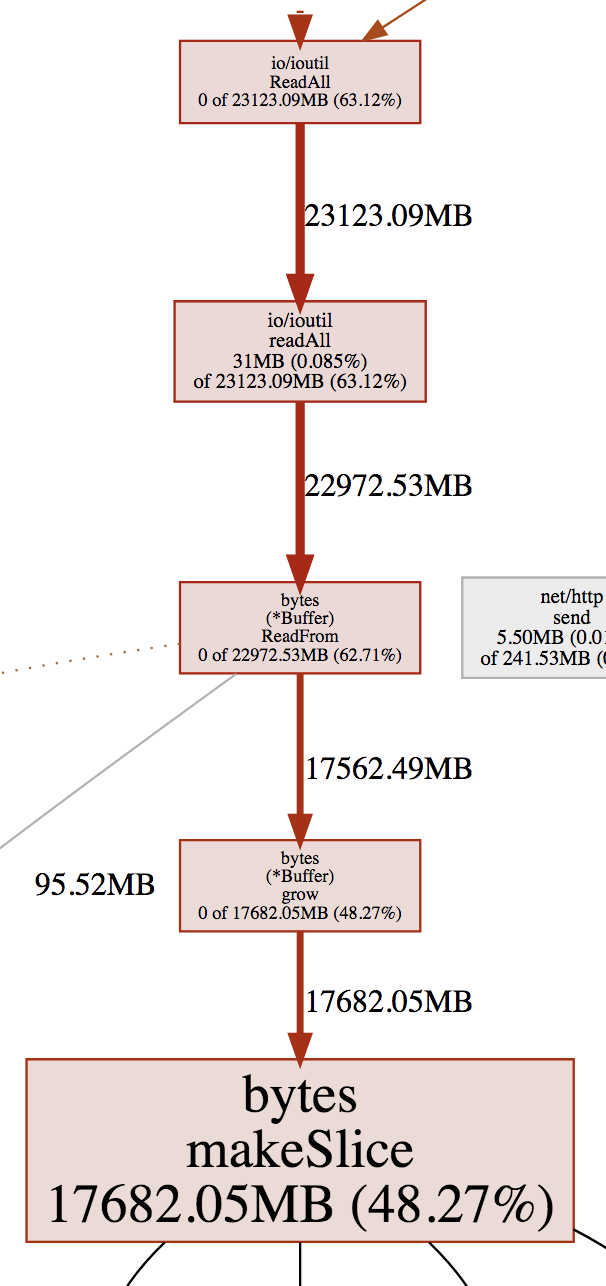

Resp.Bytes() -> Resp.ToBytes() -> ioutil.ReadAll(r io.Reader) -> Buffer.ReadFrom(r io.Reader)

Collecting cumulative memory allocation data with pprof shows that a large share of the allocations is triggered by Buffer.grow(n int).

3. Root cause analysis

The source code of ReadFrom is as follows:

    // MinRead is the minimum slice size passed to a Read call by
    // Buffer.ReadFrom. As long as the Buffer has at least MinRead bytes beyond
    // what is required to hold the contents of r, ReadFrom will not grow the
    // underlying buffer.
    const MinRead = 512

    // ReadFrom reads data from r until EOF and appends it to the buffer, growing
    // the buffer as needed. The return value n is the number of bytes read. Any
    // error except io.EOF encountered during the read is also returned. If the
    // buffer becomes too large, ReadFrom will panic with ErrTooLarge.
    func (b *Buffer) ReadFrom(r io.Reader) (n int64, err error) {
        b.lastRead = opInvalid
        for {
            i := b.grow(MinRead)
            m, e := r.Read(b.buf[i:cap(b.buf)])
            if m < 0 {
                panic(errNegativeRead)
            }
            b.buf = b.buf[:i+m]
            n += int64(m)
            if e == io.EOF {
                return n, nil // e is EOF, so return nil explicitly
            }
            if e != nil {
                return n, e
            }
        }
    }

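Because ioutil.ReadAll does not know how much space the final result will need, it starts by allocating a small Buffer (of size MinRead). As it reads data from the input into the Buffer, it checks whether the data still fits; if not, it tries to enlarge the Buffer by calling Buffer.grow().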

    // grow grows the buffer to guarantee space for n more bytes.
    // It returns the index where bytes should be written.
    // If the buffer can't grow it will panic with ErrTooLarge.
    func (b *Buffer) grow(n int) int {
        m := b.Len()
        // If buffer is empty, reset to recover space.
        if m == 0 && b.off != 0 {
            b.Reset()
        }
        // Try to grow by means of a reslice.
        if i, ok := b.tryGrowByReslice(n); ok {
            return i
        }
        // Check if we can make use of bootstrap array.
        if b.buf == nil && n <= len(b.bootstrap) {
            b.buf = b.bootstrap[:n]
            return 0
        }
        c := cap(b.buf)
        if n <= c/2-m {
            // We can slide things down instead of allocating a new
            // slice. We only need m+n <= c to slide, but
            // we instead let capacity get twice as large so we
            // don't spend all our time copying.
            copy(b.buf, b.buf[b.off:])
        } else if c > maxInt-c-n {
            panic(ErrTooLarge)
        } else {
            // Not enough space anywhere, we need to allocate.
            buf := makeSlice(2*c + n) // NOTE: pay attention to this line
            copy(buf, b.buf[b.off:])
            b.buf = buf
        }
        // Restore b.off and len(b.buf).
        b.off = 0
        b.buf = b.buf[:m+n]
        return m
    }

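If the result being read is large, makeSlice(n int) may be called repeatedly to create new byte slices. This produces many useless temporary objects and puts extra pressure on the GC.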
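To get a feel for the cost, the growth rule `2*c + n` can be simulated in isolation. This is a simplified model rather than the real `bytes.Buffer`: it assumes the buffer starts at `MinRead` bytes, always grows with `n = MinRead`, and stops once it holds the payload plus `MinRead` spare bytes. The function name `simulateGrowth` is made up for this sketch.

```go
package main

import "fmt"

const minRead = 512 // mirrors bytes.MinRead

// simulateGrowth (hypothetical helper) models repeated grow(minRead) calls
// while reading `total` bytes. It returns how many slices get allocated and
// how many bytes those slices add up to.
func simulateGrowth(total int) (allocs, allocated int) {
	c := minRead // assumed initial capacity
	allocs, allocated = 1, c
	// ReadFrom asks for minRead spare bytes before every Read call,
	// so the buffer must end up holding total + minRead bytes.
	for c-minRead < total {
		c = 2*c + minRead // the allocation highlighted in grow()
		allocs++
		allocated += c
	}
	return allocs, allocated
}

func main() {
	allocs, allocated := simulateGrowth(1 << 20) // 1 MiB payload
	fmt.Printf("allocations: %d, bytes allocated: %d\n", allocs, allocated)
	// prints "allocations: 12, bytes allocated: 4187136"
}
```

Under these assumptions, reading a 1 MiB payload allocates about 4 MiB in total across 12 slices, and all but the last slice become garbage immediately.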

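4. Optimization approach

- If we roughly know the size of the HTTP response, we can allocate a suitably large byte slice for the Buffer up front and avoid repeated calls to makeSlice(n int).
- Use sync.Pool to reuse temporary objects and reduce the cost of creating them repeatedly.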

To validate the approach, we ran a comparison experiment.

1) Unoptimized code

2) Optimized code

Comparison of results after 10,000 operations:

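| Metric | Unoptimized | Optimized |
| --- | --- | --- |
| mem.TotalAlloc (cumulative bytes allocated for heap objects) | 186,170,656 | 42,164,192 |
| mem.Mallocs (cumulative number of heap object allocations) | 732,161 | 681,532 |
| mem.Frees (cumulative number of heap object frees) | 717,737 | 661,557 |

As the table shows, the optimized version allocates about 77% fewer bytes in total, and the numbers of temporary-object allocations and frees also drop, by roughly 7% and 8%. The optimization is effective; in fact, the gap widens further as the number of executions grows.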
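The three metrics are fields of `runtime.MemStats`. A minimal sketch of how such deltas can be collected around a workload follows; the `measure` helper, the `allocDelta` type, and the sample workload are assumptions for illustration, not the benchmark used for the table.

```go
package main

import (
	"fmt"
	"runtime"
)

// allocDelta holds the change in three runtime.MemStats counters.
type allocDelta struct {
	TotalAlloc uint64 // bytes allocated for heap objects
	Mallocs    uint64 // heap objects allocated
	Frees      uint64 // heap objects freed
}

// sink forces the sample allocations below to escape to the heap.
var sink []byte

// measure (hypothetical helper) runs work and reports how much the
// counters grew while it ran.
func measure(work func()) allocDelta {
	var before, after runtime.MemStats
	runtime.GC() // start from a settled heap
	runtime.ReadMemStats(&before)
	work()
	runtime.ReadMemStats(&after)
	return allocDelta{
		TotalAlloc: after.TotalAlloc - before.TotalAlloc,
		Mallocs:    after.Mallocs - before.Mallocs,
		Frees:      after.Frees - before.Frees,
	}
}

func main() {
	d := measure(func() {
		for i := 0; i < 1000; i++ {
			sink = make([]byte, 1024)
		}
	})
	fmt.Printf("TotalAlloc=%d Mallocs=%d Frees=%d\n", d.TotalAlloc, d.Mallocs, d.Frees)
}
```

Because these counters cover the whole process, other goroutines that allocate during the measurement are included in the deltas.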

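Optimizing other similar scenarios

In some cases, you may be using io.Copy:

io.Copy(dst Writer, src Reader)

io.Copy copies data from src to dst, using a byte slice as an intermediate buffer.

You can use io.CopyBuffer in place of io.Copy:

io.CopyBuffer(dst Writer, src Reader, buf []byte)

and cache buf in a sync.Pool to reduce the allocation and freeing of temporary objects.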

If this article helped you, you can tip me so that I can devote more time and energy to sharing my experience and reflections.
