webrtcH4cKS: ~ Bisecting Browser Bugs (Arne Georg Gisnås Gleditsch)

source link: https://webrtchacks.com/bisecting-chrome-regressions/

Go to the source link to view the article. You can view the picture content, updated content and better typesetting reading experience. If the link is broken, please click the button below to view the snapshot at that time.

webrtcH4cKS: ~ Bisecting Browser Bugs (Arne Georg Gisnås Gleditsch)

When running WebRTC at scale, you end up hitting issues and frequent regressions. Being able to quickly identify what exactly broke is key to either preventing a regression from landing in Chrome Stable or adapting your own code to avoid the problem. Chrome’s bisect-builds.py tool makes this process much easier than you would suspect. Arne from Whereby gives you an example of how he used this to workaround an issue that came up recently.

{“editor”, “Philipp Hancke“}

In this post I am going to provide a blow-by-blow account of how a change to Chrome triggered a bug in Whereby and how we went about determining exactly what that change was.

Building an application on the foundation of WebRTC comes with some benefits and some drawbacks. The big benefit is obviously that you’re able to leverage the tremendous amount of awesome work that has gone into making WebRTC what it is today. However, it does also have some drawbacks. The correct operation of your application will depend on the correct operation of the supporting WebRTC technologies (like the browser) as well as the correct interaction between your application code and those technologies. When that interaction develops faults, the nature of those faults can either be blindingly obvious, very obscure, or somewhere in between. This is a story of a situation where it was neither obvious nor somewhere in between.

The Problem

That should never happen

The story starts when our support specialist Ashley reaches out to discuss the problems of a customer who is experiencing something weird. They reported that recently they had been experiencing problems transmitting video out past their corporate firewall. Audio was fine and video passed the firewall into their corporate network just fine. Participants outside the firewall, however, could only hear the participants on the inside the firewall, not see them. Even stranger, if a participant inside the firewall shares their screen, that will transmit out past the firewall. Outside participants can then observe the self-view of the inside participants via screen sharing, but the main video feed remains blank.

Knowing the architecture of WebRTC under the hood, this is an odd failure mode. If a firewall is letting audio packets past its gates, the odds that it would stop the accompanying video packets are quite unlikely. At the very least it would require some pretty invasive (D)TLS man-in-the-middle setup in order to know the difference between the two at all. So we started digging.

The first relevant clue is that this customer is using one of our paid-for SFU meeting rooms, meaning the video feed from the individual participants is sent as several different spatial resolution feeds simultaneously (aka “simulcast”). Our alumni fippo is offering some commentary from the sidelines as we are investigating this, and early on suggested simulcast as a triggering condition. Quite correctly, it would turn out.

The twist

The second relevant clue is that the affected connections are transiting via our TURN network as well. Presumably this is because the firewalls in question are quite restrictive in what outbound ports they allow traffic towards. As we are hard at work trying to understand the exact network conditions the customer is operating under, fellow engineer Hans Christian demonstrates that he can replicate the behavior simply by forcing TURN to be used towards our SFUs. There are some other subtleties too, like the order in which the different participants join the room, but once it is possible to reproduce the problem these are easier to map out. Still, no flashes of insights based on these two clues. TURN is designed to pass traffic along indiscriminately, and with the amount of TURN traffic we process (a lot), the outcry would have been much wider if this was not operating as it should.

Narrowing it down

Being able to reproduce, we quite quickly are able to determine that:

- it doesn’t matter what equipment is used by the receiving participant, and

- that the sending participant whose video goes missing needs to run Whereby on Chrome 73 or later.

Our attention turns to the release notes and change log for Chrome 73, but still nothing stands out. Time to look at packet traces.

Our SFU is capable of dumping RTP packets in the clear in development mode to facilitate this kind of debugging. However, in this case we want to inspect the packets on both sides of the TURN server. Even if the SFU is able to provide a log of the packets it sees after stripping DTLS, these can not immediately be compared to the packet traces towards the TURN server. Here, the somewhat obscure Chrome Canary command line flag --disable-webrtc-encryption comes in handy. After adding similar functionality to our SFU code base (again in development mode, of course), we are able to obtain clear text packet traces from both the inbound and outbound leg of Chrome 73’s TURN server session. It is then quickly done to eliminate this as the source of error; the TURN server is transmitting data as faithfully as could be wished for.

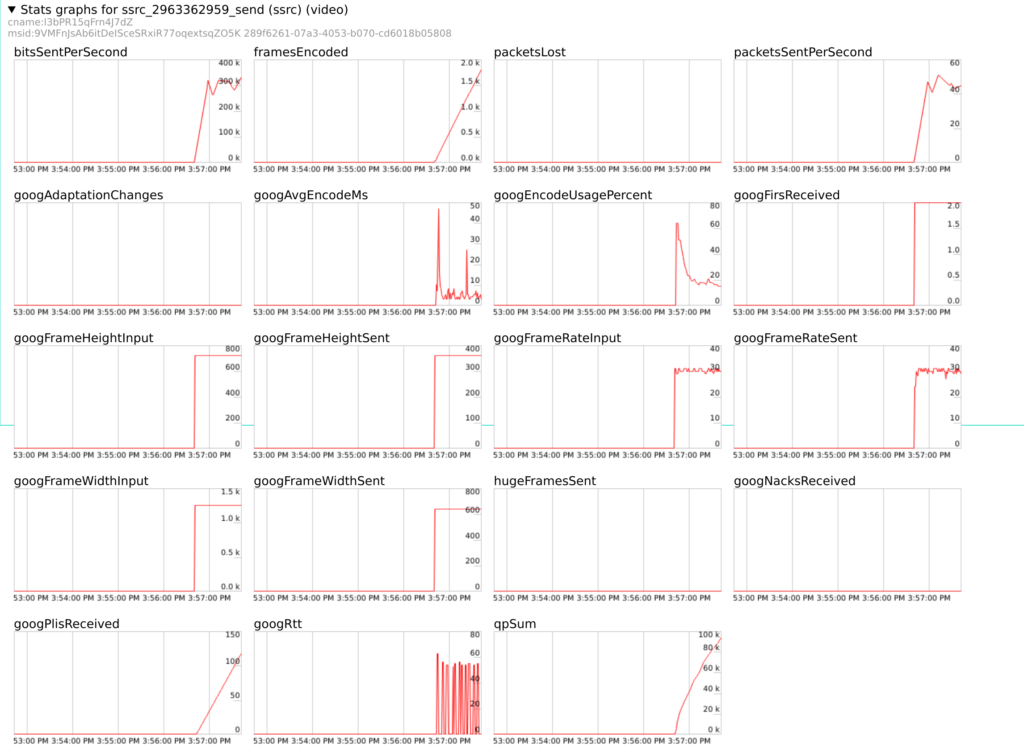

With the clear text packet traces in hand though, it is time to start delving into the actual contents of the RTP stream. It shortly becomes apparent that our SFU is not receiving the keyframes it is expecting from Chrome 73. This is very odd, as we are sending quite a lot of PLIs (“Picture Loss Indicators”) in Chrome’s direction, asking for some:

At this point we feel we have something tangible to report to the Chrome team, and do so. However, the failure still seems pretty tied to our particular setup, so we continue investigations on our end as well.

Debugging Chrome with Bisect

Divide and conquer

Being able to reproduce a customer’s problem in a controlled environment makes all the difference in the world when investigating a tough issue. For one thing, it makes it easier to vary parameters to see exactly what contributes to triggering the problem. Additionally, it also increases the amount of parameters you can vary a lot. The precise version of the browser used to run your application is one thing you can vary in your debug environment that it is hard to get the customer to do for you. If you can try any browser version you want, this enables a powerful technique called “bisecting”.

When troubleshooting software, you often know you have one version of code that exhibits the problem you are trying to avoid and one that does not. If you want to determine which change to the software code base introduced the problem, one way to do that is to just replay all changes that were made to it on its way from the first version to the other, testing the software at each step to see if the problem has manifested yet. While this works, it can be a very tedious and time-consuming process if you only become aware of the problem after the software code base has significantly changed, since the number of steps to retrace can become excessive.

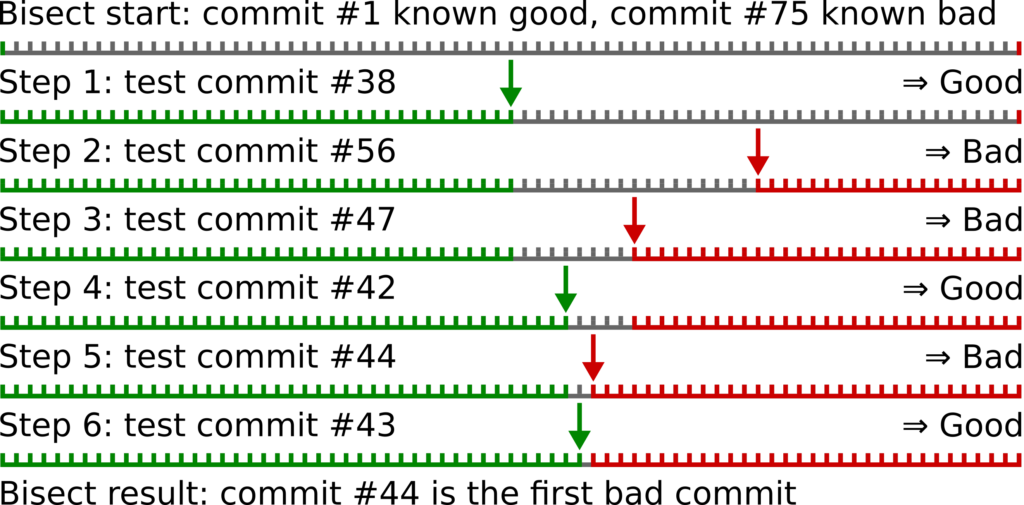

When bisecting, you assume that the problem was introduced at one particular point, and then remains for all subsequent versions. This allows you to optimize this process by iteratively selecting a version midway between your know “good” version and the known “bad” version, testing it to see if it exhibits the problem or not. This midway version then either becomes your new good or bad version, and you start over by selecting a new midway point. Eventually the good and bad versions are close enough together that you can reason about all the changes between them and hopefully spot the change that introduced the problem. (Side note: while the terminology “good”, “bad” and “problem” implies qualitative judgement, this process can be used to pinpoint any change in behavior, harmful or benign. In our case, at this point we didn’t know if we were looking for a problem in Chrome or just a change that triggered problematic behavior in our application.)

Bisection was made popular by git, which introduced a dedicated command called “git bisect” to automate this process. The difference between your good and bad versions in git is represented as the path of commits from one to the other. The midway point is picked as the commit closest to the midway point on this path. The process terminates when your most recently found good version is the parent of your most recently found bad version. It is then up to you to examine the changes introduced by the bad commit to determine what the actual problem is. To get the most out of this process, the commit path between your original good and bad versions should be mostly linear, all the commits on the path should represent runnable versions of the software, and the changes introduced by each commit should be small enough that they can be effectively analyzed once the bisection process terminates. (There are ways to cope if these requirements don’t hold all of the time, though.)

Play it again, Sam

This sounds nice in theory, but how do we apply this to Chrome and our application? Our premise is that under Chrome 72, our application doesn’t exhibit the behavior we are trying to eliminate, while under Chrome 73 it does. If our last known good version had been e.g “Chrome 62”, it could have made sense to bisect over Chrome major versions, but in this case we already know that the issue we are investigating was introduced in major version 73. We want to go one level deeper. We need to break the difference difference between Chrome 72 and Chrome 73 into smaller steps, and then try to apply the bisection approach to that list of steps. Fortunately, as part of the Chromium build infrastructure, Google maintains a pretty nifty change set lookup tool called omahaproxy. We use this to look up Chrome versions “72.0.3626.121” and “73.0.3683.90”, which yields “base branch positions” 612437 and 625896, respectively. So there are over 13000 “base branch” steps (which corresponds to (small chunks of) git commits to the main Chrome git repository) between Chrome versions 72 and 73, which is a lot. Compiling and testing these step by step to see if the problem has manifested yet is obviously untenable. However, since bisecting works by halving the search space for each iteration, we should be able to narrow this down to one base branch step in log2(13459) ≈ 14 steps. Still, this is a significant undertaking, compiling Chrome from scratch takes a lot of time, and the first iterations will yield steps far enough apart that the benefit from iterative compilations will be marginal.

A hidden gem

At this point, we’re treated to a very pleasant surprise. It turns out (again, hat tip to the intrepid fippo) that Google actually provides precompiled binaries for all these base branch steps! They even provide a python script to run bisection using these binaries! There’s a good description of how to obtain and use this on the chrome developer pages. Having installed this, and given the base branch steps we found above, we can now search through the full list of steps very easily. By giving bisect-builds.py the range we want to search, it will present us with different Chrome candidates to test. We’ll tell bisect-builds what arguments we want to run Chrome with, and it will start the candidates automatically for us, one by one. It is then up to us to perform the necessary steps to reproduce our problem, terminate the candidate Chrome and report if the problem was present or not. The next candidate started will be selected based on our report. In practice this process looks like this:

This whole process is surprisingly efficient and on the whole takes around ten minutes.

Once more, with feeling

The bad news is that the identified change is a dependency update, bumping the version of the bundled WebRTC code base. The version bump encompasses 53 different commits to the WebRTC repository, from 74ba99062c to 71b5a7df77 . Looking at the list of commits, we can speculate as to which ones are likely to be involved in our issue (did you notice the entry “2019-01-21 [email protected] Remove simulcast constraints in SimulcastEncoderAdapter” in that list..?). We can also start reading the code diffs to understand how behavior has changed. If we were luckier, the problematic interaction would have been obvious by now, and we could have called it a day.

However, while we are getting nearer to answering “what” has triggered our problem, the “why” is still eluding us. We conclude that we need to instrument the changed code paths to understand better how they interact with our application. Fortunately, the Chrome (or rather, Chromium) source code is publicly available, and Google provides good instructions for building it yourself. Following the steps described in the build instructions, we soon have the Chromium source code and related Google tooling installed on our local machine. At this point we deviate slightly from the official build instructions. Since we want to build a historic version of Chromium rather than the tip of master, we use git checkout 94cbf3f56 to position the tree at the correct revision. We then make sure all dependencies are synced to this Chromium version using gclient sync . From here on out, most of the action is in the subdirectory third_party/webrtc . This is a separate git repository from the main Chromium repo, and after the sync above gclient has positioned us at revision 71b5a7df77 here.

Having identified a likely offending commit, we could now speculatively back this out to see if the problem disappears. However, as long as we have to compile Chromium from scratch anyway, we choose to be thorough and bisect our way through these 53 commits as well. We do this by running a traditional git bisect process over the WebRTC repository change span that was indicated earlier. The fundamentals of this process are similar to the first bisect process we went through, but some of the bits that previously happened behind the curtain are now up to us to perform by hand. At each step, we now have to compile and start our Chromium candidates explicitly:

The first compile of Chromium in a fresh tree takes a significant amount of time (like double-digit number of hours), so we leave this overnight and return to the task the next morning. The subsequent builds take around 5 minutes to prepare at each step, and the rest of the process runs more or less interactively. This is much slower than bisecting with pre-built binaries, but tolerable for our remaining 5-6 steps. Had our steps been further apart, the time for each build would have increased significantly, approaching the time for a full build. This just highlights how efficient that first bisection process was.

At the rainbow’s end

Ultimately, as shown above, this process confirms that https://webrtc-review.googlesource.com/c/src/+/114429 is the change that causes our app to misbehave. This is just 124 lines of added code, and while we are still in the dark as to what the actual problem is, at this point it is feasible to instrument all the changed code flows in Chromium to understand how they interact with our application code.

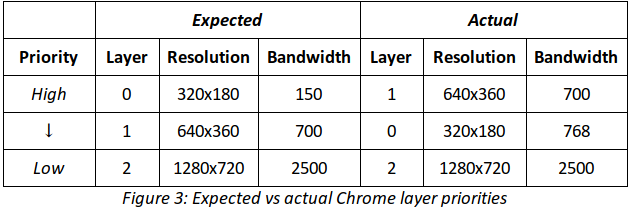

As we do so, it gradually becomes clear what the culprit is. The Chrome change ensures that simulcast layers are always sorted in the right order, as sometimes (apparently) the layers can be given in reverse order (highest bandwidth allocated to the bottom layer, rather than the top layer). This should be a no-op for Whereby – the layers are always ordered from lowest to highest bandwidth anyway. Or so we thought.

Looking at debug printouts reveals that Chrome has ordered our layers in the order [1 (640×360), 0 (320×180), 2 (1280×720)] – so neither ascending or descending order, but some sort of jumbled mess. Digging further, layer 0 was been set with a bandwidth cap of 768kbps, which is higher than the cap given for layer 1 and lower than the one given for layer 2. So, when there’s only bandwidth available for one layer, Chrome will only send video on layer 1, not layer 0 as our SFU expects. At this point we are able to make the connection to the Whereby application code.

As mentioned earlier, we push a significant amount of TURN data. In an effort to control the cost spent on relaying this data, when we determine that ICE has resorted to using a “relay” candidate, we use RTCRtpSender.setParameters to restrict the bandwidth target to, coincidentally, 768kbps. This logic predates our use of simulcast. The unfortunate consequence is that when both SFU, TURN and simulcast are used, we effectively set the target bandwidth for layer 0 to a much higher value than the intended usual 150kbps. As a result, this layer is down-prioritized and left dry. Our SFU would receive a video stream on layer 1, but since it was expecting layer 0 to be the initially active one, it got stuck trying to obtain that first keyframe. This left the user with just audio and no video stream, exactly as reported.

The change to fix this was 7 or 8 lines of code.

Takeaways

So, what should you take away from this story? There are several lessons to pick from:

- Good support people are golden. Take them seriously, listen to them and help them do efficient triage

- Investigate things that “can’t happen” too

- Replicating client conditions allows you to reproduce reported bugs in a controlled environment – make sure you have the field diagnostics and the required product knobs and levers available to do this

- Bisection is a powerful technique to pinpoint problems, both in your own application and in your dependencies

- Even if you can’t fix the bug, being able to report the offending change when requesting upstream assistance is going to increase your odds of getting help

- Good git hygiene (commits as small as possible, every commit compileable/testable, (roughly) linear commit history) will make sure bisection can be used to its fullest potential on your own code

- Google has some amazing tooling that it pays to be aware of

- You can always go one level deeper

- Open source is awesome!

{“author”: “Arne Georg Gisnås Gleditsch“}

Want to keep up on our latest posts? Please click here to subscribe to our mailing list if you have not already. We only email post updates. You can also follow us on twitter at @webrtcHacks for blog updates.

Related Posts

Troubleshooting Unwitting Browser Experiments (Al Brooks)

Troubleshooting Unwitting Browser Experiments (Al Brooks) Suspending Simulcast Streams for Savvy Streamlining (Brian Baldino)

Suspending Simulcast Streams for Savvy Streamlining (Brian Baldino) A playground for Simulcast without an SFU

A playground for Simulcast without an SFU Chrome Screensharing Blues – preparing for getDisplayMedia

Chrome Screensharing Blues – preparing for getDisplayMedia So your VPN is leaking because of Chrome’s WebRTC…

So your VPN is leaking because of Chrome’s WebRTC… How to capture & replay WebRTC video streams with video_replay (Stian Selnes)

How to capture & replay WebRTC video streams with video_replay (Stian Selnes)

Recommend

About Joyk

Aggregate valuable and interesting links.

Joyk means Joy of geeK